A Crawler Script for Bulk-Downloading Meme Images

Posted by 半块西瓜皮

    import re
    import os
    import time
    import requests
    import multiprocessing
    from multiprocessing.pool import ThreadPool

    # Work queues: images to download, list pages to fetch, and a log of seen URLs.
    picqueue = multiprocessing.Queue()
    pagequeue = multiprocessing.Queue()
    logqueue = multiprocessing.Queue()
    picpool = ThreadPool(50)   # image download workers
    pagepool = ThreadPool(5)   # list page workers
    error = []                 # [name, url] entries that could not be saved

    # Queue list pages 1 through 837.
    for x in range(1, 838):
        pagequeue.put(x)


    def getimglist(body):
        """Extract image URLs and names from a list page and queue them."""
        imglist = re.findall(
            r'data-original="//ws\d([^"]+)" data-backup="[^"]+" alt="([^"]+)"',
            body)
        for url, name in imglist:
            if name:
                name = name + url[-4:]      # reuse the URL's extension, e.g. ".jpg"
                url = "http://ws1" + url
                logqueue.put(url)
                picqueue.put((name, url))
        if len(imglist) == 0:
            print(body)                     # nothing matched: dump the page for inspection


    def savefile():
        """Worker: download queued images, skipping files that already exist."""
        http = requests.Session()
        while True:
            name, url = picqueue.get()
            if not os.path.isfile(name):
                req = http.get(url)
                try:
                    with open(name, 'wb') as f:
                        f.write(req.content)
                except Exception:
                    error.append([name, url])


    def getpage():
        """Worker: fetch list pages and hand the HTML to getimglist."""
        http = requests.Session()
        while True:
            pageid = pagequeue.get()
            req = http.get(
                "https://www.doutula.com/photo/list/?page={}".format(pageid))
            getimglist(req.text)
            time.sleep(1)


    for x in range(5):
        pagepool.apply_async(getpage)
    for x in range(50):
        picpool.apply_async(savefile)

    # Print the three queue sizes once a second as a progress indicator.
    while True:
        print(picqueue.qsize(), pagequeue.qsize(), logqueue.qsize())
        time.sleep(1)
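To see what the regular expression in `getimglist` captures, here is a small sketch that runs the same pattern against a made-up `<img>` tag. The HTML snippet, the `example.com` hosts, and the helper name `extract_images` are assumptions for illustration only; the real page markup may differ or may have changed since the post was written.

```python
import re

# Same pattern as in getimglist: group 1 is the rest of the URL after "//wsN",
# group 2 is the alt text, which the script uses as the file name.
IMG_PATTERN = re.compile(
    r'data-original="//ws\d([^"]+)" data-backup="[^"]+" alt="([^"]+)"')


def extract_images(body):
    """Return (filename, url) pairs found in an HTML string (hypothetical helper)."""
    results = []
    for url, name in IMG_PATTERN.findall(body):
        # Mirror the script: append the URL's last four characters as the
        # extension and rebuild the address on the "ws1" host.
        results.append((name + url[-4:], "http://ws1" + url))
    return results


# Hypothetical markup written to match the pattern; not copied from the site.
sample = ('<img data-original="//ws3.example.com/large/abc123.jpg" '
          'data-backup="//img.example.com/abc123.jpg" alt="funny cat">')

print(extract_images(sample))
```

Note that the digit after `ws` is discarded by the pattern, so every download is routed to the `ws1` host regardless of which host the page listed.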

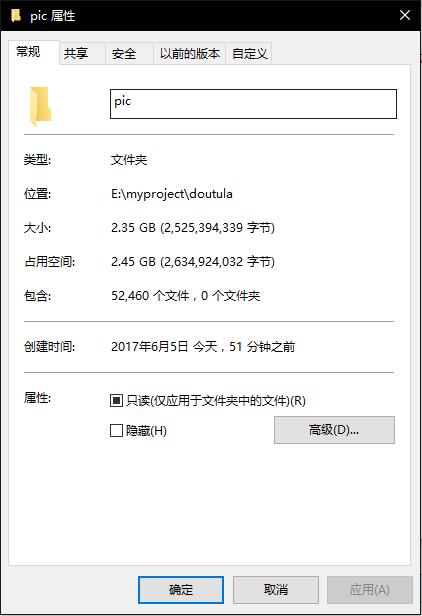

The whole crawl finishes in about 7 minutes.
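The worker loops and the progress loop in the script never exit, so it has to be stopped by hand once the queues have drained. One possible way to let a crawl finish on its own is to hand a fixed list of jobs to `concurrent.futures.ThreadPoolExecutor`. The sketch below is an assumption about how that could look, not the author's method; `fetch` and `crawl` are hypothetical names, and `fetch` does no network I/O so that the example runs offline.

```python
from concurrent.futures import ThreadPoolExecutor


def fetch(page_id):
    """Hypothetical stand-in for downloading one list page (no network I/O)."""
    return "page {}".format(page_id)


def crawl(page_ids, workers=5):
    """Run fetch over page_ids on a thread pool and return results in input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in input order; leaving the with-block
        # waits for the workers and shuts the pool down.
        return list(pool.map(fetch, page_ids))


results = crawl(range(1, 6))
print(len(results), results[0])
```

In a real crawl, `fetch` would wrap the `requests` call from `getpage`, and the program would return as soon as the last page has been processed.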
