OpenStack Newton and Ceph Integration: Deployment Notes


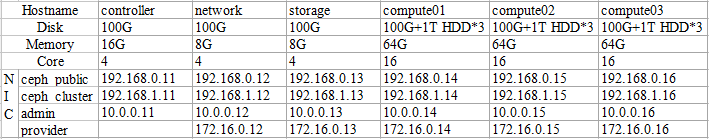

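OpenStack Ocata was officially released in February 2017, so this post records a deployment of the previous release, Newton, combined with Ceph Jewel. The host operating system is CentOS 7.2.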

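Basic version:

192.168.0.0/24 and 192.168.1.0/24 are used by Ceph, as the north-south network (Public_Network) and the east-west network (Cluster_Network) respectively.

10.0.0.0/24 is the OpenStack management network.

172.16.0.0/24 is the provider/external network that carries tenant traffic; OpenStack Neutron attaches it through an OVS bridge.

Merging all of them into a single network works, but it is not recommended in production.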
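The network plan above can be sanity-checked with a few lines of Python. This is a minimal sketch: the subnets come from the text, while the role names (`ceph_public`, `openstack_mgmt`, and so on) and the helper functions are illustrative labels, not anything OpenStack or Ceph defines.

```python
import ipaddress
from itertools import combinations

# Subnets from the plan above; the dictionary keys are illustrative labels.
NETWORKS = {
    "ceph_public": ipaddress.ip_network("192.168.0.0/24"),
    "ceph_cluster": ipaddress.ip_network("192.168.1.0/24"),
    "openstack_mgmt": ipaddress.ip_network("10.0.0.0/24"),
    "provider": ipaddress.ip_network("172.16.0.0/24"),
}


def overlapping_pairs(networks):
    """Return the names of every pair of subnets that share addresses."""
    return [
        (name_a, name_b)
        for (name_a, net_a), (name_b, net_b) in combinations(networks.items(), 2)
        if net_a.overlaps(net_b)
    ]


def role_of(address, networks=NETWORKS):
    """Return the label of the subnet containing the address, or None."""
    ip = ipaddress.ip_address(address)
    for name, net in networks.items():
        if ip in net:
            return name
    return None


if __name__ == "__main__":
    print(overlapping_pairs(NETWORKS))
    print(role_of("10.0.0.11"))
```

Running a check like this before assigning interfaces helps catch a node whose address landed on the wrong subnet.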

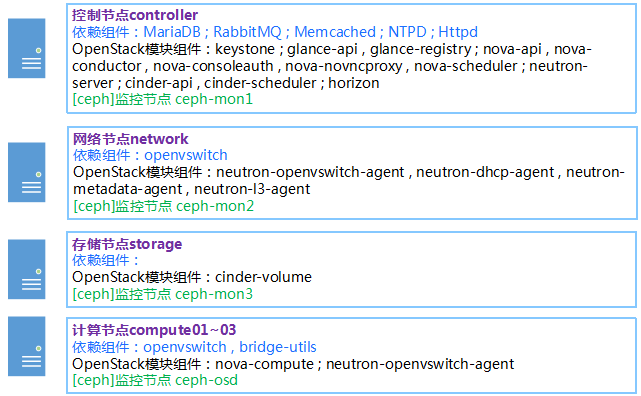

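Deploy the core IaaS service modules: Keystone (identity), Glance (images), Nova (compute), Neutron (networking), Cinder (block storage), and the Horizon dashboard. Use ceph-deploy to deploy the Ceph cluster, which serves as the backend for images, compute, and block storage.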

Ceph configuration sample

[global]

mon_initial_members = controller, network, storage

mon_host = 192.168.0.11,192.168.0.12,192.168.0.13

auth_cluster_required = none

auth_service_required = none

auth_client_required = none

filestore_xattr_use_omap = true

osd_pool_default_size = 2

mon_clock_drift_allowed = 2

mon_clock_drift_warn_backoff = 30

mon_pg_warn_max_per_osd = 1000

public_network = 192.168.0.0/24

cluster_network = 192.168.1.0/24
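As a rough consistency check on the sample above, the following sketch parses a `[global]` section and verifies that every `mon_host` address lies inside `public_network` and that the number of monitor names matches the number of addresses. The function name `check_monitors` is hypothetical, and the sketch assumes monitors are listed as plain IPv4 addresses, as they are here.

```python
import configparser
import ipaddress

# A trimmed copy of the sample; only the options the check reads are kept.
SAMPLE = """
[global]
mon_initial_members = controller, network, storage
mon_host = 192.168.0.11,192.168.0.12,192.168.0.13
public_network = 192.168.0.0/24
cluster_network = 192.168.1.0/24
"""


def check_monitors(conf_text):
    """Return a list of human-readable problems found in the [global] section."""
    parser = configparser.ConfigParser()
    parser.read_string(conf_text)
    section = parser["global"]

    names = [n.strip() for n in section["mon_initial_members"].split(",")]
    hosts = [h.strip() for h in section["mon_host"].split(",")]
    public = ipaddress.ip_network(section["public_network"])

    problems = []
    if len(names) != len(hosts):
        problems.append(f"{len(names)} monitor names but {len(hosts)} addresses")
    for host in hosts:
        if ipaddress.ip_address(host) not in public:
            problems.append(f"{host} is outside public_network {public}")
    return problems


if __name__ == "__main__":
    print(check_monitors(SAMPLE) or "monitor settings look consistent")
```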

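Deploy 3 MONs + [number of disks] OSDs quickly with ceph-deploy, without keyrings, and create three pools: glance, nova, and cinder.

Adjust pg_num and pgp_num as appropriate.

“Formula:

Total PGs = ((Total_number_of_OSD * 100) / max_replication_count) / pool_count

Round the result up to the nearest power of two. For example, with 160 OSDs in total, a replication count of 3, and 3 pools, the formula gives 1777.7. The nearest power of two above that is 2048, so each pool is assigned 2048 PGs.”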
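The formula and the rounding rule can be written out as a short sketch. The function names are made up for illustration; the generated strings use the `ceph osd pool create <pool> <pg_num> <pgp_num>` form and are only printed here, not executed.

```python
def pgs_per_pool(osd_count, replica_count, pool_count, pgs_per_osd=100):
    """Apply the quoted formula, then round up to the next power of two."""
    raw = (osd_count * pgs_per_osd) / replica_count / pool_count
    power = 1
    while power < raw:
        power *= 2
    return power


def pool_create_commands(pools, pg_num):
    """Build one 'ceph osd pool create' command line per pool (pgp_num = pg_num)."""
    return [f"ceph osd pool create {name} {pg_num} {pg_num}" for name in pools]


if __name__ == "__main__":
    pg_num = pgs_per_pool(osd_count=160, replica_count=3, pool_count=3)
    for command in pool_create_commands(["glance", "nova", "cinder"], pg_num):
        print(command)
```

With the numbers from the quoted example (160 OSDs, 3 replicas, 3 pools) the function returns 2048, matching the text.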

OpenStack component configuration samples

- controller

keystone.conf:

[database]

connection = mysql+pymysql://keystone:[email protected]/keystone

glance-api.conf:

[database]

connection = mysql+pymysql://glance:[email protected]/glance

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = glance

password = yourpasswd

[paste_deploy]

flavor = keystone

[DEFAULT]

show_image_direct_url = True

[glance_store]

stores = rbd

default_store = rbd

rbd_store_pool = glance

rbd_ceph_conf = /etc/ceph/ceph.conf

rbd_store_chunk_size = 8

glance-registry.conf:

[database]

connection = mysql+pymysql://glance:[email protected]/glance

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = glance

password = yourpasswd

[paste_deploy]

flavor = keystone
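The `[keystone_authtoken]` block recurs in almost every file in this post, and only `username` changes between services. A small sketch that renders the block from one template makes that explicit; the function names and the idea of generating the section are illustrative assumptions, not part of the original deployment.

```python
import configparser
import io


def authtoken_options(username, password="yourpasswd", controller="controller"):
    """Return the [keystone_authtoken] options used throughout this post."""
    return {
        "auth_uri": f"http://{controller}:5000",
        "auth_url": f"http://{controller}:35357",
        "memcached_servers": f"{controller}:11211",
        "auth_type": "password",
        "project_domain_name": "default",
        "user_domain_name": "default",
        "project_name": "service",
        "username": username,
        "password": password,
    }


def render_authtoken(username):
    """Render the section as INI text for the given service user."""
    parser = configparser.ConfigParser()
    parser["keystone_authtoken"] = authtoken_options(username)
    buffer = io.StringIO()
    parser.write(buffer)
    return buffer.getvalue()


if __name__ == "__main__":
    for service in ("glance", "nova", "neutron", "cinder"):
        print(render_authtoken(service))
```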

nova.conf:

[DEFAULT]

enabled_apis = osapi_compute,metadata

[api_database]

connection = mysql+pymysql://nova:[email protected]/nova_api

[database]

connection = mysql+pymysql://nova:[email protected]/nova

[DEFAULT]

transport_url = rabbit://openstack:[email protected]

[DEFAULT]

auth_strategy = keystone

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = yourpasswd

[DEFAULT]

my_ip = 10.0.0.11

[DEFAULT]

use_neutron = True

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[vnc]

vncserver_listen = 0.0.0.0

vncserver_proxyclient_address = 10.0.0.11

[glance]

api_servers = http://controller:9292

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[neutron]

url = http://controller:9696

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = yourpasswd

service_metadata_proxy = True

metadata_proxy_shared_secret = METADATA_SECRET

[DEFAULT]

metadata_listen=10.0.0.11

metadata_listen_port=8775

[cinder]

os_region_name = RegionOne

neutron.conf:

[database]

connection = mysql+pymysql://neutron:[email protected]/neutron

[DEFAULT]

core_plugin = ml2

service_plugins = router

allow_overlapping_ips = True

[DEFAULT]

transport_url = rabbit://openstack:[email protected]

rpc_response_timeout = 180

[DEFAULT]

auth_strategy = keystone

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = yourpasswd

[DEFAULT]

notify_nova_on_port_status_changes = True

notify_nova_on_port_data_changes = True

[nova]

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = yourpasswd

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

neutron/plugin.ini:

[ml2]

type_drivers = flat,vlan,vxlan

tenant_network_types = vxlan

mechanism_drivers = openvswitch,l2population

extension_drivers = port_security

[ml2_type_flat]

# Set this when the provider network is flat

flat_networks = provider

[ml2_type_geneve]

[ml2_type_gre]

[ml2_type_vlan]

# Set this when the provider network is VLAN

network_vlan_ranges = provider:1:1000

[ml2_type_vxlan]

vni_ranges = 1:1000

[securitygroup]

firewall_driver=neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

enable_security_group=True
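The range options in the ML2 file follow simple colon-separated formats, so they are easy to validate before restarting Neutron. The sketch below checks `vni_ranges` against the 24-bit VXLAN identifier space and `network_vlan_ranges` against VLAN IDs 1 to 4094. The parser functions are hypothetical helpers, and they only handle the `physnet:min:max` form shown in this post.

```python
VLAN_MIN, VLAN_MAX = 1, 4094      # usable 802.1Q VLAN IDs
VNI_MIN, VNI_MAX = 1, 2**24 - 1   # VXLAN network identifiers are 24-bit


def _check(low, high, minimum, maximum, label):
    """Validate one range and return it as a tuple."""
    if not (minimum <= low <= high <= maximum):
        raise ValueError(f"{label} range {low}:{high} is outside {minimum}..{maximum}")
    return (low, high)


def parse_vni_ranges(value):
    """Parse a value such as '1:1000' into a list of (min, max) tuples."""
    ranges = []
    for item in value.split(","):
        low, high = (int(part) for part in item.strip().split(":"))
        ranges.append(_check(low, high, VNI_MIN, VNI_MAX, "VNI"))
    return ranges


def parse_vlan_ranges(value):
    """Parse a value such as 'provider:1:1000' into {physnet: [(min, max)]}."""
    result = {}
    for item in value.split(","):
        physnet, low, high = item.strip().split(":")
        result.setdefault(physnet, []).append(
            _check(int(low), int(high), VLAN_MIN, VLAN_MAX, "VLAN")
        )
    return result


if __name__ == "__main__":
    print(parse_vni_ranges("1:1000"))
    print(parse_vlan_ranges("provider:1:1000"))
```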

cinder.conf:

[DEFAULT]

enable_v1_api = True

transport_url = rabbit://openstack:[email protected]

auth_strategy = keystone

my_ip = 10.0.0.11

[database]

connection = mysql+pymysql://cinder:[email protected]/cinder

[key_manager]

[keystone_authtoken]

auth_uri = http://10.0.0.11:5000

auth_url = http://10.0.0.11:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = yourpasswd

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

- network

neutron.conf:

[DEFAULT]

core_plugin = ml2

service_plugins = router

allow_overlapping_ips = True

transport_url = rabbit://openstack:[email protected]

rpc_response_timeout = 180

auth_strategy = keystone

[agent]

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = yourpasswd

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

neutron/dhcp_agent.ini:

[DEFAULT]

interface_driver = neutron.agent.linux.interface.OVSInterfaceDriver

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = True

neutron/metadata_agent.ini:

[DEFAULT]

nova_metadata_ip = 10.0.0.11

nova_metadata_port = 8775

metadata_proxy_shared_secret = METADATA_SECRET

neutron/l3_agent.ini:

[DEFAULT]

interface_driver = neutron.agent.linux.interface.OVSInterfaceDriver

external_network_bridge=

metadata_port = 9697

openvswitch_agent.ini:

[DEFAULT]

[agent]

[ovs]

[securitygroup]

[ovs]

local_ip=10.0.0.12

bridge_mappings=provider:br-provider

[agent]

tunnel_types=vxlan

l2_population=True

prevent_arp_spoofing=True

[securitygroup]

firewall_driver=neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

enable_security_group=True

shell command:

ovs-vsctl add-br br-provider

ovs-vsctl add-port br-provider [NIC with address 172.16.0.12]
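If the bridge setup needs to be repeatable across nodes, the two commands can be wrapped in a small script. This is a sketch under assumptions: the interface name `eth2` is a placeholder for whichever NIC sits on the 172.16.0.0/24 network, and `--may-exist` is added so that rerunning the script does not fail when the bridge or port is already present. It defaults to a dry run that only prints the commands.

```python
import subprocess


def provider_bridge_commands(bridge, nic):
    """Return the ovs-vsctl invocations that create the bridge and attach the NIC."""
    return [
        ["ovs-vsctl", "--may-exist", "add-br", bridge],
        ["ovs-vsctl", "--may-exist", "add-port", bridge, nic],
    ]


def apply(commands, dry_run=True):
    """Print the commands, or execute them when dry_run is False."""
    for command in commands:
        if dry_run:
            print(" ".join(command))
        else:
            subprocess.run(command, check=True)


if __name__ == "__main__":
    # "eth2" is a placeholder; substitute the NIC on the provider network.
    apply(provider_bridge_commands("br-provider", "eth2"))
```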

- storage

cinder.conf:

[database]

connection = mysql+pymysql://cinder:[email protected]/cinder

[DEFAULT]

transport_url = rabbit://openstack:[email protected]

[DEFAULT]

auth_strategy = keystone

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = yourpasswd

[DEFAULT]

my_ip = 10.0.0.13

[DEFAULT]

enabled_backends = ceph

[ceph]

volume_group = ceph

volume_backend_name = ceph

volume_driver = cinder.volume.drivers.rbd.RBDDriver

rbd_pool = cinder

rbd_ceph_conf = /etc/ceph/ceph.conf

rbd_flatten_volume_from_snapshot = false

rbd_max_clone_depth = 5

rbd_store_chunk_size = 4

rados_connect_timeout = -1

backup_driver = cinder.backup.drivers.ceph

backup_ceph_conf = /etc/ceph/ceph.conf

backup_ceph_chunk_size = 134217728

backup_ceph_pool = cinder

backup_ceph_stripe_unit = 0

backup_ceph_stripe_count = 0

restore_discard_excess_bytes = true

[DEFAULT]

glance_api_servers = http://controller:9292

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

- compute01-03

nova.conf:

[DEFAULT]

enabled_apis = osapi_compute,metadata

[DEFAULT]

transport_url = rabbit://openstack:[email protected]

[DEFAULT]

auth_strategy = keystone

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = yourpasswd

[DEFAULT]

# Use 10.0.0.15 on compute02 and 10.0.0.16 on compute03

my_ip = 10.0.0.14

[DEFAULT]

use_neutron = True

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[vnc]

enabled = True

vncserver_listen = 0.0.0.0

vncserver_proxyclient_address = $my_ip

novncproxy_base_url = http://10.0.0.11:6080/vnc_auto.html

[glance]

api_servers = http://controller:9292

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[neutron]

url = http://controller:9696

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = yourpasswd

metadata_proxy_shared_secret = METADATA_SECRET

[libvirt]

images_type = rbd

images_rbd_pool = nova

images_rbd_ceph_conf = /etc/ceph/ceph.conf

libvirt_live_migration_flag="VIR_MIGRATE_UNDEFINE_SOURCE,VIR_MIGRATE_PEER2PEER,VIR_MIGRATE_LIVE,VIR_MIGRATE_PERSIST_DEST"

inject_password = false

inject_key = false

inject_partition = -2

shell command:

ovs-vsctl add-br br-provider

ovs-vsctl add-port br-provider [NIC with address 172.16.0.14, 172.16.0.15, or 172.16.0.16]
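Each service names its Ceph pool through a different option (`rbd_store_pool` for Glance, `rbd_pool` for Cinder, `images_rbd_pool` for Nova), so a typo in one file is easy to miss. The sketch below cross-checks those options against the set of pools that exist. The helper names are hypothetical; `strict=False` is used because the samples in this post repeat section headers such as `[DEFAULT]`, and interpolation is disabled so values like `$my_ip` are read literally.

```python
import configparser

# File name -> (section, option) that holds the pool name in this post's samples.
POOL_OPTIONS = {
    "glance-api.conf": ("glance_store", "rbd_store_pool"),
    "cinder.conf": ("ceph", "rbd_pool"),
    "nova.conf": ("libvirt", "images_rbd_pool"),
}


def configured_pool(conf_text, section, option):
    """Return the pool named in the given section/option, or None if absent."""
    parser = configparser.ConfigParser(strict=False, interpolation=None)
    parser.read_string(conf_text)
    return parser.get(section, option, fallback=None)


def missing_pools(configs, existing_pools):
    """Map file name -> configured pool for every pool not in existing_pools."""
    missing = {}
    for name, text in configs.items():
        section, option = POOL_OPTIONS[name]
        pool = configured_pool(text, section, option)
        if pool not in existing_pools:
            missing[name] = pool
    return missing


if __name__ == "__main__":
    configs = {
        "glance-api.conf": "[glance_store]\nrbd_store_pool = glance\n",
        "cinder.conf": "[ceph]\nrbd_pool = cinder\n",
        "nova.conf": "[libvirt]\nimages_rbd_pool = nova\n",
    }
    print(missing_pools(configs, {"glance", "nova", "cinder"}))
```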

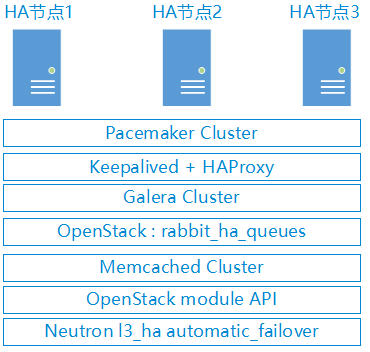

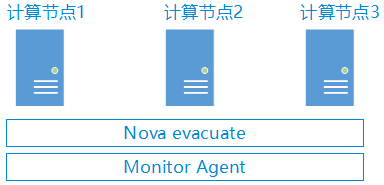

Enhanced version:

An architecture that provides HA capability

Reference:

Networking configuration options
