Machine Learning Notes (Washington University) - Regression Specialization - Week Six


1. Fit locally

If the true model changes a lot across the input space, we want to fit our function locally to different regions of the input space.

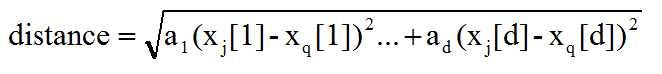

2. Scaled distance

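$$\text{distance}(x_j, x_q) = \sqrt{a_1 (x_j[1] - x_q[1])^2 + \dots + a_d (x_j[d] - x_q[d])^2}$$

We put a weight $a_1, \dots, a_d$ on each input to define its relative importance in the distance.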
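A minimal sketch of a scaled distance, assuming the usual scaled Euclidean form (a square root of weighted squared differences). The function name `scaled_distance` and the house features are hypothetical and only illustrate how per-input weights change which inputs dominate.

```python
import math

def scaled_distance(x_j, x_q, weights):
    """Scaled Euclidean distance: each squared difference is multiplied
    by a per-input weight before summing (assumed form, see lead-in)."""
    if not (len(x_j) == len(x_q) == len(weights)):
        raise ValueError("all inputs must have the same length")
    return math.sqrt(sum(a * (u - v) ** 2 for a, u, v in zip(weights, x_j, x_q)))

# Hypothetical features: [square feet, number of bedrooms]
house = [2000.0, 3.0]
query = [2100.0, 4.0]

# With equal weights the square-feet difference dominates the distance.
plain = scaled_distance(house, query, [1.0, 1.0])

# Down-weighting square feet lets the bedroom count matter as well.
scaled = scaled_distance(house, query, [1e-4, 1.0])
```

Here `plain` is driven almost entirely by the 100 square-foot gap, while `scaled` treats the two inputs as roughly equally important.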

3. KNN

KNN is very sensitive to regions with little data and to noise in the data.

With an infinite amount of noiseless data, the 1-NN fit has no bias and no variance.

Boundary effect: near the boundary, the predictions tend to average over the same set of data points.

Discontinuities: the predicted values jump where the set of nearest neighbors changes.

The more dimensions d you have, the more points N you need to cover the space.

Procedure:

1. Find the k closest x(i) in the dataset.

2. Predict the value as the average of the target values of those k samples.

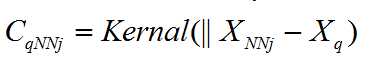
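The two-step procedure can be sketched in plain Python as follows. This is an illustrative sketch, assuming unscaled Euclidean distance; the names `euclidean` and `knn_predict` and the toy data are hypothetical.

```python
import math

def euclidean(a, b):
    """Plain Euclidean distance between two feature vectors."""
    return math.sqrt(sum((u - v) ** 2 for u, v in zip(a, b)))

def knn_predict(X, y, x_query, k):
    """Predict by averaging the targets of the k nearest training points."""
    if not 1 <= k <= len(X):
        raise ValueError("k must be between 1 and the number of training points")
    # Step 1: find the k training points closest to the query.
    order = sorted(range(len(X)), key=lambda i: euclidean(X[i], x_query))
    nearest = order[:k]
    # Step 2: average their target values.
    return sum(y[i] for i in nearest) / k

# Toy one-dimensional data set.
X = [[1.0], [2.0], [3.0], [10.0]]
y = [1.0, 2.0, 3.0, 10.0]

pred = knn_predict(X, y, [2.2], k=2)  # neighbors are x=2 and x=3, so 2.5
```

Sorting all points costs O(N log N) per query, which is fine for a sketch; larger data sets would typically use a spatial index instead.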

Weighted KNN:

Weight the more similar points in the list of nearest neighbors more heavily than the less similar ones.

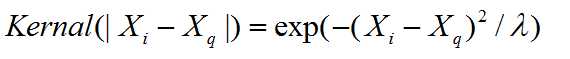
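A minimal sketch of weighted KNN, assuming inverse-distance weights (one common choice, not necessarily the one shown in the figure). The name `weighted_knn_predict` and the handling of exact matches are assumptions made for this illustration.

```python
import math

def euclidean(a, b):
    """Plain Euclidean distance between two feature vectors."""
    return math.sqrt(sum((u - v) ** 2 for u, v in zip(a, b)))

def weighted_knn_predict(X, y, x_query, k):
    """Weighted average of the k nearest targets, weights = 1 / distance."""
    if not 1 <= k <= len(X):
        raise ValueError("k must be between 1 and the number of training points")
    dists = [euclidean(x, x_query) for x in X]
    nearest = sorted(range(len(X)), key=lambda i: dists[i])[:k]
    # Assumed convention: if the query coincides with training points,
    # return the average of their targets to avoid dividing by zero.
    exact = [y[i] for i in nearest if dists[i] == 0.0]
    if exact:
        return sum(exact) / len(exact)
    weights = [1.0 / dists[i] for i in nearest]
    return sum(w * y[i] for w, i in zip(weights, nearest)) / sum(weights)

X = [[0.0], [4.0]]
y = [0.0, 8.0]

# The query sits at distance 1 from x=0 and distance 3 from x=4,
# so the closer point gets three times the weight.
pred = weighted_knn_predict(X, y, [1.0], k=2)
```

With plain averaging the prediction for this query would be 4.0; the inverse-distance weights pull it toward the closer point's target.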

4. Kernel

The kernel defines how the weights decay as a function of the distance between a given point and the query point.

When the kernel has bounded support, only a subset of the data is needed to compute each local fit.

We can use a validation set or cross-validation to choose the bandwidth lambda.
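The sketch below illustrates choosing lambda with a validation set. It assumes a weighted-average kernel regression and uses the Epanechnikov kernel as one example of a kernel with bounded support; both choices, all function names, and the toy data are assumptions rather than something taken from these notes.

```python
def epanechnikov(u):
    """Bounded-support kernel: zero whenever |u| > 1."""
    return 0.75 * (1.0 - u * u) if abs(u) <= 1.0 else 0.0

def kernel_predict(xs, ys, x_query, lam):
    """Kernel-weighted average of the targets; lam is the bandwidth."""
    weights = [epanechnikov((x - x_query) / lam) for x in xs]
    total = sum(weights)
    if total == 0.0:
        return None  # no training point falls inside the kernel's support
    return sum(w * y for w, y in zip(weights, ys)) / total

def validation_error(train, valid, lam):
    """Mean squared error on the validation set for a given bandwidth."""
    xs, ys = zip(*train)
    err = 0.0
    for x, y in valid:
        pred = kernel_predict(xs, ys, x, lam)
        if pred is None:
            return float("inf")  # bandwidth too small to make a prediction
        err += (y - pred) ** 2
    return err / len(valid)

def choose_bandwidth(train, valid, candidates):
    """Pick the candidate bandwidth with the lowest validation error."""
    return min(candidates, key=lambda lam: validation_error(train, valid, lam))

# Toy data: y = x^2 on a grid, validation points halfway between grid points.
train = [(i * 0.5, (i * 0.5) ** 2) for i in range(9)]
valid = [(i * 0.5 + 0.25, (i * 0.5 + 0.25) ** 2) for i in range(8)]

best = choose_bandwidth(train, valid, [0.1, 0.6, 3.0])
```

On this toy data the smallest bandwidth leaves some validation points without any neighbor in range, and the largest one oversmooths, so the middle candidate is selected.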

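Gaussian kernel:

$$\text{Kernel}_\lambda(|x_i - x_q|) = \exp\left(-\frac{(x_i - x_q)^2}{\lambda}\right)$$

The weights never go to exactly zero for the Gaussian kernel, so every data point contributes to every local fit.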
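A minimal sketch of Gaussian-kernel regression on one-dimensional inputs, assuming the kernel has the form exp(-distance^2 / lambda). The exact parameterization, the function names, and the toy data are assumptions for illustration.

```python
import math

def gaussian_kernel(distance, lam):
    """Gaussian weight; strictly positive in exact arithmetic for any distance."""
    return math.exp(-(distance ** 2) / lam)

def gaussian_kernel_predict(xs, ys, x_query, lam):
    """Weighted average over ALL training points, weighted by the kernel."""
    weights = [gaussian_kernel(abs(x - x_query), lam) for x in xs]
    return sum(w * y for w, y in zip(weights, ys)) / sum(weights)

xs = [0.0, 1.0, 2.0, 3.0]
ys = [0.0, 1.0, 4.0, 9.0]

# A point three units away still gets a small positive weight.
far_weight = gaussian_kernel(3.0, 1.0)

# Every training point contributes to this prediction.
pred = gaussian_kernel_predict(xs, ys, 1.0, 1.0)
```

Because no weight is exactly zero, each prediction touches the whole data set, in contrast to a bounded-support kernel. In floating point, weights for extremely distant points can still underflow to 0.0.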
