Installing and Configuring FastDFS



=============================================================================

Overview:

=============================================================================

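Introduction to FastDFS

1. Introduction

★Description

An open-source, lightweight distributed file system;

Written in C;

FastDFS is an open-source distributed file system for managing files. Its features include file storage, file synchronization, and file access (upload and download). It addresses the problems of large-capacity storage and load balancing, and it is particularly well suited to online services built around files, such as photo-album and video websites.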

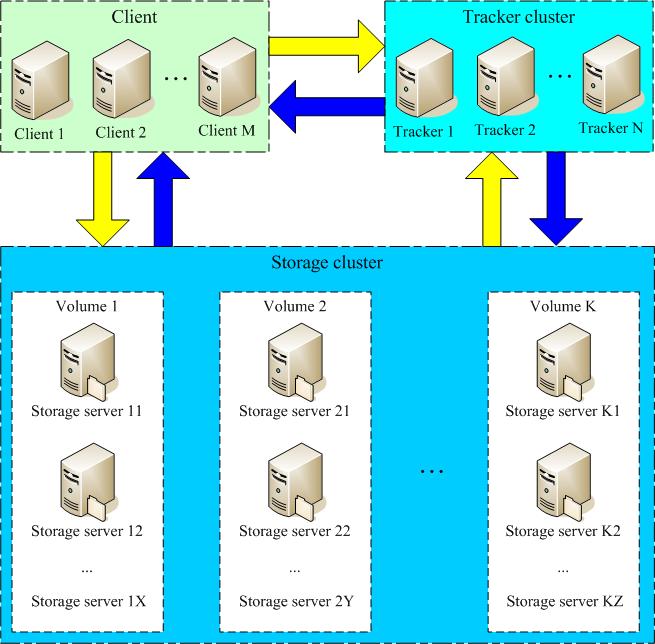

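2. FastDFS components

★Three roles:

tracker, storage server, client

☉tracker server:

The tracker server handles scheduling and balances the load of access requests;

it keeps the status of all storage groups and storage servers in memory;

☉storage server:

The storage server stores files (data) and file attributes (metadata);

Storage nodes hold the files and implement all file-management functions: storage, synchronization, and the access interfaces. FastDFS also manages each file's metadata. A file's metadata is its set of attributes, expressed as key-value pairs such as width=1024, where the key is width and the value is 1024. The metadata is a list of attributes and can contain multiple key-value pairs.

☉client:

The client initiates business requests and talks to the tracker and storage servers over TCP through a dedicated API;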
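The key-value metadata model above can be illustrated with a short bash sketch that packs several pairs into one byte string. This is not the FastDFS client API. It assumes that the client protocol separates records with the byte `\x01` and a key from its value with `\x02`; check this against the FastDFS sources for your version. The function name `pack_fdfs_meta` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: pack "key=value" arguments into one metadata string.
# Assumption: records are separated by \x01 and key/value by \x02.
pack_fdfs_meta() {
  local out="" pair key value
  for pair in "$@"; do
    key=${pair%%=*}                 # text before the first '='
    value=${pair#*=}                # text after the first '=' (may contain '=')
    if [ -n "$out" ]; then
      out+=$'\x01'                  # record separator between pairs
    fi
    out+="${key}"$'\x02'"${value}"  # field separator between key and value
  done
  printf '%s' "$out"
}

# Usage: render the separators as ';' and ':' so the result is printable.
pack_fdfs_meta width=1024 height=768 | tr '\001\002' ';:'; echo
# prints: width:1024;height:768
```

To attach more attributes to a file, such as width and height, pass more pairs.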


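3. How FastDFS works:

★Mechanism

Both the tracker tier and the storage tier can consist of one or more servers. Servers in either tier can be added or taken offline at any time without affecting the online service. All servers in the tracker tier are peers, so trackers can be added or removed at any time according to load.

To support large capacities, storage nodes (servers) are organized into volumes, also called groups. The storage system consists of one or more volumes. Files in different volumes are independent of each other, and the file capacity of the whole storage system is the sum of the capacities of all volumes. A volume can consist of one or more storage servers, all holding the same files, so the servers in a volume provide both redundant backup and load balancing.

When a server is added to a volume, the system synchronizes the existing files to it automatically. Once synchronization completes, the system brings the new server online automatically.

When storage space is low or about to run out, you can add volumes dynamically. Add one or more servers and configure them as a new volume to expand the capacity of the storage system.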
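Every server in a group holds the same files. A group can therefore use only as much space as its smallest member, and the cluster's capacity is the sum over all groups. The fdfs_monitor output later in this article reflects this: group1's disk free space (39952 MB) equals the smaller of its two servers' free storage (40521 MB and 39952 MB). Below is a minimal bash/awk sketch of that arithmetic. The input format (one `group server free_mb` line per server) and the function name are hypothetical, and the group2 line in the usage example is made-up data.

```bash
#!/usr/bin/env bash
# Hypothetical helper: estimate usable free space (MB) from lines of
# "group server free_mb". Each group is limited by its smallest member;
# the cluster total is the sum over groups.
cluster_free_mb() {
  awk '
    NF == 3 {
      v = $3 + 0                                      # force numeric comparison
      if (!($1 in min) || v < min[$1]) min[$1] = v    # smallest member per group
    }
    END {
      total = 0
      for (g in min) total += min[g]
      print total
    }'
}

# Usage: the two group1 servers from this article plus a made-up group2.
printf '%s\n' \
  "group1 192.168.1.113 40521" \
  "group1 192.168.1.114 39952" \
  "group2 10.0.0.1 50000" | cluster_free_mb
# prints: 89952
```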

☉A file identifier in FastDFS has two parts:

the volume (group) name and the file name; both are required.

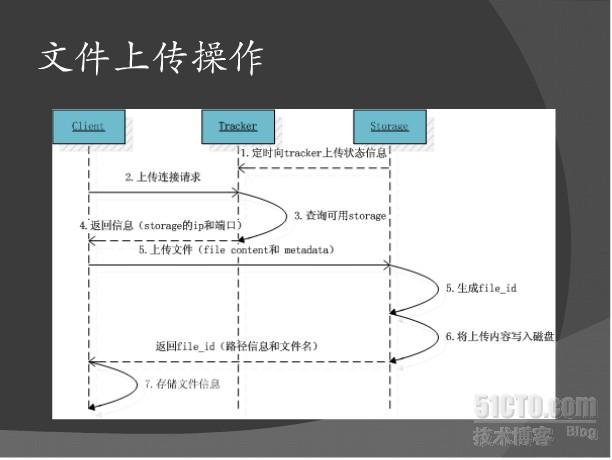

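☉Upload interaction:

1. The client asks the tracker which storage server to upload to; no extra parameters are needed;

2. the tracker returns an available storage server;

3. the client communicates directly with that storage server to complete the upload.

☉Download interaction:

1. The client asks the tracker which storage server to download from, passing the file identifier (volume name and file name);

2. the tracker returns an available storage server;

3. the client communicates directly with that storage server to complete the download.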

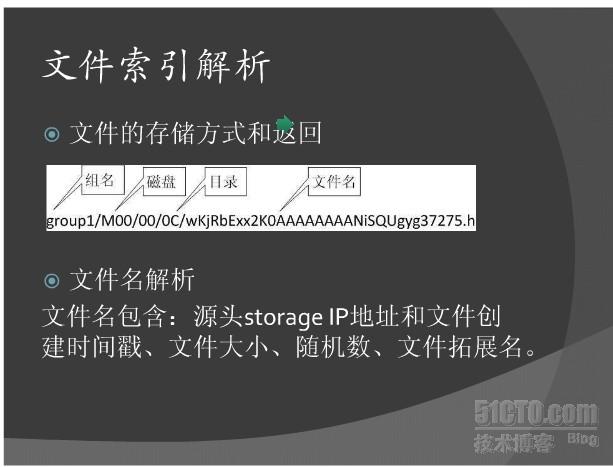

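4. fid (the file ID and how files are stored):

★group_name/M##/&&/&&/file_name

☉group_name:

the name of the storage group; after an upload completes, the client must save it itself;

☉M##:

a virtual path configured on the server, corresponding to the disk option store_path# (M00 corresponds to store_path0, M01 to store_path1, and so on);

☉Two levels of directories, each named with two hexadecimal digits (the two && parts, for example 00/00):

☉File name:

differs from the original file name; the storage server generates it from specific information;

the file name encodes the source storage server's IP address, the file creation timestamp, the file size, a random number, the file extension, and so on;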
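The file ID layout above can be split mechanically, as the bash sketch below illustrates. The function name `parse_fdfs_fid` is hypothetical. The sketch assumes that the `M##` suffix and the two directory levels are two-digit hexadecimal numbers, so `M00` maps to store path index 0. The sample ID is the one returned by the upload test later in this article.

```bash
#!/usr/bin/env bash
# Hypothetical helper: split a FastDFS file ID of the assumed shape
# group_name/M##/XX/YY/file_name into its parts.
parse_fdfs_fid() {
  local fid=$1 group mpath d1 d2 name
  IFS=/ read -r group mpath d1 d2 name <<< "$fid"
  # Reject anything that does not look like group/Mhh/hh/hh/name.
  if [[ -z $name || $mpath != M[0-9A-Fa-f][0-9A-Fa-f] ||
        $d1 != [0-9A-Fa-f][0-9A-Fa-f] || $d2 != [0-9A-Fa-f][0-9A-Fa-f] ]]; then
    echo "not a file ID: $fid" >&2
    return 1
  fi
  # M00 -> store_path0, M01 -> store_path1, ... (suffix assumed hexadecimal)
  local index=$((16#${mpath:1}))
  printf 'group=%s store_path_index=%d subdirs=%s/%s name=%s\n' \
    "$group" "$index" "$d1" "$d2" "$name"
}

parse_fdfs_fid group1/M00/00/00/wKgBcVjT7QSAYAiFAAACU3UXaYE5252654
# prints: group=group1 store_path_index=0 subdirs=00/00 name=wKgBcVjT7QSAYAiFAAACU3UXaYE5252654
```

On the storage server, such a file would be expected under the matching store path in a `data/00/00/` subdirectory. Confirm the exact layout on your own nodes.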


Installing and configuring FastDFS

1. Installation

★Official site

★Installation:

☉Use git clone to clone the required source code locally:

```bash
yum install git -y

git clone https://github.com/happyfish100/fastdfs

# libfastcommon: the common library that FastDFS depends on
git clone https://github.com/happyfish100/libfastcommon.git
```

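Demo: build RPM packages from the downloaded source so that later installations are easier. The process is as follows:

1. First determine the version numbers, then pack each source tree into a .tar.gz archive: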

```
# Source trees cloned with git
[root@node1 FastDFS]# ls
fastdfs  libfastcommon
[root@node1 FastDFS]# ls fastdfs
client  common  conf  COPYING-3_0.txt  fastdfs.spec  HISTORY  init.d  INSTALL  make.sh  php_client  README.md  restart.sh  stop.sh  storage  test  tracker
[root@node1 FastDFS]# ls libfastcommon
doc  HISTORY  INSTALL  libfastcommon.spec  make.sh  php-fastcommon  README  src

# Get the version numbers, then rename each tree and pack it as .tar.gz
[root@node1 FastDFS]# mv libfastcommon libfastcommon-1.0.36
[root@node1 FastDFS]# tar zcf libfastcommon-1.0.36.tar.gz libfastcommon-1.0.36/*
[root@node1 FastDFS]# mv fastdfs fastdfs-5.0.10
[root@node1 FastDFS]# tar zcf fastdfs-5.0.10.tar.gz fastdfs-5.0.10/*
[root@node1 FastDFS]# ls
fastdfs-5.0.10  fastdfs-5.0.10.tar.gz  libfastcommon-1.0.36  libfastcommon-1.0.36.tar.gz
```

2. Under /root, create the rpmbuild/SOURCES and rpmbuild/SPECS directories. Put the source archives in SOURCES and each source tree's .spec file in SPECS:

```
[root@node1 ~]# mkdir rpmbuild/{SOURCES,SPECS} -pv
mkdir: created directory ‘rpmbuild’
mkdir: created directory ‘rpmbuild/SOURCES’
mkdir: created directory ‘rpmbuild/SPECS’
[root@node1 ~]# cp /FastDFS/libfastcommon-1.0.36.tar.gz /root/rpmbuild/SOURCES/
[root@node1 ~]# cp /FastDFS/libfastcommon-1.0.36/libfastcommon.spec /root/rpmbuild/SPECS/
[root@node1 ~]# cp /FastDFS/fastdfs-5.0.10.tar.gz /root/rpmbuild/SOURCES/
[root@node1 ~]# cp /FastDFS/fastdfs-5.0.10/fastdfs.spec /root/rpmbuild/SPECS/
```

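3. Build with rpmbuild -ba, which produces both binary (RPMS) and source (SRPMS) packages. Build and install the libfastcommon RPMs first, because fastdfs depends on them: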

```
[root@node1 SPECS]# pwd
/root/rpmbuild/SPECS
[root@node1 SPECS]# rpmbuild -ba libfastcommon.spec
[root@node1 SPECS]# cd ..
[root@node1 rpmbuild]# ls
BUILD  BUILDROOT  RPMS  SOURCES  SPECS  SRPMS
# The resulting RPM packages:
[root@node1 rpmbuild]# ll RPMS/x86_64/
total 420
-rw-r--r-- 1 root root  99236 Mar 23 21:40 libfastcommon-1.0.36-1.el7.centos.x86_64.rpm
-rw-r--r-- 1 root root 290120 Mar 23 21:40 libfastcommon-debuginfo-1.0.36-1.el7.centos.x86_64.rpm
-rw-r--r-- 1 root root  34424 Mar 23 21:40 libfastcommon-devel-1.0.36-1.el7.centos.x86_64.rpm
[root@node1 rpmbuild]# ll SRPMS/
total 428
-rw-r--r-- 1 root root 437253 Mar 23 21:40 libfastcommon-1.0.36-1.el7.centos.src.rpm
# Install libfastcommon: the base package first, then -devel
[root@node1 rpmbuild]# yum install RPMS/x86_64/libfastcommon-1.0.36-1.el7.centos.x86_64.rpm
[root@node1 rpmbuild]# yum install RPMS/x86_64/libfastcommon-devel-1.0.36-1.el7.centos.x86_64.rpm
#=====================================================================================
# Build the fastdfs RPMs the same way
[root@node1 rpmbuild]# cd SPECS
[root@node1 SPECS]# rpmbuild -ba fastdfs.spec
[root@node1 SPECS]# cd ..
# The resulting RPM packages:
[root@node1 rpmbuild]# ll RPMS/x86_64/
total 1476
-rw-r--r-- 1 root root   1888 Mar 23 21:52 fastdfs-5.0.10-1.el7.centos.x86_64.rpm
-rw-r--r-- 1 root root 716396 Mar 23 21:52 fastdfs-debuginfo-5.0.10-1.el7.centos.x86_64.rpm
-rw-r--r-- 1 root root 169296 Mar 23 21:52 fastdfs-server-5.0.10-1.el7.centos.x86_64.rpm
-rw-r--r-- 1 root root 129872 Mar 23 21:52 fastdfs-tool-5.0.10-1.el7.centos.x86_64.rpm
-rw-r--r-- 1 root root  99236 Mar 23 21:40 libfastcommon-1.0.36-1.el7.centos.x86_64.rpm
-rw-r--r-- 1 root root 290120 Mar 23 21:40 libfastcommon-debuginfo-1.0.36-1.el7.centos.x86_64.rpm
-rw-r--r-- 1 root root  34424 Mar 23 21:40 libfastcommon-devel-1.0.36-1.el7.centos.x86_64.rpm
-rw-r--r-- 1 root root  36080 Mar 23 21:52 libfdfsclient-5.0.10-1.el7.centos.x86_64.rpm
-rw-r--r-- 1 root root  18216 Mar 23 21:52 libfdfsclient-devel-5.0.10-1.el7.centos.x86_64.rpm
# Then install the fastdfs-related RPMs
```

4. The complete set of FastDFS-related RPMs:

```
[root@node1 FastDFS]# ll rpm/
total 492
# Packages that fastdfs depends on
-rw-r--r-- 1 root root  99236 Mar 23 21:40 libfastcommon-1.0.36-1.el7.centos.x86_64.rpm
-rw-r--r-- 1 root root  34424 Mar 23 21:40 libfastcommon-devel-1.0.36-1.el7.centos.x86_64.rpm
# FastDFS packages
-rw-r--r-- 1 root root   1888 Mar 23 21:52 fastdfs-5.0.10-1.el7.centos.x86_64.rpm
-rw-r--r-- 1 root root 169296 Mar 23 21:52 fastdfs-server-5.0.10-1.el7.centos.x86_64.rpm
-rw-r--r-- 1 root root 129872 Mar 23 21:52 fastdfs-tool-5.0.10-1.el7.centos.x86_64.rpm
# Client packages
-rw-r--r-- 1 root root  36080 Mar 23 21:52 libfdfsclient-5.0.10-1.el7.centos.x86_64.rpm
-rw-r--r-- 1 root root  18216 Mar 23 21:52 libfdfsclient-devel-5.0.10-1.el7.centos.x86_64.rpm
```
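Steps 1 to 3 can be collected into one bash function, sketched below. The sketch makes several assumptions:

- the tarballs and unpacked trees from step 1 are under `/FastDFS`;
- the versions are the ones shown above (libfastcommon 1.0.36, fastdfs 5.0.10, release `1.el7.centos`);
- the build uses rpmbuild's default `~/rpmbuild` tree.

The names `run` and `build_fastdfs_rpms` are hypothetical. Setting `DRY_RUN=1` only prints the commands, which lets you review them before running anything as root.

```bash
#!/usr/bin/env bash
# Print commands instead of running them when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Hypothetical wrapper around steps 2-3: copy sources and specs into
# ~/rpmbuild, build and install libfastcommon, then build fastdfs.
build_fastdfs_rpms() {
  local src=${1:-/FastDFS} top=$HOME/rpmbuild
  local common=libfastcommon-1.0.36 dfs=fastdfs-5.0.10
  local rel=1.el7.centos.x86_64          # release/arch suffix seen in the listings above
  run mkdir -p "$top/SOURCES" "$top/SPECS" || return
  run cp "$src/$common.tar.gz" "$src/$dfs.tar.gz" "$top/SOURCES/" || return
  run cp "$src/$common/libfastcommon.spec" "$src/$dfs/fastdfs.spec" "$top/SPECS/" || return
  # -ba builds both binary (RPMS/) and source (SRPMS/) packages.
  run rpmbuild -ba "$top/SPECS/libfastcommon.spec" || return
  # fastdfs depends on libfastcommon, so install it before building fastdfs.
  run yum install -y "$top/RPMS/x86_64/$common-$rel.rpm" \
    "$top/RPMS/x86_64/libfastcommon-devel-1.0.36-$rel.rpm" || return
  run rpmbuild -ba "$top/SPECS/fastdfs.spec"
}
```

For example, `DRY_RUN=1 build_fastdfs_rpms /FastDFS` lists the six commands. After a real build, copy the resulting packages to each node and install them there as in step 4.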

2. Program environment and configuration files

```
[root@node1 ~]# rpm -ql fastdfs-server
/etc/fdfs/storage.conf.sample        # sample storage configuration
/etc/fdfs/storage_ids.conf.sample
/etc/fdfs/tracker.conf.sample        # sample tracker configuration
/etc/init.d/fdfs_storaged            # service scripts
/etc/init.d/fdfs_trackerd
/usr/bin/fdfs_storaged               # storage daemon binary
/usr/bin/fdfs_trackerd               # tracker daemon binary
/usr/bin/restart.sh                  # scripts to restart and stop the services
/usr/bin/stop.sh
[root@node1 ~]# rpm -ql fastdfs-tool
/usr/bin/fdfs_append_file
/usr/bin/fdfs_appender_test
/usr/bin/fdfs_appender_test1
/usr/bin/fdfs_crc32
/usr/bin/fdfs_delete_file
/usr/bin/fdfs_download_file
/usr/bin/fdfs_file_info
/usr/bin/fdfs_monitor
/usr/bin/fdfs_test
/usr/bin/fdfs_test1
/usr/bin/fdfs_upload_appender
[root@node1 ~]# rpm -ql libfdfsclient
/etc/fdfs/client.conf.sample
/usr/lib/libfdfsclient.so
/usr/lib64/libfdfsclient.so
```

Configuring and starting the tracker (node1: 192.168.1.112)

1. Edit the tracker configuration file as follows:

```
[root@node1 ~]# cd /etc/fdfs/
[root@node1 fdfs]# ls
client.conf.sample  storage.conf.sample  storage_ids.conf.sample  tracker.conf.sample
[root@node1 fdfs]# cp tracker.conf.sample tracker.conf
[root@node1 fdfs]# vim tracker.conf
```

```ini
# is this config file disabled
# false for enabled
# true for disabled
disabled=false

# bind an address of this host
# empty for bind all addresses of this host
bind_addr=

# the tracker server port
port=22122

# connect timeout in seconds
# default value is 30s
connect_timeout=30

# network timeout in seconds
# default value is 30s
network_timeout=60

# the base path to store data and log files
# (changed: set this to the location you need)
base_path=/data/fdfs/tracker

# max concurrent connections this server supported
max_connections=256

# accept thread count
# default value is 1
# since V4.07
accept_threads=1

# work thread count, should <= max_connections
# default value is 4
# since V2.00
work_threads=4

# min buff size
# default value 8KB
min_buff_size = 8KB

# max buff size
# default value 128KB
max_buff_size = 128KB

# the method of selecting group to upload files
# 0: round robin
# 1: specify group
# 2: load balance, select the max free space group to upload file
store_lookup=2

# which group to upload file
# when store_lookup set to 1, must set store_group to the group name
store_group=group2

# which storage server to upload file
# 0: round robin (default)
# 1: the first server order by ip address
# 2: the first server order by priority (the minimal)
store_server=0

# which path(means disk or mount point) of the storage server to upload file
# 0: round robin
# 2: load balance, select the max free space path to upload file
store_path=0

# which storage server to download file
# 0: round robin (default)
# 1: the source storage server which the current file uploaded to
download_server=0

# reserved storage space for system or other applications.
# if the free(available) space of any stoarge server in
# a group <= reserved_storage_space,
# no file can be uploaded to this group.
# bytes unit can be one of follows:
### G or g for gigabyte(GB)
### M or m for megabyte(MB)
### K or k for kilobyte(KB)
### no unit for byte(B)
### XX.XX% as ratio such as reserved_storage_space = 10%
reserved_storage_space = 10%

#standard log level as syslog, case insensitive, value list:
### emerg for emergency
### alert
### crit for critical
### error
### warn for warning
### notice
### info
### debug
log_level=info

#unix group name to run this program,
#not set (empty) means run by the group of current user
run_by_group=

#unix username to run this program,
#not set (empty) means run by current user
run_by_user=

# allow_hosts can ocur more than once, host can be hostname or ip address,
# "*" (only one asterisk) means match all ip addresses
# we can use CIDR ips like 192.168.5.64/26
# and also use range like these: 10.0.1.[0-254] and host[01-08,20-25].domain.com
# for example:
# allow_hosts=10.0.1.[1-15,20]
# allow_hosts=host[01-08,20-25].domain.com
# allow_hosts=192.168.5.64/26
allow_hosts=*

# sync log buff to disk every interval seconds
# default value is 10 seconds
sync_log_buff_interval = 10

# check storage server alive interval seconds
check_active_interval = 120

# thread stack size, should >= 64KB
# default value is 64KB
thread_stack_size = 64KB

# auto adjust when the ip address of the storage server changed
# default value is true
storage_ip_changed_auto_adjust = true

# storage sync file max delay seconds
# default value is 86400 seconds (one day)
# since V2.00
storage_sync_file_max_delay = 86400

# the max time of storage sync a file
# default value is 300 seconds
# since V2.00
storage_sync_file_max_time = 300

# if use a trunk file to store several small files
# default value is false
# since V3.00
use_trunk_file = false

# the min slot size, should <= 4KB
# default value is 256 bytes
# since V3.00
slot_min_size = 256

# the max slot size, should > slot_min_size
# store the upload file to trunk file when it's size <= this value
# default value is 16MB
# since V3.00
slot_max_size = 16MB

# the trunk file size, should >= 4MB
# default value is 64MB
# since V3.00
trunk_file_size = 64MB

# if create trunk file advancely
# default value is false
# since V3.06
trunk_create_file_advance = false

# the time base to create trunk file
# the time format: HH:MM
# default value is 02:00
# since V3.06
trunk_create_file_time_base = 02:00

# the interval of create trunk file, unit: second
# default value is 38400 (one day)
# since V3.06
trunk_create_file_interval = 86400

# the threshold to create trunk file
# when the free trunk file size less than the threshold, will create
# the trunk files
# default value is 0
# since V3.06
trunk_create_file_space_threshold = 20G

# if check trunk space occupying when loading trunk free spaces
# the occupied spaces will be ignored
# default value is false
# since V3.09
# NOTICE: set this parameter to true will slow the loading of trunk spaces
# when startup. you should set this parameter to true when neccessary.
trunk_init_check_occupying = false

# if ignore storage_trunk.dat, reload from trunk binlog
# default value is false
# since V3.10
# set to true once for version upgrade when your version less than V3.10
trunk_init_reload_from_binlog = false

# the min interval for compressing the trunk binlog file
# unit: second
# default value is 0, 0 means never compress
# FastDFS compress the trunk binlog when trunk init and trunk destroy
# recommand to set this parameter to 86400 (one day)
# since V5.01
trunk_compress_binlog_min_interval = 0

# if use storage ID instead of IP address
# default value is false
# since V4.00
use_storage_id = false

# specify storage ids filename, can use relative or absolute path
# since V4.00
storage_ids_filename = storage_ids.conf

# id type of the storage server in the filename, values are:
## ip: the ip address of the storage server
## id: the server id of the storage server
# this paramter is valid only when use_storage_id set to true
# default value is ip
# since V4.03
id_type_in_filename = ip

# if store slave file use symbol link
# default value is false
# since V4.01
store_slave_file_use_link = false

# if rotate the error log every day
# default value is false
# since V4.02
rotate_error_log = false

# rotate error log time base, time format: Hour:Minute
# Hour from 0 to 23, Minute from 0 to 59
# default value is 00:00
# since V4.02
error_log_rotate_time=00:00

# rotate error log when the log file exceeds this size
# 0 means never rotates log file by log file size
# default value is 0
# since V4.02
rotate_error_log_size = 0

# keep days of the log files
# 0 means do not delete old log files
# default value is 0
log_file_keep_days = 0

# if use connection pool
# default value is false
# since V4.05
use_connection_pool = false

# connections whose the idle time exceeds this time will be closed
# unit: second
# default value is 3600
# since V4.05
connection_pool_max_idle_time = 3600

# HTTP port on this tracker server
http.server_port=8080

# check storage HTTP server alive interval seconds
# <= 0 for never check
# default value is 30
http.check_alive_interval=30

# check storage HTTP server alive type, values are:
#   tcp : connect to the storge server with HTTP port only,
#         do not request and get response
#   http: storage check alive url must return http status 200
# default value is tcp
http.check_alive_type=tcp

# check storage HTTP server alive uri/url
# NOTE: storage embed HTTP server support uri: /status.html
http.check_alive_uri=/status.html
```
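The sample file is long. A small helper that prints only the uncommented value(s) of a key makes it easy to double-check the settings changed for this setup (base_path, port, store_lookup) before starting the service. This bash/awk sketch assumes simple `key = value` lines. It skips `#` comment lines, including `#include` directives, and prints every occurrence, because keys such as tracker_server may legitimately appear more than once. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the uncommented value(s) of KEY in a FastDFS
# .conf file, one per line; exit status 1 if the key is not found.
fdfs_conf_get() {
  local file=$1 key=$2
  awk -F '=' -v k="$key" '
    /^[ \t]*#/ { next }                          # comment lines (and #include)
    index($0, "=") == 0 { next }                 # lines without "key=value"
    {
      name = $1
      gsub(/^[ \t]+|[ \t]+$/, "", name)          # trim the key
      if (name != k) next
      val = substr($0, index($0, "=") + 1)       # keep any "=" inside the value
      gsub(/^[ \t]+|[ \t]+$/, "", val)           # trim the value
      print val
      hit = 1
    }
    END { exit(hit ? 0 : 1) }' "$file"
}

# Usage (on the tracker node):
#   fdfs_conf_get /etc/fdfs/tracker.conf base_path
#   fdfs_conf_get /etc/fdfs/tracker.conf store_lookup
```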

2. Create the corresponding directory and start the tracker:

```
[root@node1 fdfs]# mkdir /data/fdfs/tracker -pv
mkdir: created directory ‘/data/fdfs’
mkdir: created directory ‘/data/fdfs/tracker’
# Start the tracker
[root@node1 fdfs]# service fdfs_trackerd start
Starting fdfs_trackerd (via systemctl): [ OK ]
# The service scripts
[root@node1 fdfs]# ls /etc/rc.d/init.d/
fdfs_storaged  fdfs_trackerd  functions  jexec  netconsole  network  README
# Check the listening port 22122
[root@node1 fdfs]# ss -tnlp | grep "fdfs_trackerd"
LISTEN 0 128 *:22122 *:* users:(("fdfs_trackerd",pid=4835,fd=5))
```
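The port may not be open yet right after `service fdfs_trackerd start`. The bash sketch below polls `ss -tnl` until something listens on the given port (22122 for the tracker) or gives up after a timeout. The function name and the default 10-second timeout are illustrative choices. The sketch assumes the `ss` column layout shown above, with the local address in the fourth column.

```bash
#!/usr/bin/env bash
# Hypothetical helper: wait until a TCP port is listening, polling once per
# second for up to TIMEOUT seconds (default 10).
wait_for_port() {
  local port=$1 timeout=${2:-10} i
  for ((i = 0; i < timeout; i++)); do
    # Column 4 of `ss -tnl` is the local address, e.g. "*:22122" or "[::]:22122";
    # match it by its ":PORT" suffix.
    if ss -tnl | awk -v p=":$port" '
         substr($4, length($4) - length(p) + 1) == p { found = 1 }
         END { exit !found }'; then
      return 0
    fi
    sleep 1
  done
  echo "nothing listening on port $port after ${timeout}s" >&2
  return 1
}

# Usage:
#   service fdfs_trackerd start && wait_for_port 22122 10
```

The same check works for a storage node by passing port 23000.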

The tracker node is now configured.

Configuring and starting storage (node2 and node3: 192.168.1.113 and 192.168.1.114)

1. Edit the storage configuration file as follows (on both node2 and node3):

```
[root@node2 fdfs]# cp storage.conf.sample storage.conf
[root@node2 fdfs]# vim storage.conf
```

```ini
# is this config file disabled
# false for enabled
# true for disabled
disabled=false

# the name of the group this storage server belongs to
#
# comment or remove this item for fetching from tracker server,
# in this case, use_storage_id must set to true in tracker.conf,
# and storage_ids.conf must be configed correctly.
group_name=group1

# bind an address of this host
# empty for bind all addresses of this host
bind_addr=

# if bind an address of this host when connect to other servers
# (this storage server as a client)
# true for binding the address configed by above parameter: "bind_addr"
# false for binding any address of this host
client_bind=true

# the storage server port
port=23000

# connect timeout in seconds
# default value is 30s
connect_timeout=30

# network timeout in seconds
# default value is 30s
network_timeout=60

# heart beat interval in seconds
heart_beat_interval=30

# disk usage report interval in seconds
stat_report_interval=60

# the base path to store data and log files
# (where this storage node keeps its data)
base_path=/data/fdfs/storage

# max concurrent connections the server supported
# default value is 256
# more max_connections means more memory will be used
max_connections=256

# the buff size to recv / send data
# this parameter must more than 8KB
# default value is 64KB
# since V2.00
buff_size = 256KB

# accept thread count
# default value is 1
# since V4.07
accept_threads=1

# work thread count, should <= max_connections
# work thread deal network io
# default value is 4
# since V2.00
work_threads=4

# if disk read / write separated
## false for mixed read and write
## true for separated read and write
# default value is true
# since V2.00
disk_rw_separated = true

# disk reader thread count per store base path
# for mixed read / write, this parameter can be 0
# default value is 1
# since V2.00
disk_reader_threads = 1

# disk writer thread count per store base path
# for mixed read / write, this parameter can be 0
# default value is 1
# since V2.00
disk_writer_threads = 1

# when no entry to sync, try read binlog again after X milliseconds
# must > 0, default value is 200ms
sync_wait_msec=50

# after sync a file, usleep milliseconds
# 0 for sync successively (never call usleep)
sync_interval=0

# storage sync start time of a day, time format: Hour:Minute
# Hour from 0 to 23, Minute from 0 to 59
sync_start_time=00:00

# storage sync end time of a day, time format: Hour:Minute
# Hour from 0 to 23, Minute from 0 to 59
sync_end_time=23:59

# write to the mark file after sync N files
# default value is 500
write_mark_file_freq=500

# path(disk or mount point) count, default value is 1
store_path_count=1

# store_path#, based 0, if store_path0 not exists, it's value is base_path
# the paths must be exist
# (data location for this storage node; if store_path0 is not set, the path
#  defined by base_path is used; in production each store path should be a
#  separate disk device)
store_path0=/data/fdfs/storage/0
#store_path1=/home/yuqing/fastdfs2

# subdir_count * subdir_count directories will be auto created under each
# store_path (disk), value can be 1 to 256, default value is 256
subdir_count_per_path=256

# tracker_server can ocur more than once, and tracker_server format is
# "host:port", host can be hostname or ip address
# (address and port of the tracker to connect to)
tracker_server=192.168.1.112:22122

#standard log level as syslog, case insensitive, value list:
### emerg for emergency
### alert
### crit for critical
### error
### warn for warning
### notice
### info
### debug
log_level=info

#unix group name to run this program,
#not set (empty) means run by the group of current user
run_by_group=

#unix username to run this program,
#not set (empty) means run by current user
run_by_user=

# allow_hosts can ocur more than once, host can be hostname or ip address,
# "*" (only one asterisk) means match all ip addresses
# we can use CIDR ips like 192.168.5.64/26
# and also use range like these: 10.0.1.[0-254] and host[01-08,20-25].domain.com
# for example:
# allow_hosts=10.0.1.[1-15,20]
# allow_hosts=host[01-08,20-25].domain.com
# allow_hosts=192.168.5.64/26
allow_hosts=*

# the mode of the files distributed to the data path
# 0: round robin(default)
# 1: random, distributted by hash code
file_distribute_path_mode=0

# valid when file_distribute_to_path is set to 0 (round robin),
# when the written file count reaches this number, then rotate to next path
# default value is 100
file_distribute_rotate_count=100

# call fsync to disk when write big file
# 0: never call fsync
# other: call fsync when written bytes >= this bytes
# default value is 0 (never call fsync)
fsync_after_written_bytes=0

# sync log buff to disk every interval seconds
# must > 0, default value is 10 seconds
sync_log_buff_interval=10

# sync binlog buff / cache to disk every interval seconds
# default value is 60 seconds
sync_binlog_buff_interval=10

# sync storage stat info to disk every interval seconds
# default value is 300 seconds
sync_stat_file_interval=300

# thread stack size, should >= 512KB
# default value is 512KB
thread_stack_size=512KB

# the priority as a source server for uploading file.
# the lower this value, the higher its uploading priority.
# default value is 10
upload_priority=10

# the NIC alias prefix, such as eth in Linux, you can see it by ifconfig -a
# multi aliases split by comma. empty value means auto set by OS type
# default values is empty
if_alias_prefix=

# if check file duplicate, when set to true, use FastDHT to store file indexes
# 1 or yes: need check
# 0 or no: do not check
# default value is 0
check_file_duplicate=0

# file signature method for check file duplicate
## hash: four 32 bits hash code
## md5: MD5 signature
# default value is hash
# since V4.01
file_signature_method=hash

# namespace for storing file indexes (key-value pairs)
# this item must be set when check_file_duplicate is true / on
key_namespace=FastDFS

# set keep_alive to 1 to enable persistent connection with FastDHT servers
# default value is 0 (short connection)
keep_alive=0

# you can use "#include filename" (not include double quotes) directive to
# load FastDHT server list, when the filename is a relative path such as
# pure filename, the base path is the base path of current/this config file.
# must set FastDHT server list when check_file_duplicate is true / on
# please see INSTALL of FastDHT for detail
##include /home/yuqing/fastdht/conf/fdht_servers.conf

# if log to access log
# default value is false
# since V4.00
use_access_log = false

# if rotate the access log every day
# default value is false
# since V4.00
rotate_access_log = false

# rotate access log time base, time format: Hour:Minute
# Hour from 0 to 23, Minute from 0 to 59
# default value is 00:00
# since V4.00
access_log_rotate_time=00:00

# if rotate the error log every day
# default value is false
# since V4.02
rotate_error_log = false

# rotate error log time base, time format: Hour:Minute
# Hour from 0 to 23, Minute from 0 to 59
# default value is 00:00
# since V4.02
error_log_rotate_time=00:00

# rotate access log when the log file exceeds this size
# 0 means never rotates log file by log file size
# default value is 0
# since V4.02
rotate_access_log_size = 0

# rotate error log when the log file exceeds this size
# 0 means never rotates log file by log file size
# default value is 0
# since V4.02
rotate_error_log_size = 0

# keep days of the log files
# 0 means do not delete old log files
# default value is 0
log_file_keep_days = 0

# if skip the invalid record when sync file
# default value is false
# since V4.02
file_sync_skip_invalid_record=false

# if use connection pool
# default value is false
# since V4.05
use_connection_pool = false

# connections whose the idle time exceeds this time will be closed
# unit: second
# default value is 3600
# since V4.05
connection_pool_max_idle_time = 3600

# use the ip address of this storage server if domain_name is empty,
# else this domain name will ocur in the url redirected by the tracker server
http.domain_name=

# the port of the web server on this storage server
# (must be the same as http.server_port in tracker.conf)
http.server_port=8080
```

2. Create the corresponding directories:

```
[root@node2 ~]# mkdir -pv /data/fdfs/storage/0
mkdir: created directory ‘/data/fdfs’
mkdir: created directory ‘/data/fdfs/storage’
mkdir: created directory ‘/data/fdfs/storage/0’
```
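storage.conf notes that the store paths must exist, so the mkdir above has to match the base_path and store_pathN values in the file. The bash/awk sketch below reads those keys from the file and creates each directory. It assumes plain `key=value` lines and ignores commented-out entries such as `#store_path1=...`. The function name `make_store_paths` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: create base_path and every uncommented store_pathN
# listed in a storage.conf, printing "ok: DIR" for each one.
make_store_paths() {
  local conf=$1 dir
  [ -r "$conf" ] || { echo "cannot read $conf" >&2; return 1; }
  while IFS= read -r dir; do
    mkdir -p -- "$dir" || return 1
    echo "ok: $dir"
  done < <(awk -F '=' '
    /^[ \t]*#/ { next }                              # skip comments
    {
      key = $1; gsub(/[ \t]/, "", key)
      if (key == "base_path" || key ~ /^store_path[0-9]+$/) {
        val = substr($0, index($0, "=") + 1)
        gsub(/^[ \t]+|[ \t]+$/, "", val)             # trim the value
        if (val != "") print val
      }
    }' "$conf")
}

# Usage (on each storage node, after editing storage.conf):
#   make_store_paths /etc/fdfs/storage.conf
```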

3. Start the storage service and check that it listens on port 23000:

```
[root@node2 ~]# service fdfs_storaged start
Starting fdfs_storaged (via systemctl): [ OK ]
[root@node2 ~]# ss -tnlp | grep "fdfs"
LISTEN 0 128 *:23000 *:* users:(("fdfs_storaged",pid=1926,fd=5))
```

The storage nodes are now configured.

Configuring the client (on node3)

```
[root@node3 fdfs]# cp client.conf.sample client.conf
[root@node3 fdfs]# vim client.conf
[root@node3 fdfs]# cat client.conf
```

```ini
# connect timeout in seconds
# default value is 30s
connect_timeout=30

# network timeout in seconds
# default value is 30s
network_timeout=60

# the base path to store log files
# (where the log files are kept; here the same directory as the storage data)
base_path=/data/fdfs/storage

# tracker_server can ocur more than once, and tracker_server format is
# "host:port", host can be hostname or ip address
# (address and port of the tracker to connect to)
tracker_server=192.168.1.112:22122

#standard log level as syslog, case insensitive, value list:
### emerg for emergency
### alert
### crit for critical
### error
### warn for warning
### notice
### info
### debug
log_level=info

# if use connection pool
# default value is false
# since V4.05
use_connection_pool = false

# connections whose the idle time exceeds this time will be closed
# unit: second
# default value is 3600
# since V4.05
connection_pool_max_idle_time = 3600

# if load FastDFS parameters from tracker server
# since V4.05
# default value is false
load_fdfs_parameters_from_tracker=false

# if use storage ID instead of IP address
# same as tracker.conf
# valid only when load_fdfs_parameters_from_tracker is false
# default value is false
# since V4.05
use_storage_id = false

# specify storage ids filename, can use relative or absolute path
# same as tracker.conf
# valid only when load_fdfs_parameters_from_tracker is false
# since V4.05
storage_ids_filename = storage_ids.conf

#HTTP settings
http.tracker_server_port=80

#use "#include" directive to include HTTP other settiongs
##include http.conf
```

1. The fdfs_monitor tool shows the status of the tracker and of every storage node:

```
[root@node3 fdfs]# fdfs_monitor client.conf
[2017-03-23 23:36:04] DEBUG - base_path=/data/fdfs/storage, connect_timeout=30, network_timeout=60, tracker_server_count=1, anti_steal_token=0, anti_steal_secret_key length=0, use_connection_pool=0, g_connection_pool_max_idle_time=3600s, use_storage_id=0, storage server id count: 0

server_count=1, server_index=0

tracker server is 192.168.1.112:22122

group count: 1

Group 1:
group name = group1
disk total space = 40940 MB
disk free space = 39952 MB
trunk free space = 0 MB
storage server count = 2
active server count = 2
storage server port = 23000
storage HTTP port = 8080
store path count = 1
subdir count per path = 256
current write server index = 0
current trunk file id = 0

  Storage 1:
    id = 192.168.1.113
    ip_addr = 192.168.1.113 (node2.taotao.com) ACTIVE
    http domain =
    version = 5.10
    join time = 2017-03-23 23:11:26
    up time = 2017-03-23 23:11:26
    total storage = 40940 MB
    free storage = 40521 MB
    upload priority = 10
    store_path_count = 1
    subdir_count_per_path = 256
    storage_port = 23000
    storage_http_port = 8080
    current_write_path = 0
    source storage id =
    if_trunk_server = 0
    connection.alloc_count = 256
    connection.current_count = 1
    connection.max_count = 1
    total_upload_count = 0
    success_upload_count = 0
    total_append_count = 0
    total_file_write_count = 0
    success_file_write_count = 0
    last_heart_beat_time = 2017-03-23 23:35:59
    last_source_update = 1970-01-01 08:00:00
    last_sync_update = 1970-01-01 08:00:00
    last_synced_timestamp = 1970-01-01 08:00:00
  Storage 2:
    id = 192.168.1.114
    ip_addr = 192.168.1.114 (node3.taotao.com) ACTIVE
    http domain =
    version = 5.10
    join time = 2017-03-23 23:15:37
    up time = 2017-03-23 23:15:37
    total storage = 40940 MB
    free storage = 39952 MB
    upload priority = 10
    store_path_count = 1
    subdir_count_per_path = 256
    storage_port = 23000
    storage_http_port = 8080
    current_write_path = 0
    source storage id = 192.168.1.113
    if_trunk_server = 0
    connection.alloc_count = 256
    total_file_open_count = 0
    success_file_open_count = 0
    total_file_read_count = 0
    success_file_read_count = 0
    total_file_write_count = 0
    success_file_write_count = 0
    last_heart_beat_time = 2017-03-23 23:35:41
    last_source_update = 1970-01-01 08:00:00
    last_sync_update = 1970-01-01 08:00:00
    last_synced_timestamp = 1970-01-01 08:00:00
```
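On a larger cluster it helps to reduce fdfs_monitor's output to one line per group. The awk sketch below is wrapped in a bash function with a hypothetical name. For each `group name = ...` section, it counts how many `ip_addr = ...` lines end in `ACTIVE`, relying only on the line formats shown in the output above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read fdfs_monitor output on stdin and print
# "<group> <active storages>/<listed storages>" for each group.
summarize_monitor() {
  awk '
    /^[ \t]*group name = / { g = $NF; order[++n] = g; next }   # start of a group
    /^[ \t]*ip_addr = / {
      total[g]++
      if ($NF == "ACTIVE") active[g]++                          # status is the last field
    }
    END {
      for (i = 1; i <= n; i++)
        printf "%s %d/%d\n", order[i], active[order[i]], total[order[i]]
    }'
}

# Usage (on the client node):
#   fdfs_monitor /etc/fdfs/client.conf | summarize_monitor
```

For the output above, this would print `group1 2/2`.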

Testing:

Upload a file

```
# Pass the configuration file and the file to upload
[root@node3 fdfs]# fdfs_upload_file -h
Usage: fdfs_upload_file <config_file> <local_filename> [storage_ip:port] [store_path_index]
[root@node3 fdfs]# fdfs_upload_file /etc/fdfs/client.conf /etc/fstab
group1/M00/00/00/wKgBcVjT7QSAYAiFAAACU3UXaYE5252654
# ^ the file ID (path) of the uploaded file
```

View information about the uploaded file

```
[root@node3 fdfs]# fdfs_file_info -h
Usage: fdfs_file_info <config_file> <file_id>
[root@node3 fdfs]# fdfs_file_info /etc/fdfs/client.conf group1/M00/00/00/wKgBcVjT7QSAYAiFAAACU3UXaYE5252654
source storage id: 0
source ip address: 192.168.1.113            # where the file is stored
file create timestamp: 2017-03-23 23:43:00  # creation time
file size: 595                              # size
file crc32: 1964468609 (0x75176981)         # checksum
```
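The fields reported by fdfs_file_info line up with what the file name itself encodes. The bash sketch below decodes the first 27 characters of the name under assumed details of FastDFS's encoding:

- the alphabet is a base64 variant that uses `-` and `_` instead of `+` and `/`;
- the decoded 20 bytes hold the source IP (4 bytes), the creation timestamp (4), the file size (8) and the CRC32 (4).

Only the low 4 bytes of the size field are read, because the high bytes appear to carry other data. The characters after the first 27 are not decoded. The sketch needs GNU `base64` and `od`, and the function name is hypothetical. For the file uploaded above, it reproduces the IP, size, and CRC32 that fdfs_file_info printed.

```bash
#!/usr/bin/env bash
# Hypothetical helper: decode the first 27 characters of a FastDFS file name.
# Assumed layout (20 bytes, big-endian): IP (4), timestamp (4), size (8), CRC32 (4).
decode_fdfs_name() {
  local name=${1##*/}                  # accept a full file ID or just the name
  local b64=${name:0:27}
  # Map the assumed '-' / '_' characters back to '+' / '/', pad to 28 chars.
  b64=$(printf '%s=' "$b64" | tr '_-' '/+')
  local hex
  hex=$(printf '%s' "$b64" | base64 -d 2>/dev/null | od -An -tx1 | tr -d ' \n')
  if [ ${#hex} -ne 40 ]; then          # 20 bytes = 40 hex digits
    echo "cannot decode: $1" >&2
    return 1
  fi
  printf 'ip=%d.%d.%d.%d timestamp=%d size=%d crc32=0x%s\n' \
    "0x${hex:0:2}" "0x${hex:2:2}" "0x${hex:4:2}" "0x${hex:6:2}" \
    "$((16#${hex:8:8}))" "$((16#${hex:24:8}))" "${hex:32:8}"
}

decode_fdfs_name group1/M00/00/00/wKgBcVjT7QSAYAiFAAACU3UXaYE5252654
# prints: ip=192.168.1.113 timestamp=1490283780 size=595 crc32=0x75176981
```

`date -d @1490283780` converts the timestamp. In a UTC+8 time zone it shows 2017-03-23 23:43:00, the creation time reported above.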

Download the file

```
[root@node3 fdfs]# fdfs_download_file -h
Usage: fdfs_download_file <config_file> <file_id> [local_filename] [<download_offset> <download_bytes>]
[root@node3 fdfs]# fdfs_download_file /etc/fdfs/client.conf group1/M00/00/00/wKgBcVjT7QSAYAiFAAACU3UXaYE5252654 /tmp/fstab
[root@node3 fdfs]# cat /tmp/fstab

#
# /etc/fstab
# Created by anaconda on Tue Aug 30 09:45:37 2016
#
# Accessible filesystems, by reference, are maintained under '/dev/disk'
# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info
#
UUID=90880561-dca2-447b-a935-4c47e1bd03d8 / xfs defaults 0 0
UUID=219cc6c3-bd54-4bac-a47f-b498c491107f /boot xfs defaults 0 0
UUID=409f2fa0-f642-4cc2-9ed7-b20bda111d8d /usr xfs defaults 0 0
UUID=af279379-acbd-47f5-a814-870666bdd6d1 swap swap defaults 0 0
```
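The upload, download, and comparison steps above can be wrapped into a single check. The bash sketch below calls the same `fdfs_upload_file` and `fdfs_download_file` tools, using the argument order shown in their usage messages. It downloads into a private temporary directory and compares the two copies with `cmp`. The function name `fdfs_roundtrip` is hypothetical.

```bash
#!/usr/bin/env bash
# Upload a file, download it again by its file ID, and compare the bytes.
# Prints the file ID on success; returns 1 on any failure.
fdfs_roundtrip() {
  local conf=$1 file=$2 fid tmpdir status=1
  fid=$(fdfs_upload_file "$conf" "$file") || return 1
  if [ -z "$fid" ]; then
    echo "upload returned no file ID" >&2
    return 1
  fi
  tmpdir=$(mktemp -d) || return 1
  # Download to a fresh path inside the temporary directory.
  if fdfs_download_file "$conf" "$fid" "$tmpdir/copy" && cmp -s "$file" "$tmpdir/copy"; then
    echo "$fid"                        # success: print the file ID for later use
    status=0
  else
    echo "round trip failed for $fid" >&2
  fi
  rm -rf "$tmpdir"
  return "$status"
}

# Usage (on the client node):
#   fdfs_roundtrip /etc/fdfs/client.conf /etc/fstab
```

The fastdfs-tool package also installs `fdfs_delete_file`, which can remove such test files afterwards.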

This article originally appeared on the “逐梦小涛” blog; please retain this attribution: http://1992tao.blog.51cto.com/11606804/1909834
