Flume环境搭建_五种案例

Posted 日月的弯刀

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了Flume环境搭建_五种案例相关的知识,希望对你有一定的参考价值。

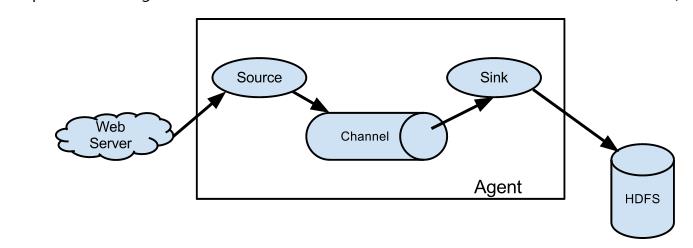

Flume环境搭建_五种案例

http://flume.apache.org/FlumeUserGuide.html

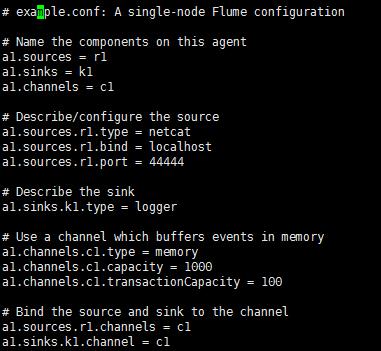

A simple example

Here, we give an example configuration file, describing a single-node Flume deployment. This configuration lets a user generate events and subsequently logs them to the console.

# example.conf: A single-node Flume configuration

# Name the components on this agent

a1.sources = r1

a1.sinks = k1

a1.channels = c1

# Describe/configure the source

a1.sources.r1.type = netcat

a1.sources.r1.bind = localhost

a1.sources.r1.port = 44444

# Describe the sink

a1.sinks.k1.type = logger

# Use a channel which buffers events in memory

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a1.sources.r1.channels = c1

a1.sinks.k1.channel = c1

This configuration defines a single agent named a1. a1 has a source that listens for data on port 44444, a channel that buffers event data in memory, and a sink that logs event data to the console. The configuration file names the various components, then describes their types and configuration parameters. A given configuration file might define several named agents; when a given Flume process is launched a flag is passed telling it which named agent to manifest.

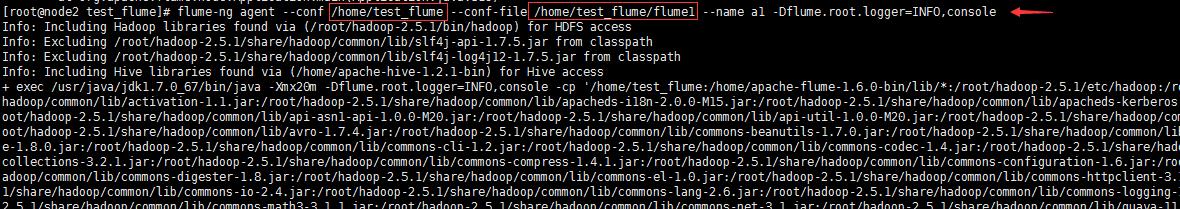

Given this configuration file, we can start Flume as follows:

$ bin/flume-ng agent --conf conf --conf-file example.conf --name a1 -Dflume.root.logger=INFO,console

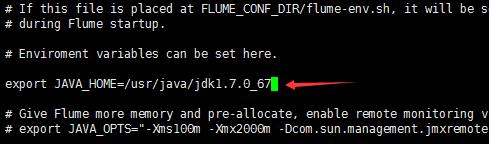

Note that in a full deployment we would typically include one more option: --conf=<conf-dir>. The <conf-dir> directory would include a shell script flume-env.sh and potentially a log4j properties file. In this example, we pass a Java option to force Flume to log to the console and we go without a custom environment script.

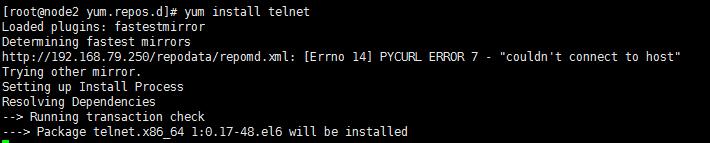

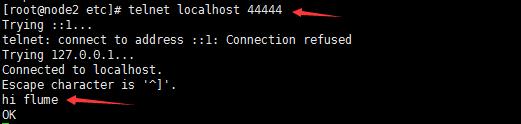

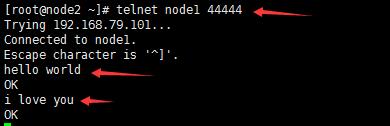

From a separate terminal, we can then telnet port 44444 and send Flume an event:

$ telnet localhost 44444

Trying 127.0.0.1...

Connected to localhost.localdomain (127.0.0.1).

Escape character is \'^]\'.

Hello world! <ENTER>

OK

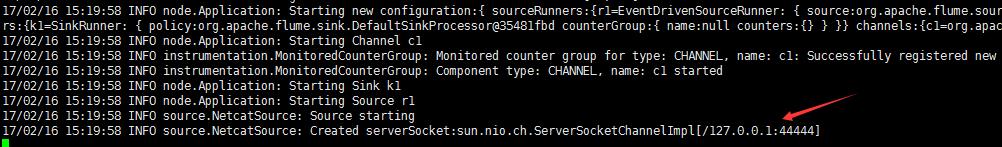

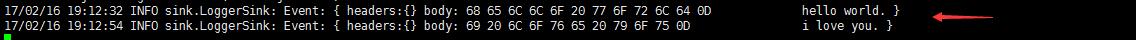

The original Flume terminal will output the event in a log message.

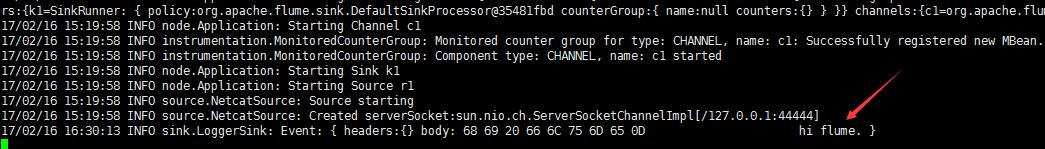

12/06/19 15:32:19 INFO source.NetcatSource: Source starting

12/06/19 15:32:19 INFO source.NetcatSource: Created serverSocket:sun.nio.ch.ServerSocketChannelImpl[/127.0.0.1:44444]

12/06/19 15:32:34 INFO sink.LoggerSink: Event: { headers:{} body: 48 65 6C 6C 6F 20 77 6F 72 6C 64 21 0D Hello world!. }

Congratulations - you’ve successfully configured and deployed a Flume agent! Subsequent sections cover agent configuration in much more detail.

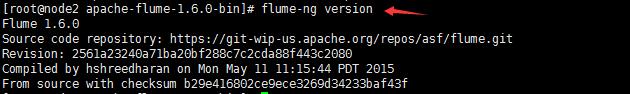

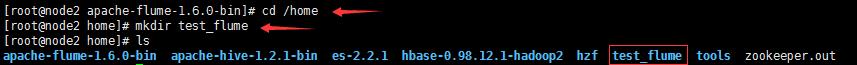

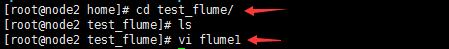

以下为具体搭建流程

Flume搭建_案例一:单个Flume

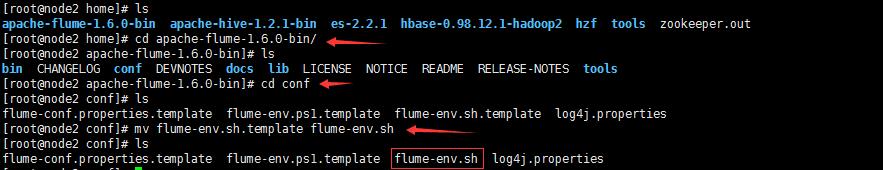

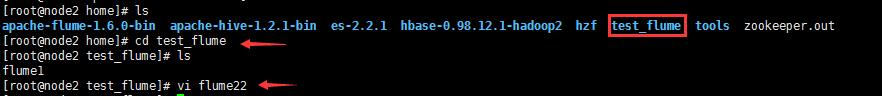

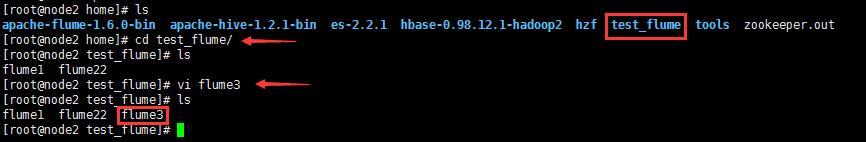

安装node2上

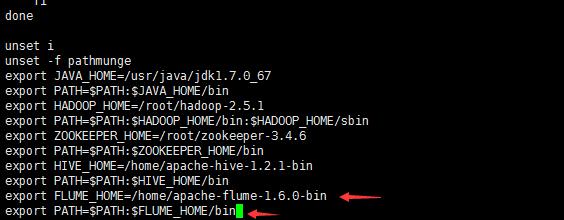

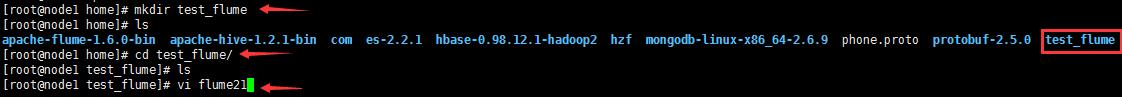

1. 上传到/home/tools,解压,解压后移动到/home下

2. 重命名,并修改flume-env.sh

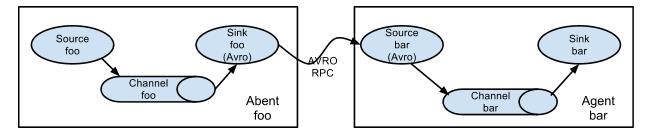

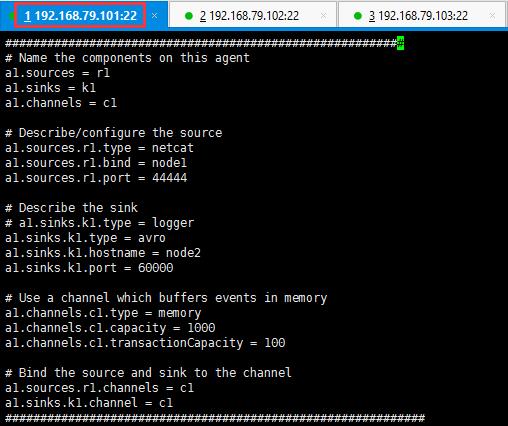

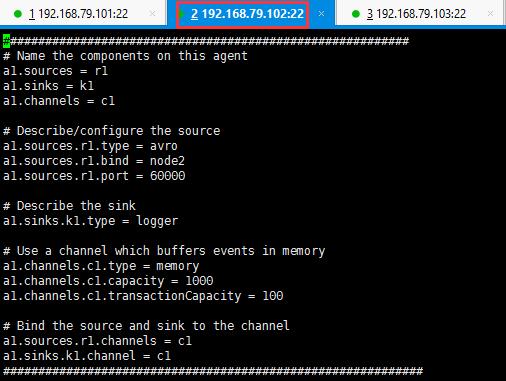

Flume搭建_案例二:两个Flume做集群

MemoryChanel配置capacity:默认该通道中最大的可以存储的event数量是100,trasactionCapacity:每次最大可以从source中拿到或者送到sink中的event数量也是100keep-alive:event添加到通道中或者移出的允许时间byte**:即event的字节量的限制,只包括eventbody

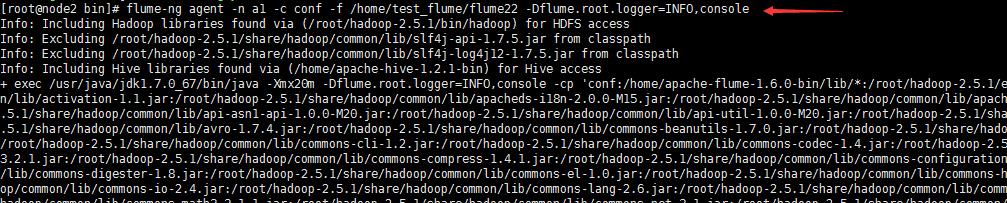

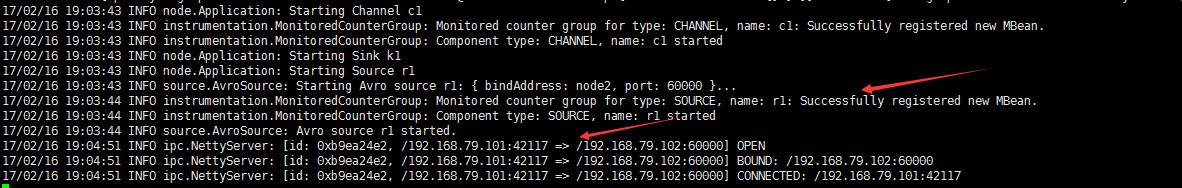

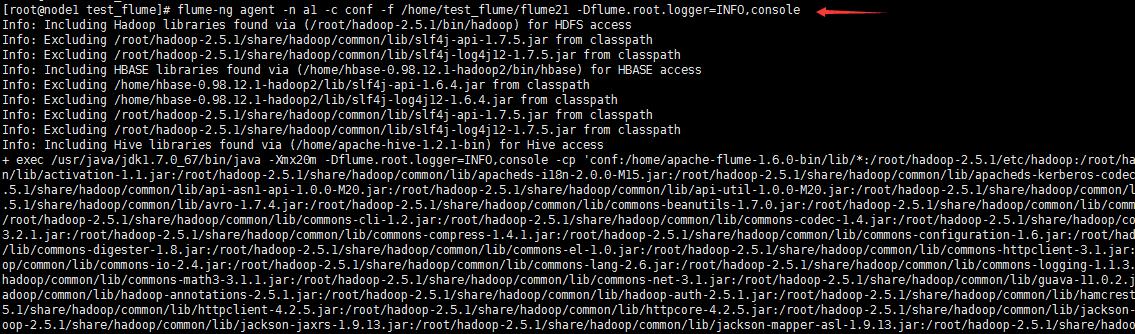

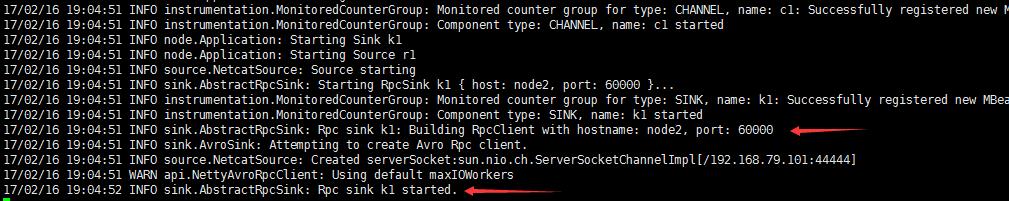

先启动node02的Flumeflume-ng agent -n a1 -c conf -f avro.conf -Dflume.root.logger=INFO,consoleflume-ng agent -n a1 -c conf -f /home/test_flume/flume22 -Dflume.root.logger=INFO,console再启动node01的Flumeflume-ng agent -n a1 -c conf -f simple.conf2 -Dflume.root.logger=INFO,consoleflume-ng agent -n a1 -c conf -f /home/test_flume/flume21 -Dflume.root.logger=INFO,console

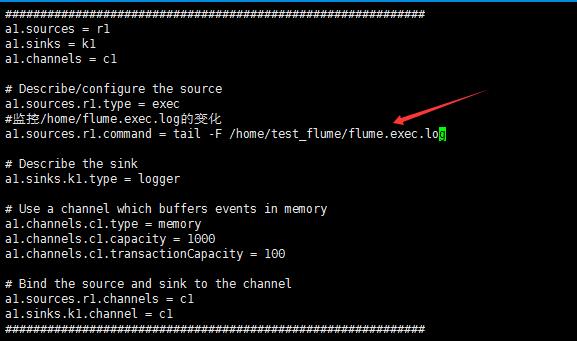

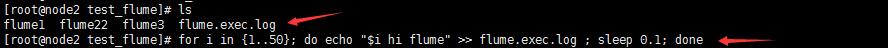

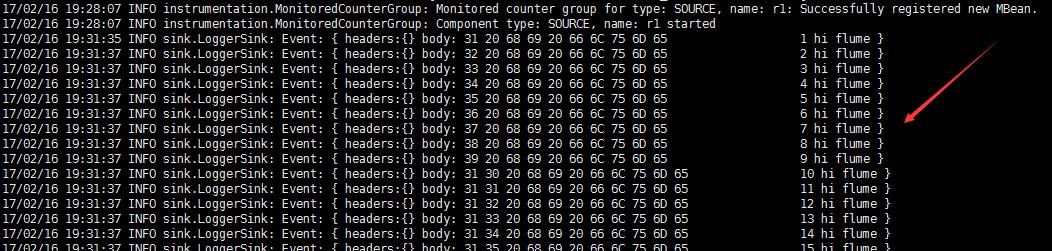

Flume搭建_案例三:如何监控一个文件的变化?

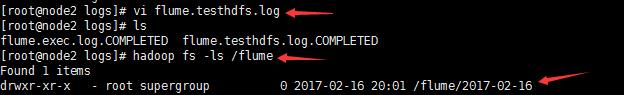

启动Flumeflume-ng agent -n a1 -c conf -f exec.conf -Dflume.root.logger=INFO,consoleflume-ng agent -n a1 -c conf -f /home/test_flume/flume3 -Dflume.root.logger=INFO,console

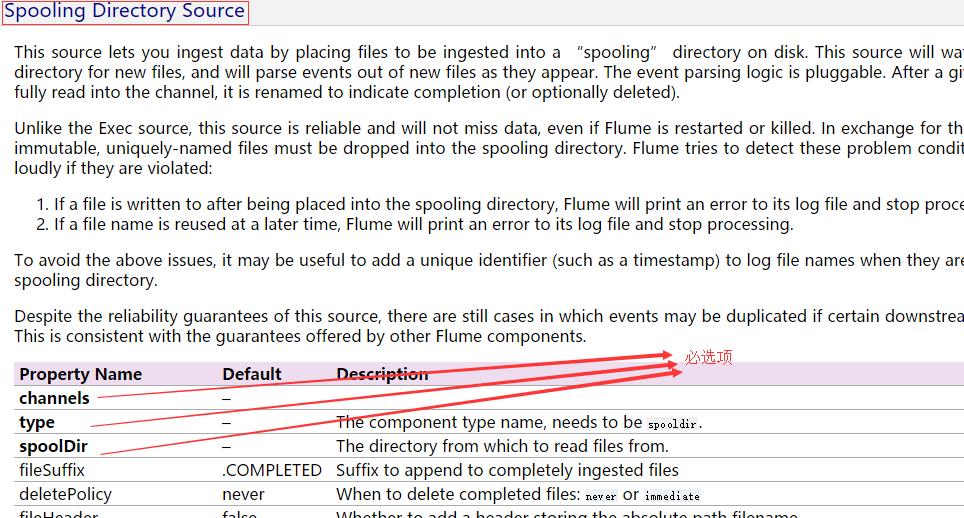

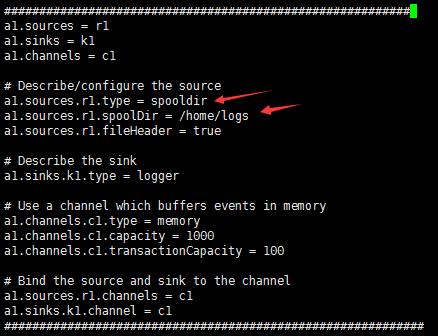

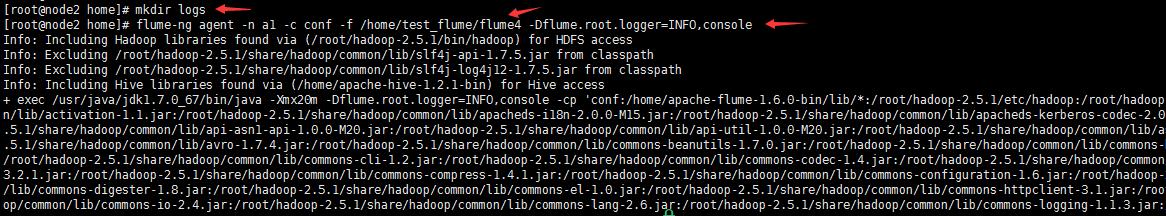

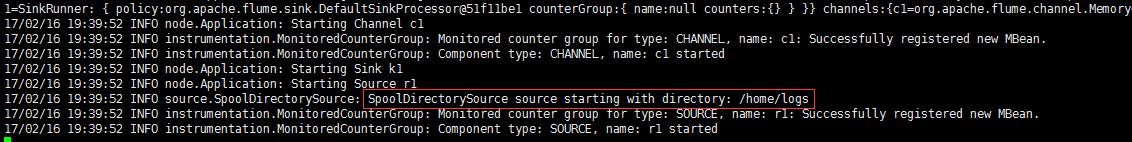

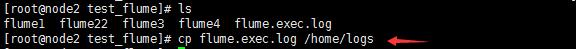

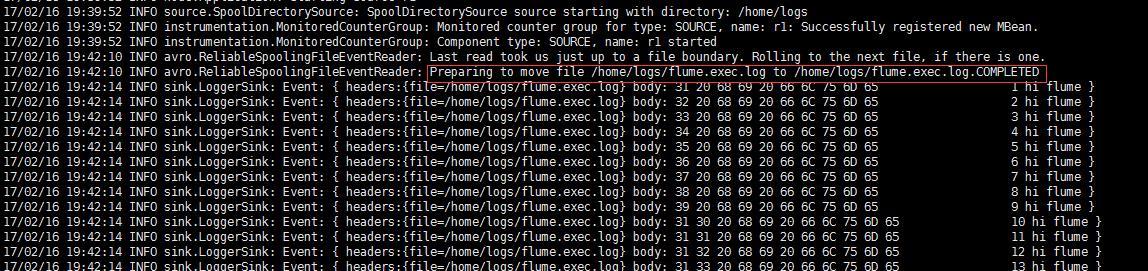

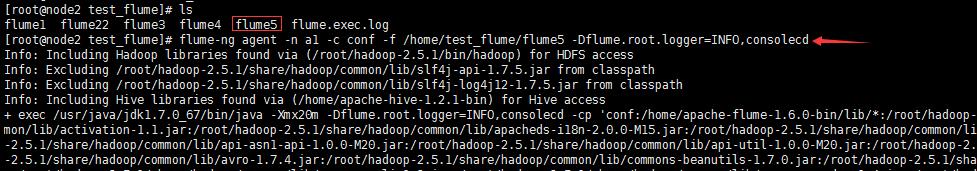

Flume搭建_案例四: 如何监控一个文件:目录的变化?

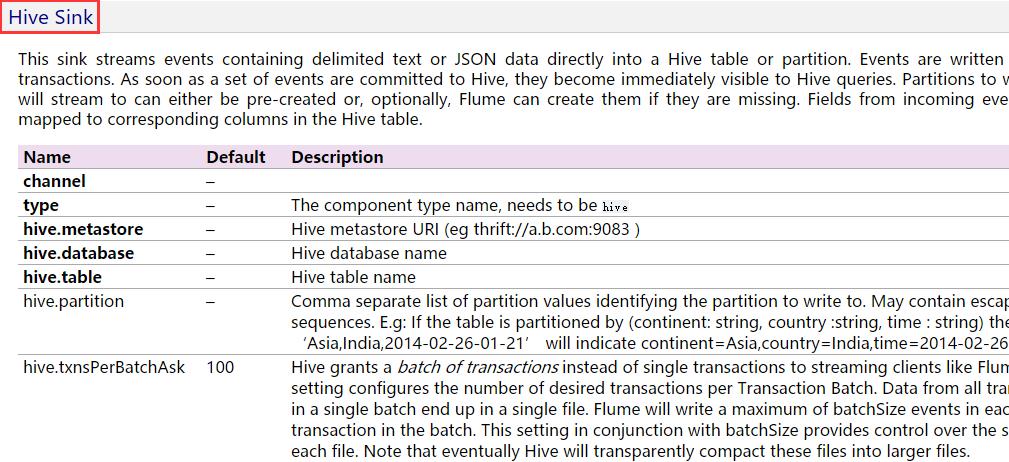

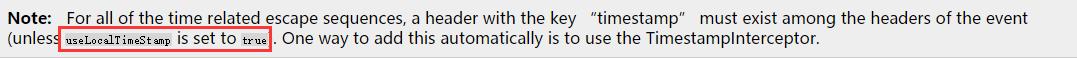

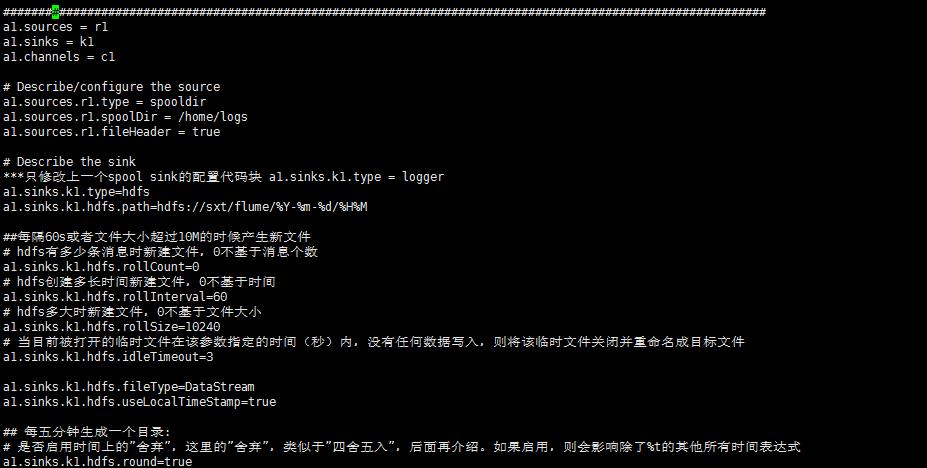

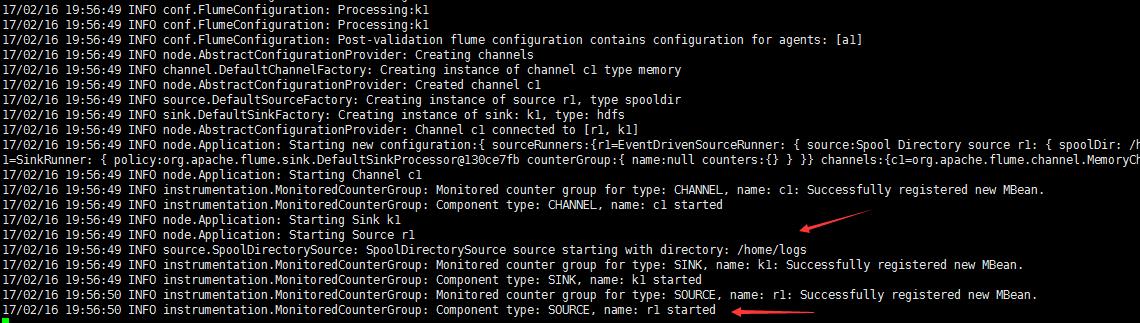

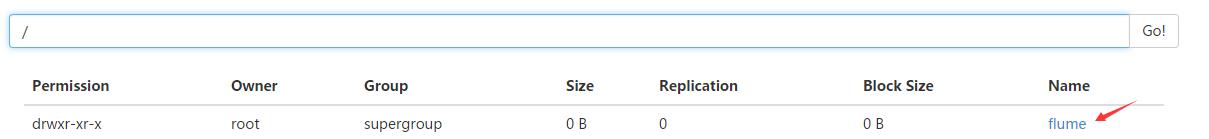

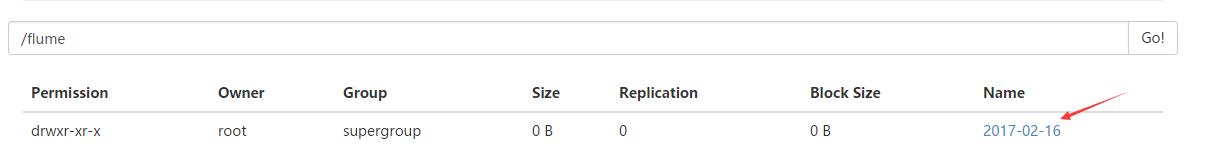

Flume搭建_案例五: 如何定义一个HDFS类型的Sink?

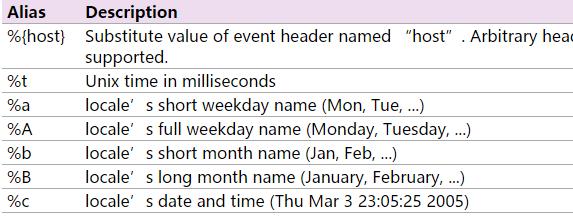

Flume搭建_案例五_配置项解读

| hdfs.rollInterval | 30 | Number of seconds to wait before rolling current file (0 = never roll based on time interval) |

| hdfs.rollSize | 1024 | File size to trigger roll, in bytes (0: never roll based on file size) |

| hdfs.rollCount | 10 | Number of events written to file before it rolled (0 = never roll based on number of events) |

4. 多长时间没有操作,Flume将一个临时文件生成新文件?

| hdfs.idleTimeout | 0 | Timeout after which inactive files get closed (0 = disable automatic closing of idle files) |

5. 多长时间生成一个新的目录?(比如每10s生成一个新的目录)

四舍五入,没有五入,只有四舍

(比如57分划分为55分,5,6,7,8,9在一个目录,10,11,12,13,14在一个目录)

| hdfs.round | false | Should the timestamp be rounded down (if true, affects all time based escape sequences except %t) |

| hdfs.roundValue | 1 | Rounded down to the highest multiple of this (in the unit configured using hdfs.roundUnit), less than current time. |

| hdfs.roundUnit | second | The unit of the round down value - second, minute or hour. |

以上是关于Flume环境搭建_五种案例的主要内容,如果未能解决你的问题,请参考以下文章