编写Spark的WordCount程序并提交到集群运行[含scala和java两个版本]

Posted 十光年

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了编写Spark的WordCount程序并提交到集群运行[含scala和java两个版本]相关的知识,希望对你有一定的参考价值。

编写Spark的WordCount程序并提交到集群运行[含scala和java两个版本]

1. 开发环境

Jdk 1.7.0_72 Maven 3.2.1 Scala 2.10.6 Spark 1.6.2 Hadoop 2.6.4 IntelliJ IDEA 2016.1.1

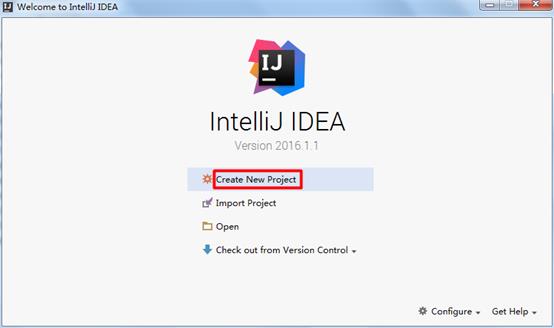

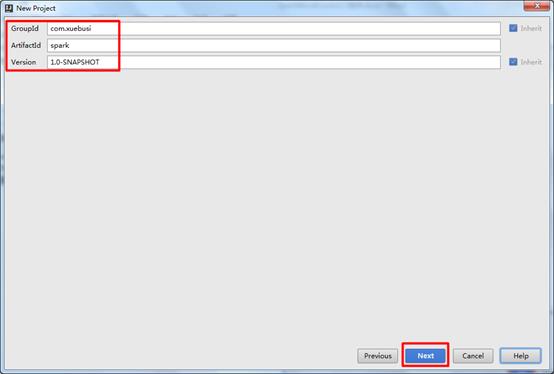

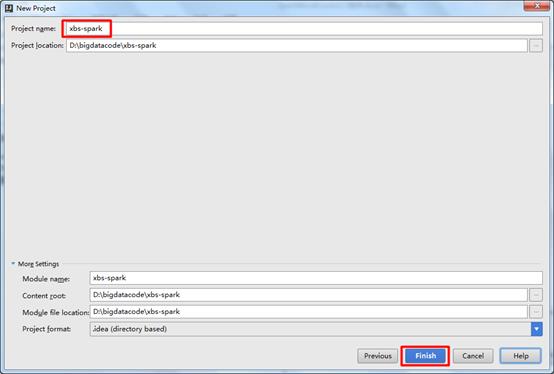

2. 创建项目

1) 新建Maven项目

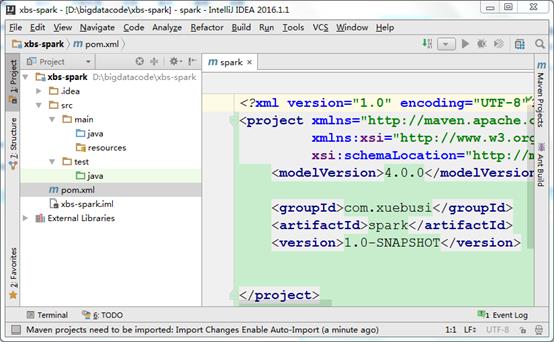

2) 在pom文件中导入依赖

pom.xml文件内容如下:

<?xml version="1.0" encoding="UTF-8"?> <project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd"> <modelVersion>4.0.0</modelVersion> <groupId>com.xuebusi</groupId> <artifactId>spark</artifactId> <version>1.0-SNAPSHOT</version> <properties> <maven.compiler.source>1.7</maven.compiler.source> <maven.compiler.target>1.7</maven.compiler.target> <encoding>UTF-8</encoding> <!-- 这里对jar包版本做集中管理 --> <scala.version>2.10.6</scala.version> <spark.version>1.6.2</spark.version> <hadoop.version>2.6.4</hadoop.version> </properties> <dependencies> <dependency> <!-- scala语言核心包 --> <groupId>org.scala-lang</groupId> <artifactId>scala-library</artifactId> <version>${scala.version}</version> </dependency> <dependency> <!-- spark核心包 --> <groupId>org.apache.spark</groupId> <artifactId>spark-core_2.10</artifactId> <version>${spark.version}</version> </dependency> <dependency> <!-- hadoop的客户端,用于访问HDFS --> <groupId>org.apache.hadoop</groupId> <artifactId>hadoop-client</artifactId> <version>${hadoop.version}</version> </dependency> </dependencies> <build> <sourceDirectory>src/main/scala</sourceDirectory> <testSourceDirectory>src/test/scala</testSourceDirectory> <plugins> <plugin> <groupId>net.alchim31.maven</groupId> <artifactId>scala-maven-plugin</artifactId> <version>3.2.2</version> <executions> <execution> <goals> <goal>compile</goal> <goal>testCompile</goal> </goals> <configuration> <args> <arg>-make:transitive</arg> <arg>-dependencyfile</arg> <arg>${project.build.directory}/.scala_dependencies</arg> </args> </configuration> </execution> </executions> </plugin> <plugin> <groupId>org.apache.maven.plugins</groupId> <artifactId>maven-shade-plugin</artifactId> <version>2.4.3</version> <executions> <execution> <phase>package</phase> <goals> <goal>shade</goal> </goals> <configuration> <filters> <filter> <artifact>*:*</artifact> <excludes> <exclude>META-INF/*.SF</exclude> <exclude>META-INF/*.DSA</exclude> <exclude>META-INF/*.RSA</exclude> </excludes> </filter> </filters> <!-- 由于我们的程序可能有很多,所以这里可以不用指定main方法所在的类名,我们可以在提交spark程序的时候手动指定要调用那个main方法 --> <!-- <transformers> <transformer implementation="org.apache.maven.plugins.shade.resource.ManifestResourceTransformer"> <mainClass>com.xuebusi.spark.WordCount</mainClass> </transformer> </transformers> --> </configuration> </execution> </executions> </plugin> </plugins> </build> </project>

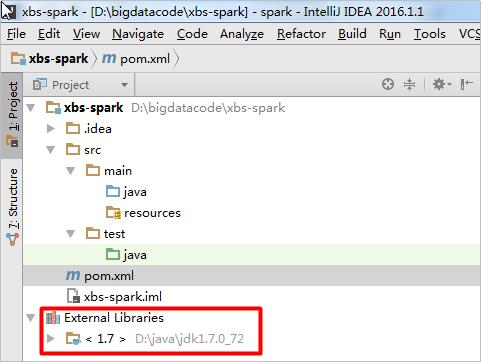

虽然我们的pom文件中的jar包依赖准备好了,但是在Project的External Libraries缺少Maven依赖:

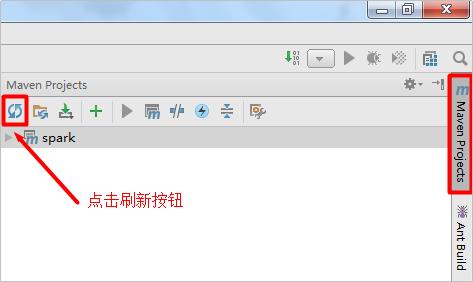

需要点击右侧的Maven Project侧边栏中的刷新按钮,才会导入Maven依赖,前提是保证电脑能够联网,Maven可能会到中央仓库下载一些依赖:

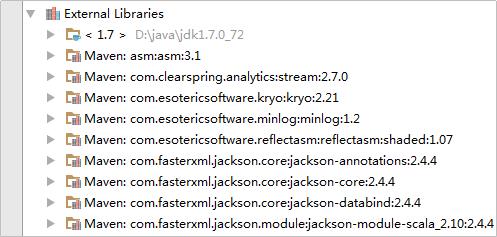

在左侧的Project侧边栏中的External Libraries下就可以看到新导入的Maven依赖了:

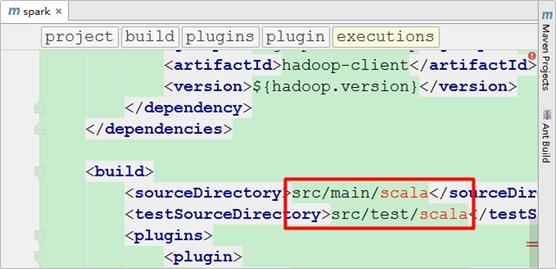

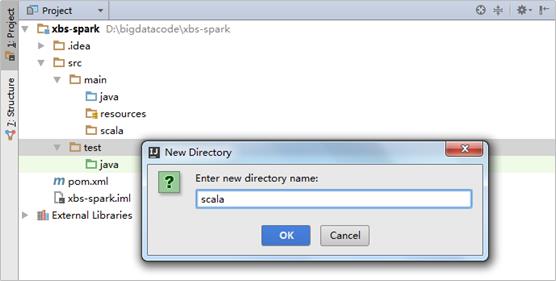

但是在pom.xml文件中还有错误提示,因为src/main/和src/test/这两个目录下面没有scala目录:

分别在main和test目录之上点击鼠标右键选择new->Directory创建scala目录:

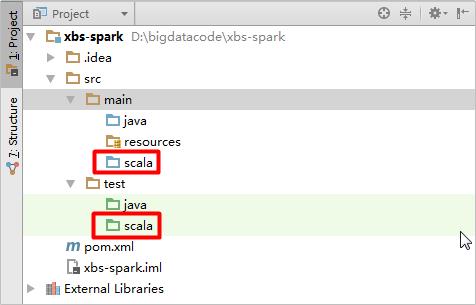

由于新创建的scala文件夹前面的图标颜色和java文件夹不一样,我们需要再次点击右侧Maven Project侧边栏中的刷新按钮,其颜色就会发生变化:

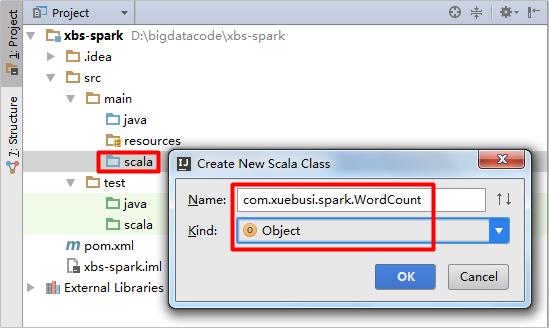

在scala目录下面创建WordCount(类型为Object):

3. 编写WordCount程序

下面是使用scala语言编写的Spark的一个简单的单词计数程序:

package com.xuebusi.spark import org.apache.spark.rdd.RDD import org.apache.spark.{SparkConf, SparkContext} /** * Created by SYJ on 2017/1/23. */ object WordCount { def main(args: Array[String]) { //创建SparkConf val conf: SparkConf = new SparkConf() //创建SparkContext val sc: SparkContext = new SparkContext(conf) //从文件读取数据 val lines: RDD[String] = sc.textFile(args(0)) //按空格切分单词 val words: RDD[String] = lines.flatMap(_.split(" ")) //单词计数,每个单词每出现一次就计数为1 val wordAndOne: RDD[(String, Int)] = words.map((_, 1)) //聚合,统计每个单词总共出现的次数 val result: RDD[(String, Int)] = wordAndOne.reduceByKey(_+_) //排序,根据单词出现的次数排序 val fianlResult: RDD[(String, Int)] = result.sortBy(_._2, false) //将统计结果保存到文件 fianlResult.saveAsTextFile(args(1)) //释放资源 sc.stop() } }

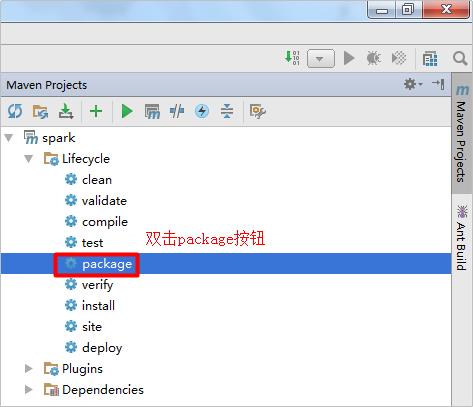

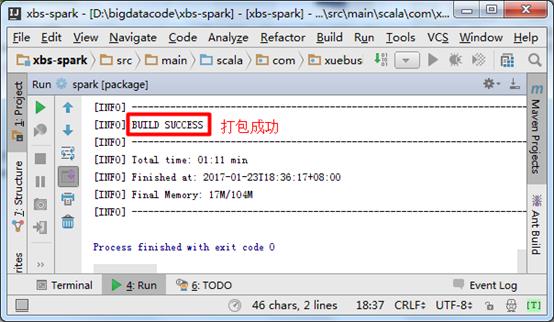

4. 打包

将编写好的WordCount程序使用Maven插件打成jar包,打包的时候也要保证电脑能够联网,因为Maven可能会到中央仓库中下载一些依赖:

在jar包名称上面点击鼠标右键选择“Copy Path”,得到jar包在Windows磁盘上的绝对路径:D:\\bigdatacode\\xbs-spark\\target\\spark-1.0-SNAPSHOT.jar,在下面上传jar包时会用到此路径。

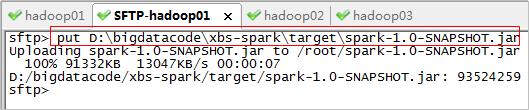

5. 上传jar包

使用SecureCRT工具连接Spark集群服务器,将spark-1.0-SNAPSHOT.jar上传到服务器:

6. 同步时间

date -s "2017-01-23 19:19:30"

7. 启动Zookeeper

/root/apps/zookeeper/bin/zkServer.sh start

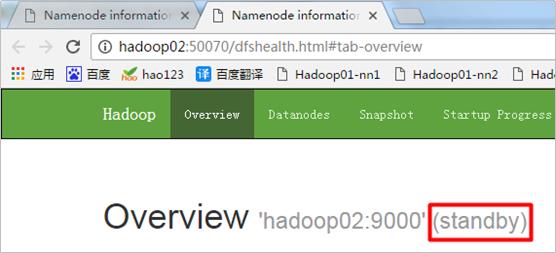

8. 启动hdfs

/root/apps/hadoop/sbin/start-dfs.sh

HDFS的活跃的NameNode节点:

HDFS的备选NameNode节点:

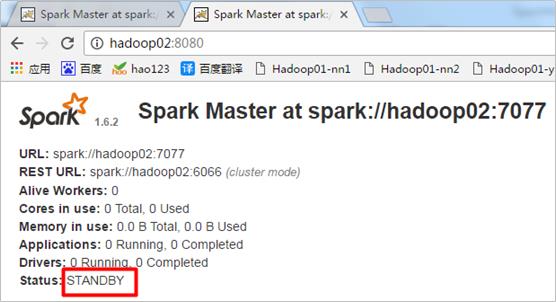

9. 启动Spark集群

/root/apps/spark/sbin/start-all.sh

启动单个Master进程使用如下命令:

/root/apps/spark/sbin/start-master.sh

Spark活跃的Master节点:

Spark的备选Master节点:

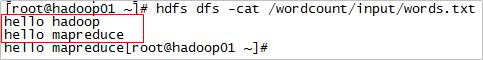

10. 准备输入数据

11. 提交Spark程序

提交Spark的WordCount程序需要两个参数,一个输入目录,一个输出目录,首先确定输出目录不存在,如果存在则删除:

hdfs dfs -rm -r /wordcount/output

使用spark-submit脚本提交spark程序:

/root/apps/spark/bin/spark-submit \\ --master spark://hadoop01:7077,hadoop02:7077 \\ --executor-memory 512m \\ --total-executor-cores 7 \\ --class com.xuebusi.spark.WordCount /root/spark-1.0-SNAPSHOT.jar hdfs://hadoop01:9000/wordcount/input hdfs://hadoop01:9000/wordcount/output

通过Spark的UI界面来观察程序执行过程:

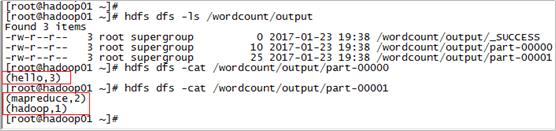

12. 查看结果

附1:程序打包日志

1 D:\\java\\jdk1.7.0_72\\bin\\java -Dmaven.home=D:\\apache-maven-3.2.1 -Dclassworlds.conf=D:\\apache-maven-3.2.1\\bin\\m2.conf -Didea.launcher.port=7533 "-Didea.launcher.bin.path=D:\\java\\IntelliJ_IDEA\\IntelliJ IDEA Community Edition 2016.1.1\\bin" -Dfile.encoding=UTF-8 -classpath "D:\\apache-maven-3.2.1\\boot\\plexus-classworlds-2.5.1.jar;D:\\java\\IntelliJ_IDEA\\IntelliJ IDEA Community Edition 2016.1.1\\lib\\idea_rt.jar" com.intellij.rt.execution.application.AppMain org.codehaus.classworlds.Launcher -Didea.version=2016.1.1 package 2 [INFO] Scanning for projects... 3 [INFO] 4 [INFO] Using the builder org.apache.maven.lifecycle.internal.builder.singlethreaded.SingleThreadedBuilder with a thread count of 1 5 [INFO] 6 [INFO] ------------------------------------------------------------------------ 7 [INFO] Building spark 1.0-SNAPSHOT 8 [INFO] ------------------------------------------------------------------------ 9 [INFO] 10 [INFO] --- maven-resources-plugin:2.6:resources (default-resources) @ spark --- 11 [INFO] Using \'UTF-8\' encoding to copy filtered resources. 12 [INFO] Copying 0 resource 13 [INFO] 14 [INFO] --- maven-compiler-plugin:2.5.1:compile (default-compile) @ spark --- 15 [INFO] Nothing to compile - all classes are up to date 16 [INFO] 17 [INFO] --- scala-maven-plugin:3.2.2:compile (default) @ spark --- 18 [WARNING] Expected all dependencies to require Scala version: 2.10.6 19 [WARNING] com.xuebusi:spark:1.0-SNAPSHOT requires scala version: 2.10.6 20 [WARNING] com.twitter:chill_2.10:0.5.0 requires scala version: 2.10.4 21 [WARNING] Multiple versions of scala libraries detected! 22 [INFO] Nothing to compile - all classes are up to date 23 [INFO] 24 [INFO] --- maven-resources-plugin:2.6:testResources (default-testResources) @ spark --- 25 [INFO] Using \'UTF-8\' encoding to copy filtered resources. 26 [INFO] skip non existing resourceDirectory D:\\bigdatacode\\spark-wordcount\\src\\test\\resources 27 [INFO] 28 [INFO] --- maven-compiler-plugin:2.5.1:testCompile (default-testCompile) @ spark --- 29 [INFO] Nothing to compile - all classes are up to date 30 [INFO] 31 [INFO] --- scala-maven-plugin:3.2.2:testCompile (default) @ spark --- 32 [WARNING] Expected all dependencies to require Scala version: 2.10.6 33 [WARNING] com.xuebusi:spark:1.0-SNAPSHOT requires scala version: 2.10.6 34 [WARNING] com.twitter:chill_2.10:0.5.0 requires scala version: 2.10.4 35 [WARNING] Multiple versions of scala libraries detected! 36 [INFO] No sources to compile 37 [INFO] 38 [INFO] --- maven-surefire-plugin:2.12.4:test (default-test) @ spark --- 39 Downloading: http://repo.maven.apache.org/maven2/org/apache/maven/surefire/surefire-booter/2.12.4/surefire-booter-2.12.4.pom 40 Downloaded: http://repo.maven.apache.org/maven2/org/apache/maven/surefire/surefire-booter/2.12.4/surefire-booter-2.12.4.pom (3 KB at 1.7 KB/sec) 41 Downloading: http://repo.maven.apache.org/maven2/org/apache/maven/surefire/surefire-api/2.12.4/surefire-api-2.12.4.pom 42 Downloaded: http://repo.maven.apache.org/maven2/org/apache/maven/surefire/surefire-api/2.12.4/surefire-api-2.12.4.pom (3 KB at 2.4 KB/sec) 43 Downloading: http://repo.maven.apache.org/maven2/org/apache/maven/surefire/maven-surefire-common/2.12.4/maven-surefire-common-2.12.4.pom 44 Downloaded: http://repo.maven.apache.org/maven2/org/apache/maven/surefire/maven-surefire-common/2.12.4/maven-surefire-common-2.12.4.pom (6 KB at 3.2 KB/sec) 45 Downloading: http://repo.maven.apache.org/maven2/org/apache/maven/plugin-tools/maven-plugin-annotations/3.1/maven-plugin-annotations-3.1.pom 46 Downloaded: http://repo.maven.apache.org/maven2/org/apache/maven/plugin-tools/maven-plugin-annotations/3.1/maven-plugin-annotations-3.1.pom (2 KB at 1.7 KB/sec) 47 Downloading: http://repo.maven.apache.org/maven2/org/apache/maven/plugin-tools/maven-plugin-tools/3.1/maven-plugin-tools-3.1.pom 48 Downloaded: http://repo.maven.apache.org/maven2/org/apache/maven/plugin-tools/maven-plugin-tools/3.1/maven-plugin-tools-3.1.pom (16 KB at 12.0 KB/sec) 49 Downloading: http://repo.maven.apache.org/maven2/org/apache/maven/surefire/maven-surefire-common/2.12.4/maven-surefire-common-2.12.4.jar 50 Downloading: http://repo.maven.apache.org/maven2/org/apache/maven/surefire/surefire-api/2.12.4/surefire-api-2.12.4.jar 51 Downloading: http://repo.maven.apache.org/maven2/org/apache/maven/surefire/surefire-booter/2.12.4/surefire-booter-2.12.4.jar 52 Downloading: http://repo.maven.apache.org/maven2/org/apache/maven/plugin-tools/maven-plugin-annotations/3.1/maven-plugin-annotations-3.1.jar 53 Downloaded: http://repo.maven.apache.org/maven2/org/apache/maven/plugin-tools/maven-plugin-annotations/3.1/maven-plugin-annotations-3.1.jar (14 KB at 10.6 KB/sec) 54 Downloaded: http://repo.maven.apache.org/maven2/org/apache/maven/surefire/surefire-booter/2.12.4/surefire-booter-2.12.4.jar (34 KB at 21.5 KB/sec) 55 Downloaded: http://repo.maven.apache.org/maven2/org/apache/maven/surefire/maven-surefire-common/2.12.4/maven-surefire-common-2.12.4.jar (257 KB at 161.0 KB/sec) 56 Downloaded: http://repo.maven.apache.org/maven2/org/apache/maven/surefire/surefire-api/2.12.4/surefire-api-2.12.4.jar (115 KB at 55.1 KB/sec) 57 [INFO] No tests to run. 58 [INFO] 59 [INFO] --- maven-jar-plugin:2.4:jar (default-jar) @ spark --- 60 [INFO] Building jar: D:\\bigdatacode\\spark-wordcount\\target\\spark-1.0-SNAPSHOT.jar 61 [INFO] 62 [INFO] --- maven-shade-plugin:2.4.3:shade (default) @ spark --- 63 [INFO] Including org.scala-lang:scala-library:jar:2.10.6 in the shaded jar. 64 [INFO] Including org.apache.spark:spark-core_2.10:jar:1.6.2 in the shaded jar. 65 [INFO] Including org.apache.avro:avro-mapred:jar:hadoop2:1.7.7 in the shaded jar. 66 [INFO] Including org.apache.avro:avro-ipc:jar:1.7.7 in the shaded jar. 67 [INFO] Including org.apache.avro:avro-ipc:jar:tests:1.7.7 in the shaded jar. 68 [INFO] Including org.codehaus.jackson:jackson-core-asl:jar:1.9.13 in the shaded jar. 69 [INFO] Including org.codehaus.jackson:jackson-mapper-asl:jar:1.9.13 in the shaded jar. 70 [INFO] Including com.twitter:chill_2.10:jar:0.5.0 in the shaded jar. 71 [INFO] Including com.esotericsoftware.kryo:kryo:jar:2.21 in the shaded jar. 72 [INFO] Including com.esotericsoftware.reflectasm:reflectasm:jar:shaded:1.07 in the shaded jar. 73 [INFO] Including com.esotericsoftware.minlog:minlog:jar:1.2 in the shaded jar. 74 [INFO] Including org.objenesis:objenesis:jar:1.2 in the shaded jar. 75 [INFO] Including com.twitter:chill-java:jar:0.5.0 in the shaded jar. 76 [INFO] Including org.apache.xbean:xbean-asm5-shaded:jar:4.4 in the shaded jar. 77 [INFO] Including org.apache.spark:spark-launcher_2.10:jar:1.6.2 in the shaded jar. 78 [INFO] Including org.apache.spark:spark-network-common_2.10:jar:1.6.2 in the shaded jar. 79 [INFO] Including org.apache.spark:spark-network-shuffle_2.10:jar:1.6.2 in the shaded jar. 80 [INFO] Including org.fusesource.leveldbjni:leveldbjni-all:jar:1.8 in the shaded jar. 81 [INFO] Including com.fasterxml.jackson.core:jackson-annotations:jar:2.4.4 in the shaded jar. 82 [INFO] Including org.apache.spark:spark-unsafe_2.10:jar:1.6.2 in the shaded jar. 83 [INFO] Including net.java.dev.jets3t:jets3t:jar:0.7.1 in the shaded jar. 84 [INFO] Including commons-codec:commons-codec:jar:1.3 in the shaded jar. 85 [INFO] Including commons-httpclient:commons-httpclient:jar:3.1 in the shaded jar. 86 [INFO] Including org.apache.curator:curator-recipes:jar:2.4.0 in the shaded jar. 87 [INFO] Including org.apache.curator:curator-framework:jar:2.4.0 in the shaded jar. 88 [INFO] Including org.apache.zookeeper:zookeeper:jar:3.4.5 in the shaded jar. 89 [INFO] Including jline:jline:jar:0.9.94 in the shaded jar. 90 [INFO] Including com.google.guava:guava:jar:14.0.1 in the shaded jar. 91 [INFO] Including org.eclipse.jetty.orbit:javax.servlet:jar:3.0.0.v201112011016 in the shaded jar. 92 [INFO] Including org.apache.commons:commons-lang3:jar:3.3.2 in the shaded jar. 93 [INFO] Including org.apache.commons:commons-math3:jar:3.4.1 in the shaded jar. 94 [INFO] Including com.google.code.findbugs:jsr305:jar:1.3.9 in the shaded jar. 95 [INFO] Including org.slf4j:slf4j-api:jar:1.7.10 in the shaded jar. 96 [INFO] Including org.slf4j:jul-to-slf4j:jar:1.7.10 in the shaded jar. 97 [INFO] Including org.slf4j:jcl-over-slf4j:jar:1.7.10 in the shaded jar. 98 [INFO] Including log4j:log4j:jar:1.2.17 in the shaded jar. 99 [INFO] Including org.slf4j:slf4j-log4j12:jar:1.7.10 in the shaded jar. 100 [INFO] Including com.ning:compress-lzf:jar:1.0.3 in the shaded jar. 101 [INFO] Including org.xerial.snappy:snappy-java:jar:1.1.2.1 in the shaded jar. 102 [INFO] Including net.jpountz.lz4:lz4:jar:1.3.0 in the shaded jar. 103 [INFO] Including org.roaringbitmap:RoaringBitmap:jar:0.5.11 in the shaded jar. 104 [INFO] Including commons-net:commons-net:jar:2.2 in the shaded jar. 105 [INFO] Including com.typesafe.akka:akka-remote_2.10:jar:2.3.11 in the shaded jar. 106 [INFO] Including com.typesafe.akka:akka-actor_2.10:jar:2.3.11 in the shaded jar. 107 [INFO] Including com.typesafe:config:jar:1.2.1 in the shaded jar. 108 [INFO] Including io.netty:netty:jar:3.8.0.Final in the shaded jar. 109 [INFO] Including com.google.protobuf:protobuf-java:jar:2.5.0 in the shaded jar. 110 [INFO] Including org.uncommons.maths:uncommons-maths:jar:1.2.2a in the shaded jar. 111 [INFO] Including com.typesafe.akka:akka-slf4j_2.10:jar:2.3.11 in the shaded jar. 112 [INFO] Including org.json4s:json4s-jackson_2.10:jar:3.2.10 in the shaded jar. 113 [INFO] Including org.json4s:json4s-core_2.10:jar:3.2.10 in the shaded jar. 114 [INFO] Including org.json4s:json4s-ast_2.10:jar:3.2.10 in the shaded jar. 115 [INFO] Including org.scala-lang:scalap:jar:2.10.0 in the shaded jar. 116 [INFO] Including org.scala-lang:scala-compiler:jar:2.10.0 in the shaded jar. 117 [INFO] Including com.sun.jersey:jersey-server:jar:1.9 in the shaded jar. 118 [INFO] Including asm:asm:jar:3.1 in the shaded jar. 119 [INFO] Including com.sun.jersey:jersey-core:jar:1.9 in the shaded jar. 120 [INFO] Including org.apache.mesos:mesos:jar:shaded-protobuf:0.21.1 in the shaded jar. 121 [INFO] Including io.netty:netty-all:jar:4.0.29.Final in the shaded jar. 122 [INFO] Including com.clearspring.analytics:stream:jar:2.7.0 in the shaded jar. 123 [INFO] Including io.dropwizard.metrics:metrics-core:jar:3.1.2 in the shaded jar. 124 [INFO] Including io.dropwizard.metrics:metrics-jvm:jar:3.1.