[OpenCV] Samples 13: opencv_version

Posted 机器学习水很深

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了[OpenCV] Samples 13: opencv_version相关的知识,希望对你有一定的参考价值。

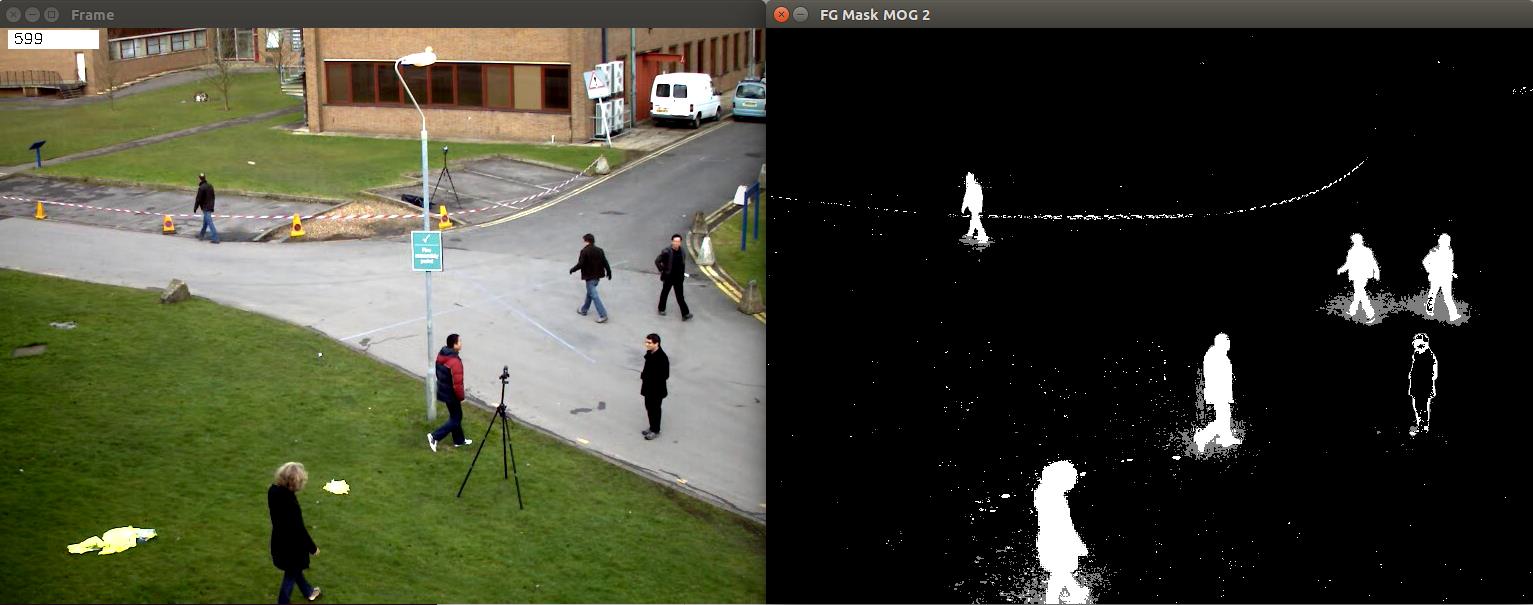

不错的草稿。但进一步处理是必然的,也是难点所在。

Extended:

固定摄像头,采用Gaussian mixture models对背景建模。

OpenCV 中实现了两个版本的高斯混合背景/前景分割方法(Gaussian Mixture-based Background/Foreground Segmentation Algorithm),调用接口很明朗,效果也很好。

参见:[Scikit-learn] 2.1 Gaussian mixture models & EM

[1] 有趣的应用 之 背景替换:http://www.360doc.com/content/16/0709/07/7863900_574170269.shtml

[2] 手势识别(code, demo):FingerCounter_withMOG2_BGsubtractor

理论:http://blog.csdn.net/jinshengtao/article/details/26278725

混合高斯背景建模是基于像素样本统计信息的背景表示方法,

利用像素在较长时间内大量样本值的概率密度等统计信息(如模式数量、每个模式的均值和标准差)表示背景,

然后使用统计差分(如3σ原则)进行目标像素判断,可以对复杂动态背景进行建模,计算量较大。

在混合高斯背景模型中,认为像素之间的颜色信息互不相关,对各像素点的处理都是相互独立的。

对于视频图像中的每一个像素点,其值在序列图像中的变化可看作是不断产生像素值的随机过程,即用高斯分布来描述每个像素点的颜色呈现规律【单模态(单峰),多模态(多峰)】。

对于多峰高斯分布模型,图像的每一个像素点按不同权值的多个高斯分布的叠加来建模,每种高斯分布对应一个可能产生像素点所呈现颜色的状态,各个高斯分布的权值和分布参数随时间更新。

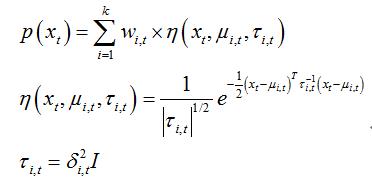

当处理彩色图像时,假定图像像素点R、G、B三色通道相互独立并具有相同的方差。对于随机变量X的观测数据集{x1,x2,…,xN},xt=(rt,gt,bt)为t时刻像素的样本,则单个采样点xt其服从的混合高斯分布概率密度函数:

其中k为分布模式总数,η(xt,μi,t, τi,t)为t时刻第i个高斯分布,μi,t为其均值,τi,t为其协方差矩阵,δi,t为方差,I为三维单位矩阵,ωi,t为t时刻第i个高斯分布的权重。

详细算法流程:http://www.cnblogs.com/yingying0907/archive/2012/07/22/2603452.html

混合高斯模型使用K(基本为3到5个)个高斯模型来表征图像中各个像素点的特征,在新一帧图像获得后更新混合高斯模型, 用当前图像中的每个像素点与混合高斯模型匹配:如果成功则判定该点为背景点, 否则为前景点。

通观整个高斯模型,主要是有方差和均值两个参数决定,对均值和方差的学习,采取不同的学习机制,将直接影响到模型的稳定性、精确性和收敛性 。

由于我们是对运动目标的背景提取建模,因此需要对高斯模型中方差和均值两个参数实时更新。

为提高模型的学习能力,改进方法对均值和方差的更新采用不同的学习率;

为提高在繁忙的场景下,大而慢的运动目标的检测效果,引入权值均值的概念,建立背景图像并实时更新,然后结合权值、权值均值和背景图像对像素点进行前景和背景的分类。

到这里为止,混合高斯模型的建模基本完成,我在归纳一下其中的流程:

-

- 首先初始化预先定义的几个高斯模型,对高斯模型中的参数进行初始化,并求出之后将要用到的参数。

- 其次,对于每一帧中的每一个像素进行处理,看其是否匹配某个模型,

- 若匹配,则将其归入该模型中,并对该模型根据新的像素值进行更新,

- 若不匹配,则以该像素建立一个高斯模型,初始化参数,代理原有模型中最不可能的模型。

- 最后选择前面几个最有可能的模型作为背景模型,为背景目标提取做铺垫。

From: http://docs.opencv.org/master/d1/dc5/tutorial_background_subtraction.html#gsc.tab=0

//opencv #include "opencv2/imgcodecs.hpp" #include "opencv2/imgproc.hpp" #include "opencv2/videoio.hpp" #include <opencv2/highgui.hpp> #include <opencv2/video.hpp> //C #include <stdio.h> //C++ #include <iostream> #include <sstream> using namespace cv; using namespace std; // Global variables Mat frame; //current frame Mat fgMaskMOG2; //fg mask fg mask generated by MOG2 method Ptr<BackgroundSubtractor> pMOG2; //MOG2 Background subtractor int keyboard; //input from keyboard void help(); void processVideo(char* videoFilename); void processImages(char* firstFrameFilename); void help() { cout << "--------------------------------------------------------------------------" << endl << "This program shows how to use background subtraction methods provided by " << endl << " OpenCV. You can process both videos (-vid) and images (-img)." << endl << endl << "Usage:" << endl << "./bg_sub {-vid <video filename>|-img <image filename>}" << endl << "for example: ./bg_sub -vid video.avi" << endl << "or: ./bg_sub -img /data/images/1.png" << endl << "--------------------------------------------------------------------------" << endl << endl; } int main(int argc, char* argv[]) { //print help information help(); //check for the input parameter correctness if(argc != 3) { cerr <<"Incorret input list" << endl; cerr <<"exiting..." << endl; return EXIT_FAILURE; } //create GUI windows namedWindow("Frame"); namedWindow("FG Mask MOG 2"); //create Background Subtractor objects pMOG2 = createBackgroundSubtractorMOG2(); //MOG2 approach if(strcmp(argv[1], "-vid") == 0) { //input data coming from a video processVideo(argv[2]); } else if(strcmp(argv[1], "-img") == 0) { //input data coming from a sequence of images processImages(argv[2]); } else { //error in reading input parameters cerr <<"Please, check the input parameters." << endl; cerr <<"Exiting..." << endl; return EXIT_FAILURE; } //destroy GUI windows destroyAllWindows(); return EXIT_SUCCESS; } void processVideo(char* videoFilename) { //create the capture object VideoCapture capture(videoFilename); if(!capture.isOpened()){ //error in opening the video input cerr << "Unable to open video file: " << videoFilename << endl; exit(EXIT_FAILURE); } //read input data. ESC or \'q\' for quitting while( (char)keyboard != \'q\' && (char)keyboard != 27 ){ //read the current frame if(!capture.read(frame)) { cerr << "Unable to read next frame." << endl; cerr << "Exiting..." << endl; exit(EXIT_FAILURE); } //update the background model pMOG2->apply(frame, fgMaskMOG2); //get the frame number and write it on the current frame stringstream ss; rectangle(frame, cv::Point(10, 2), cv::Point(100,20), cv::Scalar(255,255,255), -1); ss << capture.get(CAP_PROP_POS_FRAMES); string frameNumberString = ss.str(); putText(frame, frameNumberString.c_str(), cv::Point(15, 15), FONT_HERSHEY_SIMPLEX, 0.5 , cv::Scalar(0,0,0)); //show the current frame and the fg masks imshow("Frame", frame); imshow("FG Mask MOG 2", fgMaskMOG2); //get the input from the keyboard keyboard = waitKey( 30 ); } //delete capture object capture.release(); } void processImages(char* fistFrameFilename) { //read the first file of the sequence frame = imread(fistFrameFilename); if(frame.empty()){ //error in opening the first image cerr << "Unable to open first image frame: " << fistFrameFilename << endl; exit(EXIT_FAILURE); } //current image filename string fn(fistFrameFilename); //read input data. ESC or \'q\' for quitting while( (char)keyboard != \'q\' && (char)keyboard != 27 ){ //update the background model pMOG2->apply(frame, fgMaskMOG2); //get the frame number and write it on the current frame size_t index = fn.find_last_of("/"); if(index == string::npos) { index = fn.find_last_of("\\\\"); } size_t index2 = fn.find_last_of("."); string prefix = fn.substr(0,index+1); string suffix = fn.substr(index2); string frameNumberString = fn.substr(index+1, index2-index-1); istringstream iss(frameNumberString); int frameNumber = 0; iss >> frameNumber; rectangle(frame, cv::Point(10, 2), cv::Point(100,20), cv::Scalar(255,255,255), -1); putText(frame, frameNumberString.c_str(), cv::Point(15, 15), FONT_HERSHEY_SIMPLEX, 0.5 , cv::Scalar(0,0,0)); //show the current frame and the fg masks imshow("Frame", frame); imshow("FG Mask MOG 2", fgMaskMOG2); //get the input from the keyboard keyboard = waitKey( 30 ); //search for the next image in the sequence ostringstream oss; oss << (frameNumber + 1); string nextFrameNumberString = oss.str(); string nextFrameFilename = prefix + nextFrameNumberString + suffix; //read the next frame frame = imread(nextFrameFilename); if(frame.empty()){ //error in opening the next image in the sequence cerr << "Unable to open image frame: " << nextFrameFilename << endl; exit(EXIT_FAILURE); } //update the path of the current frame fn.assign(nextFrameFilename); } }

以上是关于[OpenCV] Samples 13: opencv_version的主要内容,如果未能解决你的问题,请参考以下文章