Elasticsearch 5.0 Cluster Deployment and Failure Testing


This article covers deploying a two-node cluster, testing node failure, and reproducing cluster split brain.

I. Test environment

Node 1: 192.168.115.11

Node 2: 192.168.115.12

Version: 5.0.1

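II. Installation and configuration

For the full installation procedure, see the single-node guide: http://hnr520.blog.51cto.com/4484939/1867033

1. Edit the configuration file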

    cat elasticsearch.yml
    cluster.name: hnrtest
    node.name: hnr01
    path.data: /data/elasticsearch5/data
    path.logs: /data/elasticsearch5/logs
    network.host: 192.168.115.11
    discovery.zen.ping.unicast.hosts: ["192.168.115.11", "192.168.115.12"]
    # Only two nodes are deployed, so this is set to 1; otherwise no new master could be elected when the master goes down
    discovery.zen.minimum_master_nodes: 1
    http.cors.enabled: true
    http.cors.allow-origin: "*"
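The file shown is for hnr01. Judging from the log output later in the article, hnr02 uses the same settings with its own node.name and network.host. As a rough sketch of that, the hypothetical helper below renders the file for both nodes; the assumption that every other setting (including the data and log paths) is identical on hnr02 is not stated in the original.

```python
# Hypothetical helper: renders elasticsearch.yml for each node of the test cluster.
# Assumption: apart from node.name and network.host, both nodes share all settings.
NODES = {"hnr01": "192.168.115.11", "hnr02": "192.168.115.12"}

TEMPLATE = """\
cluster.name: hnrtest
node.name: {name}
path.data: /data/elasticsearch5/data
path.logs: /data/elasticsearch5/logs
network.host: {host}
discovery.zen.ping.unicast.hosts: [{hosts}]
discovery.zen.minimum_master_nodes: 1
http.cors.enabled: true
http.cors.allow-origin: "*"
"""


def render_config(name):
    """Return the elasticsearch.yml text for the node called `name`."""
    hosts = ", ".join('"{}"'.format(ip) for ip in NODES.values())
    return TEMPLATE.format(name=name, host=NODES[name], hosts=hosts)


if __name__ == "__main__":
    for node_name in NODES:
        print("# ---- {} ----".format(node_name))
        print(render_config(node_name))
```

Keeping the node list in one place means the unicast host list and the per-node values cannot drift apart.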

2. Start the service

Start Elasticsearch on each of the two nodes

su - elasticsearch -c "/usr/local/elasticsearch/bin/elasticsearch &"
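Because the process is started in the background, it can help to wait until both nodes have actually joined before moving on. The sketch below polls the cluster health endpoint (GET /_cluster/health) with the standard library only. The function names, the HTTP port 9200 (taken from the log output later in the article) and the retry values are illustrative assumptions.

```python
import json
import time
import urllib.request


def fetch_health(host, port=9200, timeout=5):
    """GET /_cluster/health from one node and return the parsed JSON."""
    url = "http://{}:{}/_cluster/health".format(host, port)
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def cluster_ready(health, expected_nodes=2):
    """True when the expected number of nodes joined and the status is green."""
    return (health.get("number_of_nodes") == expected_nodes
            and health.get("status") == "green")


def wait_until_ready(host, expected_nodes=2, attempts=30, delay=2.0):
    """Poll one node until the cluster is ready; return the health dict or None."""
    for _ in range(attempts):
        try:
            health = fetch_health(host)
            if cluster_ready(health, expected_nodes):
                return health
        except OSError:
            pass  # the node is not listening yet (URLError is an OSError)
        time.sleep(delay)
    return None
```

For example, wait_until_ready("192.168.115.11") would be called once both services have been started.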

III. Verification

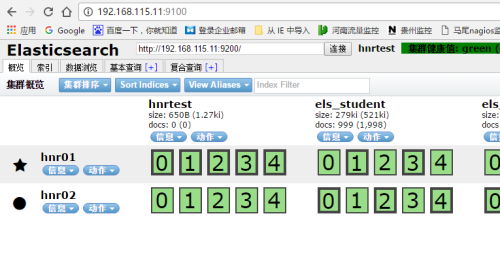

1. Connect with the head plugin and inspect the cluster

The log warns that the configured discovery.zen.minimum_master_nodes value is too low

[2016-11-22T14:30:38,276][INFO ][o.e.c.s.ClusterService ] [hnr01] added {{hnr02}{L-jKkvuDQOWw8G6aCF8lPQ}{kCIS2DSDQvS-msW_k8cXIw}{192.168.115.12}{192.168.115.12:9300},}, reason: zen-disco-node-join[{hnr02}{L-jKkvuDQOWw8G6aCF8lPQ}{kCIS2DSDQvS-msW_k8cXIw}{192.168.115.12}{192.168.115.12:9300}]

[2016-11-22T14:30:38,824][WARN ][o.e.d.z.ElectMasterService] [hnr01] value for setting "discovery.zen.minimum_master_nodes" is too low. This can result in data loss! Please set it to at least a quorum of master-eligible nodes (current value: [1], total number of master-eligible nodes used for publishing in this round: [2])

[2016-11-22T14:30:41,468][INFO ][o.e.c.r.a.AllocationService] [hnr01] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[hnrtest][4]] ...]).
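The warning asks for a quorum of master-eligible nodes, which is normally computed as (master_eligible_nodes / 2) + 1 using integer division. The short sketch below works through that arithmetic for this two-node setup; the function names are made up for illustration.

```python
def quorum(master_eligible_nodes):
    """Smallest majority of the master-eligible nodes."""
    return master_eligible_nodes // 2 + 1


def can_elect_master(visible_nodes, minimum_master_nodes):
    """A node may take part in an election only if it sees enough master-eligible nodes."""
    return visible_nodes >= minimum_master_nodes


if __name__ == "__main__":
    for n in (2, 3, 5):
        print("nodes:", n, "quorum:", quorum(n))
    # Two nodes, setting of 1: an isolated node still sees itself, so it can elect itself.
    print(can_elect_master(visible_nodes=1, minimum_master_nodes=1))
    # Two nodes, setting of quorum(2) == 2: a lone survivor cannot elect a master.
    print(can_elect_master(visible_nodes=1, minimum_master_nodes=quorum(2)))
```

With two nodes the quorum is 2, so the choice is between tolerating a master failure (value 1, at the risk of split brain) and preventing split brain (value 2, at the cost of having no master when either node is down). A third master-eligible node removes that trade-off, since the quorum is still 2.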

2. Bulk-insert data

Insert the data with a Python script; install the client module first

pip3 install elasticsearch
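The script in this step sends one index request per document. As a possible variant, not the author's method, the same documents could be sent through the client's helpers.bulk function. In this sketch the generator name student_actions is hypothetical, and the client import is placed inside main() so the generator can be tried without a cluster.

```python
from datetime import datetime


def student_actions(start=1, stop=1000, index="els_student", doc_type="test-type"):
    """Yield one bulk action per document, with the same fields as the per-document script."""
    for i in range(start, stop):
        yield {
            "_index": index,
            "_type": doc_type,
            "_id": i,
            "_source": {
                "name": "student" + str(i),
                "age": i % 100,
                "timestamp": datetime.now(),
            },
        }


def main():
    # Imported here so student_actions() works even without the client installed.
    from elasticsearch import Elasticsearch, helpers

    es = Elasticsearch(hosts="192.168.115.11")
    # helpers.bulk returns the number of successful actions and a list of errors.
    success, errors = helpers.bulk(es, student_actions())
    print("indexed:", success, "errors:", errors)
```

Calling main() on a machine that can reach 192.168.115.11 would index ids 1 through 999 in a handful of requests instead of 999 separate ones.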

    #!/usr/bin/env python3
    # -*- coding: utf-8 -*-

    from datetime import datetime

    from elasticsearch import Elasticsearch

    # Create the connection
    es = Elasticsearch(hosts='192.168.115.11')

    # Index documents with ids 1 through 999, one request per document
    for i in range(1, 1000):
        es.index(index='els_student', doc_type='test-type', id=i,
                 body={"name": "student" + str(i), "age": i % 100, "timestamp": datetime.now()})

IV. Failure testing

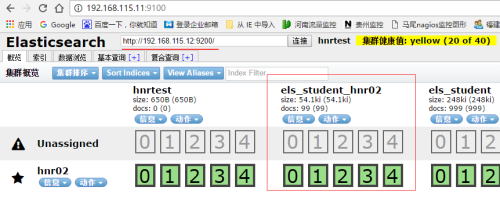

1. Shut down node hnr01; hnr02 is then promoted to master

Master re-election log

[2016-11-22T14:29:13,491][INFO ][o.e.c.s.ClusterService ] [hnr02] detected_master {hnr01}{dr7haiVXSc2Pz8V9lHUr1Q}{73M2VfECTi2wfJO6WYR5Dw}{192.168.115.11}{192.168.115.11:9300}, added {{hnr01}{dr7haiVXSc2Pz8V9lHUr1Q}{73M2VfECTi2wfJO6WYR5Dw}{192.168.115.11}{192.168.115.11:9300},}, reason: zen-disco-receive(from master [master {hnr01}{dr7haiVXSc2Pz8V9lHUr1Q}{73M2VfECTi2wfJO6WYR5Dw}{192.168.115.11}{192.168.115.11:9300} committed version [7]])

[2016-11-22T14:29:14,034][INFO ][o.e.h.HttpServer ] [hnr02] publish_address {192.168.115.12:9200}, bound_addresses {192.168.115.12:9200}

[2016-11-22T14:29:14,034][INFO ][o.e.n.Node ] [hnr02] started

[2016-11-22T15:43:41,575][INFO ][o.e.d.z.ZenDiscovery ] [hnr02] master_left [{hnr01}{dr7haiVXSc2Pz8V9lHUr1Q}{73M2VfECTi2wfJO6WYR5Dw}{192.168.115.11}{192.168.115.11:9300}], reason [shut_down]

[2016-11-22T15:43:41,584][WARN ][o.e.d.z.ZenDiscovery ] [hnr02] master left (reason = shut_down), current nodes: {{hnr02}{L-jKkvuDQOWw8G6aCF8lPQ}{kCIS2DSDQvS-msW_k8cXIw}{192.168.115.12}{192.168.115.12:9300},}

[2016-11-22T15:43:41,587][INFO ][o.e.c.s.ClusterService ] [hnr02] removed {{hnr01}{dr7haiVXSc2Pz8V9lHUr1Q}{73M2VfECTi2wfJO6WYR5Dw}{192.168.115.11}{192.168.115.11:9300},}, reason: master_failed ({hnr01}{dr7haiVXSc2Pz8V9lHUr1Q}{73M2VfECTi2wfJO6WYR5Dw}{192.168.115.11}{192.168.115.11:9300})

[2016-11-22T15:43:44,654][INFO ][o.e.c.r.a.AllocationService] [hnr02] Cluster health status changed from [GREEN] to [YELLOW] (reason: [removed dead nodes on election]).

[2016-11-22T15:43:44,704][INFO ][o.e.c.s.ClusterService ] [hnr02] new_master {hnr02}{L-jKkvuDQOWw8G6aCF8lPQ}{kCIS2DSDQvS-msW_k8cXIw}{192.168.115.12}{192.168.115.12:9300}, reason: zen-disco-elected-as-master ([0] nodes joined)

[2016-11-22T15:43:44,712][INFO ][o.e.c.r.DelayedAllocationService] [hnr02] scheduling reroute for delayed shards in [59.9s] (15 delayed shards)
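The timestamps in this log show how long the cluster was without a master. The sketch below parses the master_left and new_master entries and prints the gap between them. The two sample lines are abbreviated copies of the entries above (node details replaced by "..."), and the function names are illustrative.

```python
import re
from datetime import datetime

# Matches the leading "[2016-11-22T15:43:41,575]" timestamp of a log line.
LOG_TS = re.compile(r"^\[(\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2},\d{3})\]")


def log_time(line):
    """Parse the timestamp at the start of an Elasticsearch log line."""
    match = LOG_TS.match(line)
    if match is None:
        raise ValueError("no timestamp at start of line")
    return datetime.strptime(match.group(1), "%Y-%m-%dT%H:%M:%S,%f")


def election_seconds(lines):
    """Seconds between the first master_left entry and the first new_master entry."""
    left = next(log_time(l) for l in lines if "master_left" in l)
    elected = next(log_time(l) for l in lines if "new_master" in l)
    return (elected - left).total_seconds()


# Abbreviated copies of the two relevant log entries.
SAMPLE = [
    "[2016-11-22T15:43:41,575][INFO ][o.e.d.z.ZenDiscovery ] [hnr02] master_left [...], reason [shut_down]",
    "[2016-11-22T15:43:44,704][INFO ][o.e.c.s.ClusterService ] [hnr02] new_master {hnr02}..., reason: zen-disco-elected-as-master ([0] nodes joined)",
]

print(election_seconds(SAMPLE))  # 3.129
```

In this run hnr02 took a little over three seconds to elect itself after the old master shut down cleanly.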

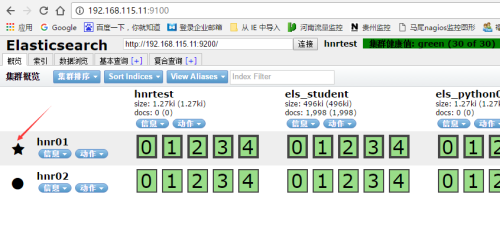

2. Restart node hnr01

Log output on the master

[2016-11-22T15:49:40,458][INFO ][o.e.c.s.ClusterService ] [hnr02] added {{hnr01}{dr7haiVXSc2Pz8V9lHUr1Q}{6RHGfHU7RdC5hWVlgQg7WQ}{192.168.115.11}{192.168.115.11:9300},}, reason: zen-disco-node-join[{hnr01}{dr7haiVXSc2Pz8V9lHUr1Q}{6RHGfHU7RdC5hWVlgQg7WQ}{192.168.115.11}{192.168.115.11:9300}]

[2016-11-22T15:49:41,039][WARN ][o.e.d.z.ElectMasterService] [hnr02] value for setting "discovery.zen.minimum_master_nodes" is too low. This can result in data loss! Please set it to at least a quorum of master-eligible nodes (current value: [1], total number of master-eligible nodes used for publishing in this round: [2])

[2016-11-22T15:49:50,276][INFO ][o.e.c.r.a.AllocationService] [hnr02] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[hnrtest][4]] ...]).

V. Split-brain verification

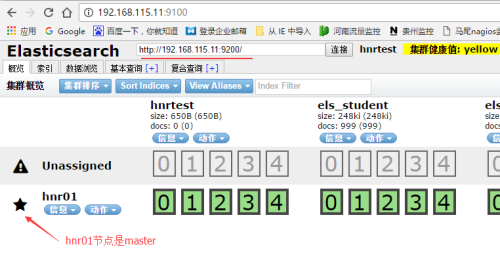

1. The cluster nodes cannot communicate normally

Add a firewall rule on node hnr02

iptables -A OUTPUT -s 192.168.115.12 -p tcp --dport 9300 -j DROP

Add a firewall rule on node hnr01

iptables -A OUTPUT -s 192.168.115.11 -p tcp --dport 9300 -j DROP

After 180 seconds:

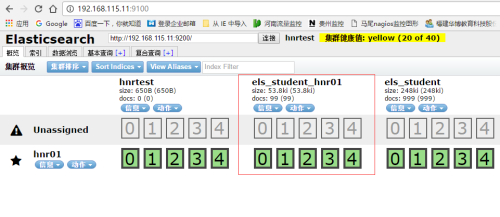

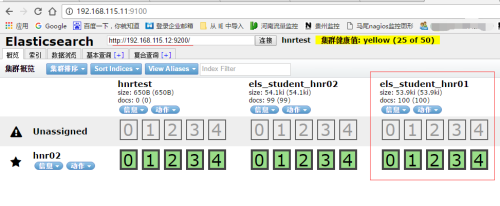
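Besides the head plugin, the split can be checked by asking each node which master it currently follows. The sketch below queries GET /_cat/master?format=json on both nodes and reports a split brain when the answers differ. The function names are made up for illustration, and HTTP port 9200 is assumed to remain reachable because the firewall rules only drop transport traffic on port 9300.

```python
import json
import urllib.request


def fetch_master(host, port=9200, timeout=5):
    """Return the name of the master that `host` currently follows, or None."""
    url = "http://{}:{}/_cat/master?format=json".format(host, port)
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        rows = json.loads(resp.read().decode("utf-8"))
    return rows[0]["node"] if rows else None


def is_split_brain(master_by_host):
    """True when the nodes disagree about who the master is."""
    masters = {m for m in master_by_host.values() if m is not None}
    return len(masters) > 1


def check(hosts=("192.168.115.11", "192.168.115.12")):
    """Query every node and report whether the cluster has split."""
    master_by_host = {h: fetch_master(h) for h in hosts}
    return master_by_host, is_split_brain(master_by_host)
```

Calling check() from a machine that can reach both nodes returns the per-node answers together with the verdict.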

2. Write data to each node

Node hnr01

Node hnr02
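The original write commands for this step are not preserved in the text. One way to reproduce the step, purely as an assumption, is to index a different document on each node through the REST API and then compare the per-node document counts. The document id 2001, the field values and the helper names below are hypothetical.

```python
import json
import urllib.request


def index_request(host, doc_id, body, index="els_student", doc_type="test-type", port=9200):
    """Build a PUT request that indexes `body` under /index/type/id on one node."""
    url = "http://{}:{}/{}/{}/{}".format(host, port, index, doc_type, doc_id)
    data = json.dumps(body).encode("utf-8")
    return urllib.request.Request(
        url, data=data, method="PUT",
        headers={"Content-Type": "application/json"})


def count_url(host, index="els_student", port=9200):
    """URL of the _count endpoint for the index on one node."""
    return "http://{}:{}/{}/_count".format(host, port, index)


def send(request_or_url, timeout=5):
    """Send a request (or plain URL) and return the parsed JSON response."""
    with urllib.request.urlopen(request_or_url, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def write_to_both_sides():
    # Same id, different content on each side of the split (hypothetical values).
    send(index_request("192.168.115.11", 2001, {"name": "written-to-hnr01"}))
    send(index_request("192.168.115.12", 2001, {"name": "written-to-hnr02"}))
    return {host: send(count_url(host))["count"]
            for host in ("192.168.115.11", "192.168.115.12")}
```

Using the same id on both sides makes the conflict easy to spot once the cluster is rejoined, because only one version of the document can survive.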

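3. Recover the cluster

Removing the firewall rules alone does not restore the cluster. After node hnr02 is restarted, the cluster returns to normal, but the data written to hnr02 during the split went into the same index that exists on hnr01, and that data is lost.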
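The article does not show how the firewall rules were removed. Assuming they were added exactly as in step 1, the matching iptables -D command with the same rule specification deletes them. The sketch below builds both the add and the delete command; the helper names are hypothetical, and running the command requires root on the node in question.

```python
import subprocess


def drop_rule(action, source_ip, port=9300):
    """Build the iptables command that adds ("-A") or deletes ("-D") the DROP rule."""
    if action not in ("-A", "-D"):
        raise ValueError("action must be -A (append) or -D (delete)")
    return ["iptables", action, "OUTPUT", "-s", source_ip,
            "-p", "tcp", "--dport", str(port), "-j", "DROP"]


def apply_rule(argv):
    """Run the command; raises CalledProcessError if iptables rejects it."""
    subprocess.run(argv, check=True)


if __name__ == "__main__":
    # Printed rather than executed, so the commands can be reviewed first.
    print(" ".join(drop_rule("-D", "192.168.115.12")))  # run on hnr02
    print(" ".join(drop_rule("-D", "192.168.115.11")))  # run on hnr01
```

Building the rule from one function keeps the delete command identical to the one that was appended, which iptables requires for -D to match.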

This article comes from the "linux之路" blog; please keep this attribution: http://hnr520.blog.51cto.com/4484939/1876467
