ELK Installation

Posted by 运维工匠实战 (if you find any errors, please send me the correct method)



http://udn.yyuap.com/doc/logstash-best-practice-cn/index.html

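ELK is not a single piece of software but a complete solution made up of three open-source projects: Elasticsearch, Logstash, and Kibana.

Elasticsearch is a real-time distributed search and analytics engine that can be used for full-text search and for analysis. It is built on top of the full-text search library Apache Lucene and is written in Java.

Main features: real-time analytics; a distributed real-time document store in which every field is indexed; document-oriented, so every object is a document; high availability and easy scaling, with support for clusters, sharding, and replication (shards and replicas); JSON support.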

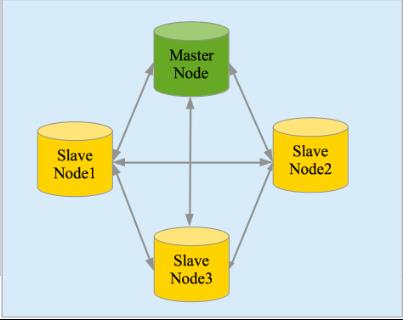

Cluster:

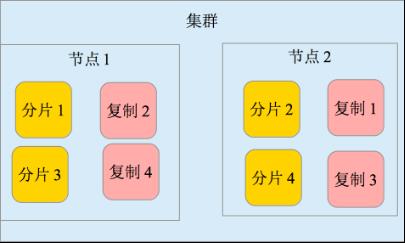

Shards and replicas

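Logstash is a data collection engine with real-time pipelining capability.

Main features: it can ingest almost any kind of data; it integrates with many external applications; it scales elastically.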

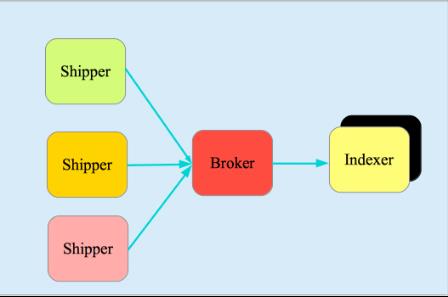

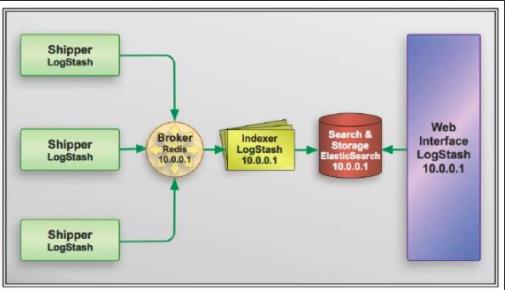

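It consists of three main parts: the shipper (sends log data); the broker (buffers the collected data, commonly Redis); and the indexer (writes the data).

"Shipper" literally means the party that ships goods. Many deployments do not use Logstash as the shipper, or do not use Elasticsearch for storage, in which case there is no indexer either ("indexer" simply means something that builds an index). What matters is knowing how to configure the Logstash process and then placing it where it fits best in your log-management architecture.

Kibana is a web platform, released under the Apache open-source license and written in JavaScript, that provides analysis and visualization for Elasticsearch. It can search and interact with the data in Elasticsearch indices and generate charts and tables along various dimensions.

ELK architecture. The basic flow: the shipper collects data from the various data sources and sends it to the broker; the indexer writes the data held in the broker into Elasticsearch; Elasticsearch builds indices over that data; Kibana then analyzes it and presents the results as charts.

Elasticsearch requires Java 8, so install Java 8 first.

Simply unpack the archive, then edit the settings: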

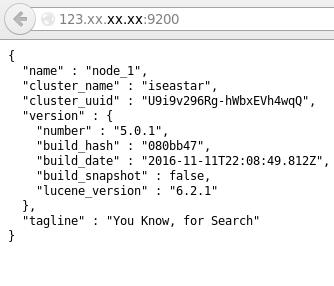

    sh-4.1$ egrep -v '^#' config/elasticsearch.yml
    cluster.name: iseastar
    node.name: node_1
    path.data: /usr/local/elasticsearch/data
    path.logs: /usr/local/elasticsearch/logs
    network.host: 123.xx.xx.xxx
    http.port: 9200
    sh-4.1$ pwd
    /usr/local/elasticsearch

Adjust the system parameters:

    sh-4.1# tail -1 /etc/sysctl.conf
    vm.max_map_count = 262144
    # Append the line above to /etc/sysctl.conf
    # and then run: sysctl -p

Append the following two lines to the limits configuration file:

    sh-4.1# tail -2 /etc/security/limits.conf
    * hard nofile 65536
    * soft nofile 65536
    # cat /etc/security/limits.d/90-nproc.conf
    # Default limit for number of user's processes to prevent
    # accidental fork bombs.
    # See rhbz #432903 for reasoning.
    * soft nproc 2048
    root soft nproc unlimited
    # change the value here
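Before starting Elasticsearch it can help to confirm that the new limits are actually in effect. The following is a minimal sketch, not part of the original procedure: `check_min` is a hypothetical helper, and the thresholds are the values used above (262144 for `vm.max_map_count`, 65536 for `nofile`). It assumes a Linux host and a shell whose `ulimit` supports `-n`.

```shell
#!/bin/sh
# check_min NAME CURRENT REQUIRED
# Hypothetical helper: prints a one-line verdict and returns 1 when the
# current value is below the required minimum.
check_min() {
    name=$1; current=$2; required=$3
    if [ "$current" = "unlimited" ] || [ "$current" -ge "$required" ]; then
        echo "OK      $name=$current (need >= $required)"
    else
        echo "TOO LOW $name=$current (need >= $required)"
        return 1
    fi
}

# vm.max_map_count is exposed through procfs on Linux; skip it elsewhere.
if [ -r /proc/sys/vm/max_map_count ]; then
    check_min vm.max_map_count "$(cat /proc/sys/vm/max_map_count)" 262144 || true
fi

# Open-file limit of the current shell. Run this as the user that will
# start Elasticsearch, in a fresh login session.
check_min nofile "$(ulimit -n)" 65536 || true
```

Changes to limits.conf normally apply only to new login sessions, so a "TOO LOW" result right after editing the file may just mean the session needs to be reopened.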

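Elasticsearch is a near-real-time search platform: there is a short delay, usually 1 second, between indexing a document and the document becoming searchable.

Elasticsearch cannot be started as root; it must be started as a regular user.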

    useradd elastic
    passwd elastic
    # change the owner and group of the directory
    chown -R elastic:elastic ELK/
    # start it in demo fashion (in the foreground):
    ./bin/elasticsearch
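The no-root rule is easy to trip over in start scripts. A small guard along the following lines can make the failure explicit. This is a sketch only: `may_start_as` is a hypothetical helper, `ES_HOME` is an assumed variable defaulting to the install path used in this article, and the script only reports what it would do rather than launching anything.

```shell
#!/bin/sh
# Assumed install location; adjust to your own layout.
ES_HOME="${ES_HOME:-/usr/local/elasticsearch}"

# may_start_as UID
# Hypothetical helper: succeeds for any uid except 0 (root).
may_start_as() {
    [ "$1" -ne 0 ]
}

if may_start_as "$(id -u)"; then
    echo "uid $(id -u): ok to run $ES_HOME/bin/elasticsearch"
else
    echo "refusing to start Elasticsearch as root; switch to the elastic user" >&2
fi
```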

Installing Logstash is simple: unpack it, create a symlink, then edit the configuration file:

lrwxrwxrwx 1 root root 29 Nov 24 08:52 logstash -> /usr/local/ELK/logstash-5.0.1

Test Logstash

    sh-4.1# pwd
    /usr/local/logstash
    sh-4.1# ./bin/logstash -e 'input { stdin { } } output { stdout { } }'
    Sending Logstash's logs to /usr/local/logstash/logs which is now configured via log4j2.properties
    The stdin plugin is now waiting for input:
    [2016-11-24T09:00:54,674][INFO ][logstash.pipeline ] Starting pipeline {"id"=>"main", "pipeline.workers"=>4, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>5, "pipeline.max_inflight"=>500}
    [2016-11-24T09:00:54,710][INFO ][logstash.pipeline ] Pipeline main started
    [2016-11-24T09:00:54,764][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}
    2016-11-24T01:00:54.694Z iZ2zeiqn00z9x5uu126tjpZ
    2016-11-24T01:00:54.716Z iZ2zeiqn00z9x5uu126tjpZ
    2016-11-24T01:00:54.718Z iZ2zeiqn00z9x5uu126tjpZ
    2016-11-24T01:00:54.719Z iZ2zeiqn00z9x5uu126tjpZ
    2016-11-24T01:01:28.279Z iZ2zeiqn00z9x5uu126tjpZ
    2016-11-24T01:01:28.499Z iZ2zeiqn00z9x5uu126tjpZ
    2016-11-24T01:01:28.692Z iZ2zeiqn00z9x5uu126tjpZ
    2016-11-24T01:01:30.136Z iZ2zeiqn00z9x5uu126tjpZ
    2016-11-24T01:01:30.992Z iZ2zeiqn00z9x5uu126tjpZ

Configure Logstash

    sh-4.1# mkdir /usr/local/logstash/etc
    sh-4.1# vim /usr/local/logstash/etc/hello_search.conf
    sh-4.1# cat /usr/local/logstash/etc/hello_search.conf
    input {
        stdin {
            type => "human"
        }
    }
    output {
        stdout {
            codec => rubydebug
        }
        elasticsearch {
            host => "123.57.15.154"
            port => 9200
        }
    }
    sh-4.1#

Start it: there is an error! (Set it aside for now and resolve it at the end.)

    sh-4.1# ./logstash -e 'input {stdin {} } output {stdout {} }'
    Sending Logstash's logs to /usr/local/logstash/logs which is now configured via log4j2.properties
    The stdin plugin is now waiting for input:
    [2016-11-25T16:42:12,468][INFO ][logstash.pipeline ] Starting pipeline {"id"=>"main", "pipeline.workers"=>4, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>5, "pipeline.max_inflight"=>500}
    [2016-11-25T16:42:12,485][INFO ][logstash.pipeline ] Pipeline main started
    [2016-11-25T16:42:12,554][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}

Type hello world

    sh-4.1# ./logstash -e 'input {stdin {} } output {stdout {} }'
    Sending Logstash's logs to /usr/local/logstash/logs which is now configured via log4j2.properties
    The stdin plugin is now waiting for input:
    [2016-11-25T16:42:12,468][INFO ][logstash.pipeline ] Starting pipeline {"id"=>"main", "pipeline.workers"=>4, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>5, "pipeline.max_inflight"=>500}
    [2016-11-25T16:42:12,485][INFO ][logstash.pipeline ] Pipeline main started
    [2016-11-25T16:42:12,554][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}
    hello world!!!!
    2016-11-25T08:48:10.336Z iZ2zeiqn00z9x5uu126tjpZ hello world!!!!

Here the message is read from stdin and written to stdout. After hello world is typed, Logstash prints the processed event to the screen.

    # ./bin/logstash -e "input {stdin{}}output{stdout{codec=>rubydebug}}"
    Sending Logstash's logs to /usr/local/logstash/logs which is now configured via log4j2.properties
    The stdin plugin is now waiting for input:
    [2016-11-27T00:39:15,990][INFO ][logstash.pipeline ] Starting pipeline {"id"=>"main", "pipeline.workers"=>4, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>5, "pipeline.max_inflight"=>500}
    [2016-11-27T00:39:16,022][INFO ][logstash.pipeline ] Pipeline main started
    [2016-11-27T00:39:16,175][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9601}
    hello world
    {
        "@timestamp" => 2016-11-26T16:39:25.365Z,
        "@version" => "1",
        "host" => "iZ2zeiqn00z9x5uu126tjpZ",
        "message" => "hello world",
        "tags" => []
    }

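Logstash ships with a shell script named logstash for quick runs. It supports the following options:

-e: means "execute". We already used this option in the "Hello World" example. In fact you can leave out the configuration entirely and simply run:

./bin/logstash -e ''

to get the same effect. The default value of this option is: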

    input {
        stdin {}
    }
    output {
        stdout {}
    }

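--config or -f: means "file". In real-world use we write long configurations, possibly longer than the 1024 characters the shell can support. So the configuration has to be put into a file and run with: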

./bin/logstash -f file_name.conf

to run it in this form.

In addition, Logstash offers a small convenience for organizing and writing configuration. You can run it directly with:

./bin/logstash -f /etc/logstash.d/

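Logstash will then automatically read every text file under the /etc/logstash.d/ directory, stitch them together in memory into one complete configuration, and execute it.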
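The effect of loading a directory can be pictured with plain shell tools. The sketch below only imitates the idea and is not what Logstash runs internally: it writes two hypothetical fragment files (`10-input.conf`, `90-output.conf`) into a scratch directory instead of /etc/logstash.d/ and concatenates them in sorted order, which is the order the Logstash documentation describes for directory loading.

```shell
#!/bin/sh
# Scratch directory standing in for /etc/logstash.d/ (assumption: we do
# not touch the real path). The file names are hypothetical.
confdir=$(mktemp -d)

cat > "$confdir/10-input.conf" <<'EOF'
input {
    stdin {}
}
EOF

cat > "$confdir/90-output.conf" <<'EOF'
output {
    stdout {}
}
EOF

# Shell globs expand in sorted order, so 10-* is read before 90-*.
merged=$(cat "$confdir"/*.conf)
printf '%s\n' "$merged"
```

Because the fragments are joined in name order, numeric prefixes are a common way to keep inputs, filters, and outputs in a predictable sequence.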

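--configtest or -t: means "test". It checks whether the configuration Logstash has read can be parsed correctly (the Logstash 5.x run below reports this as config.test_and_exit mode). The Logstash configuration syntax is defined in grammar.treetop. Readers who use the directory-loading approach described in the previous item should take particular care to test in advance.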

    ./bin/logstash -t -f conf/st.conf
    Sending Logstash's logs to /usr/local/logstash/logs which is now configured via log4j2.properties
    Configuration OK
    [2016-11-27T01:26:26,665][INFO ][logstash.runner ] Using config.test_and_exit mode. Config Validation Result: OK. Exiting Logstash

sh-4.1# /usr/local/logstash/bin/logstash -f /usr/local/logstash/etc/hello_search.conf

    Sending Logstash's logs to /usr/local/logstash/logs which is now configured via log4j2.properties
    [2016-11-24T10:52:45,880][ERROR][logstash.outputs.elasticsearch] Unknown setting 'host' for elasticsearch
    [2016-11-24T10:52:45,883][ERROR][logstash.outputs.elasticsearch] Unknown setting 'port' for elasticsearch
    [2016-11-24T10:52:45,891][ERROR][logstash.agent ] fetched an invalid config {:config=>"input {\n\tstdin {\n\t\ttype => \"human\"\n\t}\n}\n\noutput {\n\tstdout {\n\t\tcodec => rubydebug\n\t}\n\telasticsearch {\n\t\thost => \"123.xxx.xxx.xxx\"\n\t\tport => 9200\n\t}\n\n}\n\n", :reason=>"Something is wrong with your configuration."}
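The two "Unknown setting" errors point at `host` and `port`: the elasticsearch output bundled with Logstash 5.x takes a single `hosts` list instead. A corrected hello_search.conf might look like the sketch below. `ES_HOST` is a placeholder variable (an assumption, defaulting to 127.0.0.1) for the address Elasticsearch listens on, and the file is written to a scratch location rather than /usr/local/logstash/etc/.

```shell
#!/bin/sh
# Placeholder: substitute the network.host value from elasticsearch.yml.
ES_HOST="${ES_HOST:-127.0.0.1}"

# Scratch file; the article keeps the real one at
# /usr/local/logstash/etc/hello_search.conf
conf=$(mktemp)

cat > "$conf" <<EOF
input {
    stdin {
        type => "human"
    }
}
output {
    stdout {
        codec => rubydebug
    }
    elasticsearch {
        # 'hosts' replaces the rejected 'host' and 'port' settings
        hosts => ["${ES_HOST}:9200"]
    }
}
EOF

cat "$conf"
```

Running `./bin/logstash -t -f` against the resulting file is a quick way to confirm that the syntax is accepted before starting the pipeline.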

Configure Logstash again:

    # cat conf/logstash.conf
    input {
        file {
            type => "stagefalcon"
            tags => "falcon"
            path => "/var/log/facon/access.log"
            start_position => beginning
            sincedb_path => "/usr/local/logstash/conf/access.sincedb"
        }
    }
    output {
        stdout {}
        redis {
            host => "127.0.0.1"
            port => "6379"
            data_type => "list"
            key => "stagefalcon"
        }
    }
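This configuration is the shipper side of the architecture: it tails a log file and pushes events onto the Redis list `stagefalcon`. The article does not show the matching indexer, so the following is only a sketch of what one could look like, using the redis input and elasticsearch output plugins. The scratch file and `ES_HOST` (defaulting to 127.0.0.1) are assumptions; the Redis address and key are copied from the shipper config.

```shell
#!/bin/sh
# Placeholder for the Elasticsearch address (assumption).
ES_HOST="${ES_HOST:-127.0.0.1}"

# Hypothetical indexer config, written to a scratch file.
indexer_conf=$(mktemp)

cat > "$indexer_conf" <<EOF
input {
    redis {
        # must match the shipper's redis output
        host => "127.0.0.1"
        port => 6379
        data_type => "list"
        key => "stagefalcon"
    }
}
output {
    elasticsearch {
        hosts => ["${ES_HOST}:9200"]
    }
}
EOF

cat "$indexer_conf"
```

The `data_type` and `key` values have to agree on both sides, otherwise the indexer waits on a list the shipper never writes to.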

Logstash starts successfully:

/usr/local/logstash/bin/logstash -f /usr/local/logstash/conf/logstash.conf

stdin: standard input

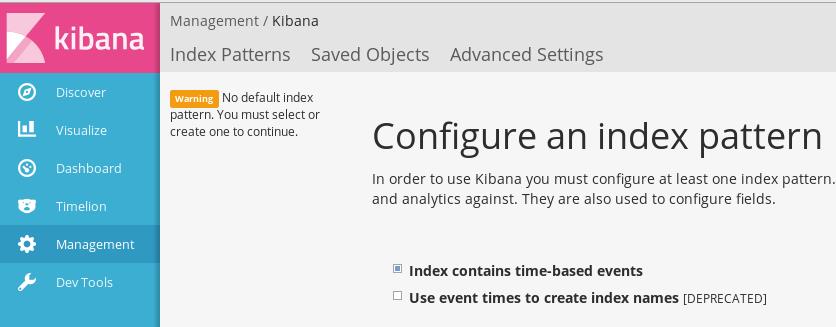

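Install Kibana

This is simple as well: just unpack it. The configuration can stay at its defaults for now, apart from two changes. The IP below is this machine's public IP.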

    # egrep -v "(^#|^$)" /usr/local/kibana/config/kibana.yml
    server.host: "123.xx.xx.xx"
    elasticsearch.url: "http://123.xx.xx.xx:9200"

Then open the IP plus the port in a browser: 5601

Logstash plugin downloads: https://github.com/logstash-plugins

Remember to configure the hosts file (on the server where Elasticsearch is deployed):

    # cat /etc/hosts
    127.0.0.1 localhost
    ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
    10.17x1.xx.1xx iZ2zx xx171.6x105 elasticsearch
    xx.57.15.xx elasticsearch
