How to tune random forest parameters for better results

Posted by realzjx


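Preface: This article was compiled by the editors of 小常识网 (cha138.com). It covers how to tune the parameters of a random forest for better results, and we hope you find it useful.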

Original article: https://www.analyticsvidhya.com/blog/2015/06/tuning-random-forest-model/

A month back, I participated in a Kaggle competition called TFI. My first submission landed at the 50th percentile. After working relentlessly on feature engineering for more than 2 weeks, I managed to reach the 20th percentile. To my surprise, right after tuning the parameters of the machine learning algorithm I was using, I was able to break into the top 10th percentile.

This shows how important it is to tune machine learning algorithms. Random Forest is one of the easiest machine learning tools used in industry. In our previous articles, we introduced Random Forest and compared it against a CART model. Machine learning tools are known for their predictive performance.

What is a Random Forest?

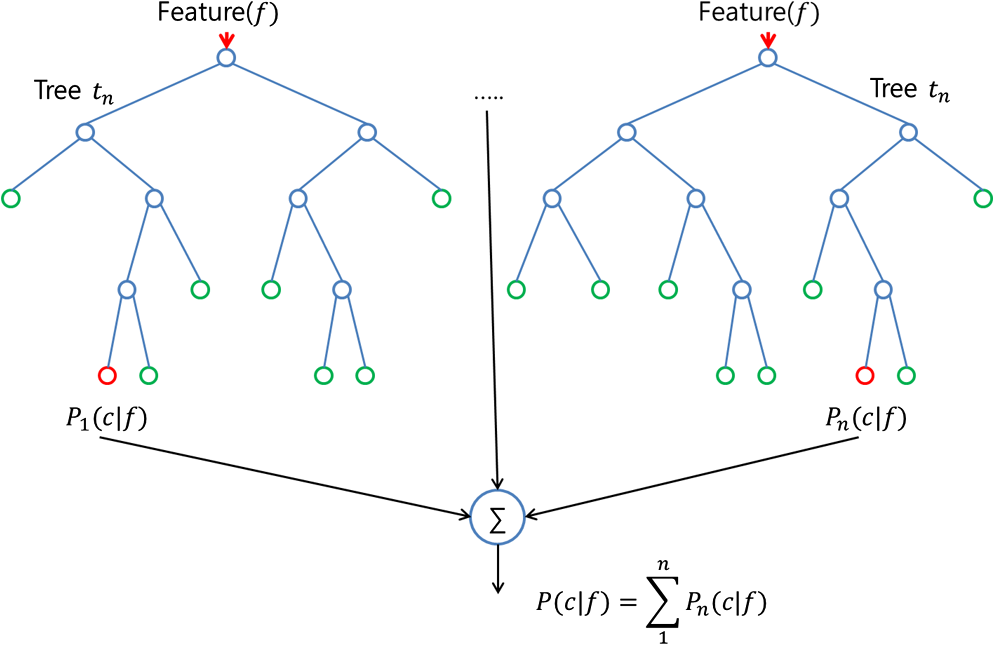

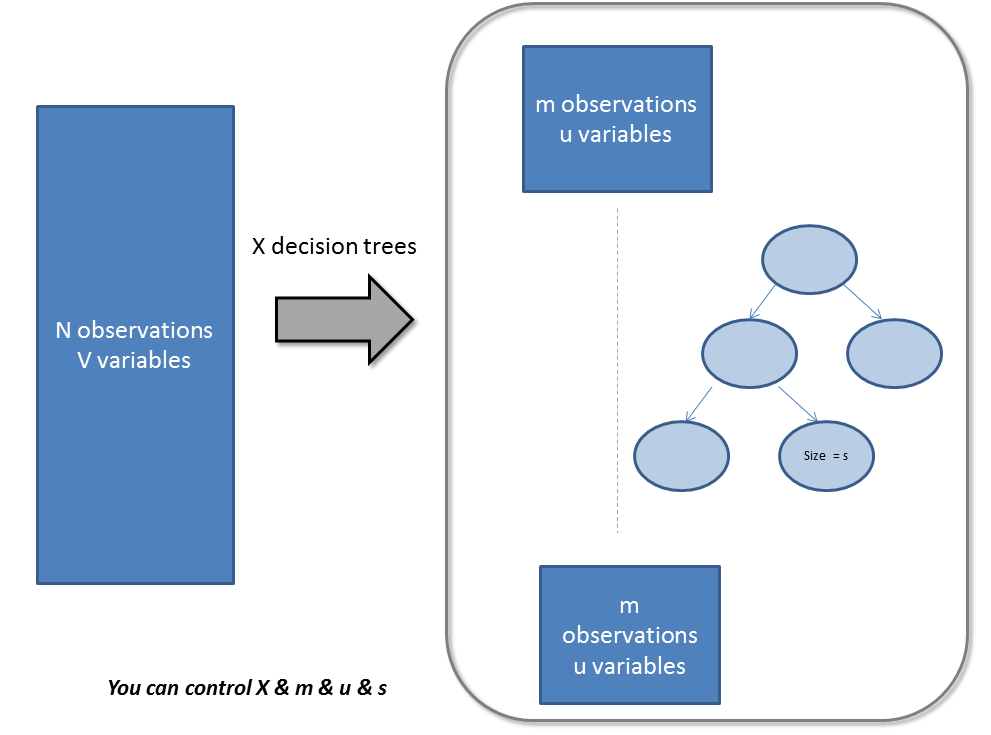

Random forest is an ensemble tool that takes a subset of observations and a subset of variables to build each decision tree. It builds many such decision trees and combines them to get a more accurate and stable prediction. This follows from the idea that a majority vote from a panel of independent judges usually gives a better final prediction than any single judge.
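The "panel of judges" argument can be made concrete with a short calculation. This is a minimal sketch that assumes each judge (tree) is independently correct with the same probability p > 0.5. Real trees are correlated, which is why random forests subsample rows and features to decorrelate them, so treat these numbers as an idealized case. The function name `majority_accuracy` is hypothetical. With p = 0.6, a single judge is right 60% of the time, while a majority of 3 is right with probability 0.648.

```python
from math import comb


def majority_accuracy(p, n_judges):
    """P(a strict majority of n independent judges is right), each judge right with probability p."""
    if n_judges % 2 == 0:
        raise ValueError("use an odd number of judges to avoid ties")
    needed = n_judges // 2 + 1
    # Sum the binomial probabilities of every outcome where at least `needed` judges are right
    return sum(comb(n_judges, k) * p**k * (1 - p) ** (n_judges - k)
               for k in range(needed, n_judges + 1))


for n in (1, 3, 11, 51):
    print(n, round(majority_accuracy(0.6, n), 3))
```

The accuracy of the majority keeps rising as independent judges are added. That is the intuition behind building many trees, and it also explains why reducing the correlation between trees matters.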

We generally treat a random forest as a black box that takes in input and gives out predictions, without worrying too much about the calculations happening in the back end. This black box has a few levers we can play with. Each lever affects either the performance of the model or the balance between resources and time. In this article we will discuss the levers we can tune while building a random forest model.

Parameters / levers to tune Random Forests

Parameters in a random forest either increase the predictive power of the model or make the model easier to train. The parameters we will discuss in more detail are listed below (note that I am using the Python/scikit-learn names for these parameters) :

1. Parameters which make the model's predictions better

There are primarily 3 parameters which can be tuned to improve the predictive power of the model :

1.a. max_features:

This is the maximum number of features the random forest is allowed to try when searching for the best split in an individual tree (scikit-learn draws a fresh random subset of features at every split). Python offers several ways to set max_features. Here are a few of them :

- None : This considers all the features at every split, so no restriction is placed on the individual tree. Older scikit-learn versions also accepted "auto". For RandomForestRegressor it meant all features, and for RandomForestClassifier it meant the square root of the number of features. "auto" has since been removed.

- sqrt : This option takes the square root of the total number of features at each split. For instance, if there are 100 variables in total, only 10 of them are considered at each split. "log2" is a similar option.

- 0.2 : This option lets the random forest consider 20% of the variables at each split. More generally, a float between 0 and 1 is treated as the fraction of features to consider (0.2 means 20%, 0.35 means 35%).
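To make the options concrete, here is a small helper that converts a max_features setting into the number of features examined at each split. This is a sketch only. The name `resolve_max_features` is hypothetical, and its rules follow our reading of how recent scikit-learn versions interpret the parameter: sqrt and log2 are rounded down, floats are treated as a fraction, and at least one feature is always considered.

```python
import math


def resolve_max_features(option, n_features):
    """Hypothetical helper: number of candidate features per split for a max_features setting."""
    if option is None:
        return n_features                                  # no restriction
    if option == "sqrt":
        return max(1, int(math.sqrt(n_features)))
    if option == "log2":
        return max(1, int(math.log2(n_features)))
    if isinstance(option, float):
        return max(1, int(option * n_features))            # fraction of features
    if isinstance(option, int):
        return option                                      # absolute count
    raise ValueError(f"unsupported max_features: {option!r}")


for option in (None, "sqrt", "log2", 0.2, 7):
    print(option, resolve_max_features(option, 100))
```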

How does “max_features” impact performance and speed?

Increasing max_features generally improves the performance of the model, because each node now has more candidate features to choose from. This is not guaranteed, though, because it also reduces the diversity of the individual trees, which is the unique selling point (USP) of a random forest. What is certain is that increasing max_features slows the algorithm down. Hence, you need to strike the right balance and choose the optimal max_features.

1.b. n_estimators :

This is the number of trees you want to build before taking the majority vote (classification) or the average of the predictions (regression). More trees generally give better performance but make your code slower. You should choose as high a value as your processor can handle, because this makes your predictions stronger and more stable.
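The two aggregation rules can be sketched as follows. This is a stdlib-only illustration, not scikit-learn code, and the tree outputs are hypothetical. scikit-learn's RandomForestClassifier averages the trees' predicted class probabilities (soft voting) rather than counting hard votes. The example shows that the two rules can disagree.

```python
from collections import Counter
from statistics import fmean


def hard_vote(labels):
    """Majority vote over per-tree class labels (ties go to the label seen first)."""
    return Counter(labels).most_common(1)[0][0]


def soft_vote(prob_rows):
    """Average per-tree class-probability vectors; return (winning class index, averaged probabilities)."""
    n_classes = len(prob_rows[0])
    avg = [fmean(row[c] for row in prob_rows) for c in range(n_classes)]
    return max(range(n_classes), key=lambda c: avg[c]), avg


def regression_average(values):
    """Regression forests average the per-tree numeric predictions."""
    return fmean(values)


# Three hypothetical trees' class probabilities for one passenger: [P(died), P(survived)]
tree_probs = [[0.9, 0.1], [0.4, 0.6], [0.45, 0.55]]
tree_labels = [max(range(2), key=lambda c: p[c]) for p in tree_probs]  # each tree's own argmax

print(hard_vote(tree_labels))               # 1: two of three trees say "survived"
print(soft_vote(tree_probs)[0])             # 0: the averaged probabilities favour "died"
print(regression_average([1.0, 2.0, 3.0]))  # 2.0
```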

1.c. min_samples_leaf :

If you have built a decision tree before, you will appreciate the importance of the minimum leaf size. A leaf is an end node of a decision tree. A smaller leaf makes the model more prone to capturing noise in the training data. I generally prefer a minimum leaf size of more than 50. However, you should try several leaf sizes to find the optimal one for your use case.
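The sketch below shows how the constraint prunes candidate splits. It assumes a node whose rows are sorted by one feature with distinct values (real implementations also handle tied values). The function name `valid_split_points` is hypothetical. A split after position k sends k rows left and n − k rows right, and both sides must keep at least min_samples_leaf rows.

```python
def valid_split_points(n_samples, min_samples_leaf):
    """Positions k at which a node's sorted rows can be split into k left and n-k right rows
    while keeping at least min_samples_leaf rows on each side."""
    return [k for k in range(1, n_samples)
            if k >= min_samples_leaf and n_samples - k >= min_samples_leaf]


print(valid_split_points(10, 1))   # [1, 2, 3, 4, 5, 6, 7, 8, 9]: any split allowed
print(valid_split_points(10, 3))   # [3, 4, 5, 6, 7]
print(valid_split_points(10, 6))   # []: the node cannot be split and stays a leaf
```

Larger leaf sizes leave fewer legal splits, so trees stop growing earlier and are less likely to isolate a handful of noisy rows.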

2. Parameters which make model training easier

There are a few attributes which have a direct impact on model training speed. Following are the key parameters which you can tune for model speed :

2.a. n_jobs :

This parameter tells the engine how many processors it is allowed to use. A value of “-1” means there is no restriction (all available processors are used), whereas a value of “1” means it can only use one processor. Here is a simple experiment you can run in Python (IPython/Jupyter) to check this :

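```python
from sklearn.ensemble import RandomForestRegressor

# X, y: your training features and target (assumed to be loaded already)

# One processor
model = RandomForestRegressor(n_estimators=100, oob_score=True, n_jobs=1, random_state=1)
%timeit model.fit(X, y)
# Output: 1 loop, best of 3: 1.7 s per loop

# All available processors
model = RandomForestRegressor(n_estimators=100, oob_score=True, n_jobs=-1, random_state=1)
%timeit model.fit(X, y)
# Output: 1 loop, best of 3: 1.1 s per loop
```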

“%timeit” is an IPython magic command that runs a statement several times and reports its timing. The outputs above show the fastest of 3 runs. This is very handy when scaling up a particular function from a prototype to the final dataset.
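Outside IPython, the standard-library timeit module gives a rough equivalent of the "best of 3" figure. This is a minimal sketch: `best_of` is a hypothetical helper, and `workload()` is a hypothetical stand-in for `model.fit(X, y)`.

```python
import timeit


def best_of(func, repeat=3, number=1):
    """Time `number` calls of func, `repeat` times; return the fastest per-call time in seconds."""
    times = timeit.repeat(func, repeat=repeat, number=number)
    return min(times) / number


def workload():
    # Hypothetical stand-in for model.fit(X, y)
    return sum(i * i for i in range(200_000))


print(f"1 loop, best of 3: {best_of(workload):.3f} s per loop")
```

Taking the minimum rather than the mean filters out runs slowed down by other processes, which is the rationale behind the "best of" figure.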

2.b. random_state :

This parameter makes a solution easy to replicate. A fixed value of random_state always produces the same results given the same parameters and training data. I have personally found that an ensemble of several models with different random states, all using the optimal parameters, sometimes performs better than a model with a single random state.
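Both points, reproducibility and averaging over seeds, can be illustrated without a real forest. This is a toy sketch. The "model" here is a hypothetical bootstrap-mean estimator standing in for a random forest, and `random.Random(seed)` plays the role of random_state. The target values are made up for illustration.

```python
import random
from statistics import fmean


def bootstrap_mean_model(y, seed):
    """Toy randomized 'model': predicts the mean of one bootstrap sample of y."""
    rng = random.Random(seed)  # plays the role of random_state
    sample = [rng.choice(y) for _ in y]
    return fmean(sample)


def seed_ensemble(y, seeds):
    """Average identically configured models that differ only in their seed."""
    return fmean(bootstrap_mean_model(y, s) for s in seeds)


y = [3.1, 2.7, 5.4, 4.0, 3.3, 6.2, 2.9, 4.8]  # hypothetical target values
assert bootstrap_mean_model(y, 7) == bootstrap_mean_model(y, 7)  # same seed -> identical result

singles = [bootstrap_mean_model(y, s) for s in range(10)]
print("single seeds:", [round(v, 2) for v in singles])
print("10-seed ensemble:", round(seed_ensemble(y, range(10)), 2))
```

With scikit-learn, the same idea would mean fitting one RandomForestRegressor per random_state and averaging their predictions.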

2.c. oob_score :

This is a cross-validation method built into random forests. It is somewhat similar to leave-one-out validation, but much faster. Each tree is trained on a bootstrap sample, so the method simply records which observations each tree used. Then, for every observation, it takes a majority vote (or, for regression, an average) using only the trees that did not use that observation for training. In scikit-learn, oob_score=True requires bootstrap=True, which is the default.
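The bookkeeping behind the out-of-bag (OOB) score can be sketched in a few lines. scikit-learn does this internally; the helper names `bootstrap_oob` and `oob_vote` are hypothetical, and the per-tree predictions are dummy values. A bootstrap sample of n rows drawn with replacement leaves out roughly 1/e ≈ 36.8% of the rows, so each tree has a sizeable held-out set for free.

```python
import random
from collections import Counter


def bootstrap_oob(n_samples, n_trees, seed=0):
    """Per tree: the set of in-bag row indices. Per row: the trees for which it is out-of-bag."""
    rng = random.Random(seed)
    in_bag, oob_trees = [], {i: [] for i in range(n_samples)}
    for t in range(n_trees):
        # Bootstrap sample: n_samples draws with replacement
        bag = {rng.randrange(n_samples) for _ in range(n_samples)}
        in_bag.append(bag)
        for i in range(n_samples):
            if i not in bag:
                oob_trees[i].append(t)
    return in_bag, oob_trees


def oob_vote(per_tree_preds, oob_trees, i):
    """Majority vote for row i using only trees that never saw it (None if every tree saw it)."""
    votes = [per_tree_preds[t][i] for t in oob_trees[i]]
    return Counter(votes).most_common(1)[0][0] if votes else None


n, n_trees = 1000, 50
in_bag, oob_trees = bootstrap_oob(n, n_trees, seed=1)
oob_fraction = sum(n - len(bag) for bag in in_bag) / (n * n_trees)
print(f"average out-of-bag fraction per tree: {oob_fraction:.3f}")

# Hypothetical per-tree class predictions (tree t predicts t % 2 for every row)
per_tree_preds = [[t % 2] * n for t in range(n_trees)]
print("OOB vote for row 0:", oob_vote(per_tree_preds, oob_trees, 0))
```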

Here is an example that uses all these parameters in a single call :

```python
from sklearn.ensemble import RandomForestRegressor

model = RandomForestRegressor(n_estimators=100, oob_score=True, n_jobs=-1, random_state=50,
                              max_features=None,  # all features (written "auto" in older scikit-learn)
                              min_samples_leaf=50)
model.fit(X, y)
```

Learning through a case study

We have referred to the Titanic case study in many of our previous articles. Let’s try the same problem again. The objective here is to get a feel for random forest parameter tuning, not to find the right features. Try the following code to build a basic model :

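```python
import pandas as pd
from sklearn.ensemble import RandomForestRegressor
from sklearn.metrics import roc_auc_score

x = pd.read_csv("train.csv")
y = x.pop("Survived")

# Assumption: use only the numeric columns and fill missing values (e.g. Age) with column means
numeric_variable = list(x.select_dtypes(include="number").columns)
x[numeric_variable] = x[numeric_variable].fillna(x[numeric_variable].mean())

model = RandomForestRegressor(n_estimators=100, oob_score=True, random_state=42)
model.fit(x[numeric_variable], y)

print("AUC - ROC : ", roc_auc_score(y, model.oob_prediction_))
# AUC - ROC : 0.7386 (result reported in the original article)
```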

This is a very simple model with no parameter tuning. Now let’s do some parameter tuning. As discussed above, we have 6 key parameters to tune. scikit-learn has grid search tools built in (for example GridSearchCV) that can tune all the parameters automatically. But here, let’s get our hands dirty to understand the mechanism better. The following code tunes the model for different leaf sizes.

Exercise : Try running the following code, find the optimal leaf size, and share it in the comment box.

```python
# Continues from the previous snippet (x, y and numeric_variable are already defined)
sample_leaf_options = [1, 5, 10, 50, 100, 200, 500]

for leaf_size in sample_leaf_options:
    model = RandomForestRegressor(n_estimators=200, oob_score=True, n_jobs=-1, random_state=50,
                                  max_features=None, min_samples_leaf=leaf_size)
    model.fit(x[numeric_variable], y)
    print(leaf_size, "AUC - ROC : ", roc_auc_score(y, model.oob_prediction_))
```
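The manual loop over leaf sizes extends to several parameters at once by trying every combination. That is the core of what grid search tools such as scikit-learn's GridSearchCV automate, with cross-validation and parallelism added on top. This is a minimal sketch of that exhaustive search only. `grid_search` and `toy_score` are hypothetical, and the toy score surface stands in for fitting a forest and measuring its OOB AUC.

```python
from itertools import product


def grid_search(param_grid, score_fn):
    """Try every combination in param_grid; return (best_params, best_score). Higher score is better."""
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = score_fn(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


# In practice score_fn would fit a forest and return its OOB AUC, e.g. (not run here):
#   lambda p: roc_auc_score(y, RandomForestRegressor(oob_score=True, random_state=50, **p)
#                              .fit(x[numeric_variable], y).oob_prediction_)
def toy_score(params):
    # Hypothetical score surface that peaks at min_samples_leaf=5, n_estimators=200
    return -abs(params["min_samples_leaf"] - 5) - abs(params["n_estimators"] - 200) / 100


grid = {"min_samples_leaf": [1, 5, 10, 50], "n_estimators": [100, 200, 500]}
print(grid_search(grid, toy_score))
```

The number of fits grows with the product of the grid sizes (12 here), which is why large grids get expensive quickly.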

End Notes

Machine learning tools like random forests, SVMs and neural networks are all used for their high performance. They do deliver it, but users generally don’t understand how they actually work. Not knowing the statistical details of a model is not necessarily a concern. However, not knowing how to tune the model well keeps the user from using the algorithm to its full potential. In future articles we will cover tuning other machine learning algorithms such as SVM, GBM and neural networks.

Have you used random forest before? What parameters did you tune? How did tuning the algorithm impact the performance of the model? Did you see any significant benefits by doing the same? Do let us know your thoughts about this guide in the comments section below.

Appendix: a random forest example (in Spark) that inspects the model's parameters.

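The Spark documentation lists several tunable parameters. setMinInstancesPerNode is the minimum number of instances each child node must have after a split. The larger the training set, the less you can afford to set this value too low, otherwise the model overfits. The default is 1 (5 is the default of maxDepth). For a training set of several hundred thousand rows, such a small value is probably too low.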

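setSubsamplingRate is the fraction of the training data sampled at random for each tree. A random forest has two sources of randomness. The first is the random selection of feature columns: each split can consider all feature columns or only a random few (in Spark this is controlled by setFeatureSubsetStrategy).

The second is the random selection of training rows. Here the rate is set to 0.382 (the golden-section ratio), i.e. the fraction of the training data each tree randomly samples for training. This value should also influence MinInstancesPerNode. The smaller the sampling rate, the lower MinInstancesPerNode should be set if it was previously high, otherwise the model will underfit.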

Tree depth, setMaxDepth: the deeper the trees, the more patterns they can discover, but computation slows down considerably.
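The interplay between these three settings can be quantified with simple arithmetic. This is a rough sketch that assumes each tree sees about n_rows × subsamplingRate rows (Spark samples them at random, so this is only an expectation) and ignores every other stopping criterion. The function `max_leaves_per_tree` and the 300,000-row training set are hypothetical. Every leaf produced by a split must hold at least minInstancesPerNode rows, and a binary tree of depth d has at most 2^d leaves, so both settings cap how large one tree can grow.

```python
def max_leaves_per_tree(n_rows, subsampling_rate, min_instances_per_node, max_depth):
    """Upper bound on the leaves one tree can grow.

    Every leaf must hold at least min_instances_per_node of the (expected) sampled rows,
    and a binary tree of depth max_depth has at most 2**max_depth leaves.
    """
    sampled_rows = round(n_rows * subsampling_rate)  # expected rows seen by one tree
    by_min_instances = max(1, sampled_rows // min_instances_per_node)
    by_depth = 2 ** max_depth
    return min(by_min_instances, by_depth)


# Settings from the Spark snippet below, on a hypothetical 300,000-row training set
print(max_leaves_per_tree(300_000, 0.382, 8, 20))   # 14325: minInstancesPerNode is the binding limit
print(max_leaves_per_tree(300_000, 1.0, 5, 5))      # 32: a depth of 5 is the binding limit
print(max_leaves_per_tree(1_000, 0.382, 50, 20))    # 7: a high minimum on little data starves the tree
```

Lowering subsamplingRate shrinks `sampled_rows`, so keeping minInstancesPerNode high leaves each tree room for only a few leaves. This is the underfitting risk described above.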

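```scala
import org.apache.spark.ml.regression.RandomForestRegressor

// 20 trees reading the "label" and "indexedFeatures" columns
val rf = new RandomForestRegressor().setNumTrees(20).setLabelCol("label").setFeaturesCol("indexedFeatures")
// Depth up to 20, fixed seed, 38.2% of rows per tree, at least 8 instances per child node
rf.setMaxDepth(20).setSeed(8465185).setSubsamplingRate(0.382).setMinInstancesPerNode(8)
```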

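```scala
import org.apache.spark.mllib.linalg.Vectors  // Spark 1.x; in Spark 2.0+ use org.apache.spark.ml.linalg.Vectors

// The same sparse feature vector (size 10, non-zeros at indices 2, 4, 6) for every row
val features = Vectors.sparse(10, Seq((2, 0.2), (4, 0.4), (6, 0.6)))
// Labels 0.0 to 4.0; toDF needs the SQL implicits (imported automatically in spark-shell)
val data = (0 to 4).map(i => (i.toDouble, features)).toDF("label", "features")
// data.as[LabeledPoint]
```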

```
scala> data.show(false)
+-----+--------------------------+
|label|features                  |
+-----+--------------------------+
|0.0  |(10,[2,4,6],[0.2,0.4,0.6])|
|1.0  |(10,[2,4,6],[0.2,0.4,0.6])|
|2.0  |(10,[2,4,6],[0.2,0.4,0.6])|
|3.0  |(10,[2,4,6],[0.2,0.4,0.6])|
|4.0  |(10,[2,4,6],[0.2,0.4,0.6])|
+-----+--------------------------+
```

```scala
import org.apache.spark.ml.regression.{ RandomForestRegressor, RandomForestRegressionModel }

val rfr = new RandomForestRegressor
val model: RandomForestRegressionModel = rfr.fit(data)
```

```
scala> model.trees.foreach(println)
DecisionTreeRegressionModel (uid=dtr_247e77e2f8e0) of depth 1 with 3 nodes
DecisionTreeRegressionModel (uid=dtr_61f8eacb2b61) of depth 2 with 7 nodes
DecisionTreeRegressionModel (uid=dtr_63fc5bde051c) of depth 2 with 5 nodes
DecisionTreeRegressionModel (uid=dtr_64d4e42de85f) of depth 2 with 5 nodes
DecisionTreeRegressionModel (uid=dtr_693626422894) of depth 3 with 9 nodes
DecisionTreeRegressionModel (uid=dtr_927f8a0bc35e) of depth 2 with 5 nodes
DecisionTreeRegressionModel (uid=dtr_82da39f6e4e1) of depth 3 with 7 nodes
DecisionTreeRegressionModel (uid=dtr_cb94c2e75bd1) of depth 0 with 1 nodes
DecisionTreeRegressionModel (uid=dtr_29e3362adfb2) of depth 1 with 3 nodes
DecisionTreeRegressionModel (uid=dtr_d6d896abcc75) of depth 3 with 7 nodes
DecisionTreeRegressionModel (uid=dtr_aacb22a9143d) of depth 2 with 5 nodes
DecisionTreeRegressionModel (uid=dtr_18d07dadb5b9) of depth 2 with 7 nodes
DecisionTreeRegressionModel (uid=dtr_f0615c28637c) of depth 2 with 5 nodes
DecisionTreeRegressionModel (uid=dtr_4619362d02fc) of depth 2 with 5 nodes
DecisionTreeRegressionModel (uid=dtr_d39502f828f4) of depth 2 with 5 nodes
DecisionTreeRegressionModel (uid=dtr_896f3a4272ad) of depth 3 with 9 nodes
DecisionTreeRegressionModel (uid=dtr_891323c29838) of depth 3 with 7 nodes
DecisionTreeRegressionModel (uid=dtr_d658fe871e99) of depth 2 with 5 nodes
DecisionTreeRegressionModel (uid=dtr_d91227b13d41) of depth 2 with 5 nodes
DecisionTreeRegressionModel (uid=dtr_4a7976921f4b) of depth 2 with 5 nodes
```

```
scala> model.treeWeights
res12: Array[Double] = Array(1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0)

scala> model.featureImportances
res13: org.apache.spark.mllib.linalg.Vector = (1,[0],[1.0])
```
