Study Notes--HA High-Availability Clusters


Preface: this article was compiled by the editors of 小常识网 (cha138.com) and introduces HA high-availability clusters; we hope you find it useful.

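Lab environment: firewall and SELinux disabled, clocks synchronized on all lab machines, and full hostname resolution on every node (each node can resolve all the others).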
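The preparation above can be sketched for a RHEL 6 node as follows. This is a minimal sketch under assumptions: the address scheme (serverN = 172.25.60.N in example.com) is inferred from the IPs used later in these notes, the helper names are hypothetical, and the optional NTP_SERVER variable is yours to supply. With DRY_RUN=1 the commands are only printed.

```shell
#!/bin/sh
# Print the command instead of executing it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Hypothetical helper: /etc/hosts lines for the three lab machines.
# Assumes serverN has address 172.25.60.N in the example.com domain.
hosts_entries() {
  for n in 6 7 8; do
    echo "172.25.60.$n server$n.example.com server$n"
  done
}

# Disable the firewall and SELinux on a RHEL 6 node (SysV init tools).
prepare_node() {
  run service iptables stop
  run chkconfig iptables off
  # Switch SELinux off now, and persistently for the next boot.
  run setenforce 0
  run sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config
  # One-shot clock sync, only if an NTP server was given.
  if [ -n "${NTP_SERVER:-}" ]; then
    run ntpdate "$NTP_SERVER"
  fi
}
```

Typical use on each node: `hosts_entries >> /etc/hosts`, then `prepare_node`.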

Configure the yum repositories (in a .repo file under /etc/yum.repos.d/):

```ini
[source]
name=Red Hat Enterprise Linux $releasever - $basearch - Source
baseurl=http://172.25.60.250/rhel6
gpgcheck=0

[HighAvailability]
name=Red Hat Enterprise Linux $releasever - $basearch - Source
baseurl=http://172.25.60.250/rhel6/HighAvailability
gpgcheck=0

[LoadBalancer]
name=Red Hat Enterprise Linux $releasever - $basearch - Source
baseurl=http://172.25.60.250/rhel6/LoadBalancer
gpgcheck=0

[ResilientStorage]
name=Red Hat Enterprise Linux $releasever - $basearch - Source
baseurl=http://172.25.60.250/rhel6/ResilientStorage
gpgcheck=0

[ScalableFileSystem]
name=Red Hat Enterprise Linux $releasever - $basearch - Source
baseurl=http://172.25.60.250/rhel6/ScalableFileSystem
gpgcheck=0
```
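Because the four add-on channels differ only in their subdirectory, the same file can be generated in a loop. A sketch under assumptions: `write_repo` is a hypothetical helper, and since `name=` is only a display label, the sketch names each add-on after its channel instead of repeating "Source".

```shell
#!/bin/sh
# Hypothetical helper: write a .repo file for the base channel plus the
# RHEL 6 add-on channels served from one HTTP mirror.
# $1 = output file, $2 = base URL (e.g. http://172.25.60.250/rhel6)
write_repo() {
  out=$1
  base=$2
  {
    # $releasever/$basearch stay literal: yum expands them itself.
    printf '[source]\nname=Red Hat Enterprise Linux $releasever - $basearch - Source\nbaseurl=%s\ngpgcheck=0\n\n' "$base"
    for ch in HighAvailability LoadBalancer ResilientStorage ScalableFileSystem; do
      printf '[%s]\nname=Red Hat Enterprise Linux $releasever - $basearch - %s\nbaseurl=%s/%s\ngpgcheck=0\n\n' \
        "$ch" "$ch" "$base" "$ch"
    done
  } > "$out"
}
```

Usage: `write_repo /etc/yum.repos.d/rhel6.repo http://172.25.60.250/rhel6`, then `yum clean all && yum repolist` to confirm all five repositories are visible.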

Lab hosts: server6, server7, server8; network: 172.25.60.0

Open the luci web management interface at https://server8.example.com:8084

Login: root, password: 123456
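The notes go straight to the luci page; the packages behind it are usually set up roughly like this on RHEL 6. This is an assumption about the lab rather than a step the notes record: ricci on each cluster node, luci on server8, and the helper names are hypothetical.

```shell
#!/bin/sh
# Print the command instead of executing it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# On each cluster node (server6, server7): ricci is the agent luci talks to.
setup_ricci_node() {
  run yum install -y ricci
  # luci asks for this password when the node is added to a cluster.
  run passwd ricci
  run service ricci start
  run chkconfig ricci on
}

# On the management host (server8): luci serves the web UI on port 8084.
setup_luci_host() {
  run yum install -y luci
  run service luci start
  run chkconfig luci on
}
```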

Fencing (the FENCE mechanism)

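On the physical host, install fence-virtd, fence-virtd-multicast and fence-virtd-libvirt.

Create the shared key (create /etc/cluster first if it does not exist) and start the daemon:

dd if=/dev/urandom of=/etc/cluster/fence_xvm.key bs=128 count=1

systemctl start fence_virtd

Run fence_virtd -c to enter the interactive setup and answer the prompts as shown below (the values in square brackets are the ones to use):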

```
Module search path [/usr/lib64/fence-virt]:

Available backends:
    libvirt 0.1
Available listeners:
    multicast 1.2

Listener modules are responsible for accepting requests
from fencing clients.

Listener module [multicast]:

The multicast listener module is designed for use environments
where the guests and hosts may communicate over a network using
multicast.

The multicast address is the address that a client will use to
send fencing requests to fence_virtd.

Multicast IP Address [225.0.0.12]:

Using ipv4 as family.

Multicast IP Port [1229]:

Setting a preferred interface causes fence_virtd to listen only
on that interface. Normally, it listens on all interfaces.
In environments where the virtual machines are using the host
machine as a gateway, this *must* be set (typically to virbr0).
Set to 'none' for no interface.

Interface [br0]:

The key file is the shared key information which is used to
authenticate fencing requests. The contents of this file must
be distributed to each physical host and virtual machine within
a cluster.

Key File [/etc/cluster/fence_xvm.key]:

Backend modules are responsible for routing requests to
the appropriate hypervisor or management layer.

Backend module [libvirt]:

Configuration complete.

=== Begin Configuration ===
backends {
    libvirt {
        uri = "qemu:///system";
    }
}

listeners {
    multicast {
        port = "1229";
        family = "ipv4";
        interface = "br0";
        address = "225.0.0.12";
        key_file = "/etc/cluster/fence_xvm.key";
    }
}

fence_virtd {
    module_path = "/usr/lib64/fence-virt";
    backend = "libvirt";
    listener = "multicast";
}
=== End Configuration ===
```

The configuration above is written to /etc/fence_virt.conf.

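Copy the key into /etc/cluster on server6 and server7:

scp /etc/cluster/fence_xvm.key root@server6.example.com:/etc/cluster/

scp /etc/cluster/fence_xvm.key root@server7.example.com:/etc/cluster/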
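The host-side fencing steps, collected into one script. A sketch under assumptions: the package names are the RHEL 7 fence-virtd ones, `NODES` lists the two cluster members, and the helper name is hypothetical. It restarts fence_virtd after `fence_virtd -c` so the daemon picks up the new /etc/fence_virt.conf and key.

```shell
#!/bin/sh
# Print the command instead of executing it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

KEY=/etc/cluster/fence_xvm.key
# Hypothetical list of the cluster members that need the key.
NODES="server6.example.com server7.example.com"

setup_fence_host() {
  run yum install -y fence-virtd fence-virtd-multicast fence-virtd-libvirt
  run mkdir -p /etc/cluster
  # 128 random bytes shared by fence_virtd and every fence_xvm client.
  run dd if=/dev/urandom of="$KEY" bs=128 count=1
  # Interactive: answer as in the transcript above.
  run fence_virtd -c
  # Reload the configuration and key; start on every boot.
  run systemctl restart fence_virtd
  run systemctl enable fence_virtd
  for node in $NODES; do
    run scp "$KEY" "root@$node:/etc/cluster/"
  done
}
```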

Go to the web management interface (luci):

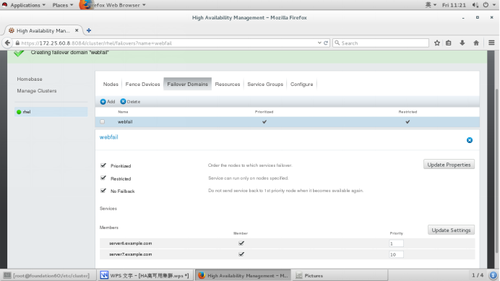

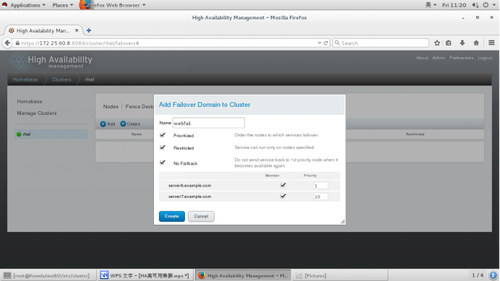

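Once the fence device has been added, click Nodes ---> Add Fence Method (the default name is fine) ---> Add Fence Instance.

In Domain, enter the virtual machine's name as known on the physical host (its libvirt domain name) or its UUID ---> Submit. Repeat for each node.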

Test:

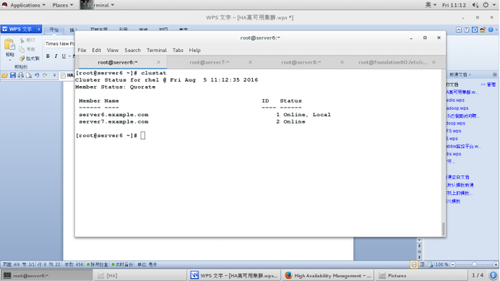

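On server6, check the cluster status with clustat.

Run fence_node server7.example.com and watch what happens on server7.

server7 is fenced and forcibly rebooted; once it is back up, it automatically rejoins the cluster (provided the cluster services start at boot).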
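The fencing test as a script to run on server6. A sketch: `test_fencing` is a hypothetical helper, and the extra `fence_xvm -o list` call is a quick check (not in the notes) that the node can reach fence_virtd over multicast with the shared key before anything is actually fenced.

```shell
#!/bin/sh
# Print the command instead of executing it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

test_fencing() {
  victim=${1:-server7.example.com}
  # Lists the domains fence_virtd reports; fails if multicast or the key is wrong.
  run fence_xvm -o list
  run clustat
  # Fence through the method configured in luci; the victim should reboot and rejoin.
  run fence_node "$victim"
}
```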

Integrating httpd

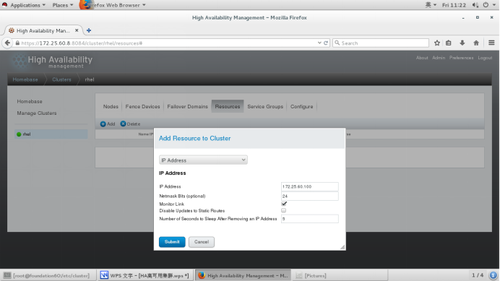

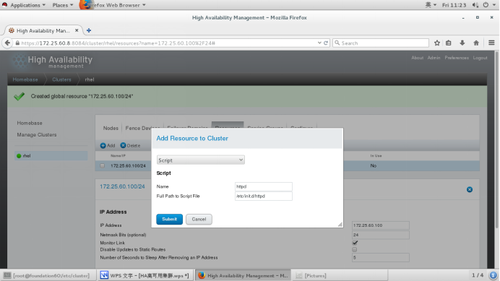

Add a virtual IP address and a script resource for httpd

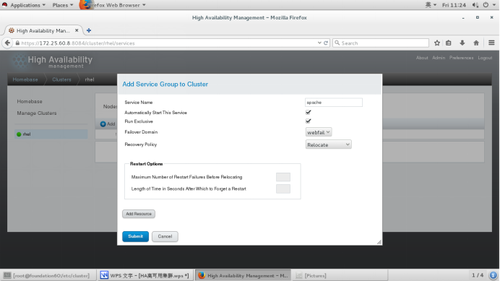

Add a service group.

When adding resources to it, mind the start order: 1. IP Address, 2. httpd.

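Test: stop the httpd service on server6 and server7 (from now on the cluster, not the init system, starts it).

Enable the apache service group: clusvcadm -e apache

Check httpd and the IP addresses on server6.

Relocate the service to server7: clusvcadm -r apache -m server7.example.com

Check httpd and the IP addresses on server7.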
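The failover test above, sketched as two helpers. Assumptions: the service group is named apache as in the notes, while `VIP` is a hypothetical placeholder for whatever virtual IP you added as a resource; both helper names are hypothetical too.

```shell
#!/bin/sh
# Print the command instead of executing it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

SERVICE=apache
# Hypothetical placeholder: replace with the virtual IP resource you created.
VIP=${VIP:-172.25.60.100}

# Enable the service, then move it to the target node, showing status each time.
failover_test() {
  target=${1:-server7.example.com}
  run clusvcadm -e "$SERVICE"
  run clustat
  run clusvcadm -r "$SERVICE" -m "$target"
  run clustat
}

# On the node that should own the service: is the VIP bound and httpd up?
check_local() {
  if ip addr show | grep -qF " $VIP/"; then
    echo "VIP $VIP present"
  else
    echo "VIP $VIP absent"
  fi
  run service httpd status
}
```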

On server8, add a blank disk and export it over iSCSI.

Configuration file: /etc/tgt/targets.conf

```
# One LUN backed by /dev/vda; only server6 and server7 may log in
<target iqn.2016-08.com.example:server.target1>
    backing-store /dev/vda
    initiator-address 172.25.60.6
    initiator-address 172.25.60.7
</target>
```

Start the tgtd service.
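The target side on server8, sketched for RHEL 6, where tgtd comes from the scsi-target-utils package. The helper name is hypothetical; `tgt-admin -s` prints the configured targets, LUNs and the initiator addresses allowed to log in, which is a quick check that targets.conf was read.

```shell
#!/bin/sh
# Print the command instead of executing it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

setup_target() {
  run yum install -y scsi-target-utils
  run service tgtd start
  run chkconfig tgtd on
  # Show targets, LUNs and the initiator ACL from /etc/tgt/targets.conf.
  run tgt-admin -s
}
```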

Then, on server6 and server7, discover the target and log in:

iscsiadm -m discovery -t st -p 172.25.60.8

iscsiadm -m node -l

On one node, partition the iSCSI disk (/dev/sdb here) and build the volume: fdisk -cu /dev/sdb ---> pvcreate /dev/sdb1 ---> vgcreate clustervg /dev/sdb1 ---> lvcreate -L 2G -n data1 /dev/clustervg
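For a volume group shared by both nodes, LVM normally has to use cluster locking through clvmd (which the shutdown section below also stops). A sketch under assumptions: the disk name, the clustered-VG flag and the ext4 format (the notes never show the mkfs step; ext4 is inferred from the GFS2 appendix) are not recorded in the notes, and both helper names are hypothetical. `fdisk -cu` stays a manual, interactive step.

```shell
#!/bin/sh
# Print the command instead of executing it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# The iSCSI disk as seen on the node (assumption).
DISK=${DISK:-/dev/sdb}

# Run on BOTH nodes: switch LVM to cluster-wide locking and start clvmd.
enable_clvm() {
  run lvmconf --enable-cluster
  run service clvmd start
  run chkconfig clvmd on
}

# Run on ONE node, after fdisk -cu has created ${DISK}1.
create_shared_lv() {
  run pvcreate "${DISK}1"
  # -cy marks the volume group as clustered (managed through clvmd).
  run vgcreate -cy clustervg "${DISK}1"
  run lvcreate -L 2G -n data1 clustervg
  # ext4: mount on only one node at a time.
  run mkfs.ext4 /dev/clustervg/data1
}
```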

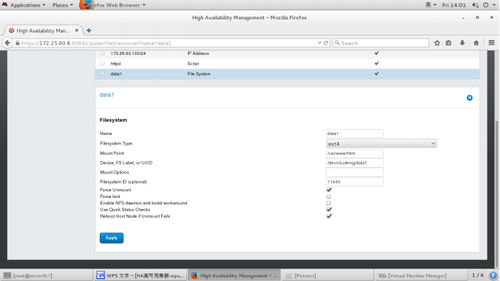

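Add a resource: a filesystem resource named data1 for /dev/clustervg/data1, mounted on /var/www/html.

Add it to the service group, so the order is:

Ipaddr--->httpd--->data1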

Restart the service: clusvcadm -d apache

clusvcadm -e apache

Check the mounts.

Relocate to the other node: clusvcadm -r apache -m server7.example.com

HA MySQL: database high availability

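Install mysql and mysql-server and start mysqld once, so that it initializes its data directory. Copy the data onto the shared volume with cp -rp /var/lib/mysql/* /var/www/html (here /var/www/html is simply where the shared volume was mounted earlier, not a web directory), then hand it to the mysql user: chown mysql.mysql /var/www/html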

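Stop the previous service first: clusvcadm -d apache

Under Resources, delete the httpd and data1 resources (remove them from the service group first) and create new ones for MySQL: a filesystem resource for /dev/clustervg/data1 mounted on /var/lib/mysql, and a script resource for mysqld.

Add the resources to the service group:

Ipaddr--->filesystem--->script

Stop mysqld on server6 and server7 (from now on the cluster starts it).

Start the service group with clusvcadm -e apache and check that mysqld came up on the node that owns the service.

(In my case mysqld failed to start because cp -rp had also copied the mysql.sock socket file; deleting that socket fixed it.)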
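To avoid the socket problem described above, the copy can delete any socket files right after copying. A sketch: `copy_datadir` is a hypothetical helper, and it assumes mysqld has been stopped before the copy so the data files are consistent.

```shell
#!/bin/sh
# Hypothetical helper: copy a MySQL data directory onto the shared volume
# and delete any socket files (e.g. mysql.sock) that cp -rp brought along.
# $1 = source dir, $2 = destination dir, $3 = optional owner (user:group)
copy_datadir() {
  src=$1
  dst=$2
  owner=${3:-}
  # "/." copies the directory's contents, including dotfiles.
  cp -rp "$src"/. "$dst"/ || return 1
  # A stale copied socket is what broke mysqld startup in these notes.
  find "$dst" -type s -exec rm -f {} +
  if [ -n "$owner" ]; then
    chown -R "$owner" "$dst"
  fi
}
```

Usage on the node that has the volume mounted: `service mysqld stop`, then `copy_datadir /var/lib/mysql /var/www/html mysql:mysql`.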

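Appendix:

GFS2 with the distributed lock manager (DLM)

An ext4 file system can be mounted on only one node at a time, whereas GFS2 can be mounted and written on several nodes simultaneously.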

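Format:

mkfs.gfs2 -p lock_dlm -t rhel:mygf2 -j 3 /dev/clustervg/data1

(rhel is the cluster name chosen when the cluster was created and must match it; mygf2 is the file-system name; -j 3 creates three journals, since each node that mounts the file system needs its own journal.)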

mount /dev/clustervg/data1 /mnt

chown mysql.mysql /mnt

cd /mnt

Show the GFS2 superblock parameters: gfs2_tool sb /dev/clustervg/data1 all

List the journals: gfs2_tool journals /dev/clustervg/data1

Mounting automatically at boot

Look up the device UUID with blkid

vim /etc/fstab

UUID=xxxxxxxxxxxxx /var/lib/mysql gfs2 _netdev 0 0
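The fstab line can be built straight from blkid output instead of copying the UUID by hand. A sketch: both helper names are hypothetical. `_netdev` marks the device as network-dependent, so the boot scripts do not try to mount it before networking and the iSCSI session are up.

```shell
#!/bin/sh
# Format one fstab entry for a GFS2 file system.
# $1 = UUID, $2 = mount point
fstab_entry() {
  printf 'UUID=%s %s gfs2 _netdev 0 0\n' "$1" "$2"
}

# Hypothetical helper: look up the UUID of a device and print its entry.
# $1 = device (e.g. /dev/clustervg/data1), $2 = mount point
gfs2_fstab_line() {
  uuid=$(blkid -s UUID -o value "$1") || return 1
  fstab_entry "$uuid" "$2"
}
```

Usage on each node: `gfs2_fstab_line /dev/clustervg/data1 /var/lib/mysql >> /etc/fstab`.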

Growing the GFS2 logical volume

lvextend -L +2G /dev/clustervg/data1

gfs2_grow /dev/clustervg/data1

gfs2_jadd -j 3 /dev/clustervg/data1 adds three more journals (128 MB each by default)
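The grow sequence, with the journal arithmetic spelled out. A sketch under assumptions: `MNT` is the mount point of the GFS2 volume (the RHEL 6 documentation passes the mount point to gfs2_grow and gfs2_jadd, which operate on a mounted file system), and the helper names are hypothetical. At the default size, three new journals take 3 × 128 MB = 384 MB.

```shell
#!/bin/sh
# Print the command instead of executing it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

LV=/dev/clustervg/data1
# Where the GFS2 volume is mounted (assumption).
MNT=${MNT:-/var/lib/mysql}

# Space used by N new journals at gfs2_jadd's default 128 MB size.
journal_mb() {
  echo $(( $1 * 128 ))
}

# Run on one node while the file system is mounted.
grow_gfs2() {
  run lvextend -L +2G "$LV"
  run gfs2_grow "$MNT"
  run gfs2_jadd -j 3 "$MNT"
}
```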

#### Cluster shutdown order

Stop the service ---> leave the cluster ---> remove the node ---> stop the cluster services on the node (cman, rgmanager, clvmd, etc.)
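The first and last steps, sketched for RHEL 6 (leaving and removing nodes are done in the web interface). Assumption: the daemons are stopped in reverse dependency order, resource manager first and cman (membership) last, with the gfs2 init script included for nodes that mount GFS2; the helper names are hypothetical.

```shell
#!/bin/sh
# Print the command instead of executing it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

SERVICE=apache

# Run once: take the service group offline cluster-wide.
stop_service() {
  run clusvcadm -d "$SERVICE"
}

# Run on each node: stop cluster daemons and keep them off at boot.
stop_cluster_daemons() {
  for svc in rgmanager gfs2 clvmd cman; do
    run service "$svc" stop
    run chkconfig "$svc" off
  done
}
```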

#### Logging out of and deleting iSCSI targets

iscsiadm -m node -u

iscsiadm -m node -o delete
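The two commands above act on every recorded target. To clean up only the target from these notes, the same operations can be limited with -T and -p. A sketch: the helper name is hypothetical; the IQN and portal are the ones configured earlier.

```shell
#!/bin/sh
# Print the command instead of executing it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

TARGET=iqn.2016-08.com.example:server.target1
PORTAL=172.25.60.8

iscsi_cleanup() {
  # Log out of this target's session only.
  run iscsiadm -m node -T "$TARGET" -p "$PORTAL" -u
  # Delete its node record so it is not logged in again at boot.
  run iscsiadm -m node -T "$TARGET" -p "$PORTAL" -o delete
}
```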
