Live Streaming Technology (from Server to Client), Part 2

Posted Jarlene


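Playback

In the previous article we described the environment setup for live streaming (the nginx server, nginx-rtmp-module, ffmpeg, and the Android and iOS builds). Starting with this article we turn to playback. Playback is a key step in live streaming and involves many techniques, such as decoding, scaling, choosing a time base, buffer queues, picture rendering, and audio playback. I will walk through the whole playback pipeline in three parts.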

Android

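The first part is video rendering based on NativeWindow, which mainly uses OpenGL ES 2 and renders the video data onto a surface passed in by the application. The second part is audio playback based on OpenSL ES. The third part is audio/video synchronization. For rendering audio and video we rely only on libraries that ship with Android.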

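iOS

iOS is likewise split into three parts. The first is video rendering, which uses OpenGLES.framework to draw the video picture with OpenGL. The second is audio playback, built on AudioToolbox.framework. The third is audio/video synchronization.

Using the native libraries keeps resource usage down, lowers memory consumption, and improves performance. Developers who are not deeply familiar with Android and iOS usually pick a single cross-platform library for video display and audio playback, such as SDL, which can handle both. Adding an extra library, however, costs resources and performance.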

Android

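We start with video playback on Android, covered in three parts: 1. video rendering; 2. audio playback; 3. the time base (audio/video synchronization).

1. Video rendering

FFmpeg provides a rich set of codecs (all of the encoding and decoding FFmpeg does here is in software, not hardware; a later article will cover FFmpeg in detail). On the video side it handles formats such as flv, mpeg, and mov; on the audio side, aac, mp3, and so on. For playback as a whole, the main FFmpeg call sequence is: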

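av_register_all(); // register all container formats and codecs; the matching codec is then selected automatically when a supported file is opened

avformat_network_init(); // initialize the network components

avformat_alloc_context(); // allocate the AVFormatContext

avformat_open_input(); // open the input stream and read its header; pair it with avformat_close_input() to close the stream

avformat_find_stream_info(); // read packets to obtain stream information and fill in pFormatCtx->streams

avcodec_find_decoder(); // find the decoder

avcodec_open2(); // initialize the AVCodecContext with the given AVCodec

av_read_frame(); // read the next frame (packet)

avcodec_decode_video2(); // decode the frame data

avcodec_close(); // close the codec context

avformat_close_input(); // close the input stream

Let's look at some code first: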

av_register_all();

avformat_network_init();

pFormatCtx = avformat_alloc_context();

if (avformat_open_input(&pFormatCtx, pathStr, NULL, NULL) != 0) {

LOGE("Couldn't open file: %s\n", pathStr);

return;

}

if (avformat_find_stream_info(pFormatCtx, &dictionary) < 0) {

LOGE("Couldn't find stream information.");

return;

}

av_dump_format(pFormatCtx, 0, pathStr, 0);

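This code initializes FFmpeg: it registers the codec libraries, allocates the AVFormatContext, calls avformat_open_input to open the input stream and read its header, and then calls avformat_find_stream_info to fill in the rest of the AVFormatContext. av_dump_format dumps the stream information, which looks like this:

video information:

Input #0, flv, from 'rtmp:127.0.0.1:1935/live/steam':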

Metadata:

Server : NGINX RTMP (github.com/sergey-dryabzhinsky/nginx-rtmp-module)

displayWidth : 320

displayHeight : 240

fps : 15

profile :

level :

Duration: 00:00:00.00, start: 15.400000, bitrate: N/A

Stream #0:0: Video: flv1 (flv), yuv420p, 320x240, 15 tbr, 1k tbn, 1k tbc

Stream #0:1: Audio: mp3, 11025 Hz, stereo, s16p, 32 kb/s

Next we locate the video decoder context (AVCodecContext) and the decoder (AVCodec).

videoStream = -1;

for (int i = 0; i < pFormatCtx->nb_streams; i++) {

if (pFormatCtx->streams[i]->codec->codec_type == AVMEDIA_TYPE_VIDEO) {

videoStream = i;

break;

}

}

if (videoStream == -1) {

LOGE("Didn't find a video stream.");

return;

}

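/**

Get the video decoder context and the decoder

*/

pVideoCodecCtx = pFormatCtx->streams[videoStream]->codec;

pVideoCodec = avcodec_find_decoder(pVideoCodecCtx->codec_id);

if (pVideoCodec == NULL) {

LOGE("pVideoCodec not found.");

return;

}

// Open the decoder; decoding fails if the context has not been opened

if (avcodec_open2(pVideoCodecCtx, pVideoCodec, NULL) < 0) {

LOGE("Could not open video codec.");

return;

}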

/**

Get the video width and height

*/

width = pVideoCodecCtx->width;

height = pVideoCodecCtx->height;

/**

Set the NativeWindow buffer geometry

*/

ANativeWindow_setBuffersGeometry(nativeWindow, width, height, WINDOW_FORMAT_RGBA_8888);

LOGD("the width is %d, the height is %d", width, height);

// Allocate the frames: pFrame holds the decoded frame, pFrameRGB the converted frame

pFrame = avcodec_alloc_frame();

pFrameRGB = avcodec_alloc_frame();

if (pFrame == NULL || pFrameRGB == NULL) {

LOGE("Could not allocate video frame.");

return;

}

int numBytes = avpicture_get_size(PIX_FMT_RGBA, pVideoCodecCtx->width,

pVideoCodecCtx->height);

buffer = (uint8_t *) av_malloc(numBytes * sizeof(uint8_t));

if (buffer == NULL) {

LOGE("buffer is null");

return;

}

// Fill in the AVPicture fields

avpicture_fill((AVPicture *) pFrameRGB, buffer, PIX_FMT_RGBA,

pVideoCodecCtx->width, pVideoCodecCtx->height);

// Get the scaling context

pSwsCtx = sws_getContext(pVideoCodecCtx->width,

pVideoCodecCtx->height,

pVideoCodecCtx->pix_fmt,

width,

height,

PIX_FMT_RGBA,

SWS_BILINEAR,

NULL,

NULL,

NULL);

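int frameFinished = 0;

// Read packets one by one

while (av_read_frame(pFormatCtx, &packet) >= 0) {

if (packet.stream_index == videoStream) {

// Decode the packet

avcodec_decode_video2(pVideoCodecCtx, pFrame, &frameFinished, &packet);

if (frameFinished) {

LOGD("av_read_frame");

// Convert the decoded frame to RGBA

sws_scale(pSwsCtx, (uint8_t const * const *)pFrame->data,

pFrame->linesize, 0, pVideoCodecCtx->height,

pFrameRGB->data, pFrameRGB->linesize);

// Lock the NativeWindow buffer; skip this frame if the lock fails

if (ANativeWindow_lock(nativeWindow, &windowBuffer, 0) == 0) {

uint8_t * dst = (uint8_t *)windowBuffer.bits;

int dstStride = windowBuffer.stride * 4; // stride is in pixels, 4 bytes per RGBA pixel

uint8_t * src = (pFrameRGB->data[0]);

int srcStride = pFrameRGB->linesize[0];

// Never copy more bytes per row than the narrower of the two strides

int rowBytes = srcStride < dstStride ? srcStride : dstStride;

for (int h = 0; h < height; h++) {

memcpy(dst + h * dstStride, src + h * srcStride, rowBytes);

}

ANativeWindow_unlockAndPost(nativeWindow);

}

}

}

av_free_packet(&packet); // release the packet

}

The whole path from decoding to scaling to rendering into the NativeWindow is straightforward. We have not taken the time base into account here, though, which means playback will run too fast or too slow (depending on the frame rate of the video). Part 3 discusses the time base (audio/video synchronization) in detail.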
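The row copy in the loop above is worth isolating, since the window buffer and the converted frame can have different row strides. Below is a minimal sketch in plain C, with no Android or FFmpeg dependency. copy_rows is a hypothetical helper name, not part of either API. It copies at most the narrower of the two strides per row, so a padded source row cannot overrun the destination.

```c
#include <stdint.h>
#include <string.h>

/* Copy `rows` rows of pixels between two buffers whose row strides differ.
 * Strides are in bytes. Only min(src_stride, dst_stride) bytes are copied
 * per row, so a padded source row can never overrun a narrower destination. */
static void copy_rows(uint8_t *dst, int dst_stride,
                      const uint8_t *src, int src_stride, int rows)
{
    int row_bytes = src_stride < dst_stride ? src_stride : dst_stride;
    for (int r = 0; r < rows; r++) {
        memcpy(dst + (size_t)r * dst_stride,
               src + (size_t)r * src_stride,
               (size_t)row_bytes);
    }
}
```

With an ANativeWindow_Buffer in RGBA_8888 format, dst_stride would be windowBuffer.stride * 4 and src_stride would be pFrameRGB->linesize[0].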

2. Audio rendering

For audio rendering we again use a native Android library, OpenSL ES. The main interface objects we use are:

// engine interfaces

static SLObjectItf engineObject;

static SLEngineItf engineEngine;

// output mix interfaces

static SLObjectItf outputMixObject;

static SLEnvironmentalReverbItf outputMixEnvironmentalReverb;

static SLObjectItf bqPlayerObject;

static SLEffectSendItf bqPlayerEffectSend;

static SLMuteSoloItf bqPlayerMuteSolo;

static SLVolumeItf bqPlayerVolume;

static SLPlayItf bqPlayerPlay;

static SLAndroidSimpleBufferQueueItf bqPlayerBufferQueue;

// aux effect on the output mix, used by the buffer queue player

const static SLEnvironmentalReverbSettings reverbSettings = SL_I3DL2_ENVIRONMENT_PRESET_STONECORRIDOR;

These objects are all used during audio playback. The playback flow consists mainly of the following steps:

// Create the playback engine and initialize the interfaces.

bool createEngine() {

SLresult result;

// Create the engine object (engineObject)

result = slCreateEngine(&engineObject, 0, NULL, 0, NULL, NULL);

if (SL_RESULT_SUCCESS != result) {

return false;

}

// Realize the engine object

result = (*engineObject)->Realize(engineObject, SL_BOOLEAN_FALSE);

if (SL_RESULT_SUCCESS != result) {

return false;

}

// Get the engine interface (engineEngine)

result = (*engineObject)->GetInterface(engineObject, SL_IID_ENGINE,

&engineEngine);

if (SL_RESULT_SUCCESS != result) {

return false;

}

// Request the environmental reverb interface on the output mix (not required)

const SLInterfaceID ids[1] = {SL_IID_ENVIRONMENTALREVERB};

const SLboolean req[1] = {SL_BOOLEAN_FALSE};

// Create the output mix (outputMixObject)

result = (*engineEngine)->CreateOutputMix(engineEngine, &outputMixObject, 1,

ids, req);

if (SL_RESULT_SUCCESS != result) {

return false;

}

// Realize the output mix

result = (*outputMixObject)->Realize(outputMixObject, SL_BOOLEAN_FALSE);

if (SL_RESULT_SUCCESS != result) {

return false;

}

// Get the environmental reverb interface (outputMixEnvironmentalReverb); it is optional, so a failure here is ignored

result = (*outputMixObject)->GetInterface(outputMixObject,

SL_IID_ENVIRONMENTALREVERB,

&outputMixEnvironmentalReverb);

if (SL_RESULT_SUCCESS == result) {

result = (*outputMixEnvironmentalReverb)->SetEnvironmentalReverbProperties(

outputMixEnvironmentalReverb, &reverbSettings);

}

return true;

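}

When creating the OpenSL ES playback engine we mainly initialize the engine and the output mix. After that we create the audio player and its buffer queue.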

bool createBufferQueueAudioPlayer(PlayCallBack callback) {

SLresult result;

SLDataLocator_AndroidSimpleBufferQueue loc_bufq = {SL_DATALOCATOR_ANDROIDSIMPLEBUFFERQUEUE, 2};

// PCM data format

SLDataFormat_PCM format_pcm = {SL_DATAFORMAT_PCM, 1, SL_SAMPLINGRATE_44_1,

SL_PCMSAMPLEFORMAT_FIXED_16, SL_PCMSAMPLEFORMAT_FIXED_16,

SL_SPEAKER_FRONT_CENTER, SL_BYTEORDER_LITTLEENDIAN};

SLDataSource audioSrc = {&loc_bufq, &format_pcm};

SLDataLocator_OutputMix loc_outmix = {SL_DATALOCATOR_OUTPUTMIX, outputMixObject};

SLDataSink audioSnk = {&loc_outmix, NULL};

const SLInterfaceID ids[3] = {SL_IID_BUFFERQUEUE, SL_IID_EFFECTSEND, SL_IID_VOLUME};

const SLboolean req[3] = {SL_BOOLEAN_TRUE, SL_BOOLEAN_TRUE, SL_BOOLEAN_TRUE};

// Create the audio player

result = (*engineEngine)->CreateAudioPlayer(engineEngine, &bqPlayerObject,

&audioSrc, &audioSnk, 3, ids, req);

if (SL_RESULT_SUCCESS != result)

return false;

// Realize the audio player (bqPlayerObject)

result = (*bqPlayerObject)->Realize(bqPlayerObject, SL_BOOLEAN_FALSE);

if (SL_RESULT_SUCCESS != result)

return false;

// Get the play interface (bqPlayerPlay)

result = (*bqPlayerObject)->GetInterface(bqPlayerObject, SL_IID_PLAY,

&bqPlayerPlay);

if (SL_RESULT_SUCCESS != result)

return false;

// Get the buffer queue interface (bqPlayerBufferQueue)

result = (*bqPlayerObject)->GetInterface(bqPlayerObject, SL_IID_BUFFERQUEUE,

&bqPlayerBufferQueue);

if (SL_RESULT_SUCCESS != result)

return false;

// Register the playback callback

result = (*bqPlayerBufferQueue)->RegisterCallback(bqPlayerBufferQueue,

callback, NULL);

if (SL_RESULT_SUCCESS != result) {

return false;

}

// Get the effect send interface (bqPlayerEffectSend)

result = (*bqPlayerObject)->GetInterface(bqPlayerObject, SL_IID_EFFECTSEND,

&bqPlayerEffectSend);

if (SL_RESULT_SUCCESS != result)

return false;

// Get the volume interface (bqPlayerVolume)

result = (*bqPlayerObject)->GetInterface(bqPlayerObject, SL_IID_VOLUME,

&bqPlayerVolume);

if (SL_RESULT_SUCCESS != result)

return false;

// Set the initial play state of bqPlayerPlay (stopped)

result = (*bqPlayerPlay)->SetPlayState(bqPlayerPlay, SL_PLAYSTATE_STOPPED);

return result == SL_RESULT_SUCCESS;

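}

The audio playback flow is also quite simple. The main steps are: 1. create and realize the engine; 2. create and realize the output mix; 3. configure the buffer queue and the PCM format; 4. create and realize the player; 5. get the play interface; 6. get the buffer queue interface; 7. register the playback callback; 8. get the effect send interface; 9. get the volume interface; 10. set the play state.

Once these 10 steps are done, the audio player engine is fully set up. What remains is to feed it data to play.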

void playBuffer(void *pBuffer, int size) {

// Validate the input

if (pBuffer == NULL || size <= 0) {

return;

}

LOGV("PlayBuff!");

// Enqueue the data into bqPlayerBufferQueue

SLresult result = (*bqPlayerBufferQueue)->Enqueue(bqPlayerBufferQueue,

pBuffer, size);

if (result != SL_RESULT_SUCCESS)

LOGE("Play buffer error!");

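}

This code shows how playback works: data is placed into bqPlayerBufferQueue, from which the playback engine reads and plays it. Recall that we registered a callback when creating the buffer queue. The callback signals that more data can be added to the queue. It looks like this: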

void videoPlayCallBack(SLAndroidSimpleBufferQueueItf bq, void *context) {

// Add data to bqPlayerBufferQueue by calling playBuffer.

void* data = getData();

int size = getDataSize();

playBuffer(data, size);

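}

In this way data keeps being added to the buffer queue and playback continues. Many simple music players are built on this pattern. It is also reliable and shows the whole playback flow very clearly.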
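The callback above relies on getData() and getDataSize(), which the article does not show. One way to supply them, sketched here in plain C under our own assumptions, is a cursor over a block of decoded PCM that hands out one chunk per callback. PcmCursor and pcm_next_chunk are hypothetical names, not OpenSL ES APIs.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical cursor over one block of decoded PCM bytes. */
typedef struct {
    const uint8_t *data;  /* start of the PCM block */
    size_t size;          /* total bytes in the block */
    size_t offset;        /* bytes already handed to the player */
} PcmCursor;

/* Return the next chunk of at most max_chunk bytes and advance the cursor.
 * The chunk length is stored in *out_size; NULL is returned when no data
 * is left (or when max_chunk is 0). */
static const uint8_t *pcm_next_chunk(PcmCursor *c, size_t max_chunk,
                                     size_t *out_size)
{
    size_t left = c->size - c->offset;
    if (left == 0 || max_chunk == 0) {
        *out_size = 0;
        return NULL;
    }
    size_t n = left < max_chunk ? left : max_chunk;
    const uint8_t *p = c->data + c->offset;
    c->offset += n;
    *out_size = n;
    return p;
}
```

Inside the buffer queue callback, each returned chunk would be passed to playBuffer; a NULL result means the decoder has to produce more PCM first.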

3. Time base (audio/video synchronization)

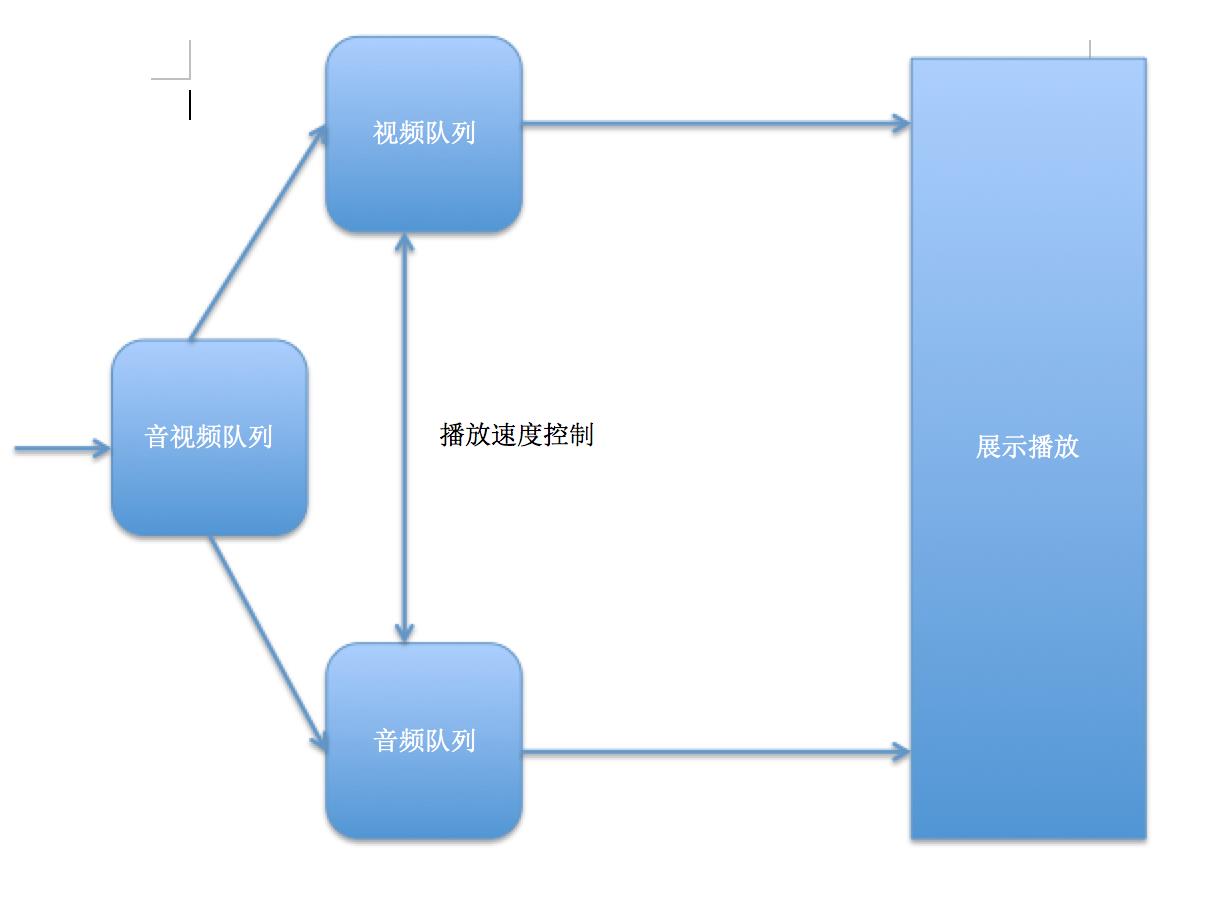

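To play video and audio together properly, we need to bring several pieces of data together, including the audio and video data and the timing parameters. ffplay.c in the FFmpeg source tree takes the same approach. There are three ways to align the time base: 1. align to the audio clock; 2. align to the video clock; 3. align to an external clock. The flowchart above illustrates playback speed control and audio/video synchronization. Each packet read by av_read_frame is placed into a combined audio/video queue (which stores AVPacket data). The audio queue and the video queue then keep pulling packets from that combined queue, the two are synchronized along the way, and finally the picture is displayed and the audio is played.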

void getPacket() {

struct timespec time;

time.tv_sec = 10; // wait at most 10 seconds when the network is slow

time.tv_nsec = 0;

struct ThreadMsg msg;

while (true) {

memset(&msg, 0, sizeof(struct ThreadMsg));

msg.data = NULL;

// Wait for data from the network buffer

ThreadList::queueGet(playInstance->queue, &time, &msg);

if (msg.msgtype == -1) { // normal exit

ThreadList::queueAdd(playInstance->video_queue, NULL, -1);

ThreadList::queueAdd(playInstance->audio_queue, NULL, -1);

break;

}

if (msg.data == NULL) {

ThreadList::queueAdd(playInstance->video_queue, NULL, -1);

ThreadList::queueAdd(playInstance->audio_queue, NULL, -1);

playInstance->timeout_flag = 1;

break;

}

AVPacket *packet_p = (AVPacket *) msg.data;

if (packet_p->stream_index == playInstance->videoState->videoStream) {

ThreadList::queueAdd(playInstance->video_queue, packet_p, 1);

} else if (packet_p->stream_index == playInstance->videoState->audioStream) {

ThreadList::queueAdd(playInstance->audio_queue, packet_p, 1);

}

}

}

int queueGet(struct ThreadQueue *queue, const struct timespec *timeout,

struct ThreadMsg *msg) {

struct MsgList *firstrec;

int ret = 0;

struct timespec abstimeout;

if (queue == NULL || msg == NULL) {

return EINVAL;

}

if (timeout) {

struct timeval now;

gettimeofday(&now, NULL);

abstimeout.tv_sec = now.tv_sec + timeout->tv_sec;

abstimeout.tv_nsec = (now.tv_usec * 1000) + timeout->tv_nsec;

if (abstimeout.tv_nsec >= 1000000000) {

abstimeout.tv_sec++;

abstimeout.tv_nsec -= 1000000000;

}

}

pthread_mutex_lock(&queue->mutex);

/* Will wait until awakened by a signal or broadcast */

while (queue->first == NULL && ret != ETIMEDOUT) { //Need to loop to handle spurious wakeups

if (timeout) {

ret = pthread_cond_timedwait(&queue->cond, &queue->mutex, &abstimeout);

} else {

pthread_cond_wait(&queue->cond, &queue->mutex);

}

}

if (ret == ETIMEDOUT) {

pthread_mutex_unlock(&queue->mutex);

return ret;

}

firstrec = queue->first;

queue->first = queue->first->next;

queue->length--;

if (queue->first == NULL) {

queue->last = NULL; // we know this since we hold the lock

queue->length = 0;

}

msg->data = firstrec->msg.data;

msg->msgtype = firstrec->msg.msgtype;

msg->qlength = queue->length;

release_msglist(queue, firstrec);

pthread_mutex_unlock(&queue->mutex);

return 0;

}

We proceed in three steps: data integration, playback speed control, and audio/video synchronization.
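One detail of queueGet above deserves a closer look first: pthread_cond_timedwait expects an absolute deadline, not a relative timeout, so the relative value has to be added to the current time and the nanosecond field normalized. A minimal sketch of that arithmetic in plain C follows. deadline_after is a hypothetical helper, and it assumes both inputs are already normalized (tv_nsec below one second).

```c
#include <time.h>

#define NSEC_PER_SEC 1000000000L

/* Add a relative timeout to a "now" value and normalise the result so that
 * 0 <= tv_nsec < 1e9. Assumes both inputs are themselves normalised, so a
 * single carry is enough. */
static struct timespec deadline_after(struct timespec now,
                                      struct timespec timeout)
{
    struct timespec d;
    d.tv_sec = now.tv_sec + timeout.tv_sec;
    d.tv_nsec = now.tv_nsec + timeout.tv_nsec;
    if (d.tv_nsec >= NSEC_PER_SEC) {
        d.tv_sec += 1;
        d.tv_nsec -= NSEC_PER_SEC;
    }
    return d;
}
```

In queueGet the "now" value comes from gettimeofday, whose microseconds are multiplied by 1000 before being added.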

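- Data integration

Data integration means adding the audio data and the video data to their respective queues so that they are ready for playback. The data structures below hold the basic audio and video parameters, which we use for decoding, playback speed control, audio/video synchronization, and so on.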

typedef struct VideoState { // state used during decoding

AVFormatContext *pFormatCtx;

AVCodecContext *pVideoCodecCtx; // video decoder context

AVCodecContext *pAudioCodecCtx; // audio decoder context

AVCodec *pAudioCodec; // audio decoder

AVCodec *pVideoCodec; // video decoder

AVFrame *pVideoFrame; // decoded video frame, before conversion

AVFrame *pVideoFrameRgba; // video frame converted to RGBA

struct SwsContext *sws_ctx; // video conversion (scaling) context

void *pVideobuffer; // video buffer

AVFrame *pAudioFrame; // audio frame

struct SwrContext *swr_ctx; // audio resampling context

int sample_rate_src; // audio sample rate

int sample_fmt; // audio sample format

int sample_layout; // audio channel layout

int64_t video_start_time; // video start time

int64_t audio_start_time; // audio start time

double video_time_base; // video time base

double audio_time_base; // audio time base

int videoStream; // index of the video stream in AVFormatContext.streams

int audioStream; // index of the audio stream in AVFormatContext.streams

} VideoState;

typedef struct PlayInstance {

ANativeWindow *window; // NativeWindow, built from the surface passed in

int display_width; // display width

int display_height; // display height

int stop; // stop flag

int timeout_flag; // timeout flag

int disable_video;

VideoState *videoState;

// queues

struct ThreadQueue *queue; // combined audio/video packet queue

struct ThreadQueue *video_queue; // video packet queue

struct ThreadQueue *audio_queue; // audio packet queue

} PlayInstance;

- Playback speed control

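Playback speed can be controlled through the timing of the data taken from the audio and video queues. Separate threads pull data from the audio queue and the video queue, and the playback speed is then controlled according to the time base of that data. This is implemented together with audio/video synchronization.

- Audio/video synchronization

The code below is the video display routine, which runs in its own thread. The part to focus on is the delay-based synchronization. The packets it consumes were sorted from the combined audio/video queue into the audio queue and the video queue by getPacket.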

void video_thread() {

struct timespec time;

time.tv_sec = 10; // wait at most 10 seconds when the network is slow

time.tv_nsec = 0;

struct ThreadMsg msg;

int packet_count = 0;

while (true) {

if (playInstance->stop) {

break;

}

msg.data = NULL;

// Take a packet from the video queue, waiting if necessary

ThreadList::queueGet(playInstance->video_queue, &time, &msg);

if (msg.msgtype == -1) {

break;

}

if (msg.data == NULL) {

LOGE("video thread received no data\n");

break;

}

AVPacket *packet_p = (AVPacket *) msg.data;

AVPacket pavpacket = *packet_p;

packet_count++;

if (packet_count == 1) { // first video packet received

playInstance->videoState->video_start_time = av_gettime();

LOGE("video start time %lld\n", playInstance->videoState->video_start_time);

}

if (playInstance->disable_video) {

av_free_packet(packet_p);

av_free(msg.data);

continue;

}

ANativeWindow_Buffer windowBuffer;

// Delay-based synchronization: controls the playback speed.

int64_t pkt_pts = pavpacket.pts;

double show_time = pkt_pts * (playInstance->videoState->video_time_base);

int64_t show_time_micro = show_time * 1000000;

int64_t played_time = av_gettime() - playInstance->videoState->video_start_time;

int64_t delta_time = show_time_micro - played_time;

if (delta_time < -(0.2 * 1000000)) {

LOGE("video frame skipped\n");

// Release the packet before skipping it, otherwise it leaks

av_free_packet(packet_p);

av_free(msg.data);

continue;

} else if (delta_time > 0) {

av_usleep(delta_time);

}

int frame_finished = 0;

avcodec_decode_video2(playInstance->videoState->pVideoCodecCtx,

playInstance->videoState->pVideoFrame,

&frame_finished, &pavpacket);

if (frame_finished) {

sws_scale( // convert the decoded frame's color space: yuv420p to rgba8888

playInstance->videoState->sws_ctx,

(uint8_t const *const *) (playInstance->videoState->pVideoFrame)->data,

(playInstance->videoState->pVideoFrame)->linesize,

0,

playInstance->videoState->pVideoCodecCtx->height,

playInstance->videoState->pVideoFrameRgba->data,

playInstance->videoState->pVideoFrameRgba->linesize

);

if (!(playInstance->disable_video) &&

ANativeWindow_lock(playInstance->window, &windowBuffer, NULL) < 0) {

LOGE("cannot lock window");

} else if (!playInstance->disable_video) {

uint8_t *dst = (uint8_t *) windowBuffer.bits;

int dstStride = windowBuffer.stride * 4;

uint8_t *src = (playInstance->videoState->pVideoFrameRgba->data[0]);

int srcStride = playInstance->videoState->pVideoFrameRgba->linesize[0];

for (int h = 0; h < playInstance->display_height; h++) {

memcpy(dst + h * dstStride, src + h * srcStride, srcStride);

}

ANativeWindow_unlockAndPost(playInstance->window); // release the surface lock and post the updated buffer for display

}

}

// Release the packet on every path, whether or not a frame was decoded

av_free_packet(packet_p);

av_free(msg.data);

}

}

Let's look at the delay-synchronization code in detail:

// Delay-based synchronization

int64_t pkt_pts = pavpacket.pts;

double show_time = pkt_pts * (playInstance->videoState->video_time_base);

int64_t show_time_micro = show_time * 1000000;

int64_t played_time = av_gettime() - playInstance->videoState->video_start_time;

int64_t delta_time = show_time_micro - played_time;

if (delta_time < -(0.2 * 1000000)) {

LOGE("video frame skipped\n");

continue;

} else if (delta_time > 0) {

av_usleep(delta_time);

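}

This code handles audio/video synchronization. It uses an external clock as the reference and adjusts both audio and video against it; the audio path contains similar code that synchronizes on the delay. The reference here is wall-clock time, and the difference between the frame's presentation time and the elapsed time decides whether to skip the frame or wait. The synchronization implemented here is deliberately simple; in normal use, audio and video can be treated as synchronized as long as the difference stays within a certain range.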
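The decision made by this code can be written as a small pure function, which makes the thresholds easy to test. The sketch below is an illustration under assumptions: SyncAction and sync_decide are hypothetical names, the time base is passed as a rational (numerator and denominator) rather than the double used in the article so that the arithmetic stays exact, and a frame is delayed whenever it is early, as in the full video_thread listing. It does not guard against overflow for extremely large timestamps.

```c
#include <stdint.h>

typedef enum { SYNC_SHOW_NOW, SYNC_WAIT, SYNC_DROP } SyncAction;

#define DROP_THRESHOLD_US 200000 /* 0.2 s, the threshold used in the article */

/* pts:        presentation timestamp in time-base units
 * tb_num/den: time base as a fraction of a second per unit
 * elapsed_us: microseconds since playback started
 * On SYNC_WAIT, *wait_us receives how long to sleep; otherwise it is 0. */
static SyncAction sync_decide(int64_t pts, int tb_num, int tb_den,
                              int64_t elapsed_us, int64_t *wait_us)
{
    int64_t show_us = pts * 1000000 * tb_num / tb_den;
    int64_t delta = show_us - elapsed_us;

    *wait_us = 0;
    if (delta < -DROP_THRESHOLD_US)
        return SYNC_DROP;     /* more than 0.2 s late: skip the frame */
    if (delta > 0) {
        *wait_us = delta;     /* early: sleep until it is due */
        return SYNC_WAIT;
    }
    return SYNC_SHOW_NOW;     /* slightly late: show it immediately */
}
```

For the stream dumped earlier (1k tbn), a packet with pts 1000 is due one second after playback starts.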

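Because the human ear is sensitive and can pick up even slight glitches in sound, whereas skipped video frames are more easily tolerated thanks to persistence of vision, we recommend synchronizing audio and video against the audio clock.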
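Synchronizing to the audio clock can be sketched as follows. This is not the article's implementation, only an illustration in plain C: the audio position is derived from the number of sample frames already handed to the output device, and the video thread compares each frame's presentation time against it. AudioClock, audio_clock_us, and video_lead_us are hypothetical names.

```c
#include <stdint.h>

/* Hypothetical audio clock: position derived from samples already played. */
typedef struct {
    int64_t samples_played; /* per-channel sample frames sent to the device */
    int sample_rate;        /* in Hz */
} AudioClock;

/* Current audio position in microseconds. */
static int64_t audio_clock_us(const AudioClock *c)
{
    return c->samples_played * 1000000 / c->sample_rate;
}

/* Positive result: the video frame is early by that many microseconds.
 * Negative result: the video frame is late. */
static int64_t video_lead_us(int64_t video_pts_us, const AudioClock *c)
{
    return video_pts_us - audio_clock_us(c);
}
```

The returned lead could then drive the same skip-or-wait decision that the external-clock version makes, with the audio left to play at its natural rate.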

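iOS

Video rendering on iOS is somewhat more complex than on Android, because iOS has no counterpart to Android's SurfaceView that can be written to directly; the picture is drawn with OpenGL, which can make it harder to follow. Audio on iOS can be handled with AudioToolbox. The FFmpeg side is the same on Android and iOS and follows the same flow. Audio/video synchronization uses the same scheme, so it is not covered again for iOS.

1. Video rendering

As described in the Android part, av_read_frame is used to read each frame, and this works the same on iOS. Android natively supports drawing through a SurfaceView, while iOS does not and has to draw with OpenGL, so the two platforms diverge after av_read_frame. The main differences are in the following code: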

- (NSArray *) decodeFrames: (CGFloat) minDuration

{

if (_videoStream == -1 &&

_audioStream == -1)

return nil;

if (_formatCtx == nil) {

printf("AvFormatContext is nil");

return nil;

}

NSMutableArray *result = [NSMutableArray array];

AVPacket packet;

CGFloat decodedDuration = 0;

BOOL finished = NO;

while (!finished) {

if (av_read_frame(_formatCtx, &packet) < 0) {

_isEOF = YES;

break;

}

if (packet.stream_index ==_videoStream) {

int pktSize = packet.size;

while (pktSize > 0) {

int gotframe = 0;

int len = avcodec_decode_video2(_videoCodecCtx,

_videoFrame,

&gotframe,

&packet);

if (len < 0) {

LoggerVideo(0, @"decode video error, skip packet");

break;

}

if (gotframe) {

if (!_disableDeinterlacing &&

_videoFrame->interlaced_frame) {

avpicture_deinterlace((AVPicture*)_videoFrame,

(AVPicture*)_videoFrame,

_videoCodecCtx->pix_fmt,

_videoCodecCtx->width,

_videoCodecCtx->height);

}

KxVideoFrame *frame = [self handleVideoFrame];

if (frame) {

[result addObject:frame];

_position = frame.position;

decodedDuration += frame.duration;

if (decodedDuration > minDuration)

finished = YES;

}

char *buf = (char *)malloc(_videoFrame->width * _videoFrame->height * 3 / 2);

AVPicture *pict;

int w, h;

char *y, *u, *v;

pict = (AVPicture *)_videoFrame; // _videoFrame is the decoded AVFrame

w = _videoFrame->width;

h = _videoFrame->height;

y = buf;

u = y + w * h;

v = u + w * h / 4;

for (int i=0; i<h; i++)

memcpy(y + w * i, pict->data[0] + pict->linesize[0] * i, w);

for (int i=0; i<h/2; i++)

memcpy(u + w / 2 * i, pict->data[1] + pict->linesize[1] * i, w / 2);

for (int i=0; i<h/2; i++)

memcpy(v + w / 2 * i, pict->data[2] + pict->linesize[2] * i, w / 2);

[myview setVideoSize:_videoFrame->width height:_videoFrame->height];

[myview displayYUV420pData:buf width:_videoFrame->width height:_videoFrame->height];

free(buf);

}

if (0 == len)

break;

pktSize -= len;

}

} else if (packet.stream_index == _audioStream) {

int pktSize = packet.size;

while (pktSize > 0) {

int gotframe = 0;

int len = avcodec_decode_audio4(_audioCodecCtx,

_audioFrame,

&gotframe,

&packet);

if (len < 0) {

LoggerAudio(0, @"decode audio error, skip packet");

break;

}

if (gotframe) {

KxAudioFrame * frame = [self handleAudioFrame];

if (frame) {

[result addObject:frame];

if (_videoStream == -1) {

_position = frame.position;

decodedDuration += frame.duration;

if (decodedDuration > minDuration)

finished = YES;

}

}

}

if (0 == len)

break;

pktSize -= len;

}

} else if (packet.stream_index == _artworkStream) {

if (packet.size) {

KxArtworkFrame *frame = [[KxArtworkFrame alloc] init];

frame.picture = [NSData dataWithBytes:packet.data length:packet.size];

[result addObject:frame];

}

} else if (packet.stream_index == _subtitleStream) {

int pktSize = packet.size;

while (pktSize > 0) {

AVSubtitle subtitle;

int gotsubtitle = 0;

int len = avcodec_decode_subtitle2(_subtitleCodecCtx,

&subtitle,

&gotsubtitle,

&packet);

if (len < 0) {

LoggerStream(0, @"decode subtitle error, skip packet");

break;

}

if (gotsubtitle) {

KxSubtitleFrame *frame = [self handleSubtitle: &subtitle];

if (frame) {

[result addObject:frame];

}

avsubtitle_free(&subtitle);

}

if (0 == len)

break;

pktSize -= len;

}

}

av_free_packet(&packet);

}

return result;

}

This code is decodeFrames, which handles video frames and audio frames separately. The main video code is as follows:

if (packet.stream_index ==_videoStream) {

int pktSize = packet.size;

while (pktSize > 0) {

int gotframe = 0;

int len = avcodec_decode_video2(_videoCodecCtx,

_videoFrame,

&gotframe,

&packet);

if (len < 0) {

LoggerVideo(0, @"decode video error, skip packet");

break;

}

if (gotframe) {

if (!_disableDeinterlacing &&

_videoFrame->interlaced_frame) {

avpicture_deinterlace((AVPicture*)_videoFrame,

(AVPicture*)_videoFrame,

_videoCodecCtx->pix_fmt,

_videoCodecCtx->width,

_videoCodecCtx->height);

}

VideoFrame *frame = [self handleVideoFrame];

if (frame) {

[result addObject:frame];

_position = frame.position;

decodedDuration += frame.duration;

if (decodedDuration > minDuration)

finished = YES;

}

char *buf = (char *)malloc(_videoFrame->width * _videoFrame->height * 3 / 2);

AVPicture *pict;

int w, h;

char *y, *u, *v;

pict = (AVPicture *)_videoFrame; // _videoFrame is the decoded AVFrame

w = _videoFrame->width;

h = _videoFrame->height;

y = buf;

u = y + w * h;

v = u + w * h / 4;

for (int i=0; i<h; i++)

memcpy(y + w * i, pict->data[0] + pict->linesize[0] * i, w);

for (int i=0; i<h/2; i++)

memcpy(u + w / 2 * i, pict->data[1] + pict->linesize[1] * i, w / 2);

for (int i=0; i<h/2; i++)

memcpy(v + w / 2 * i, pict->data[2] + pict->linesize[2] * i, w / 2);

[myview setVideoSize:_videoFrame->width height:_videoFrame->height];

[myview displayYUV420pData:buf width:_videoFrame->width height:_videoFrame->height];

free(buf);

}

if (0 == len)

break;

pktSize -= len;

}

} else if (packet.stream_index == _audioStream) {

int pktSize = packet.size;

while (pktSize > 0) {

int gotframe = 0;

int len = avcodec_decode_audio4(_audioCodecCtx,

_audioFrame,

&gotframe,

&packet);

if (len < 0) {

LoggerAudio(0, @"decode audio error, skip packet");

break;

}

if (gotframe) {

AudioFrame * frame = [self handleAudioFrame];

if (frame) {

[result addObject:frame];

if (_videoStream == -1) {

_position = frame.position;

decodedDuration += frame.duration;

if (decodedDuration > minDuration)

finished = YES;

}

}

}

if (0 == len)

break;

pktSize -= len;

}

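}

This code is similar to the Android version: it packs the data into YUV format and hands it off for rendering. myview is a View whose main job is to render the data into a picture with OpenGL. Its main code: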

- (void)displayYUV420pData:(void *)data width:(NSInteger)w height:(NSInteger)h

{

@synchronized(self)

{

if (w != _videoW || h != _videoH)

{

[self setVideoSize:w height:h];

}

[EAGLContext setCurrentContext:_glContext];

glBindTexture(GL_TEXTURE_2D, _textureYUV[TEXY]);

glTexSubImage2D(GL_TEXTURE_2D, 0, 0, 0, w, h, GL_RED_EXT, GL_UNSIGNED_BYTE, data);

//[self debugGlError];

glBindTexture(GL_TEXTURE_2D, _textureYUV[TEXU]);

glTexSubImage2D(GL_TEXTURE_2D, 0, 0, 0, w/2, h/2, GL_RED_EXT, GL_UNSIGNED_BYTE, data + w * h);

// [self debugGlError];

glBindTexture(GL_TEXTURE_2D, _textureYUV[TEXV]);

glTexSubImage2D(GL_TEXTURE_2D, 0, 0, 0, w/2, h/2, GL_RED_EXT, GL_UNSIGNED_BYTE, data + w * h * 5 / 4);

//[self debugGlError];

[self render];

}

#ifdef DEBUG

GLenum err = glGetError();

if (err != GL_NO_ERROR)

{

printf("GL_ERROR=======>%d\n", err);

}

struct timeval nowtime;

gettimeofday(&nowtime, NULL);

if (nowtime.tv_sec != _time.tv_sec)

{

printf("video %d frame rate: %d\n", self.tag, _frameRate);

memcpy(&_time, &nowtime, sizeof(struct timeval));

_frameRate = 1;

}

else

{

_frameRate++;

}

#endif

}

The data is drawn with OpenGL. That is the main code for displaying the picture on iOS; the rest is similar to Android.
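The plane packing that decodeFrames performs before calling displayYUV420pData can be sketched in plain C. The helpers below are hypothetical (Yuv420Layout, yuv420_layout, pack_plane) and assume even width and height. They compute where the Y, U, and V planes start in a tightly packed YUV420p buffer, and copy a plane row by row while dropping the padding that linesize may add.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Byte offsets of the three planes in a tightly packed YUV420p buffer. */
typedef struct {
    size_t y, u, v, total;
} Yuv420Layout;

static Yuv420Layout yuv420_layout(int w, int h)
{
    Yuv420Layout l;
    size_t luma = (size_t)w * (size_t)h;
    size_t chroma = (size_t)(w / 2) * (size_t)(h / 2); /* each of U and V */
    l.y = 0;
    l.u = luma;
    l.v = luma + chroma;
    l.total = luma + 2 * chroma;
    return l;
}

/* Copy one plane row by row, keeping only `width` bytes of each source row
 * (linesize may be larger than width because of alignment padding). */
static void pack_plane(uint8_t *dst, const uint8_t *src,
                       int linesize, int width, int height)
{
    for (int i = 0; i < height; i++)
        memcpy(dst + (size_t)i * width, src + (size_t)i * linesize,
               (size_t)width);
}
```

For the 320x240 stream shown earlier, the U plane starts at byte 76800 and the V plane at byte 96000, for 115200 bytes in total, which matches the w * h * 3 / 2 allocation in decodeFrames.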

2. Audio rendering

The video rendering code above already included part of the audio handling. Its main method is handleAudioFrame, shown below:

- (AudioFrame *) handleAudioFrame

{

if (!_audioFrame->data[0])

return nil;

id<AudioManager> audioManager = [AudioManager audioManager];

const NSUInteger numChannels = audioManager.numOutputChannels;

NSInteger numFrames;

void * audioData;

if (_swrContext) {

const NSUInteger ratio = MAX(1, audioManager.samplingRate / _audioCodecCtx->sample_rate) *

MAX(1, audioManager.numOutputChannels / _audioCodecCtx->channels) * 2;

const int bufSize = av_samples_get_buffer_size(NULL,

audioManager.numOutputChannels,

_audioFrame->nb_samples * ratio,

AV_SAMPLE_FMT_S16,

1);

if (!_swrBuffer || _swrBufferSize < bufSize) {

_swrBufferSize = bufSize;

_swrBuffer = realloc(_swrBuffer, _swrBufferSize);

}

Byte *outbuf[2] = { _swrBuffer, 0 };

numFrames = swr_convert(_swrContext,

outbuf,

_audioFrame->nb_samples * ratio,

(const uint8_t **)_audioFrame->data,

_audioFrame->nb_samples);

if (numFrames < 0) {

LoggerAudio(0, @"fail resample audio");

return nil;

}

//int64_t delay = swr_get_delay(_swrContext, audioManager.samplingRate);

//if (delay > 0)

// LoggerAudio(0, @"resample delay %lld", delay);

audioData = _swrBuffer;

} else {

if (_audioCodecCtx->sample_fmt != AV_SAMPLE_FMT_S16) {

NSAssert(false, @"bucheck, audio format is invalid");

return nil;

}

audioData = _audioFrame->data[0];

numFrames = _audioFrame->nb_samples;

}

const NSUInteger numElements = numFrames * numChannels;

NSMutableData *data = [NSMutableData dataWithLength:numElements * sizeof(float)];

float scale = 1.0 / (float)INT16_MAX ;

vDSP_vflt16((SInt16 *)audioData, 1, data.mutableBytes, 1, numElements);

vDSP_vsmul(data.mutableBytes, 1, &scale, data.mutableBytes, 1, numElements);

AudioFrame *frame = [[AudioFrame alloc] init];

frame.position = av_frame_get_best_effort_timestamp(_audioFrame) * _audioTimeBase;

frame.duration = av_frame_get_pkt_duration(_audioFrame) * _audioTimeBase;

frame.samples = data;

if (frame.duration == 0) {

// sometimes ffmpeg can't determine the duration of audio frame

// especially of wma/wmv format

// so in this case must compute duration

frame.duration = frame.samples.length / (sizeof(float) * numChannels * audioManager.samplingRate);

}

#if DEBUG

LoggerAudio(2, @"AFD: %.4f %.4f | %.4f ",

frame.position,

frame.duration,

frame.samples.length / (8.0 * 44100.0));

#endif

return frame;

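}

handleAudioFrame mainly assembles and converts the data (resampling, format conversion, and so on), stores the result in an array, and finally hands it over to AudioToolbox. Let's continue: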

func enableAudio(on: Bool) {

let audioManager = AudioManager.audioManager()

if on && decoder.validAudio {

// closure

audioManager.outputBlock = {(outData: UnsafeMutablePointer<Float>,numFrames: UInt32, numChannels: UInt32) -> Void in

self.audioCallbackFillData(outData, numFrames: Int(numFrames), numChannels: Int(numChannels))

}

audioManager.play()

}else{

audioManager.pause()

audioManager.outputBlock = nil

}

}

func audioCallbackFillData(outData: UnsafeMutablePointer<Float>, numFrames: Int, numChannels: Int) {

var numFrames = numFrames

var weakOutData = outData

if buffered {

memset(weakOutData, 0, numFrames * numChannels * sizeof(Float))

return

}

autoreleasepool {

while numFrames > 0 {

if currentAudioFrame == nil {

dispatch_sync(lockQueue) {

if let count = self.audioFrames?.count {

if count > 0 {

let frame: AudioFrame = self.audioFrames![0] as! AudioFrame

self.audioFrames!.removeObjectAtIndex(0)

self.moviePosition = frame.position

self.bufferedDuration -= Float(frame.duration)

self.currentAudioFramePos = 0

self.currentAudioFrame = frame.samples

}

}

}

}

if (currentAudioFrame != nil) {

let bytes = currentAudioFrame.bytes + currentAudioFramePos

let bytesLeft: Int = (currentAudioFrame.length - currentAudioFramePos)

let frameSizeOf: Int = numChannels * sizeof(Float)

let bytesToCopy: Int = min(numFrames * frameSizeOf, bytesLeft)

let framesToCopy: Int = bytesToCopy / frameSizeOf

memcpy(weakOutData, bytes, bytesToCopy)

numFrames -= framesToCopy

weakOutData = weakOutData.advancedBy(framesToCopy * numChannels)

if bytesToCopy < bytesLeft {

self.currentAudioFramePos += bytesToCopy

}

else {

self.currentAudioFrame = nil

}

}else{

memset(weakOutData, 0, numFrames * numChannels * sizeof(Float))

//LoggerStream(1, @"silence audio");

self.debugAudioStatus = 3

self.debugAudioStatusTS = NSDate()

break

}

}

}

}

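This is Swift code. The iOS project is a mixed build of Swift, Objective-C, and C++, which is a good opportunity to get familiar with how Swift interoperates with Objective-C and C++. enableAudio obtains the audioManager instance, registers the callback, and starts or pauses playback. audioManager is a singleton that wraps AudioToolbox. The code below activates the AudioSession (initializing audio) and deactivates it.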

- (BOOL) activateAudioSession

{

if (!_activated) {

if (!_initialized) {

if (checkError(AudioSessionInitialize(NULL,

kCFRunLoopDefaultMode,

sessionInterruptionListener,

(__bridge void *)(self)),

"Couldn't initialize audio session"))

return NO;

_initialized = YES;

}

if ([self checkAudioRoute] &&

[self setupAudio]) {

_activated = YES;

}

}

return _activated;

}

- (void) deactivateAudioSession

{

if (_activated) {

[self pause];

checkError(AudioUnitUninitialize(_audioUnit),

"Couldn't uninitialize the audio unit");

/*

fails with error (-10851) ?

checkError(AudioUnitSetProperty(_audioUnit,

kAudioUnitProperty_SetRenderCallback,

kAudioUnitScope_Input,

0,

NULL,

0),

"Couldn't clear the render callback on the audio unit");

*/

checkError(AudioComponentInstanceDispose(_audioUnit),

"Couldn't dispose the output audio unit");

checkError(AudioSessionSetActive(NO),

"Couldn't deactivate the audio session");

checkError(AudioSessionRemovePropertyListenerWithUserData(kAudioSessionProperty_AudioRouteChange,

sessionPropertyListener,

(__bridge void *)(self)),

"Couldn't remove audio session property listener");

checkError(AudioSessionRemovePropertyListenerWithUserData(kAudioSessionProperty_CurrentHardwareOutputVolume,

sessionPropertyListener,

(__bridge void *)(self)),

"Couldn't remove audio session property listener");

_activated = NO;

}

}

- (BOOL) setupAudio

{

// --- Audio Session Setup ---

UInt32 sessionCategory = kAudioSessionCategory_MediaPlayback;

//UInt32 sessionCategory = kAudioSessionCategory_PlayAndRecord;

if (checkError(AudioSessionSetProperty(kAudioSessionProperty_AudioCategory,

sizeof(sessionCategory),

&sessionCategory),

"Couldn't set audio category"))

return NO;

if (checkError(AudioSessionAddPropertyListener(kAudioSessionProperty_AudioRouteChange,

sessionPropertyListener,

(__bridge void *)(self)),

"Couldn't add audio session property listener"))

{

// just warning

}

if (checkError(AudioSessionAddPropertyListener(kAudioSessionProperty_CurrentHardwareOutputVolume,

sessionPropertyListener,

(__bridge void *)(self)),

"Couldn't add audio session property listener"))

{

// just warning

}

// Set the buffer size, this will affect the number of samples that get rendered every time the audio callback is fired

// A small number will get you lower latency audio, but will make your processor work harder

#if !TARGET_IPHONE_SIMULATOR

Float32 preferredBufferSize = 0.0232;

if (checkError(AudioSessionSetProperty(kAudioSessionProperty_PreferredHardwareIOBufferDuration,

sizeof(preferredBufferSize),

&preferredBufferSize),

"Couldn't set the preferred buffer duration")) {

// just warning

}

#endif

if (checkError(AudioSessionSetActive(YES),

"Couldn't activate the audio session"))

return NO;

[self checkSessionProperties];

// ----- Audio Unit Setup -----

// Describe the output unit.

AudioComponentDescription description = {0};

description.componentType = kAudioUnitType_Output;

description.componentSubType = kAudioUnitSubType_RemoteIO;

description.componentManufacturer = kAudioUnitManufacturer_Apple;

// Get component

AudioComponent component = AudioComponentFindNext(NULL, &description);

if (checkError(AudioComponentInstanceNew(component, &_audioUnit),

"Couldn't create the output audio unit"))

return NO;

UInt32 size;

// Check the output stream format

size = sizeof(AudioStreamBasicDescription);

if (checkError(AudioUnitGetProperty(_audioUnit,

kAudioUnitProperty_StreamFormat,

kAudioUnitScope_Input,

0,

&_outputFormat,

&size),

"Couldn't get the hardware output stream format"))

return NO;

_outputFormat.mSampleRate = _samplingRate;

if (checkError(AudioUnitSetProperty(_audioUnit,

kAudioUnitProperty_StreamFormat,

kAudioUnitScope_Input,

0,

&_outputFormat,

size),

"Couldn't set the hardware output stream format")) {

// just warning

}

_numBytesPerSample = _outputFormat.mBitsPerChannel / 8;

_numOutputChannels = _outputFormat.mChannelsPerFrame;

LoggerAudio(2, @"Current output bytes per sample: %ld", _numBytesPerSample);

LoggerAudio(2, @"Current output num channels: %ld", _numOutputChannels);

// Slap a render callback on the unit

AURenderCallbackStruct callbackStruct;

callbackStruct.inputProc = renderCallback; // register the render callback, which is used to fetch the audio data

callbackStruct.inputProcRefCon = (__bridge void *)(self);

if (checkError(AudioUnitSetProperty(_audioUnit,

kAudioUnitProperty_SetRenderCallback,

kAudioUnitScope_Input,

0,

&callbackStruct,

sizeof(callbackStruct)),

"Couldn't set the render callback on the audio unit"))

return NO;

if (checkError(AudioUnitInitialize(_audioUnit),

"Couldn't initialize the audio unit"))

return NO;

return YES;

}

The code that actually renders the data:

- (BOOL) renderFrames: (UInt32) numFrames

ioData: (AudioBufferList *) ioData

{

for (int iBuffer=0; iBuffer < ioData->mNumberBuffers; ++iBuffer) {

memset(ioData->mBuffers[iBuffer].mData, 0, ioData->mBuffers[iBuffer].mDataByteSize);

}

if (_playing && _outputBlock ) {

// Collect data to render from the callbacks

_outputBlock(_outData, numFrames, _numOutputChannels);

// Put the rendered data into the output buffer

if (_numBytesPerSample == 4) // then we've already got floats

{

float zero = 0.0;

for (int iBuffer=0; iBuffer < ioData->mNumberBuffers; ++iBuffer) {

int thisNumChannels = ioData->mBuffers[iBuffer].mNumberChannels;

for (int iChannel = 0; iChannel < thisNumChannels; ++iChannel) {

vDSP_vsadd(_outData+iChannel, _numOutputChannels, &zero, (float *)ioData->mBuffers[iBuffer].mData, thisNumChannels, numFrames);

}

}

}

else if (_numBytesPerSample == 2) // then we need to convert Float -> SInt16 (and also scale)

{

float scale = (float)INT16_MAX;

vDSP_vsmul(_outData, 1, &scale, _outData, 1, numFrames*_numOutputChannels);

for (int iBuffer=0; iBuffer < ioData->mNumberBuffers; ++iBuffer) {

int thisNumChannels = ioData->mBuffers[iBuffer].mNumberChannels;

for (int iChannel = 0; iChannel < thisNumChannels; ++iChannel) {

vDSP_vfix16(_outData+iChannel, _numOutputChannels, (SInt16 *)ioData->mBuffers[iBuffer].mData+iChannel, thisNumChannels, numFrames);

}

}

}

}

return noErr;

}
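Both audio paths above scale between 16-bit integers and floats: handleAudioFrame converts SInt16 samples to floats, and renderFrames converts floats back to SInt16 when the hardware uses 2 bytes per sample. The same arithmetic without vDSP looks roughly like this in plain C. The function names are hypothetical, and the clamping in float_to_s16 is our addition; the article's code does not clamp.

```c
#include <stddef.h>
#include <stdint.h>

/* SInt16 -> float in roughly [-1, 1], using the 1 / INT16_MAX scale. */
static void s16_to_float(const int16_t *in, float *out, size_t n)
{
    const float scale = 1.0f / (float)INT16_MAX;
    for (size_t i = 0; i < n; i++)
        out[i] = (float)in[i] * scale;
}

/* float -> SInt16: scale by INT16_MAX, clamp to the 16-bit range, then
 * truncate toward zero. */
static void float_to_s16(const float *in, int16_t *out, size_t n)
{
    for (size_t i = 0; i < n; i++) {
        float v = in[i] * (float)INT16_MAX;
        if (v > 32767.0f)
            v = 32767.0f;
        if (v < -32768.0f)
            v = -32768.0f;
        out[i] = (int16_t)v;
    }
}
```

Clamping matters once samples have been mixed or amplified, because values outside [-1, 1] would otherwise fall outside the 16-bit range.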

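Summary

This article described the logic of implementing playback with FFmpeg on both Android and iOS, with platform-specific handling on each side. On Android, video is displayed through NativeWindow (a surface) and audio is played with OpenSL ES. Audio/video synchronization is based on an external clock, with both audio and video adjusted against it. On iOS, video is rendered with OpenGL and audio is played with AudioToolbox; synchronization works the same way as on Android. The FFmpeg logic is identical on both platforms. How to use OpenGL for video rendering on iOS was not covered in depth; interested readers can explore it on their own.

Note: the complete project code will be published once it has been tidied up.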

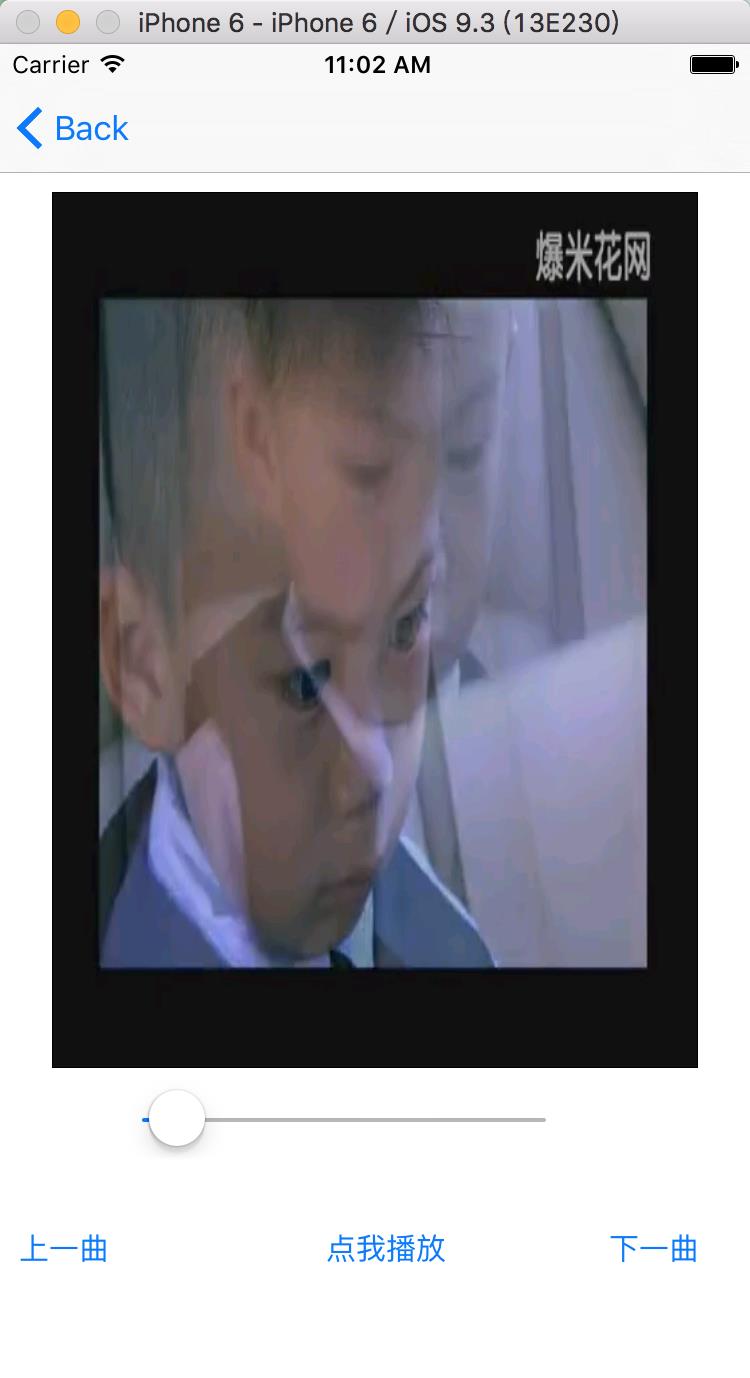

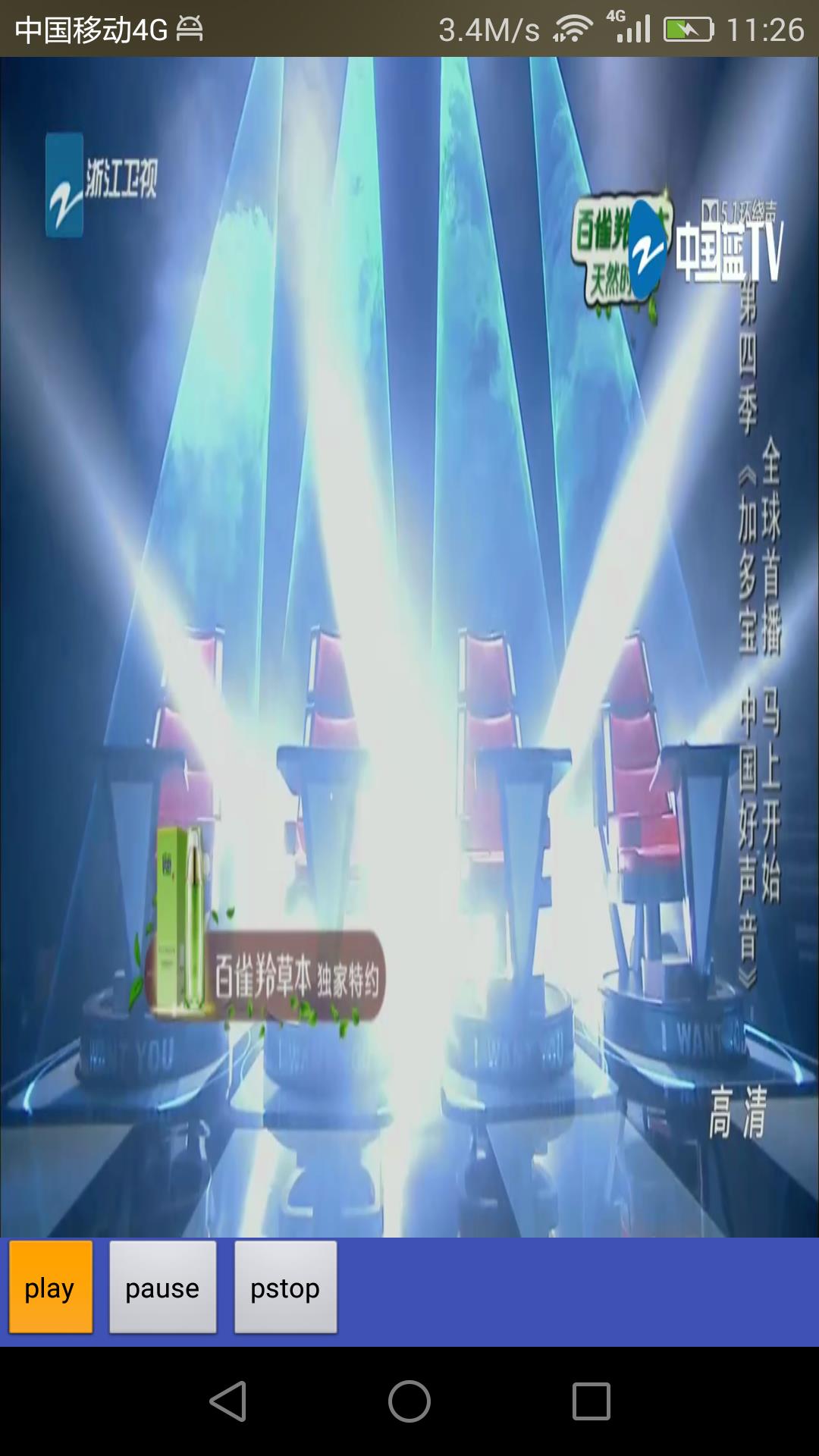

Finally, the two images above are screenshots of playback.
