PyCharm 远程连接linux中Python 运行pyspark

Posted 来碗酸梅汤

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了PyCharm 远程连接linux中Python 运行pyspark相关的知识,希望对你有一定的参考价值。

PySpark in PyCharm on a remote server

1、确保remote端Python、spark安装正确

2、remote端安装、设置

vi /etc/profile

添加一行:

export PYTHONPATH=$SPARK_HOME/python/:$SPARK_HOME/python/lib/py4j-0.10.4-src.zip

PYTHONPATH=$SPARK_HOME/python/:$SPARK_HOME/python/lib/py4j-0.8.2.1-src.zip

source /etc/profile

# 安装pip 和 py4j

下载pip-7.1.2.tar

tar -xvf pip-7.1.2.tar

cd pip-7.1.2

python setup.py install

pip install py4j

# 避免ssh时tty检测

cd /etc

chmod 640 sudoers

vi /etc/sudoers

#Default requiretty

3、本地Pycharm设置

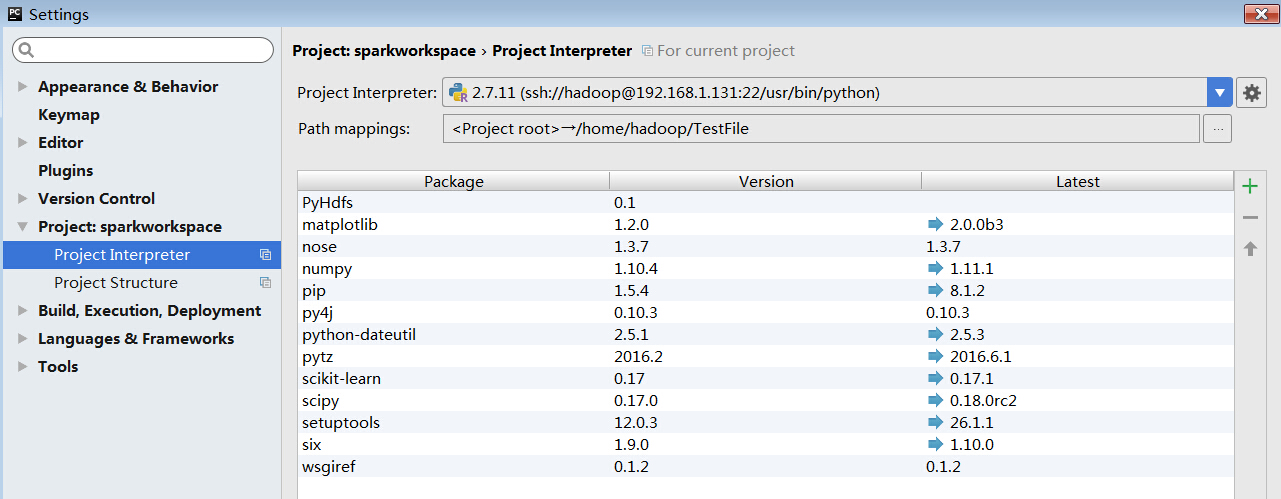

File > Settings > Project Interpreter:

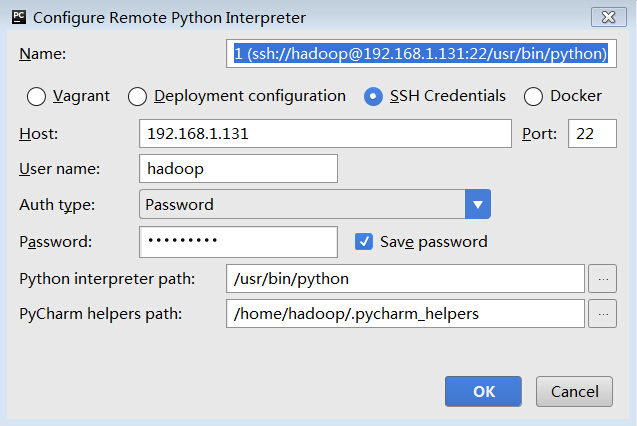

Project Interpreter > Add remote(前提:remote端python安装成功):

注意,这里的Python路径为python interpreter path,如果python安装在其它路径,要把路径改过来

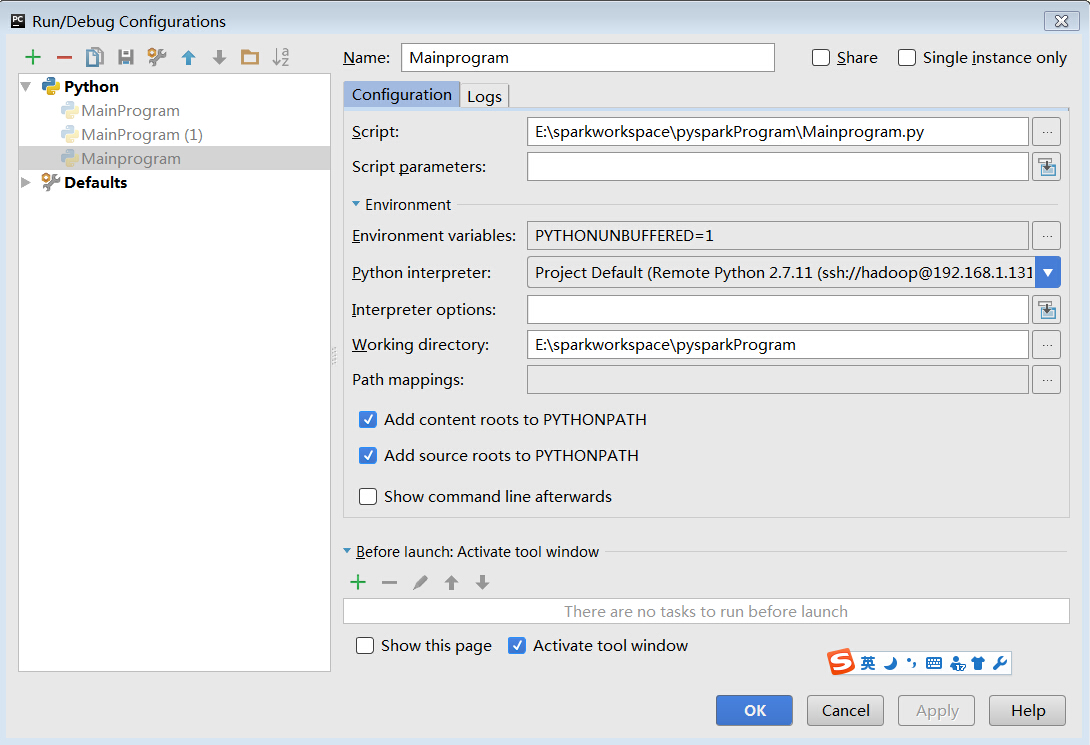

Run > Edit Configuration (前提:虚拟机中共享本地目录成功):

此处我配置映射是在Tools中进行的

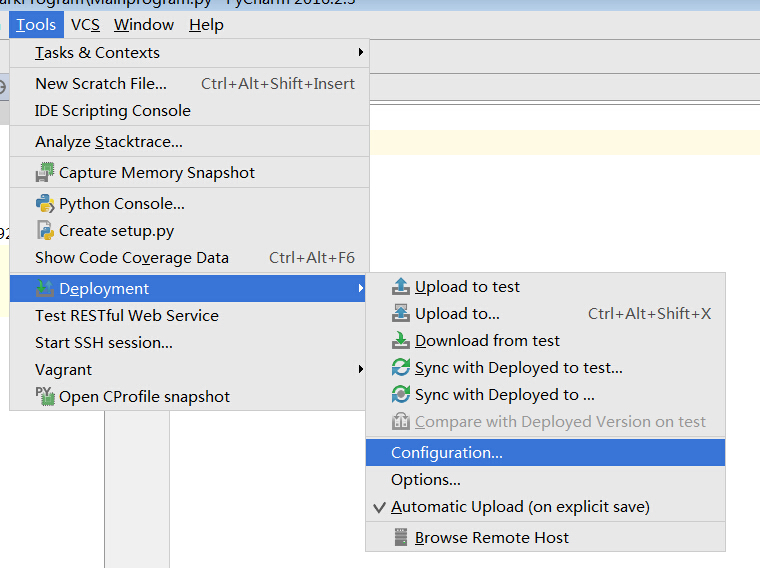

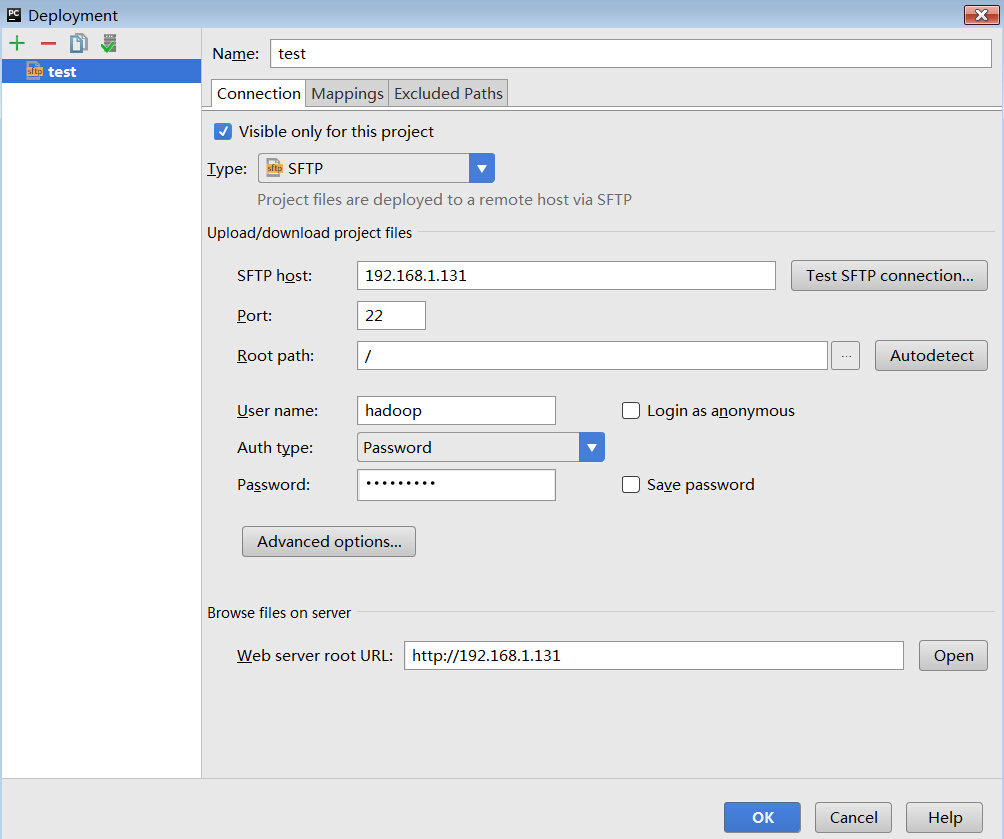

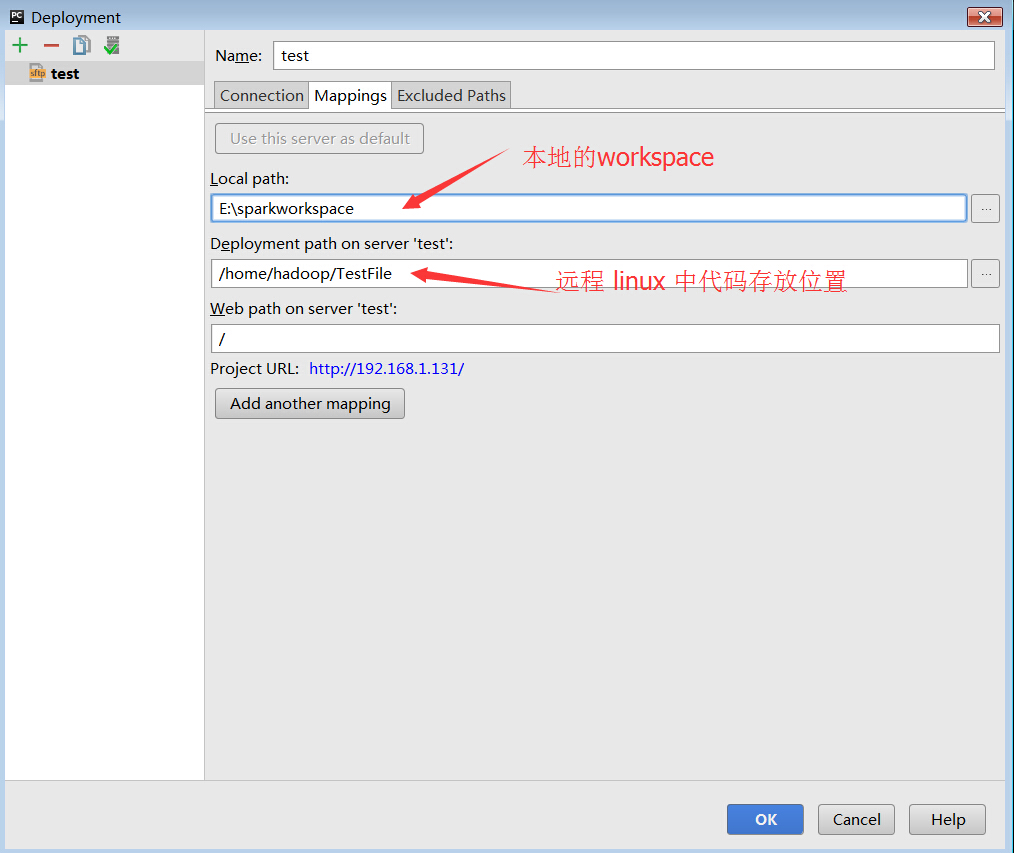

Tools > Dployment > Configuration

4、测试

import os import sys os.environ[\'SPARK_HOME\'] = \'/root/spark-1.4.0-bin-hadoop2.6\' sys.path.append("/root/spark-1.4.0-bin-hadoop2.6/python") try: from pyspark import SparkContext from pyspark import SparkConf print ("Successfully imported Spark Modules") except ImportError as e: print ("Can not import Spark Modules", e) sys.exit(1)

Result: ssh://hadoop@192.168.1.131:22/usr/bin/python -u /home/hadoop/TestFile/pysparkProgram/Mainprogram.py

Successfully imported Spark Modules Process finished with exit code 0

或者:

import sys sys.path.append("/root/programs/spark-1.4.0-bin-hadoop2.6/python") try: import numpy as np import scipy.sparse as sps from pyspark.mllib.linalg import Vectors dv1 = np.array([1.0, 0.0, 3.0]) dv2 = [1.0, 0.0, 3.0] sv1 = Vectors.sparse(3, [0, 2], [1.0, 3.0]) sv2 = sps.csc_matrix((np.array([1.0, 3.0]), np.array([0, 2]), np.array([0, 2])), shape=(3, 1)) print(sv2) except ImportError as e: print("Can not import Spark Modules", e) sys.exit(1)

Result ssh://hadoop@192.168.1.131:22/usr/bin/python -u /home/hadoop/TestFile/pysparkProgram/Mainprogram.py

(0, 0) 1.0 (2, 0) 3.0 Process finished with exit code 0

参考:

https://edumine.wordpress.com/2015/08/14/pyspark-in-pycharm/

http://renien.github.io/blog/accessing-pyspark-pycharm/

http://www.tuicool.com/articles/MJnYJb

参照:

http://blog.csdn.net/u011196209/article/details/9934721

以上是关于PyCharm 远程连接linux中Python 运行pyspark的主要内容,如果未能解决你的问题,请参考以下文章