Flume + HBase: Log Data Collection and Storage

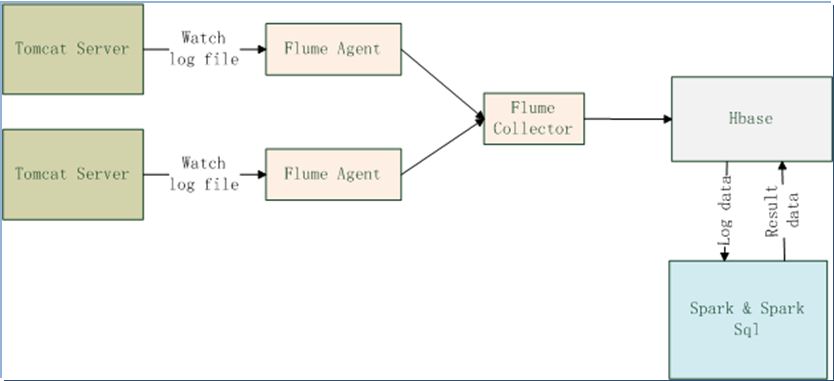

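Anyone who has looked into Flume has probably seen this diagram or one very much like it. This post implements part of what the diagram shows. (Because of limited resources, everything currently runs on a single machine.)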

flume-agent configuration file

    # flume-agent.conf: source agent, tails the log file and forwards events over Avro
    source_agent.sources = server
    source_agent.sinks = avroSink
    source_agent.channels = memoryChannel

    # Exec source: follow the application log
    source_agent.sources.server.type = exec
    source_agent.sources.server.command = tail -F /data/hudonglogs/self/channel.log
    source_agent.sources.server.channels = memoryChannel

    # In-memory channel between source and sink
    source_agent.channels.memoryChannel.type = memory
    source_agent.channels.memoryChannel.capacity = 1000
    source_agent.channels.memoryChannel.transactionCapacity = 100

    # Avro sink: send events to the collector
    source_agent.sinks.avroSink.type = avro
    source_agent.sinks.avroSink.hostname = 127.0.0.1
    source_agent.sinks.avroSink.port = 41414
    source_agent.sinks.avroSink.channel = memoryChannel

flume-hbase configuration file

    # flume-hbase.conf: collector agent, receives Avro events and writes them to HBase
    collector.sources = avroSource
    collector.sinks = hbaseSink
    collector.channels = memChannel

    # Avro source: listens for events from the source agent
    collector.sources.avroSource.type = avro
    collector.sources.avroSource.bind = 127.0.0.1
    collector.sources.avroSource.port = 41414
    collector.sources.avroSource.channels = memChannel

    # In-memory channel between source and sink
    collector.channels.memChannel.type = memory
    collector.channels.memChannel.capacity = 1000

    # Asynchronous HBase sink: table logs, column family content
    collector.sinks.hbaseSink.type = asynchbase
    collector.sinks.hbaseSink.channel = memChannel
    collector.sinks.hbaseSink.table = logs
    collector.sinks.hbaseSink.columnFamily = content
    collector.sinks.hbaseSink.batchSize = 5

Create the logs table with the content column family in HBase

create 'logs', 'content'

Start Flume

nohup $FLUME_HOME/bin/flume-ng agent -c $FLUME_HOME/conf -f $FLUME_HOME/conf/flume-hbase.conf -n collector &

nohup $FLUME_HOME/bin/flume-ng agent -c $FLUME_HOME/conf -f $FLUME_HOME/conf/flume-agent.conf -n source_agent &

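Note: the order of these two commands matters. Start the collector first; otherwise the source agent reports an error, most likely because its Avro sink cannot connect to port 41414 until the collector's Avro source is listening.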

After log lines have been written to the file for a while, use the scan command in the HBase shell to check the table:

scan 'logs'

    ROW                                          COLUMN+CELL
     default09626ade-3f37-49c3-a930-270ef7119dd3 column=content:pCol, timestamp=1454480049481, value=2016-02-03 12:19:36,260 com.hudong.test.Test01 [main] [INFO]-(Test01.java:22) 1454473176260
     default09ba09cc-326c-465c-90f9-533c923923a0 column=content:pCol, timestamp=1454480074182, value=2016-02-03 14:14:22,179 com.hudong.test.Test01 [main] [INFO]-(Test01.java:22) 1454480062179
     default0c199142-05f8-49c6-b341-8158bb445861 column=content:pCol, timestamp=1454480113199, value=2016-02-03 14:15:07,196 com.hudong.test.Test01 [main] [INFO]-(Test01.java:22) 1454480107196
     default0cbb050e-3756-44f2-8c66-419a6e52fd97 column=content:pCol, timestamp=1454480049493, value=2016-02-03 12:19:42,263 com.hudong.test.Test01 [main] [INFO]-(Test01.java:22) 1454473182263
     default0f8eb841-7ccd-4abf-9dcf-4fc26d3f55f4 column=content:pCol, timestamp=1454480079188, value=2016-02-03 14:14:34,185 com.hudong.test.Test01 [main] [INFO]-(Test01.java:22) 1454480074185
     default133b7acf-ca3c-46e9-90a8-27d553721900 column=content:pCol, timestamp=1454480121214, value=2016-02-03 14:15:18,200 com.hudong.test.Test01 [main] [INFO]-(Test01.java:22) 1454480118200
     default13e2a5bc-f79a-4ffe-86a0-bf0ea37d8f35 column=content:pCol, timestamp=1454480139209, value=2016-02-03 14:15:35,206 com.hudong.test.Test01 [main] [INFO]-(Test01.java:22) 1454480135206
     default19cae7ca-87ba-42b5-9f13-136c89c0c20e column=content:pCol, timestamp=1454480094196, value=2016-02-03 14:14:50,190 com.hudong.test.Test01 [main] [INFO]-(Test01.java:22) 1454480090190
     default1aba06a5-973d-403a-91de-afacc9536172 column=content:pCol, timestamp=1454480139209, value=2016-02-03 14:15:34,206 com.hudong.test.Test01 [main] [INFO]-(Test01.java:22) 1454480134206
     default1b240a9c-2b35-4649-b199-29a27a0c4db2 column=content:pCol, timestamp=1454480049481, value=2016-02-03 12:19:40,262 com.hudong.test.Test01 [main] [INFO]-(Test01.java:22) 1454473180262
     default1d92a0e3-b427-4958-bae7-c7bb1e9d20ef column=content:pCol, timestamp=1454480106197, value=2016-02-03 14:14:59,193 com.hudong.test.Test01 [main] [INFO]-(Test01.java:22) 1454480099193
     default25ef2b1b-6d6c-4431-885e-c2e4d2297f59 column=content:pCol, timestamp=1454480085201, value=2016-02-03 14:14:42,187 com.hudong.test.Test01 [main] [INFO]-(Test01.java:22) 1454480082187
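To reproduce a scan like this, something has to keep appending lines to the file that the exec source tails. The following is a minimal Java sketch, not the author's original Test01 program: the class name LogGenerator, its methods, and the ten-line loop are all hypothetical, and the line layout is simply copied from the values stored in HBase (a formatted timestamp, the logger name, thread, level, source location, and the epoch time in milliseconds).

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardOpenOption;
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.Locale;

// Hypothetical helper: appends test log lines to the file tailed by source_agent.
public class LogGenerator {

    // Build one line in the same layout as the values seen in the scan output.
    // The timestamp is rendered in the JVM's default time zone.
    public static String formatLine(long millis) {
        SimpleDateFormat fmt = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss,SSS", Locale.ROOT);
        return fmt.format(new Date(millis))
                + " com.hudong.test.Test01 [main] [INFO]-(Test01.java:22) " + millis;
    }

    // Append a single line, creating the file if it does not exist yet.
    public static void appendLine(Path file, long millis) throws IOException {
        byte[] bytes = (formatLine(millis) + "\n").getBytes(StandardCharsets.UTF_8);
        Files.write(file, bytes, StandardOpenOption.CREATE, StandardOpenOption.APPEND);
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        if (args.length < 1) {
            System.err.println("usage: java LogGenerator <log-file>");
            return;
        }
        Path file = Paths.get(args[0]);
        // Write ten lines, one per second, so tail -F picks them up gradually.
        for (int i = 0; i < 10; i++) {
            appendLine(file, System.currentTimeMillis());
            Thread.sleep(1000);
        }
    }
}
```

Run it with the tailed path as the argument, for example /data/hudonglogs/self/channel.log from the agent configuration. With batchSize = 5 on the HBase sink, new rows may only become visible after several lines have been written.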

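Additionally:

If the stored log format is not what you want, Flume supports custom serializers that can clean up each log event before it is written. Implement the AsyncHbaseEventSerializer interface, rebuild the Flume package, and copy the resulting jar into the lib folder under the Flume home directory. That is all it takes.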

For an implementation of the interface, see this blog post: http://blog.csdn.net/yaoyasong/article/details/39400829
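As an illustration of the cleaning step only, here is a minimal Java sketch that splits one log line of the format stored above into named fields. It is not the implementation from the linked post and does not implement AsyncHbaseEventSerializer itself: the class name LogLineParser and the field names (time, logger, thread, level, location, message, raw) are hypothetical. A custom serializer could call something like this on the event body and emit one cell per field instead of a single pCol cell.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper: turns one raw log line into column-name/value pairs.
public class LogLineParser {

    // Layout: <timestamp> <logger> [<thread>] [<level>]-(<file:line>) <message>
    private static final Pattern LINE = Pattern.compile(
            "^(\\d{4}-\\d{2}-\\d{2} \\d{2}:\\d{2}:\\d{2},\\d{3}) (\\S+) "
            + "\\[([^\\]]+)\\] \\[([^\\]]+)\\]-\\(([^)]+)\\) (.*)$");

    // Returns the parsed fields in a stable order. Lines that do not match
    // the expected layout are kept whole under the "raw" key.
    public static Map<String, String> parse(String line) {
        Map<String, String> columns = new LinkedHashMap<>();
        Matcher m = LINE.matcher(line);
        if (!m.matches()) {
            columns.put("raw", line);
            return columns;
        }
        columns.put("time", m.group(1));
        columns.put("logger", m.group(2));
        columns.put("thread", m.group(3));
        columns.put("level", m.group(4));
        columns.put("location", m.group(5));
        columns.put("message", m.group(6));
        return columns;
    }

    public static void main(String[] args) {
        String sample = "2016-02-03 12:19:36,260 com.hudong.test.Test01 "
                + "[main] [INFO]-(Test01.java:22) 1454473176260";
        // Print each field on its own line.
        for (Map.Entry<String, String> e : parse(sample).entrySet()) {
            System.out.println(e.getKey() + " = " + e.getValue());
        }
    }
}
```

Keeping the parsing separate from the Flume interface makes it easy to test without a running agent. Once a serializer class wraps it, the sink also has to be told to use that class through its serializer property (collector.sinks.hbaseSink.serializer in the configuration above), since the sink otherwise falls back to its default serializer, which produces the pCol column and the default-prefixed row keys seen in the scan output.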
