Paper | Dynamic Residual Dense Network for Image Denoising

Posted by ryanxing


Published in Sensors, 2019.

Abstract

Deep convolutional neural networks have achieved great performance on various image restoration tasks. Specifically, the residual dense network (RDN) has achieved great results on image noise reduction by cascading multiple residual dense blocks (RDBs) to make full use of the hierarchical feature. However, the RDN only performs well in denoising on a single noise level, and the computational cost of the RDN increases significantly with the increase in the number of RDBs, and this only slightly improves the effect of denoising. To overcome this, we propose the dynamic residual dense network (DRDN), a dynamic network that can selectively skip some RDBs based on the noise amount of the input image. Moreover, the DRDN allows modifying the denoising strength to manually get the best outputs, which can make the network more effective for real-world denoising. Our proposed DRDN can perform better than the RDN and reduces the computational cost by 40-50%. Furthermore, we surpass the state-of-the-art CBDNet by 1.34 dB on the real-world noise benchmark.

Conclusion

In this paper, we propose a DRDN model for noise reduction of real-world images. Our proposed DRDN makes full use of the properties of residual connection and deep supervision. We present a method to denoise images with different noise amounts and simultaneously reduce the average computational cost. The core idea of our method is to dynamically change the number of blocks involved in denoising to change the denoising strength via sequential decision. Moreover, our method can manually adjust the denoising strength of the model without fine-tuning the parameters.

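Key points

RDN has two limitations. First, it cannot perform blind denoising. Second, as the number of RDBs grows, the computational cost rises sharply while the performance gain stays small.

This paper proposes a dynamic RDN (DRDN) that selectively skips some RDBs depending on the noise level. DRDN also lets the user adjust the denoising strength manually.

DRDN not only outperforms RDN but also cuts the computational load by 40-50%.

Background

We first review RDN and RDB. RDN contains many shortcut connections at the RDB level. The RDN authors explain this as giving successive RDBs a contiguous memory. Recalling Prof. Gao Huang's academic talk, however, we know that this kind of local shortcut design makes a cascaded model more robust: in essence it adds redundancy to the network and improves generalization.

The authors therefore argue that some RDBs in an RDN may contribute little to a given task and can be skipped selectively.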

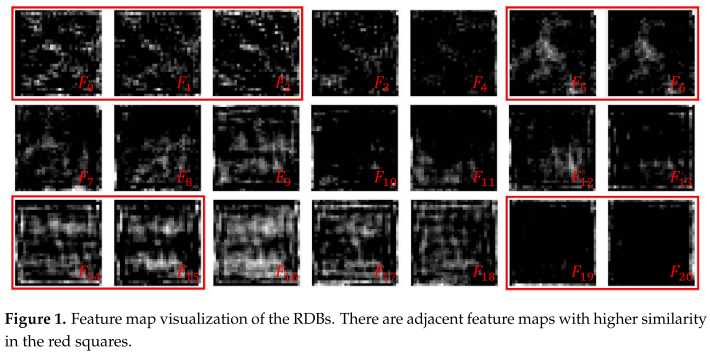

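Most valuably, by visualizing the RDN, the authors found that the outputs of adjacent RDBs look very similar, as the figure shows.

Here, the visualization of each layer is the mean over all channels of that layer.

This suggests that we can skip some RDBs with an identity mapping (a shortcut connection), reaching similar performance at a lower computational cost.

The authors also mention UNet++. That network prunes the UNet based on how much performance degrades on the test set. This paper instead wants DRDN to choose RDBs on its own according to the difficulty of the task.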
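
The channel-mean visualization and the "adjacent outputs look alike" observation can be sketched in a few lines. This is only an illustration: the feature maps are made-up toy numbers, and `channel_mean` and `mean_abs_diff` are hypothetical helper names, not anything from the paper.

```python
def channel_mean(feature_map):
    """Average a C x H x W feature map over its channels, giving an H x W image."""
    num_channels = len(feature_map)
    height, width = len(feature_map[0]), len(feature_map[0][0])
    return [
        [sum(feature_map[c][i][j] for c in range(num_channels)) / num_channels
         for j in range(width)]
        for i in range(height)
    ]


def mean_abs_diff(image_a, image_b):
    """Mean absolute difference between two H x W images."""
    diffs = [abs(a - b)
             for row_a, row_b in zip(image_a, image_b)
             for a, b in zip(row_a, row_b)]
    return sum(diffs) / len(diffs)


# Toy (made-up) 2-channel 2x2 outputs of two adjacent blocks.
rdb_k = [[[1.0, 2.0], [3.0, 4.0]], [[3.0, 2.0], [1.0, 0.0]]]
rdb_k_plus_1 = [[[1.0, 2.0], [3.0, 4.0]], [[3.0, 2.0], [1.0, 0.4]]]

vis_k = channel_mean(rdb_k)
vis_k_plus_1 = channel_mean(rdb_k_plus_1)
gap = mean_abs_diff(vis_k, vis_k_plus_1)  # a small gap hints the block changes little
```

When the gap between two adjacent visualizations is small, replacing the second block with an identity mapping changes the features only slightly, which is the intuition behind skipping.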

DRDN

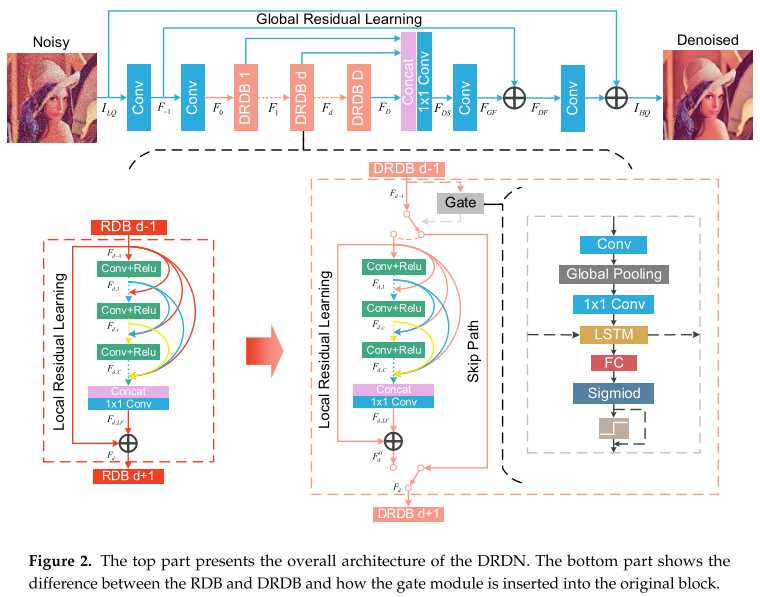

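As the figure shows, DRDN has the same structure as RDN, except that the internal RDBs are replaced with DRDBs.

Inside the DRDN, the authors use an LSTM to predict the importance of the next RDB. If the importance falls below a set threshold, the next RDB is replaced by a shortcut connection. The authors call this the gate module.

Note that the LSTM runs across the sequence of consecutive DRDBs.

In practice, the authors use 20 DRDBs, each containing 6 convolutional layers. The remaining parameters are given in Section 4.

Training

During training, if the LSTM output does not reach the threshold, the gradient of that RDB is 0.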
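
A minimal sketch of the skipping logic, under loud assumptions: `toy_gate` is a hypothetical stand-in for the LSTM (a running average squashed into a score), `toy_block` is a made-up residual branch, and features are single numbers instead of tensors. It only shows the control flow: a stateful gate scores each block in turn, and blocks scoring below the threshold are replaced by a shortcut.

```python
def toy_gate(feature, state):
    """Hypothetical stand-in for the LSTM gate (not the paper's module).

    Keeps a running memory of the feature magnitude and squashes it
    into an importance score in [0, 1).
    """
    state = 0.5 * state + 0.5 * abs(feature)
    score = state / (1.0 + state)
    return score, state


def toy_block(feature):
    """Hypothetical residual branch: proposes removing half of the signal."""
    return -0.5 * feature


def gated_forward(feature, num_blocks, threshold):
    """Run blocks in sequence, skipping those the gate scores below threshold."""
    state = 0.0
    executed = []
    for index in range(num_blocks):
        score, state = toy_gate(feature, state)
        if score >= threshold:
            feature = feature + toy_block(feature)  # block runs (residual form)
            executed.append(index)
        # else: shortcut connection, the feature passes through unchanged
    return feature, executed


# A "noisy" input keeps more blocks active than a "clean" one.
strong_out, strong_used = gated_forward(4.0, num_blocks=6, threshold=0.45)
weak_out, weak_used = gated_forward(0.4, num_blocks=6, threshold=0.45)
# Lowering the threshold by hand forces more blocks to run.
forced_out, forced_used = gated_forward(0.4, num_blocks=6, threshold=0.0)
```

In this toy, the threshold plays the role of the manual denoising-strength knob: the same input passes through more blocks when the threshold is lowered, with no change to the block parameters.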
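
Why a skipped block receives no gradient can be checked numerically on a one-weight toy block. The functions below are illustrative assumptions (a hard open/closed gate and a scalar weight), not the authors' training code.

```python
def block_output(x, weight, gate_open):
    """Residual block with a hard gate: x + weight * x if open, else identity."""
    if gate_open:
        return x + weight * x
    return x  # shortcut: the weight never touches the output


def numerical_grad(func, weight, eps=1e-6):
    """Central finite-difference estimate of d func / d weight."""
    return (func(weight + eps) - func(weight - eps)) / (2 * eps)


x = 3.0
grad_open = numerical_grad(lambda w: block_output(x, w, True), 0.5)
grad_closed = numerical_grad(lambda w: block_output(x, w, False), 0.5)
```

With the gate open the gradient is about `x`; with the gate closed the output does not depend on the weight at all, so the gradient is exactly zero.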

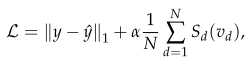

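Training has three stages. First, all gate outputs are set to 1 so that the DRDN converges. Second, the gates are restored to normal operation so that the gates converge. Finally, a penalty on the pass rate is added, forcing some DRDBs to stay idle. The overall loss function is as follows:

Here, \(S\) is the sigmoid function and \(v_d\) is the vector output by the \(d\)-th DRDB (the output of its FC layer).

The experiments are omitted here.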
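
The three-stage schedule can be sketched as follows. The exact loss is not restated in the text above, so the form used here is an assumption: a reconstruction term (`mse`) plus, in stage 3 only, `penalty_weight` times the mean of \(S(v_d)\), with each \(v_d\) simplified to a single number. The names `gate_values`, `total_loss` and `penalty_weight` are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def gate_values(gate_logits, stage):
    """Stage 1 forces every gate open; later stages use the learned gates."""
    if stage == 1:
        return [1.0 for _ in gate_logits]
    return [sigmoid(z) for z in gate_logits]


def total_loss(mse, gate_logits, stage, penalty_weight=0.2):
    """Assumed form: reconstruction loss plus, in stage 3 only, a pass-rate penalty."""
    if stage < 3:
        return mse
    pass_rate = sum(sigmoid(z) for z in gate_logits) / len(gate_logits)
    return mse + penalty_weight * pass_rate


logits = [0.0, 0.0, 0.0, 0.0]      # made-up gate logits, one per DRDB
stage1_gates = gate_values(logits, stage=1)
stage2_loss = total_loss(0.1, logits, stage=2)
stage3_loss = total_loss(0.1, logits, stage=3)
```

Under this assumed form, the stage-3 penalty shrinks when more gate outputs move toward 0, which is what pushes some DRDBs to stay idle.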
