Music Recommendation System in Practice

Posted by chen8023miss


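Preface: This article was compiled by the editors of 小常识网 (cha138.com). It walks through building a music recommendation system in practice, and we hope you find it useful.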

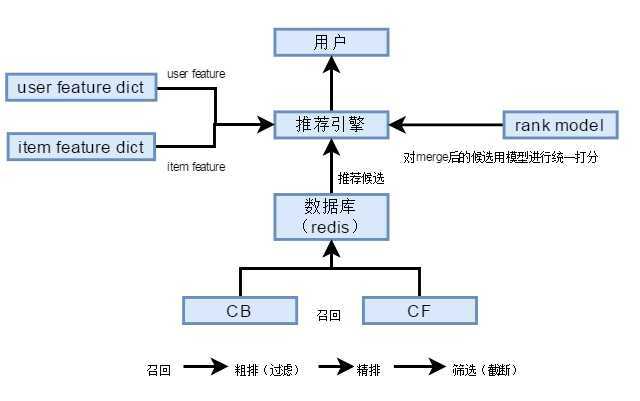

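I. Recommendation system flowchart

The CB (content-based) and CF (collaborative filtering) algorithms are used in the recall stage, and the items they return are only coarsely ranked. An LR (logistic regression) model then fine-ranks the items recalled by CB and CF, and the highest-scoring items are recommended to the user.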
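For reference, logistic regression scores each recalled candidate with the standard sigmoid of a linear function. Here x is the sparse feature vector of the (user, item) pair and w, b are the learned parameters that this post saves as model.w and model.b:

$$
\hat{p}(y = 1 \mid \mathbf{x}) = \sigma(\mathbf{w}^\top \mathbf{x} + b) = \frac{1}{1 + e^{-(\mathbf{w}^\top \mathbf{x} + b)}}
$$

Because σ is monotonically increasing, sorting candidates by \hat{p} gives the same order as sorting by w^T x + b.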

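II. Recommendation system design in detail

Code outline:

1. Data preprocessing (user profile data, item metadata, user behavior data)

2. Recall (CB and CF algorithms)

3. Data preparation for training the LR model, i.e. user feature data and item feature data

4. Model preparation, i.e. training LR on that data to obtain the weights w and the bias b

5. Online recommendation flow:

(1) Parse the request: userid, itemid

(2) Load the model: load the ranking model (model.w, model.b)

(3) Retrieve the candidate set: look up the CB and CF results in Redis to obtain the candidate set

(4) Fetch user features by userid

(5) Fetch item features by itemid

(6) Score (logistic regression, or deep learning) and sort

(7) Top-N filtering

(8) Package the results (itemid -> name) and return them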

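III. Recommendation system implementation

1. Data preprocessing

(1) User profile data: user_profile.data

userid, gender, age, income, region (comma-separated)

(2) Item (music) metadata: music_meta

itemid, name, desc, duration, region, tags (separated by \001)

(3) User behavior data: user_watch_pref.sml

userid, itemid, how long the user listened to the item, click time (hour) (separated by \001)

First, merge the three data sets into a single file.

In the pre_base_data directory, run python gen_base.py: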

```python
# coding=utf-8
# gen_base.py: merge the 3 data files and write the result for downstream processing
user_action_data = '../data/user_watch_pref.sml'
music_meta_data = '../data/music_meta'
user_profile_data = '../data/user_profile.data'
output_file = '../data/merge_base.data'

# step 1. decode music meta data: itemid, name, desc, total_timelen, location, tags
item_info_dict = {}
with open(music_meta_data, 'r', encoding='utf-8') as fd:
    for line in fd:
        ss = line.strip().split('\001')
        if len(ss) != 6:
            continue
        itemid, name, desc, total_timelen, location, tags = ss
        item_info_dict[itemid] = '\001'.join([name, desc, total_timelen, location, tags])

# step 2. decode user profile data: userid, gender, age, salary, location
user_profile_dict = {}
with open(user_profile_data, 'r', encoding='utf-8') as fd:
    for line in fd:
        ss = line.strip().split(',')
        if len(ss) != 5:
            continue
        userid, gender, age, salary, location = ss
        user_profile_dict[userid] = '\001'.join([gender, age, salary, location])

# step 3. decode user action data and output the merged records
with open(user_action_data, 'r', encoding='utf-8') as fd, \
        open(output_file, 'w', encoding='utf-8') as ofile:
    for line in fd:
        ss = line.strip().split('\001')
        if len(ss) != 4:
            continue
        userid, itemid, watch_len, hour = ss
        # keep only actions whose user and item both have metadata
        if userid not in user_profile_dict or itemid not in item_info_dict:
            continue
        ofile.write('\001'.join([userid, itemid, watch_len, hour,
                                 user_profile_dict[userid], item_info_dict[itemid]]))
        ofile.write('\n')
```

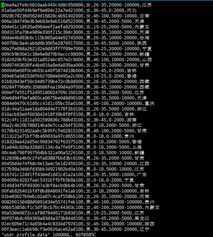

This produces merge_base.data, with records like the following (^A is the \001 separator):

- 01e3fdf415107cd6046a07481fbed499^A6470209102^A1635^A21^A男^A36-45^A20000-100000^A内蒙古^A黄家驹1993演唱会高清视频^A^A1969^A^A演唱会
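The downstream scripts (gen_cb_train.py, gen_cf_train.py, gen_samples.py) address this record by position, ss[0] through ss[12]. As a readability aid, a hypothetical helper can name the 13 fields in the order gen_base.py writes them. The names `MergedRecord` and `parse_merged_line` are illustrative and not part of the original project:

```python
from collections import namedtuple
from typing import Optional

# Field order of a merge_base.data record as written by gen_base.py:
# user action (4) + user profile (4) + item metadata (5)
FIELDS = ['userid', 'itemid', 'watch_len', 'hour',
          'gender', 'age', 'salary', 'user_location',
          'name', 'desc', 'total_timelen', 'item_location', 'tags']
MergedRecord = namedtuple('MergedRecord', FIELDS)


def parse_merged_line(line: str) -> Optional[MergedRecord]:
    """Split one line on the \\001 separator; return None if it is malformed."""
    ss = line.strip().split('\001')
    if len(ss) != len(FIELDS):
        return None
    return MergedRecord(*(s.strip() for s in ss))


# the sample record shown above, with ^A written as '\001'
sample = '\001'.join(['01e3fdf415107cd6046a07481fbed499', '6470209102', '1635', '21',
                      '男', '36-45', '20000-100000', '内蒙古',
                      '黄家驹1993演唱会高清视频', '', '1969', '', '演唱会'])
rec = parse_merged_line(sample)
print(rec.itemid, rec.watch_len, rec.total_timelen)
```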

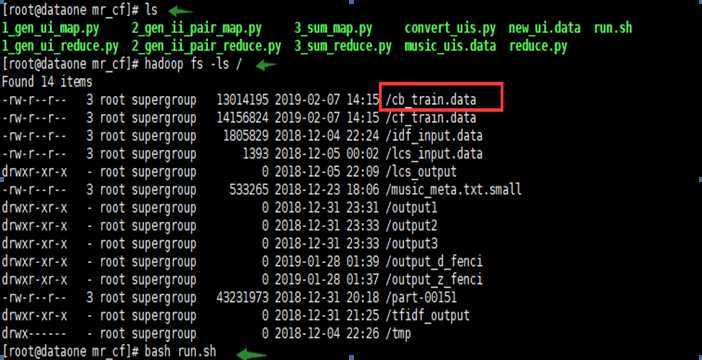

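2. [Recall] CB algorithm

(1) Organize the training data as token, itemid, score

Use jieba to segment the Chinese item name and description into tokens; tags are weighted by their IDF values.

python gen_cb_train.py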

```python
# coding=utf-8
# gen_cb_train.py: build CB training data in "token,itemid,score" form
import jieba.analyse

input_file = '../data/merge_base.data'
output_file = '../data/cb_train.data'  # CB training data

# a token's weight depends on where it appears
RATIO_FOR_NAME = 0.9
RATIO_FOR_DESC = 0.1
RATIO_FOR_TAGS = 0.05

# jieba's IDF file: one "token idf" pair per line
idf_file = '../data/idf.txt'
idf_dict = {}
with open(idf_file, 'r', encoding='utf-8') as fd:
    for line in fd:
        token, idf_score = line.strip().split(' ')
        idf_dict[token] = float(idf_score)

itemid_set = set()
with open(input_file, 'r', encoding='utf-8') as fd, \
        open(output_file, 'w', encoding='utf-8') as ofile:
    for line in fd:
        ss = line.strip().split('\001')
        if len(ss) != 13:
            continue
        itemid = ss[1].strip()
        # item metadata
        name, desc, tags = ss[8].strip(), ss[9].strip(), ss[12].strip()
        # CB needs only item content, not behavior, so each item is processed once
        if itemid in itemid_set:
            continue
        itemid_set.add(itemid)

        token_dict = {}
        # tokens from the name
        for token, score in jieba.analyse.extract_tags(name, withWeight=True):
            token_dict[token] = token_dict.get(token, 0.0) + score * RATIO_FOR_NAME
        # tokens from the description
        for token, score in jieba.analyse.extract_tags(desc, withWeight=True):
            token_dict[token] = token_dict.get(token, 0.0) + score * RATIO_FOR_DESC
        # tags, weighted by their IDF (tags missing from idf.txt are skipped)
        for tag in tags.split(','):
            if tag in idf_dict:
                token_dict[tag] = token_dict.get(tag, 0.0) + idf_dict[tag] * RATIO_FOR_TAGS

        for token, score in token_dict.items():
            ofile.write(','.join([token.strip(), itemid, str(score)]))
            ofile.write('\n')
```

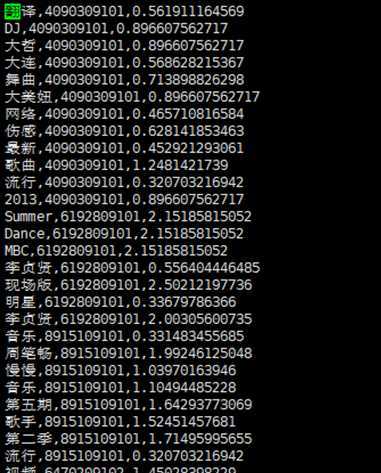

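This yields data like the following:

- 翻译,4090309101,0.561911164569 (the last field is not a plain TF-IDF score, because a token's importance differs depending on whether it appears in the name, desc, or tags)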
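Reading the constants in gen_cb_train.py, the score of token t for item i is a weighted sum. Here w^name and w^desc are the keyword weights that jieba.analyse.extract_tags returns for the name and description (0 when t is not extracted), and idf(t) comes from idf.txt (tags missing from that file contribute nothing):

$$
\mathrm{score}(t, i) = 0.9\, w^{\mathrm{name}}_i(t) + 0.1\, w^{\mathrm{desc}}_i(t) + 0.05\, \mathrm{idf}(t)\, \mathbb{1}[t \in \mathrm{tags}_i]
$$

For example, with made-up weights w^name = 0.5, w^desc = 0.2 and idf = 2.0 for a token that is also a tag, the score is 0.45 + 0.02 + 0.10 = 0.57.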

(2) Use the collaborative filtering algorithm to compute item-item data

This gives the CB-based item-item (ii) similarity matrix.
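The post does not show the similarity job itself. As a hypothetical in-memory sketch, assuming cosine similarity over the token weights (a common choice, not confirmed by the source), the item-item scores could be computed from cb_train.data triples as follows. The function name is illustrative, and the tab-separated output matches what gen_reclist.py reads from cb.result. A large catalog would need a distributed job instead:

```python
import math
from collections import defaultdict
from itertools import combinations
from typing import Dict, Iterable, List, Tuple


def item_cosine_similarity(
        triples: Iterable[Tuple[str, str, float]]) -> List[Tuple[str, str, float]]:
    """Cosine similarity between items from (token, itemid, score) triples.

    Returns (itemA, itemB, sim) for both orderings of every pair sharing a token.
    """
    item_vecs: Dict[str, Dict[str, float]] = defaultdict(dict)
    token_items: Dict[str, set] = defaultdict(set)
    for token, itemid, score in triples:
        item_vecs[itemid][token] = score
        token_items[token].add(itemid)

    norms = {i: math.sqrt(sum(v * v for v in vec.values())) for i, vec in item_vecs.items()}

    # accumulate dot products only for pairs that share at least one token
    dots: Dict[Tuple[str, str], float] = defaultdict(float)
    for token, items in token_items.items():
        for a, b in combinations(sorted(items), 2):
            dots[(a, b)] += item_vecs[a][token] * item_vecs[b][token]

    result = []
    for (a, b), dot in dots.items():
        denom = norms[a] * norms[b]
        if denom == 0:
            continue
        sim = dot / denom
        result.append((a, b, sim))
        result.append((b, a, sim))
    return result


if __name__ == '__main__':
    demo = [('rock', 'A', 1.0), ('live', 'A', 1.0), ('rock', 'B', 1.0), ('pop', 'C', 1.0)]
    for a, b, sim in item_cosine_similarity(demo):
        print(f'{a}\t{b}\t{sim:.6f}')
```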

(3) Reformat the data into item -> item list form, i.e. key-value pairs

python gen_reclist.py

```python
# coding=utf-8
# gen_reclist.py: turn "itemA\titemB\tsim" rows into Redis SET commands
infile = '../data/cb.result'
outfile = '../data/cb_reclist.redis'
MAX_RECLIST_SIZE = 100
PREFIX = 'CB_'

rec_dict = {}
with open(infile, 'r', encoding='utf-8') as fd:
    for line in fd:
        itemid_A, itemid_B, sim_score = line.strip().split('\t')
        # store the score as a float so sorting is numeric, not lexicographic
        rec_dict.setdefault(itemid_A, []).append((itemid_B, float(sim_score)))

with open(outfile, 'w', encoding='utf-8') as ofile:
    for k, v in rec_dict.items():
        key_item = PREFIX + k
        top = sorted(v, key=lambda x: x[1], reverse=True)[:MAX_RECLIST_SIZE]
        # value format: itemid:score_itemid:score_...
        reclist_result = '_'.join(':'.join([itemid, str(round(score, 6))]) for itemid, score in top)
        ofile.write(' '.join(['SET', key_item, reclist_result]))
        ofile.write('\n')
```

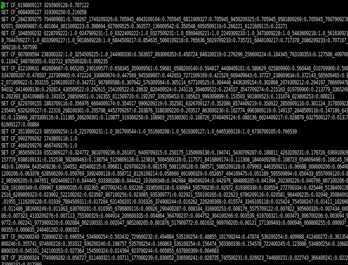

The result looks like this:

- SET CB_5305109176 726100303:0.393048_953500302:0.393048_6193109237:0.348855

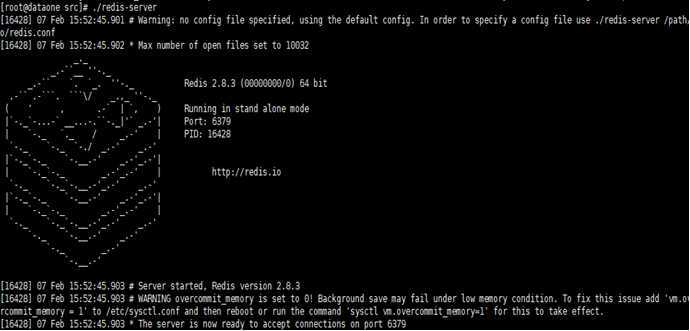

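(4) Load into Redis

Download the redis-2.8.3.tar.gz package.

Compile it from source (a C compiler is required: yum install gcc-c++) by running make; the binaries then appear in the src directory (redis-server is the server, redis-cli the client).

Start the Redis server in one of two ways:

]# ./src/redis-server

]# nohup ./src/redis-server &    (start in the background)

Then, in another terminal, run ]# ./src/redis-cli to connect to the server.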

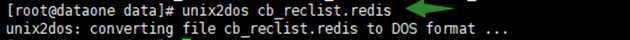

接下来灌数据(批量灌):

需要安装unix2dos(yum install unix2dos)(格式转换)

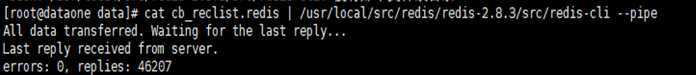

]# cat cb_reclist.redis | /usr/local/src/redis-2.8.3/src/redis-cli --pipe 这样是会报大量异常,所以需要用下面的方式去做,完了再使用管道插入(注意redis安装目录)

unix2dos cb_reclist.redis

cat cb_reclist.redis | /usr/local/src/redis/redis-2.8.3/src/redis-cli --pipe
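Another option, described in the Redis documentation on mass insertion, is to generate the Redis protocol (RESP) directly and pipe that into redis-cli --pipe. The following is a minimal sketch, assuming the one-command-per-line "SET key value" files produced by gen_reclist.py (the values contain no spaces); the function names are hypothetical:

```python
def to_resp(*args: str) -> bytes:
    """Encode one Redis command in the RESP protocol accepted by `redis-cli --pipe`."""
    out = [b'*%d\r\n' % len(args)]
    for arg in args:
        data = arg.encode('utf-8')
        # bulk-string length is in bytes, not characters
        out.append(b'$%d\r\n%s\r\n' % (len(data), data))
    return b''.join(out)


def convert_reclist_file(src: str, dst: str) -> None:
    """Rewrite 'SET key value' lines (as written by gen_reclist.py) as RESP."""
    with open(src, 'r', encoding='utf-8') as fin, open(dst, 'wb') as fout:
        for line in fin:
            parts = line.split()
            if len(parts) == 3:
                fout.write(to_resp(*parts))
```

For example, convert_reclist_file('cb_reclist.redis', 'cb_reclist.resp') followed by cat cb_reclist.resp | redis-cli --pipe; the .resp file name is only an example.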

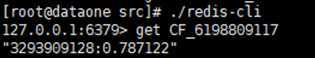

Verify: ]# ./src/redis-cli

Run:

127.0.0.1:6379> get CB_5305109176

"726100303:0.393048_953500302:0.393048_6193109237:0.348855"

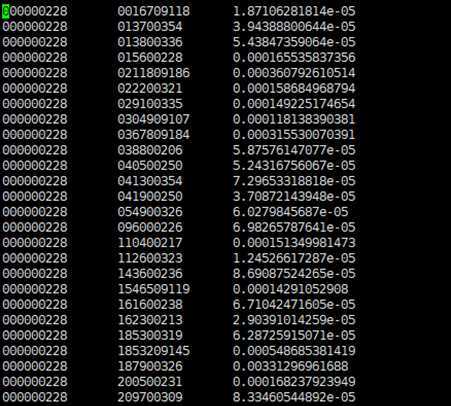

3. [Recall] CF algorithm

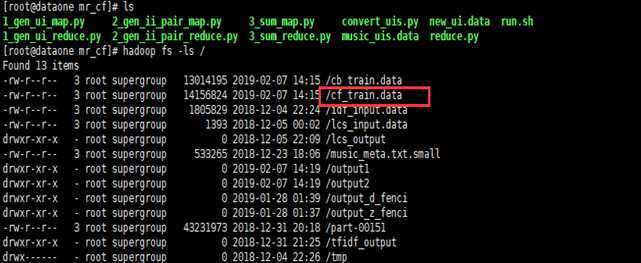

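(1) Organize the training data as userid, itemid, score, where the score is the fraction of the track the user listened to (total listening time / total track length)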

python gen_cf_train.py

```python
# coding=utf-8
# gen_cf_train.py: build CF training data in "userid,itemid,score" form
input_file = '../data/merge_base.data'
output_file = '../data/cf_train.data'  # CF training data

# (userid, itemid) -> list of (watch_len, total_timelen)
key_dict = {}
with open(input_file, 'r', encoding='utf-8') as fd:
    for line in fd:
        ss = line.strip().split('\001')
        if len(ss) != 13:
            continue
        userid, itemid = ss[0].strip(), ss[1].strip()
        watch_len = ss[2].strip()       # user behavior: listening time
        total_timelen = ss[10].strip()  # item metadata: track length
        key_dict.setdefault((userid, itemid), []).append((int(watch_len), int(total_timelen)))

with open(output_file, 'w', encoding='utf-8') as ofile:
    for (userid, itemid), v in key_dict.items():
        # aggregate all records of the same <userid, itemid>
        t_finished = sum(vv[0] for vv in v)
        t_all = sum(vv[1] for vv in v)
        if t_all == 0:
            continue
        # final score of userid for itemid
        score = float(t_finished) / float(t_all)
        ofile.write(','.join([userid, itemid, str(score)]))
        ofile.write('\n')
```

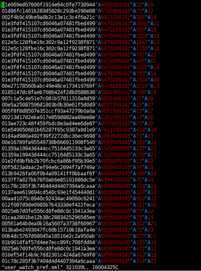

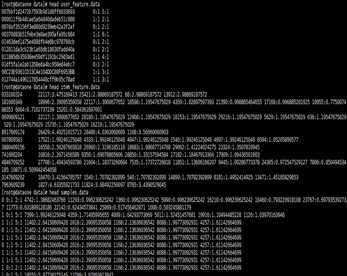

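This yields data like the following:

(2) Use the collaborative filtering algorithm to compute item-item data

This gives the CF-based item-item (ii) similarity matrix.

(3) Reformat the data into item -> item list form, i.e. key-value pairs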

python gen_reclist.py

```python
# coding=utf-8
# gen_reclist.py: turn "itemA\titemB\tsim" rows into Redis SET commands
infile = '../data/cf.result'
outfile = '../data/cf_reclist.redis'
MAX_RECLIST_SIZE = 100
PREFIX = 'CF_'

rec_dict = {}
with open(infile, 'r', encoding='utf-8') as fd:
    for line in fd:
        itemid_A, itemid_B, sim_score = line.strip().split('\t')
        # store the score as a float so sorting is numeric, not lexicographic
        rec_dict.setdefault(itemid_A, []).append((itemid_B, float(sim_score)))

with open(outfile, 'w', encoding='utf-8') as ofile:
    for k, v in rec_dict.items():
        key_item = PREFIX + k
        top = sorted(v, key=lambda x: x[1], reverse=True)[:MAX_RECLIST_SIZE]
        # value format: itemid:score_itemid:score_...
        reclist_result = '_'.join(':'.join([itemid, str(round(score, 6))]) for itemid, score in top)
        ofile.write(' '.join(['SET', key_item, reclist_result]))
        ofile.write('\n')
```

The result looks like this:

(4) Load into Redis

unix2dos cf_reclist.redis

cat cf_reclist.redis | /usr/local/src/redis-2.8.3/src/redis-cli --pipe

Verify:

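4. Data preparation for training the LR model

Build our own training samples (a label plus user and item features) from merge_base.data.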

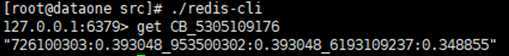

Enter the pre_data_for_rankmodel directory and run:

python gen_samples.py

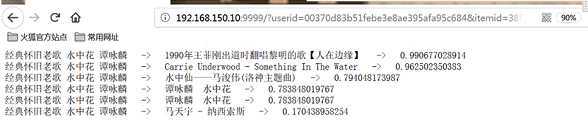

```python
# coding=utf-8
# gen_samples.py: build LR samples plus user/item feature mapping files
import jieba.analyse

merge_base_infile = '../data/merge_base.data'
output_file = '../data/samples.data'
output_user_feature_file = '../data/user_feature.data'
output_item_feature_file = '../data/item_feature.data'
output_itemid_to_name_file = '../data/name_id.dict'


def get_base_samples(infile):
    ret_samples_list = []
    user_info_set = set()
    item_info_set = set()
    item_name2id = {}
    item_id2name = {}
    with open(infile, 'r', encoding='utf-8') as fd:
        for line in fd:
            ss = line.strip().split('\001')
            if len(ss) != 13:
                continue
            userid = ss[0].strip()
            itemid = ss[1].strip()
            watch_time = ss[2].strip()
            total_time = ss[10].strip()
            if not total_time or float(total_time) <= 0:
                continue
            # user info
            gender = ss[4].strip()
            age = ss[5].strip()
            user_feature = '\001'.join([userid, gender, age])
            # item info
            name = ss[8].strip()
            item_feature = '\001'.join([itemid, name])
            # label: >= 82% listened -> 1, <= 30% -> 0, anything in between is dropped
            label = float(watch_time) / float(total_time)
            if label >= 0.82:
                final_label = '1'
            elif label <= 0.3:
                final_label = '0'
            else:
                continue
            item_name2id[name] = itemid  # name -> id for item features
            item_id2name[itemid] = name  # id -> name for the final output
            ret_samples_list.append([final_label, user_feature, item_feature])
            user_info_set.add(user_feature)
            item_info_set.add(name)
    return ret_samples_list, user_info_set, item_info_set, item_name2id, item_id2name


# step 1. generate base samples (label, user feature, item feature)
base_sample_list, user_info_set, item_info_set, item_name2id, item_id2name = \
    get_base_samples(merge_base_infile)

# step 2. user features: gender -> index 0/1, age bucket -> index 2..6
AGE_IDX = {'0-18': 0, '19-25': 1, '26-35': 2, '36-45': 3}  # any other bucket -> 4
user_fea_dict = {}
for info in user_info_set:
    userid, gender, age = info.strip().split('\001')
    gender_fea = ':'.join([str(1 if gender == '男' else 0), '1'])  # '男' = male; default female
    age_fea = ':'.join([str(AGE_IDX.get(age, 4) + 2), '1'])
    user_fea_dict[userid] = ' '.join([gender_fea, age_fea])

# step 3. item features: jieba keywords of the item name
token_set = set()
item_fs_dict = {}
for name in item_info_set:
    token_score_list = jieba.analyse.extract_tags(name, withWeight=True)
    token_set.update(x for x, _ in token_score_list)
    item_fs_dict[name] = token_score_list

# token ids start after the user feature indexes
user_feature_offset = 10
token_id_dict = {token: idx for idx, token in enumerate(token_set)}
item_fea_dict = {}
for name, fea in item_fs_dict.items():
    item_fea_dict[name] = ' '.join(
        ':'.join([str(token_id_dict[token] + user_feature_offset), str(score)])
        for token, score in fea if token in token_id_dict)

# step 4. final samples: "label idx:val idx:val ..."
with open(output_file, 'w', encoding='utf-8') as ofile:
    for label, user_feature, item_feature in base_sample_list:
        userid = user_feature.split('\001')[0]
        item_name = item_feature.split('\001')[1]
        if userid not in user_fea_dict or item_name not in item_fea_dict:
            continue
        ofile.write(' '.join([label, user_fea_dict[userid], item_fea_dict[item_name]]) + '\n')

# step 5. user feature mapping file: userid \t features
with open(output_user_feature_file, 'w', encoding='utf-8') as o_u_file:
    for userid, feature in user_fea_dict.items():
        o_u_file.write('\t'.join([userid, feature]) + '\n')

# step 6. item feature mapping file: itemid \t features
with open(output_item_feature_file, 'w', encoding='utf-8') as o_i_file:
    for item_name, feature in item_fea_dict.items():
        if item_name in item_name2id:
            o_i_file.write('\t'.join([item_name2id[item_name], feature]) + '\n')

# step 7. itemid -> name mapping file
with open(output_itemid_to_name_file, 'w', encoding='utf-8') as o_file:
    for itemid, item_name in item_id2name.items():
        o_file.write('\t'.join([itemid, item_name]) + '\n')
```
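For steps (4) and (5) of the online flow, the demo has to turn user_feature.data and item_feature.data back into the same sparse layout as samples.data (gender at index 0 or 1, age bucket at 2 to 6, name tokens from index 10). A hypothetical sketch of that lookup follows; the function names are illustrative:

```python
from typing import Dict, Iterable


def load_feature_map(lines: Iterable[str]) -> Dict[str, str]:
    """Parse 'id<TAB>idx:val idx:val ...' lines from user_feature.data / item_feature.data."""
    feature_map = {}
    for line in lines:
        key, _, feats = line.rstrip('\n').partition('\t')
        if key:
            feature_map[key] = feats
    return feature_map


def build_feature_vector(user_feats: str, item_feats: str) -> Dict[int, float]:
    """Join user and item features into one sparse vector {index: value}."""
    vec: Dict[int, float] = {}
    for pair in (user_feats + ' ' + item_feats).split():
        idx, _, val = pair.partition(':')
        vec[int(idx)] = vec.get(int(idx), 0.0) + float(val)
    return vec
```

Because token indexes start at 10 and user indexes stop at 6, user and item features never collide in the joined vector.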

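5. Model preparation

Train the LR model on samples.data (the sample file path is passed as the first command-line argument):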

```python
# -*- coding: UTF-8 -*-
'''
Approach: to use our own data we write load_data ourselves. main() is the
entry point; it calls load_data to get x_train, x_test, y_train, y_test,
trains LR with L2 (or L1) regularization to make the result more reliable,
and writes out the weights w and the bias b.
'''
import sys

import numpy as np
from scipy.sparse import csr_matrix
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

input_file = sys.argv[1]


def load_data():
    # build a sparse matrix from "label idx:val idx:val ..." lines
    target_list = []   # labels
    fea_row_list = []  # row index of each non-zero value
    fea_col_list = []  # column (feature) index of each non-zero value
    data_list = []     # feature values
    row_idx = 0        # row counter
    max_col = 0
    with open(input_file, 'r', encoding='utf-8') as fd:
        for line in fd:
            ss = line.strip().split(' ')
            label, fea = ss[0], ss[1:]
            target_list.append(int(label))
            for fea_score in fea:
                sss = fea_score.strip().split(':')
                if len(sss) != 2:
                    continue
                feature, score = int(sss[0]), float(sss[1])
                fea_row_list.append(row_idx)
                fea_col_list.append(feature)
                data_list.append(score)
                max_col = max(max_col, feature)
            row_idx += 1
    fea_datasets = csr_matrix(
        (np.array(data_list), (np.array(fea_row_list), np.array(fea_col_list))),
        shape=(row_idx, max_col + 1))
    return train_test_split(fea_datasets, np.array(target_list), test_size=0.2, random_state=0)


def main():
    x_train, x_test, y_train, y_test = load_data()
    # L2 regularization to reduce overfitting
    model = LogisticRegression(penalty='l2')
    model.fit(x_train, y_train)
    # write the learned weights W, one "w: value" per line
    with open('model.w', 'w') as ff_w:
        for w_list in model.coef_:
            for w in w_list:
                print("w: ", w, file=ff_w)
    # write the learned bias b
    with open('model.b', 'w') as ff_b:
        for b in model.intercept_:
            print("b: ", b, file=ff_b)
    # model.score returns the mean accuracy on the test set
    print("accuracy: ", model.score(x_test, y_test))
    print("MSE: ", np.mean((model.predict(x_test) - y_test) ** 2))


if __name__ == '__main__':
    main()
```
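At serving time the demo only needs model.w and model.b, not scikit-learn. A minimal sketch of loading those files and scoring one sparse feature vector follows, assuming the "w:  value" / "b:  value" line format written above; the function names are hypothetical. For a binary model this gives the same probability as predict_proba(x)[:, 1]:

```python
import math
from typing import Dict, Iterable, List, Tuple


def load_lr_model(w_lines: Iterable[str], b_lines: Iterable[str]) -> Tuple[List[float], float]:
    """Parse the 'w:  value' and 'b:  value' lines written to model.w / model.b."""
    w = [float(line.split(':', 1)[1]) for line in w_lines if line.strip()]
    b = [float(line.split(':', 1)[1]) for line in b_lines if line.strip()]
    return w, b[0]


def sigmoid(z: float) -> float:
    # numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def lr_score(w: List[float], b: float, features: Dict[int, float]) -> float:
    """Probability of label 1 for a sparse feature vector {index: value}."""
    # indexes beyond the trained weight vector (e.g. unseen tokens) are ignored
    z = b + sum(w[idx] * val for idx, val in features.items() if 0 <= idx < len(w))
    return sigmoid(z)


# usage: with open('model.w') as fw, open('model.b') as fb: w, b = load_lr_model(fw, fb)
```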

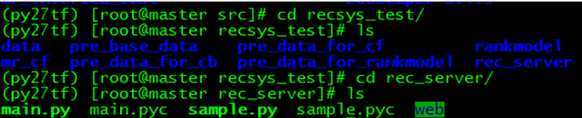

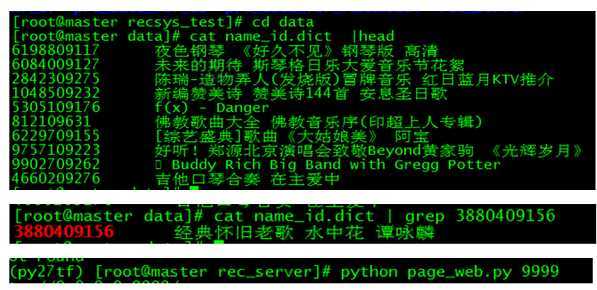

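6. Recommendation system implementation

Demo recommendation flow:

(1) Parse the request: userid, itemid

(2) Load the model: load the ranking model (model.w, model.b)

(3) Retrieve the candidate set: look up the CB and CF results in Redis to obtain the candidate set

(4) Fetch user features by userid

(5) Fetch item features by itemid

(6) Score (logistic regression, or deep learning) and sort

(7) Top-N filtering

(8) Package the results (itemid -> name) and return them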
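The demo server code itself only appears as a screenshot. A minimal sketch of steps (1), (3), (7) and (8) could look like the following. It assumes a query string like the one in the verification URL, a `redis_get` callable (for example the `get` method of a redis-py `redis.Redis(decode_responses=True)` client), and a `score(userid, itemid)` callable that wraps the LR model (steps (2) and (4) to (6)). All function names here are hypothetical:

```python
from typing import Callable, Dict, List, Optional, Tuple
from urllib.parse import parse_qs


def parse_reclist(value: Optional[str]) -> List[str]:
    """'id:score_id:score' -> [id, id, ...]; empty list if the key is missing."""
    if not value:
        return []
    return [pair.split(':', 1)[0] for pair in value.split('_') if pair]


def recommend(query: str,
              redis_get: Callable[[str], Optional[str]],
              score: Callable[[str, str], float],
              id2name: Dict[str, str],
              top_n: int = 10) -> List[Tuple[str, str, float]]:
    # (1) parse the request
    params = parse_qs(query)
    userid = params.get('userid', [''])[0]
    itemid = params.get('itemid', [''])[0]
    # (3) recall: union of CB and CF lists, skipping duplicates and the item itself
    candidates: List[str] = []
    seen = {itemid}
    for key in ('CB_' + itemid, 'CF_' + itemid):
        for cand in parse_reclist(redis_get(key)):
            if cand not in seen:
                seen.add(cand)
                candidates.append(cand)
    # (4)-(6) score each candidate with the ranking model and sort by score
    ranked = sorted(((c, score(userid, c)) for c in candidates),
                    key=lambda x: x[1], reverse=True)
    # (7) keep the top n, (8) map itemid -> name
    return [(c, id2name.get(c, ''), s) for c, s in ranked[:top_n]]
```

Keeping Redis access and scoring behind callables lets the same function run against a plain dict during testing and against a real Redis client in the demo.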

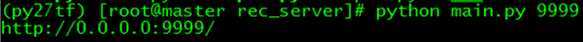

Verify:

192.168.150.10:9999/?userid=00370d83b51febe3e8ae395afa95c684&itemid=3880409156
