Kubernetes multi-node cluster and high-availability configuration

Tags (space-separated): kubernetes series

1: High availability for the Kubernetes master nodes

2: Configure the nginx server

3: Configure keepalived high availability for the nginx load balancer

1: High availability for the Kubernetes master nodes

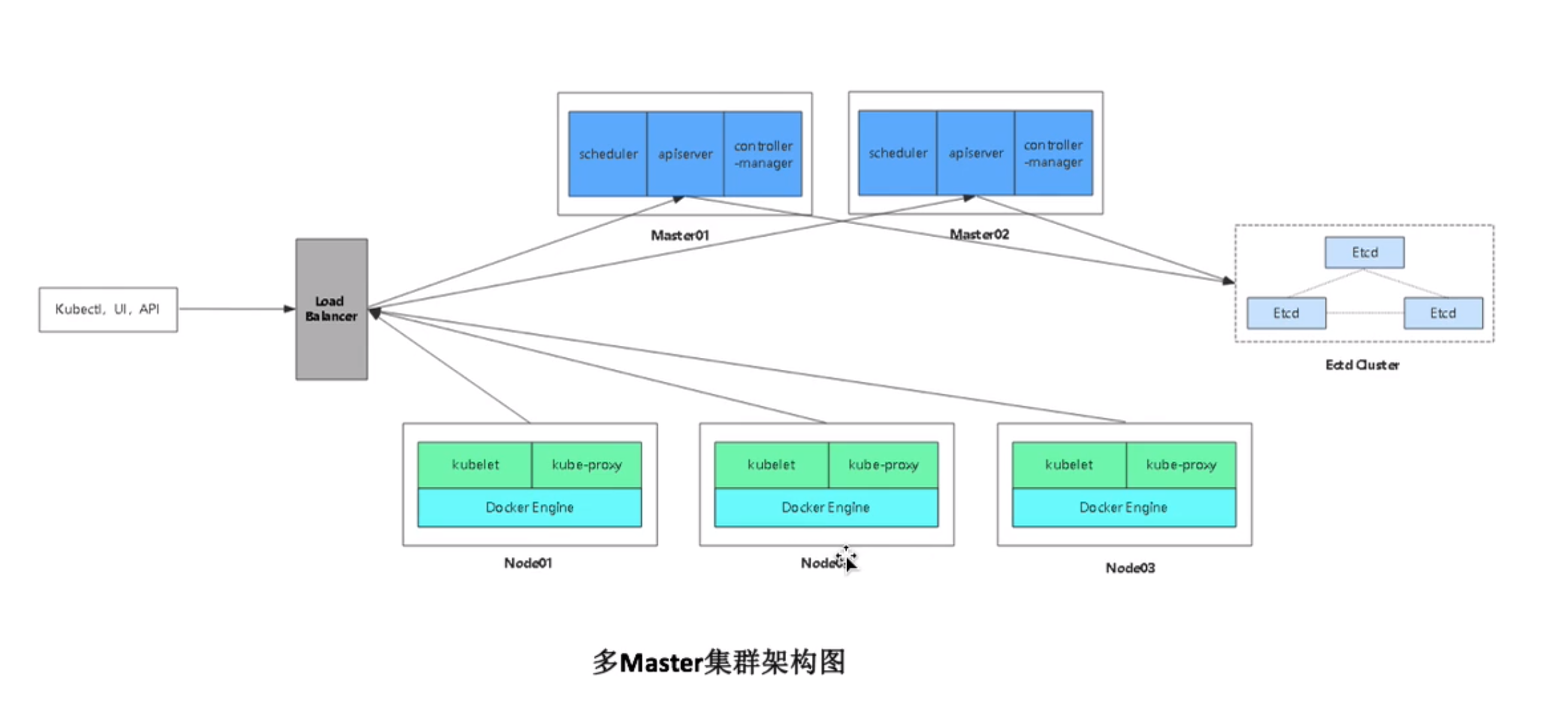

Kubernetes multi-master cluster architecture

High availability in Kubernetes hinges mainly on the apiserver

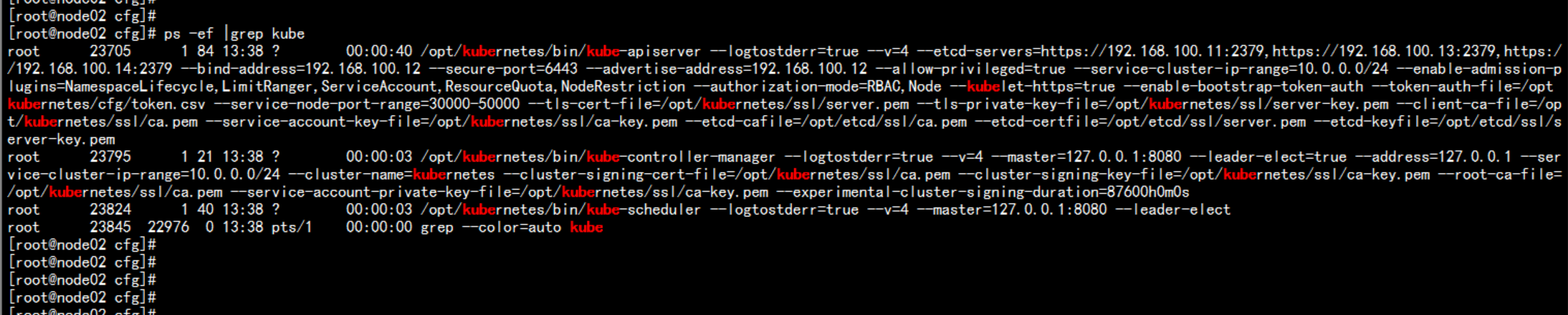

Deploy master02, IP address: 192.168.100.12

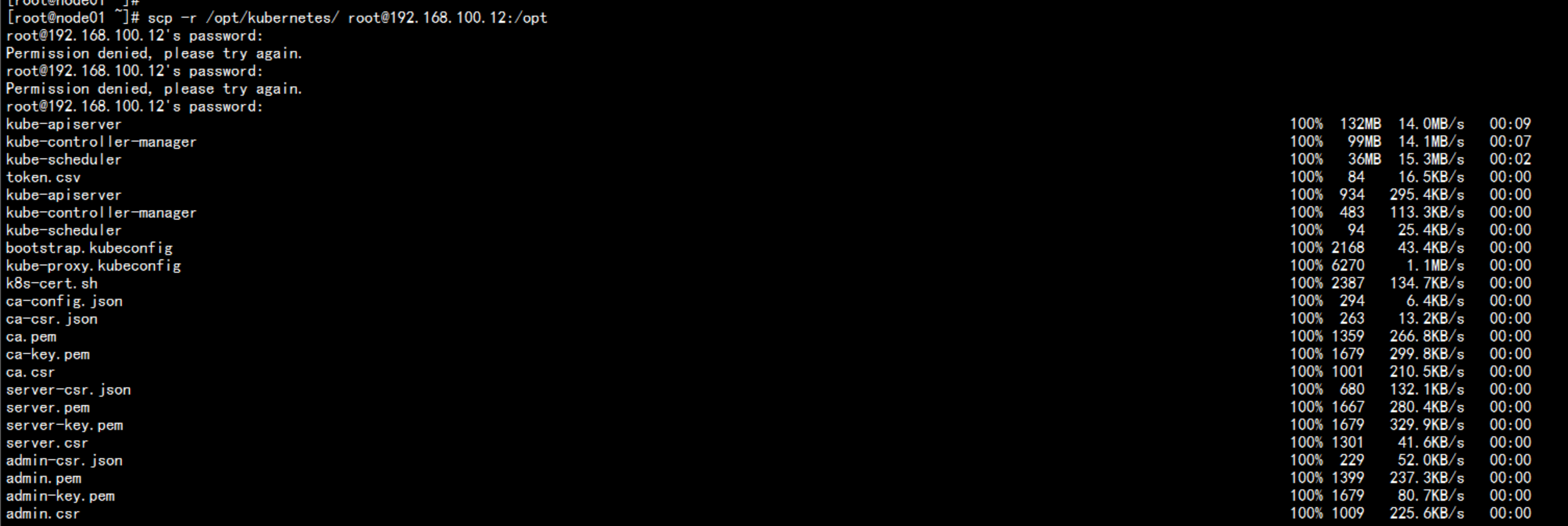

Deploy the same services on 192.168.100.12 as on master01 by copying them over from master01:

scp -r /opt/kubernetes/ root@192.168.100.12:/opt/

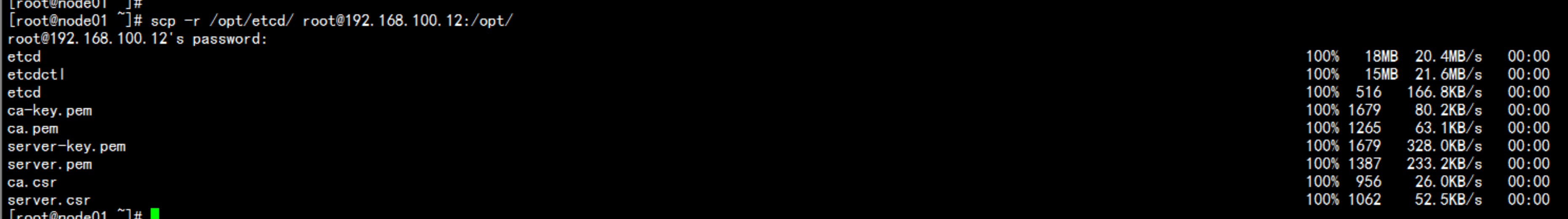

scp -r /opt/etcd/ root@192.168.100.12:/opt/

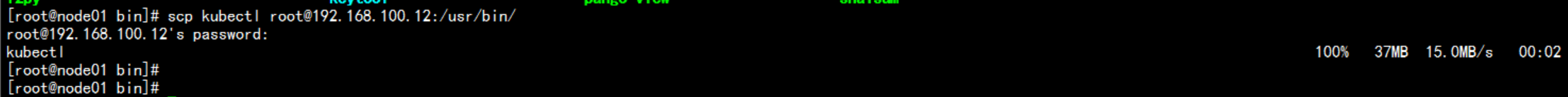

scp /usr/bin/kubectl root@192.168.100.12:/usr/bin/

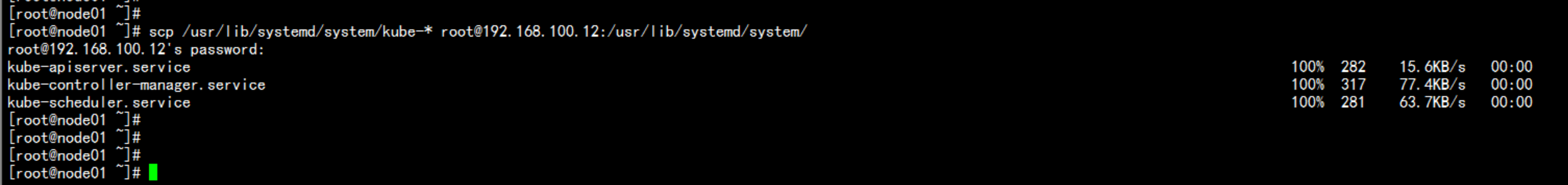

scp /usr/lib/systemd/system/kube-* root@192.168.100.12:/usr/lib/systemd/system/

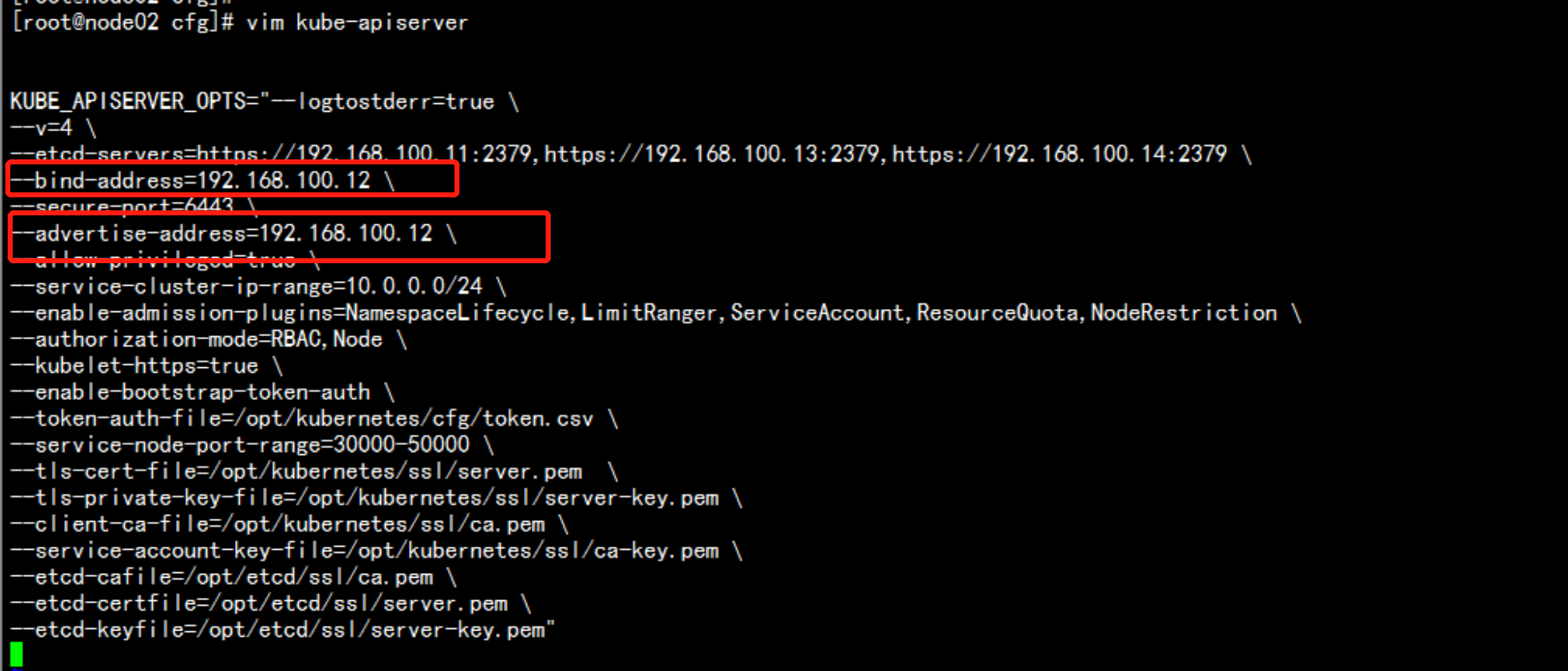

Edit the configuration file on master02 (192.168.100.12):

cd /opt/kubernetes/cfg

vim kube-apiserver

---

--bind-address=192.168.100.12

--advertise-address=192.168.100.12
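The same edit can be scripted instead of made by hand in vim. The following is a minimal sketch, assuming GNU sed and assuming the copied kube-apiserver file still carries master01's address in the --bind-address and --advertise-address flags; the function name set_apiserver_address is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: rewrite the IP in --bind-address and --advertise-address
# so the apiserver on this master binds to and advertises its own address.
# Usage: set_apiserver_address CONFIG_FILE NEW_IP
set_apiserver_address() {
  local cfg="$1" ip="$2"
  # \1 keeps the flag name; only the dotted IP that follows is replaced.
  sed -E -i "s/(--(bind|advertise)-address=)[0-9.]+/\1${ip}/g" "$cfg"
}
```

On master02 this would be called as: set_apiserver_address /opt/kubernetes/cfg/kube-apiserver 192.168.100.12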

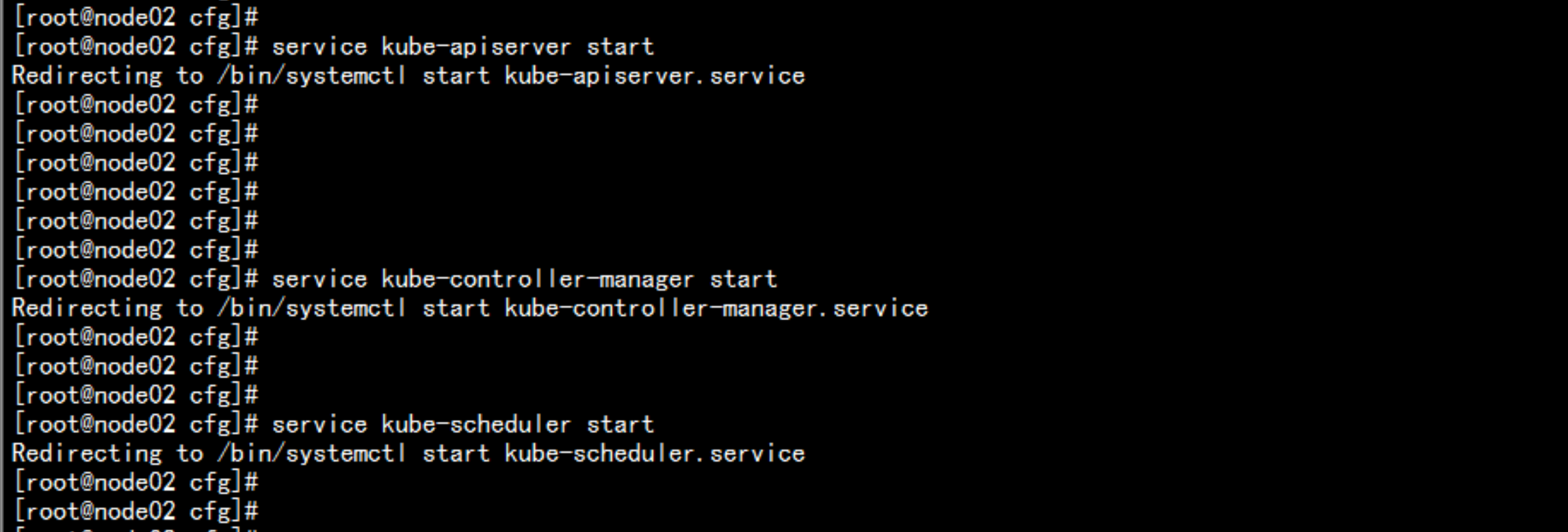

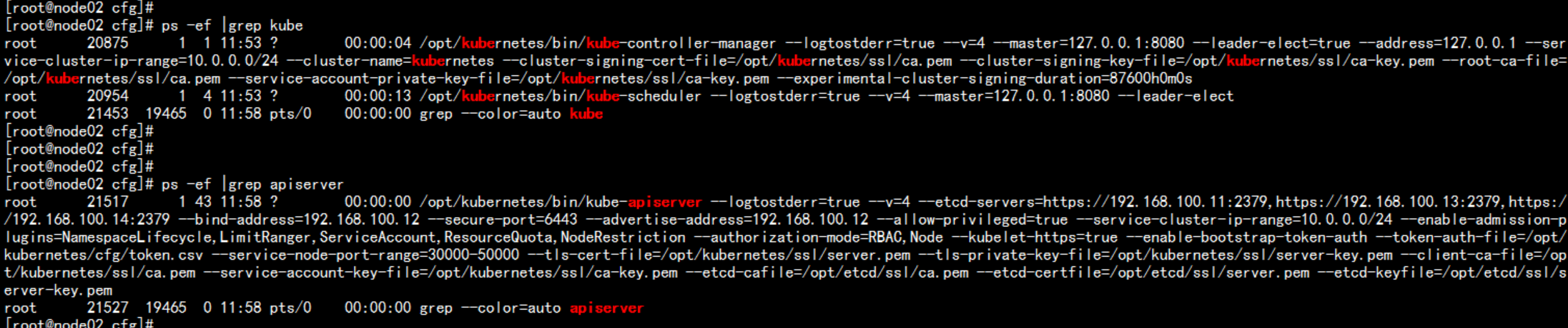

Start the services:

service kube-apiserver start

service kube-controller-manager start

service kube-scheduler start

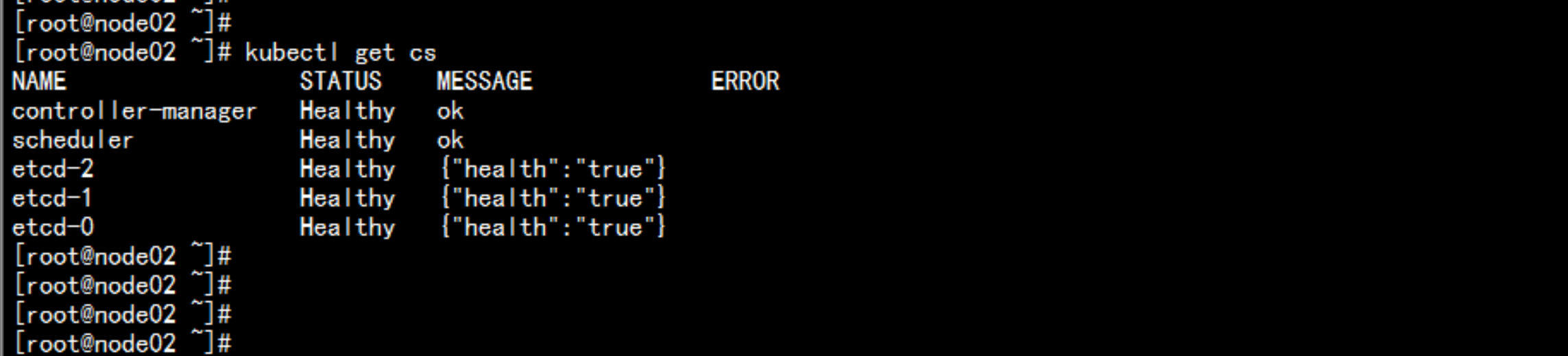

Log in to 192.168.100.12:

kubectl get cs

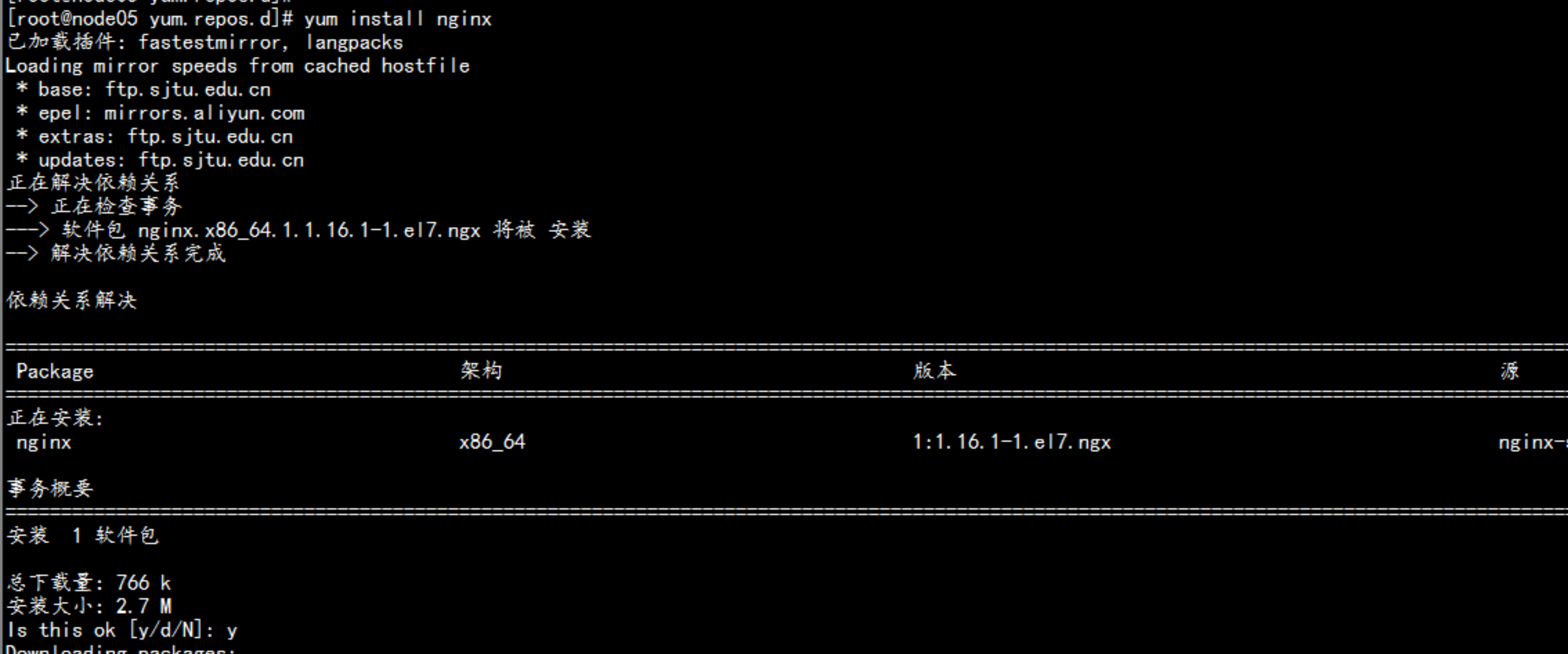

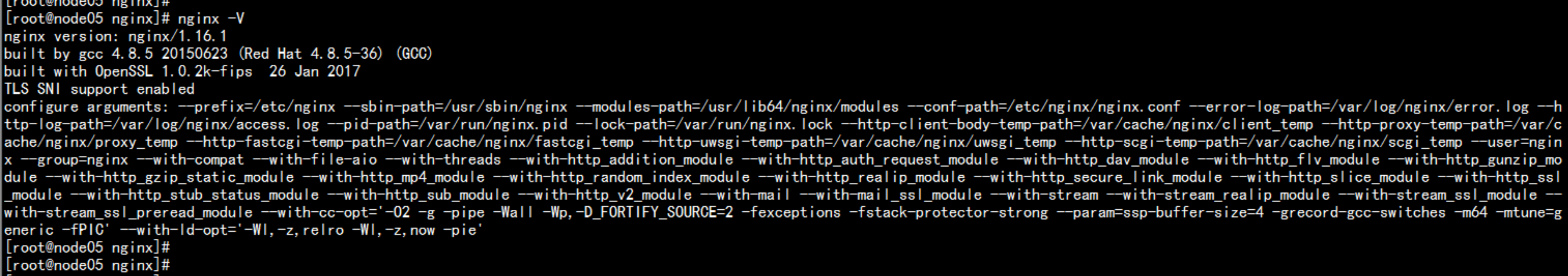

2: Configure the nginx load balancer

nginx server address: 192.168.100.15

nginx yum package repository:

http://nginx.org/en/linux_packages.html

cd /etc/yum.repos.d/

vim nginx.repo

---

[nginx-stable]

name=nginx stable repo

baseurl=http://nginx.org/packages/centos/$releasever/$basearch/

gpgcheck=1

enabled=1

gpgkey=https://nginx.org/keys/nginx_signing.key

[nginx-mainline]

name=nginx mainline repo

baseurl=http://nginx.org/packages/mainline/centos/$releasever/$basearch/

gpgcheck=1

enabled=0

gpgkey=https://nginx.org/keys/nginx_signing.key

---

yum install nginx

cd /etc/nginx/

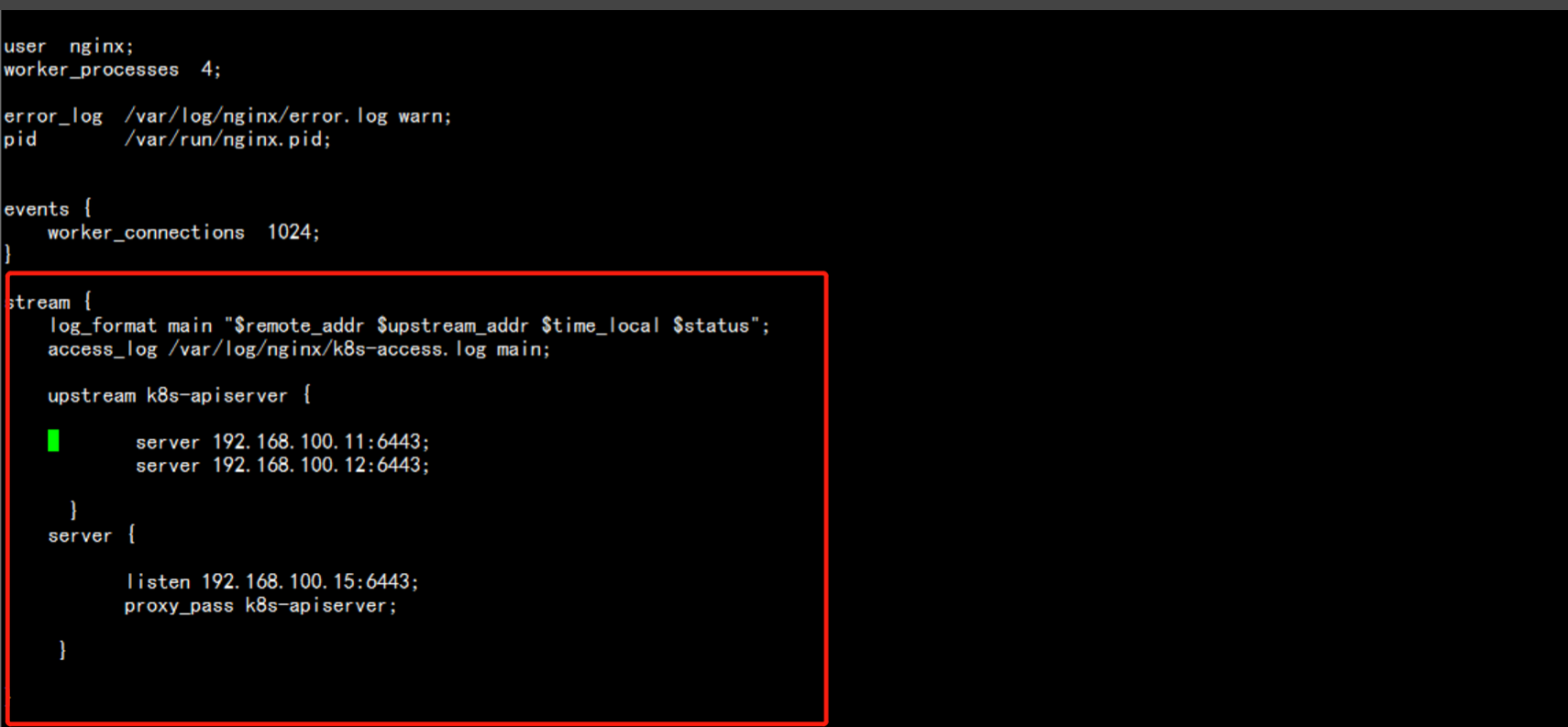

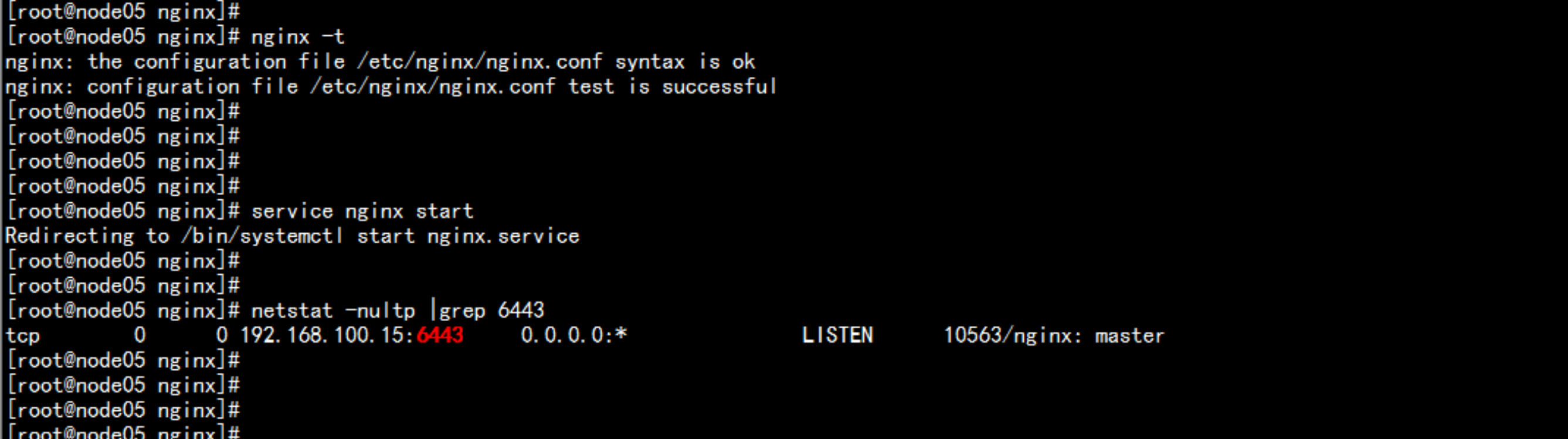

vim nginx.conf

Add:

---

stream

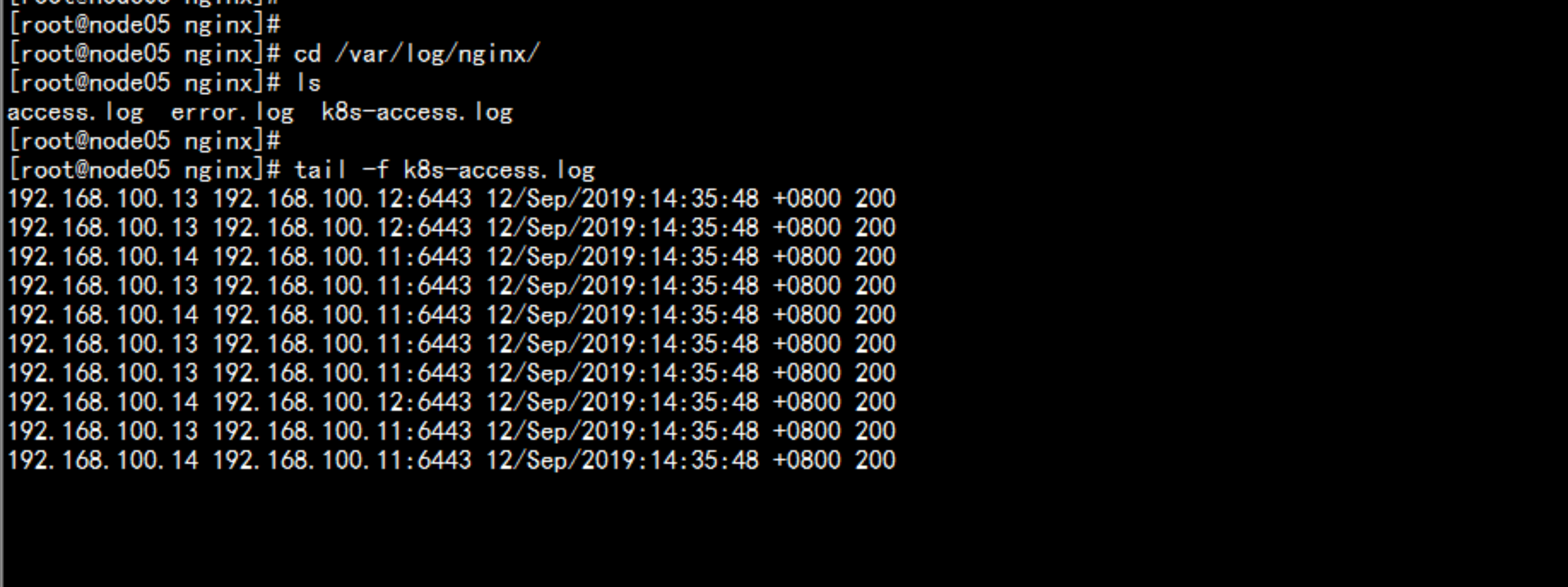

log_format main "$remote_addr $upstream_addr $time_local $status";

access_log /var/log/nginx/k8s-access.log main;

upstream k8s-apiserver

server 192.168.100.11:6443;

server 192.168.100.12:6443;

server

listen 192.168.100.15:6443;

proxy_pass k8s-apiserver;

···

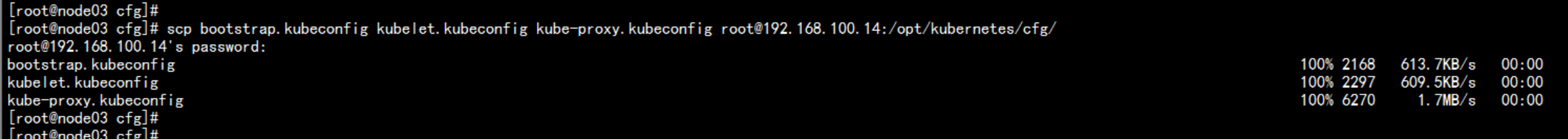
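Because the two load balancers differ only in their listen address, the stream block can also be generated rather than typed twice. This is a rough sketch under that assumption; render_stream_conf is a hypothetical helper that only prints the block, and the result still has to be placed at the top level of nginx.conf (outside the http block).

```shell
#!/usr/bin/env bash
# Sketch: print a layer-4 (stream) proxy block for the apiserver.
# Usage: render_stream_conf LISTEN_ADDR MASTER_IP [MASTER_IP...]
render_stream_conf() {
  local listen_addr="$1"; shift
  echo "stream {"
  # Single quotes keep the nginx variables literal instead of expanding them in the shell.
  echo '    log_format main "$remote_addr $upstream_addr $time_local $status";'
  echo "    access_log /var/log/nginx/k8s-access.log main;"
  echo "    upstream k8s-apiserver {"
  local ip
  for ip in "$@"; do
    echo "        server ${ip}:6443;"
  done
  echo "    }"
  echo "    server {"
  echo "        listen ${listen_addr}:6443;"
  echo "        proxy_pass k8s-apiserver;"
  echo "    }"
  echo "}"
}
```

For example, render_stream_conf 192.168.100.15 192.168.100.11 192.168.100.12 prints the block used on this server. Running nginx -t after editing nginx.conf checks the syntax before a restart.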

Point the nodes at the load balancer instead of master01

Log in to 192.168.100.13:

cd /opt/kubernetes/cfg/

vim bootstrap.kubeconfig

---

Change server: https://192.168.100.11:6443 to:

server: https://192.168.100.15:6443

---

vim kubelet.kubeconfig

----

Change server: https://192.168.100.11:6443 to:

server: https://192.168.100.15:6443

----

vim kube-proxy.kubeconfig

----

Change server: https://192.168.100.11:6443 to:

server: https://192.168.100.15:6443

----

scp bootstrap.kubeconfig kubelet.kubeconfig kube-proxy.kubeconfig root@192.168.100.14:/opt/kubernetes/cfg/
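The three kubeconfig edits are the same substitution, so they can be applied in one pass. A minimal sketch, assuming GNU sed and that each file contains a line of the form "server: https://IP:PORT"; the function name repoint_kubeconfigs is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: switch the apiserver endpoint in the three node kubeconfig files.
# Usage: repoint_kubeconfigs CFG_DIR OLD_HOST:PORT NEW_HOST:PORT
repoint_kubeconfigs() {
  local dir="$1" old="$2" new="$3" f
  for f in bootstrap.kubeconfig kubelet.kubeconfig kube-proxy.kubeconfig; do
    # '|' is the sed delimiter because the URL contains '/'.
    sed -i "s|server: https://${old}|server: https://${new}|" "${dir}/${f}"
  done
}
```

On a node this would be called as: repoint_kubeconfigs /opt/kubernetes/cfg 192.168.100.11:6443 192.168.100.15:6443. Running grep server: on the three files afterwards confirms the change before kubelet and kube-proxy are restarted.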

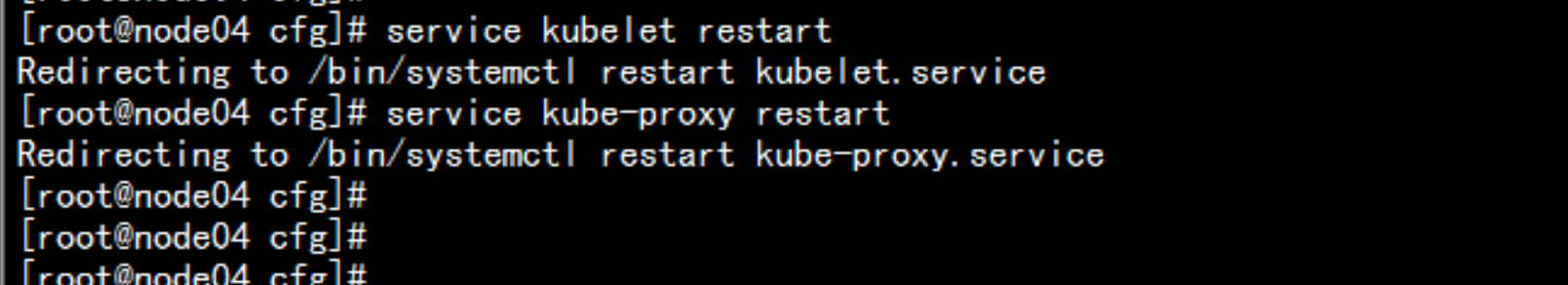

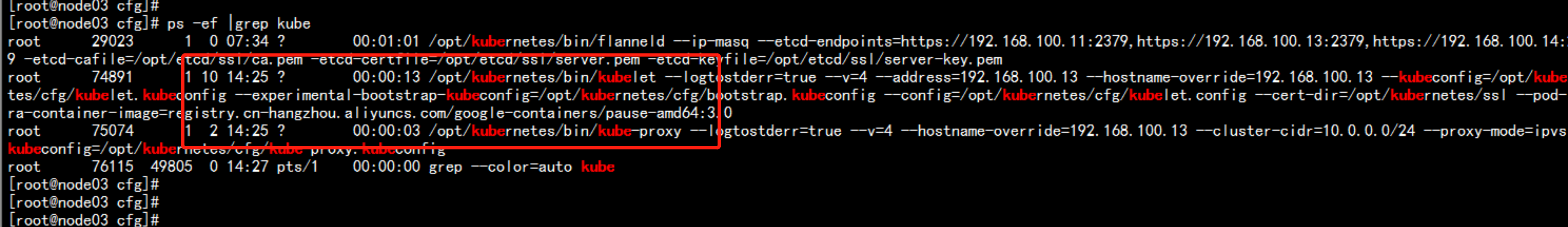

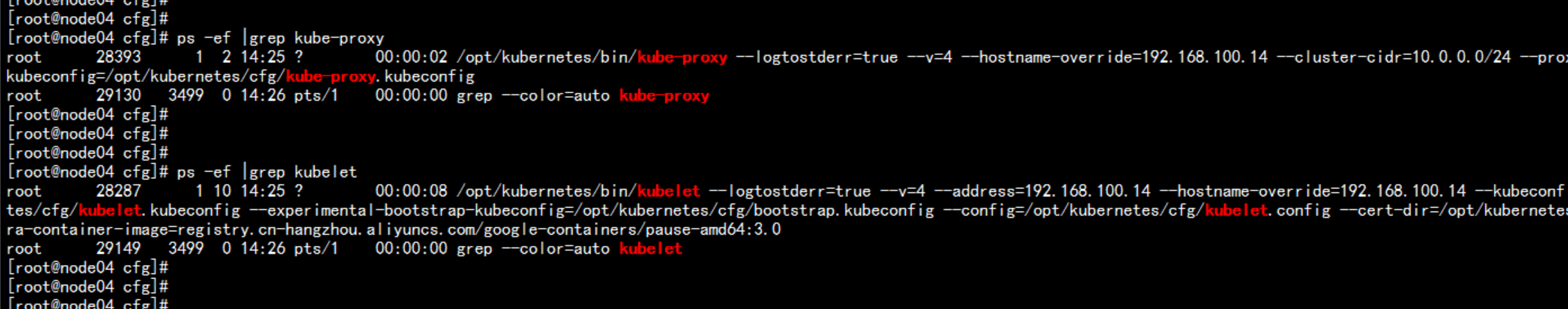

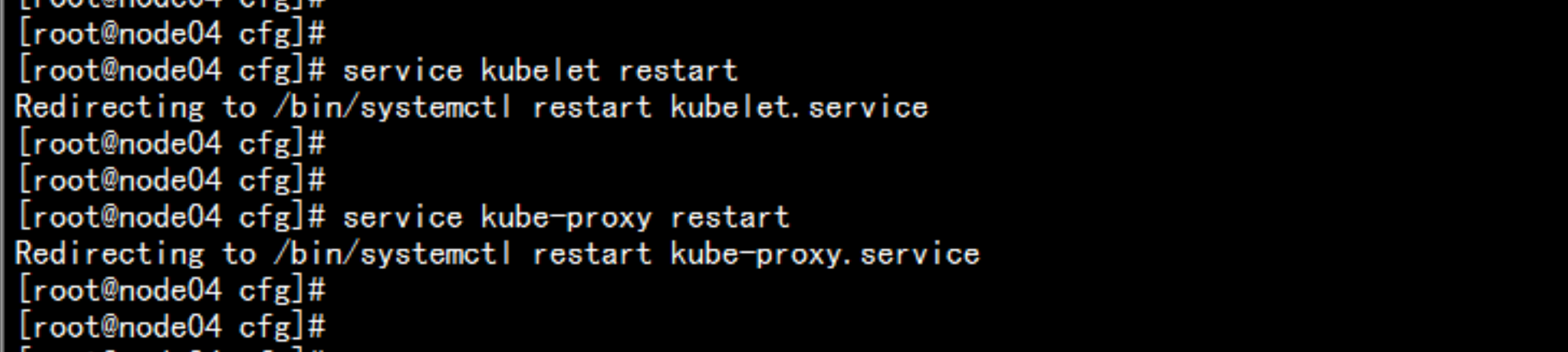

Restart kubelet and kube-proxy on the nodes

service kubelet restart

service kube-proxy restart

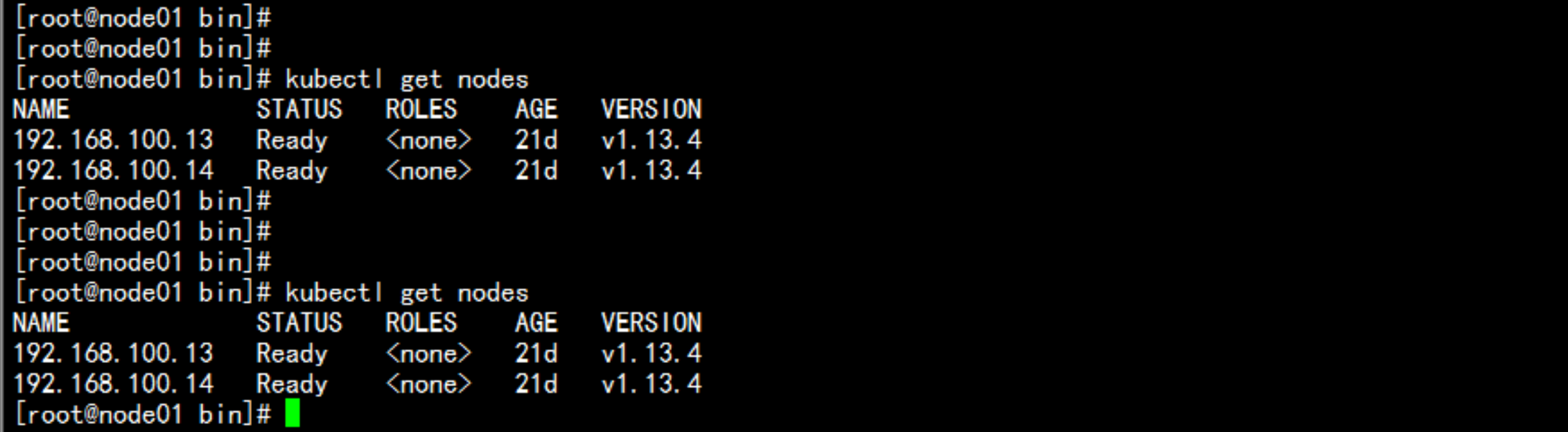

Verify from the master node:

kubectl get nodes

3: Configure keepalived high availability for the nginx load balancer

Log in to 192.168.100.16

Install nginx the same way as on 192.168.100.15

Edit:

vim /etc/nginx/nginx.conf

----

stream {

log_format main "$remote_addr $upstream_addr $time_local $status";

access_log /var/log/nginx/k8s-access.log main;

upstream k8s-apiserver {

server 192.168.100.11:6443;

server 192.168.100.12:6443;

}

server {

listen 192.168.100.16:6443;

proxy_pass k8s-apiserver;

}

}

---

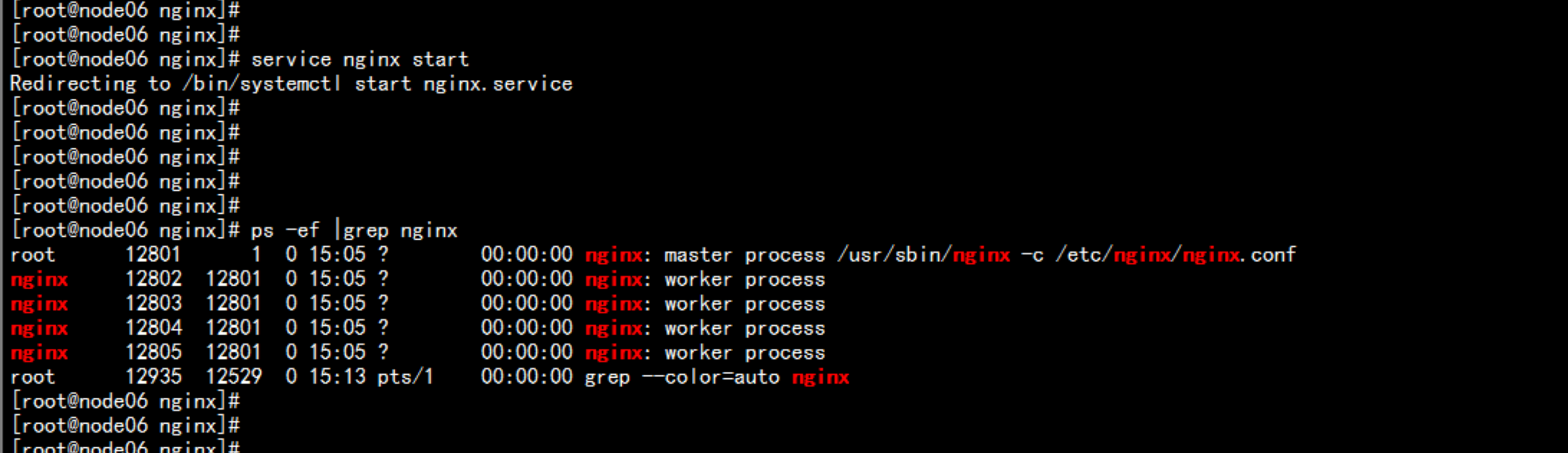

service nginx start

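Configure keepalived high availability (install keepalived on both load balancers, 192.168.100.15 and 192.168.100.16)

yum install keepalived

keepalived configuration file on the primary, 192.168.100.15: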

cd /etc/keepalived/

vim keepalived.conf

---

! Configuration File for keepalived

global_defs {

# notification recipient addresses

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

# notification sender address

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_MASTER

}

vrrp_script check_nginx {

script "/etc/keepalived/check_nginx.sh"

}

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 51 # VRRP router ID, unique to each instance

priority 100 # priority; set to 90 on the backup server 192.168.100.16

advert_int 1 # VRRP advertisement (heartbeat) interval, default 1 second

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.100.70/24

}

track_script {

check_nginx

}

}

----

Create the script that checks the nginx process

cd /etc/keepalived/

vim check_nginx.sh

---

#!/bin/bash

count=$(ps -ef |grep nginx |egrep -cv "grep|$$")

if [ "$count" -eq 0 ];then

/etc/init.d/keepalived stop

fi

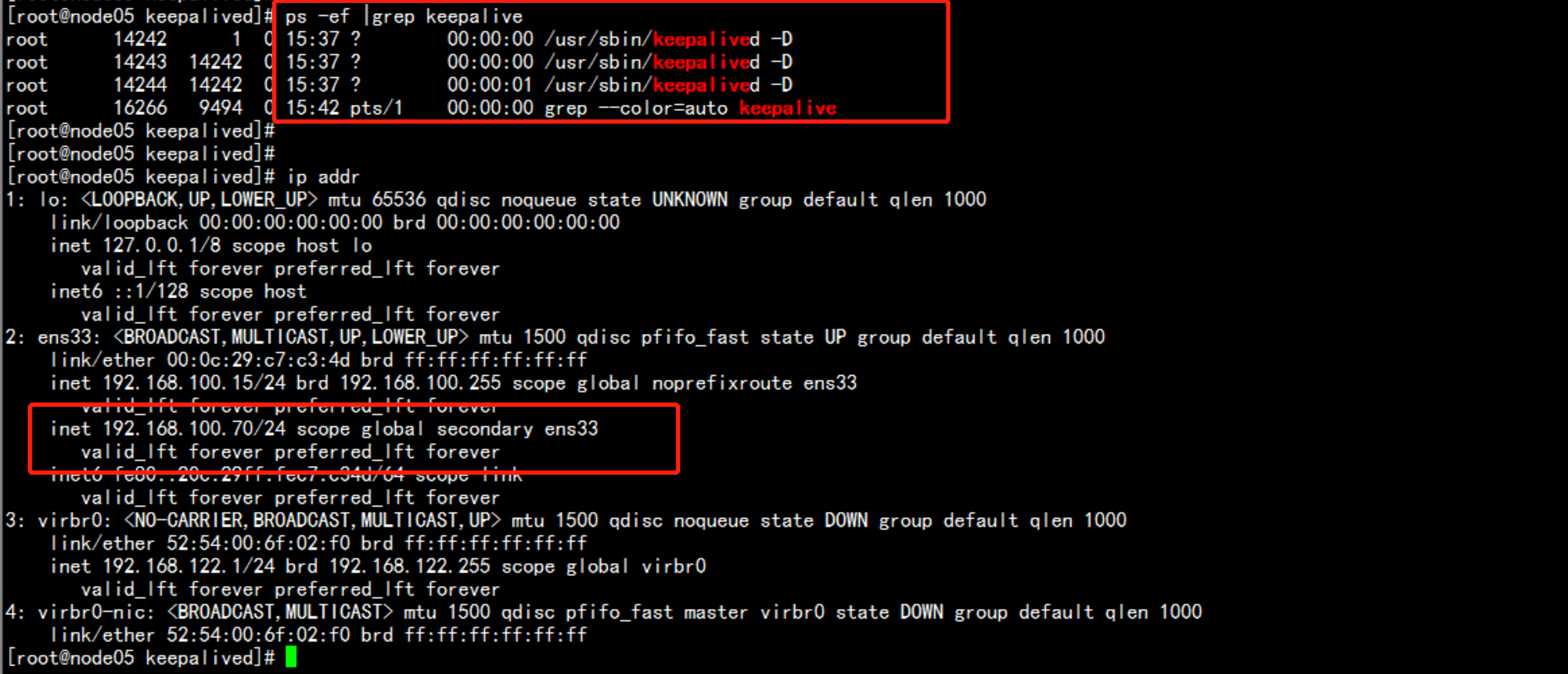
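The check in check_nginx.sh counts ps lines after filtering out grep and the script's own PID. As an alternative sketch (not the author's script), the same decision can be made with pgrep, assuming the procps pgrep utility is installed; the function names process_running and check_nginx are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: stop keepalived when no nginx process is left,
# so the VIP moves to the other load balancer.

# Succeeds if at least one process with exactly this name is running.
process_running() {
  pgrep -x "$1" >/dev/null 2>&1
}

check_nginx() {
  if ! process_running nginx; then
    service keepalived stop
  fi
}
```

Used as a script body, the file would end with a call to check_nginx. Matching on the exact process name avoids counting unrelated commands that merely contain the string nginx.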

---

chmod +x /etc/keepalived/check_nginx.sh

service keepalived start

ps -ef |grep keepalived

cd /etc/keepalived

scp check_nginx.sh keepalived.conf root@192.168.100.16:/etc/keepalived/

Log in to 192.168.100.16:

cd /etc/keepalived/

vim keepalived.conf

---

Change priority 100 to priority 90

----

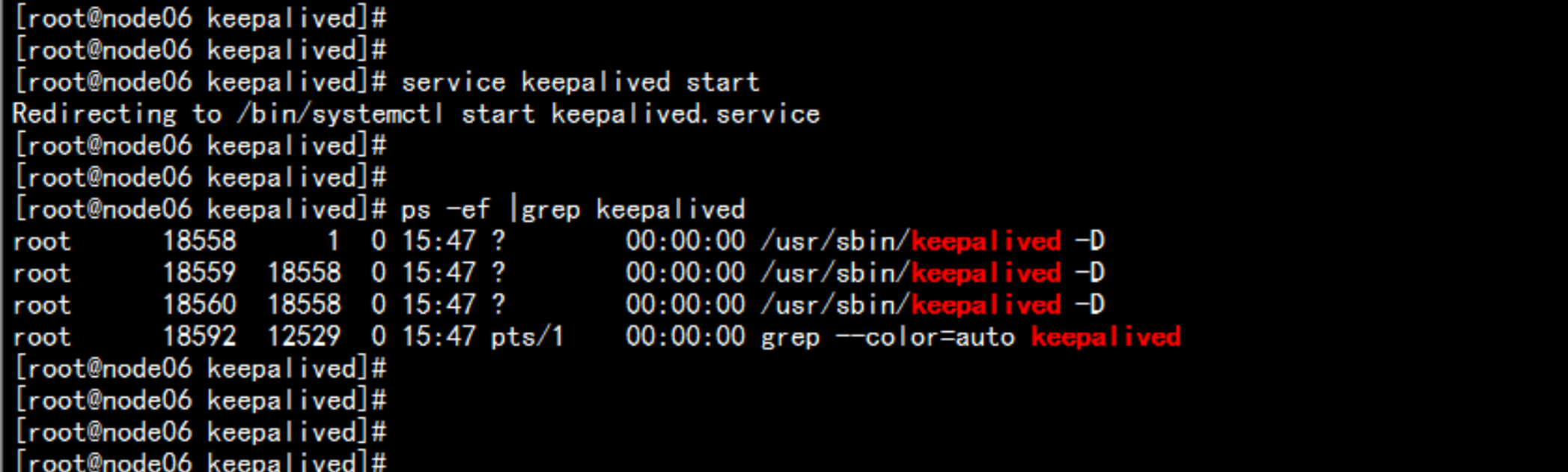

service keepalived start

ps -ef |grep keepalived
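With keepalived running on both servers, the virtual IP 192.168.100.70 should sit on the higher-priority server (192.168.100.15) and move to 192.168.100.16 when nginx on the primary stops. A small sketch for checking which server currently holds it, assuming the iproute2 ip command and the ens33 interface from the configuration above; has_vip is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: succeed if the given IPv4 address is configured on the interface.
# Usage: has_vip INTERFACE VIP
has_vip() {
  local iface="$1" vip="$2"
  # -o prints one line per address; match "inet <vip>/" as a fixed string.
  ip -o -4 addr show dev "$iface" | grep -qF "inet ${vip}/"
}
```

For example, has_vip ens33 192.168.100.70 && echo "VIP is on this server" can be run on each load balancer before and after stopping nginx on the primary.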

Update the apiserver address on the nodes

Point the nodes at the virtual IP 192.168.100.70

Log in to 192.168.100.13:

cd /opt/kubernetes/cfg/

vim bootstrap.kubeconfig

---

Change server: https://192.168.100.15:6443 to:

server: https://192.168.100.70:6443

---

vim kubelet.kubeconfig

----

Change server: https://192.168.100.15:6443 to:

server: https://192.168.100.70:6443

----

vim kube-proxy.kubeconfig

----

Change server: https://192.168.100.15:6443 to:

server: https://192.168.100.70:6443

----

scp bootstrap.kubeconfig kubelet.kubeconfig kube-proxy.kubeconfig root@192.168.100.14:/opt/kubernetes/cfg/

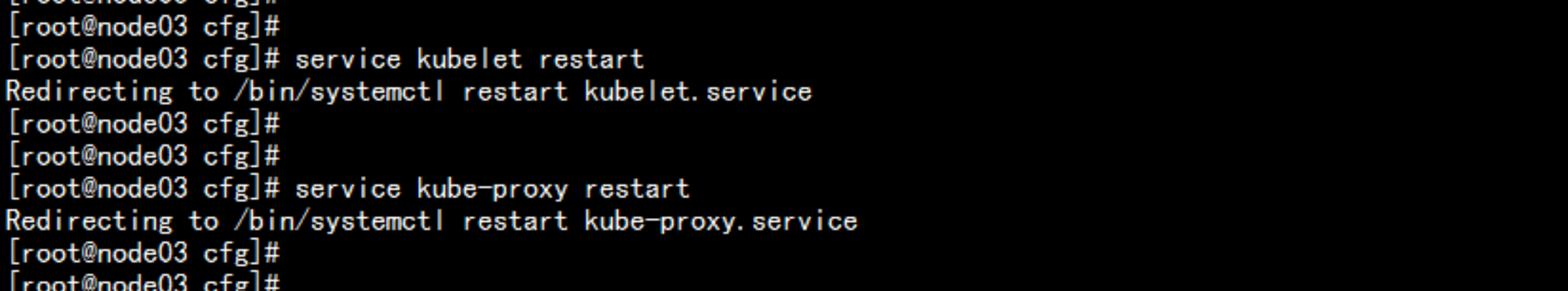

Restart kubelet and kube-proxy on the nodes

service kubelet restart

service kube-proxy restart

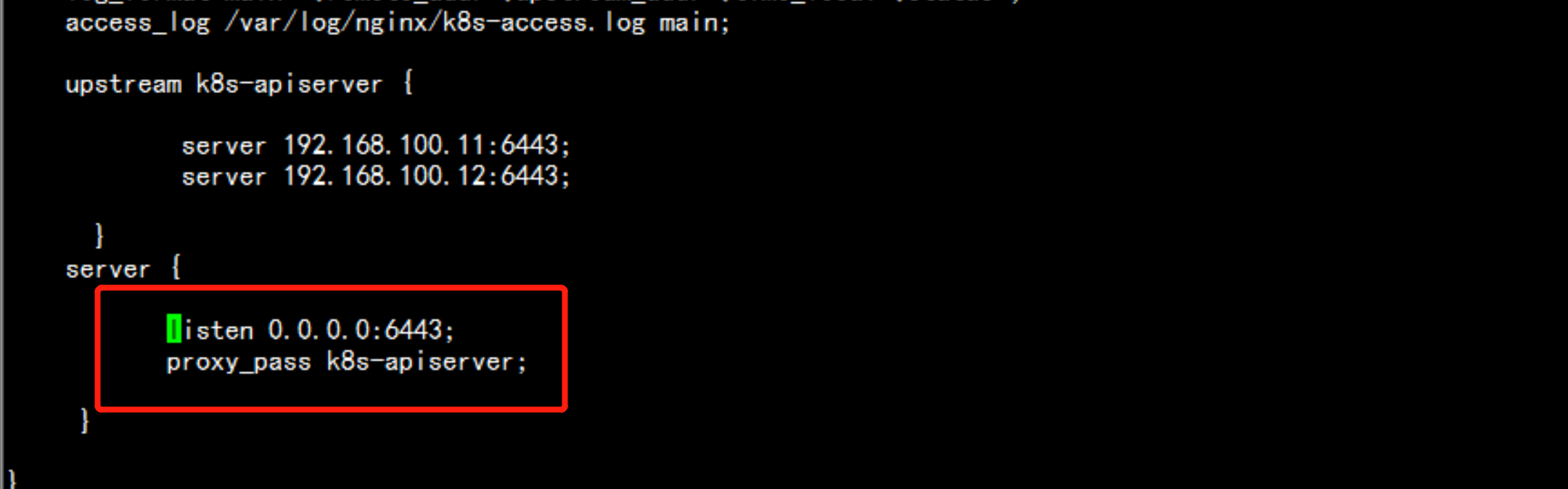

Log in to 192.168.100.15:

Edit the nginx configuration file

cd /etc/nginx/

vim nginx.conf

----

Change listen 192.168.100.15:6443 to listen 0.0.0.0:6443, so that nginx also accepts connections addressed to the virtual IP 192.168.100.70

----

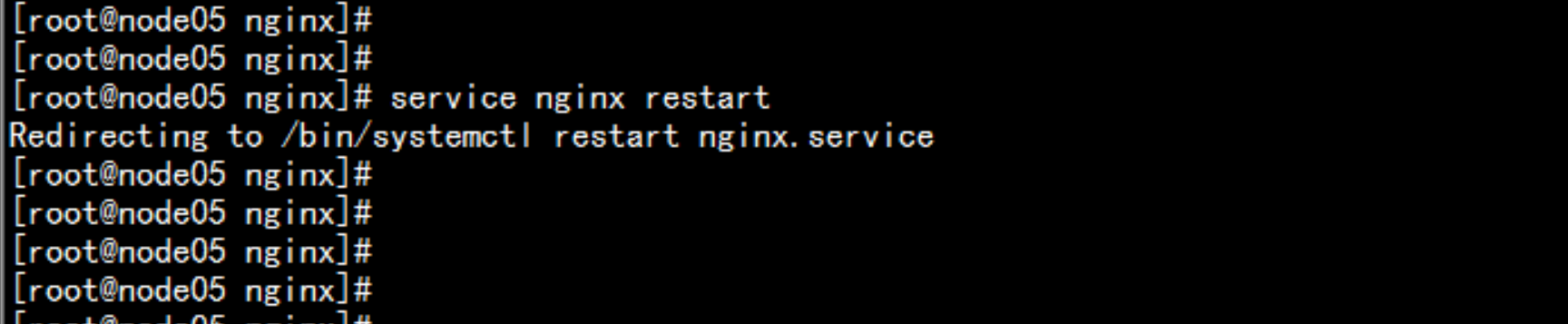

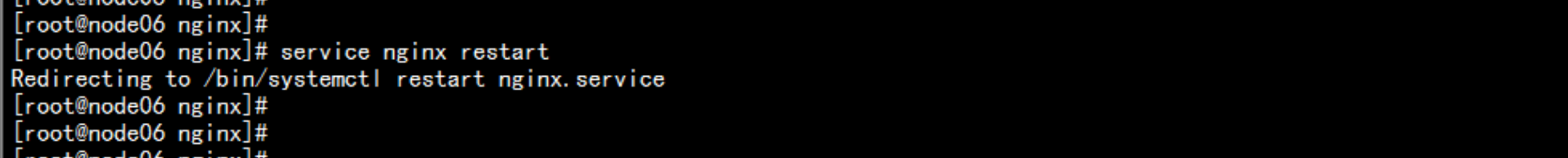

service nginx restart

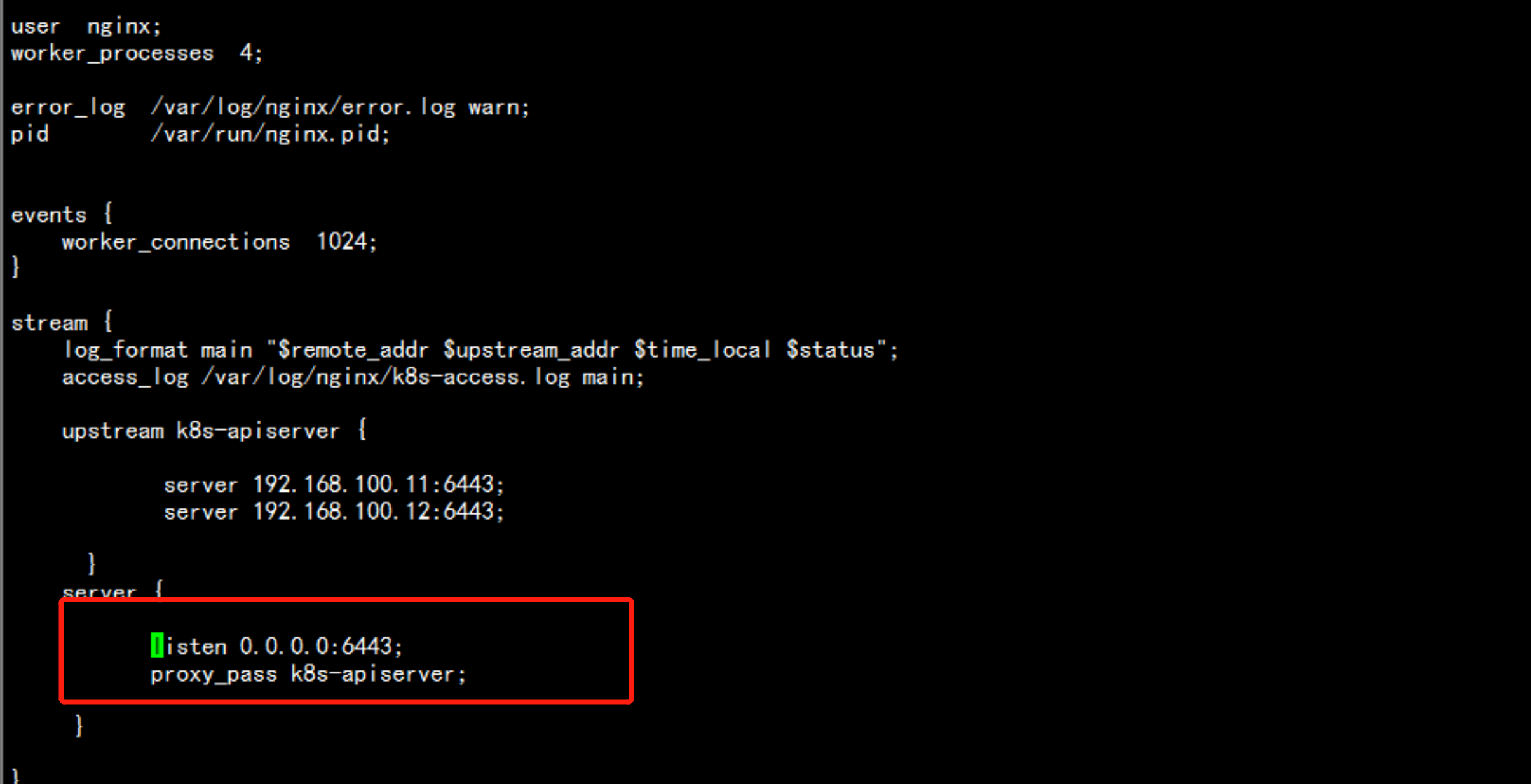

Log in to 192.168.100.16:

Edit the nginx configuration file

cd /etc/nginx/

vim nginx.conf

----

Change listen 192.168.100.16:6443 to listen 0.0.0.0:6443, so that nginx also accepts connections addressed to the virtual IP 192.168.100.70

----

service nginx restart

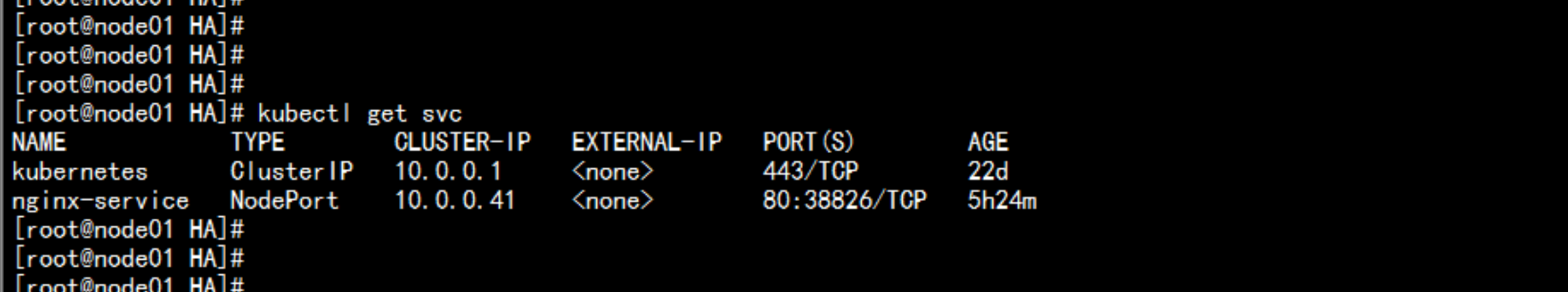

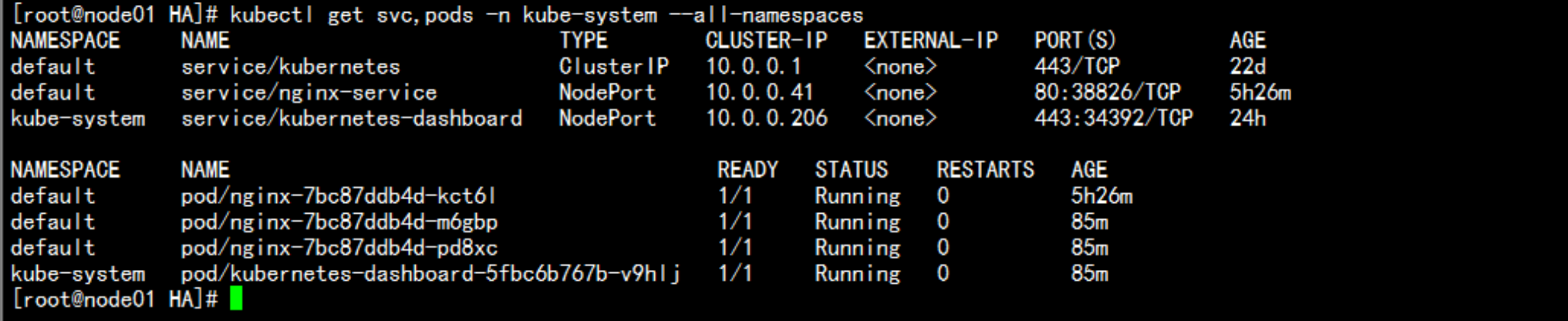

Check from the master01 node:

kubectl get svc

kubectl get svc,pods --all-namespaces

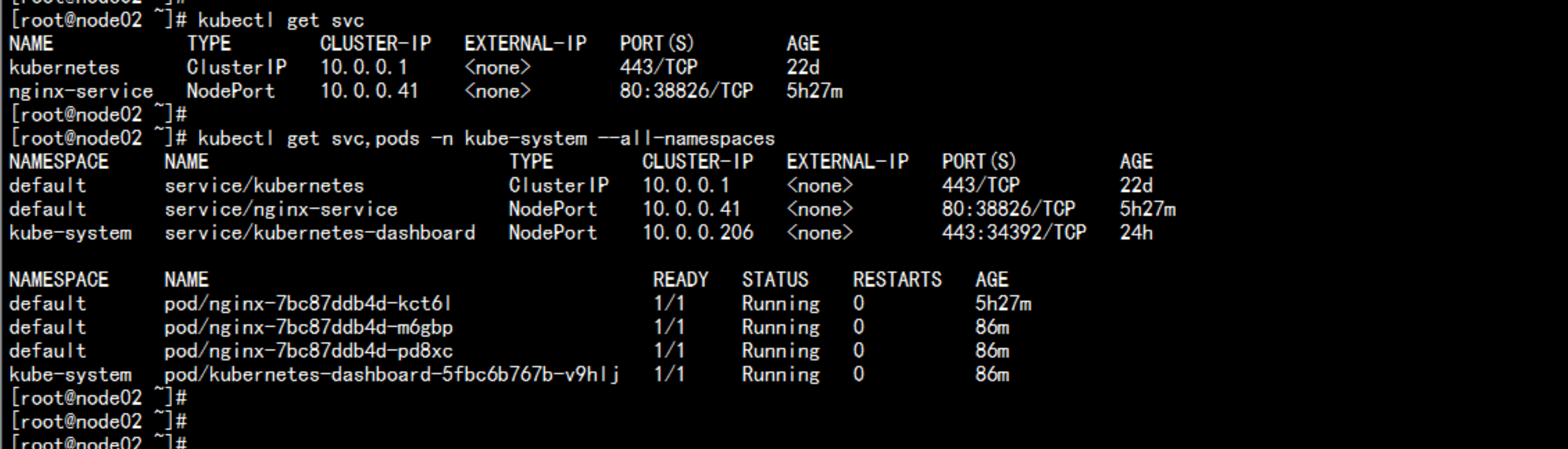

Check from the master02 node:

kubectl get svc

kubectl get svc,pods --all-namespaces
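The kubectl checks above talk to the local apiserver on each master. To confirm that the path the nodes use (virtual IP, then nginx, then an apiserver) also works, a probe through the VIP can help. This is a hedged sketch assuming curl is installed; apiserver_reachable is a hypothetical helper, and any HTTP response, including 401 or 403, counts as reachable because it proves the TCP and TLS path through the load balancer.

```shell
#!/usr/bin/env bash
# Sketch: succeed if an HTTPS request to the apiserver endpoint gets any HTTP response.
# Usage: apiserver_reachable HOST:PORT
apiserver_reachable() {
  local endpoint="$1"
  # -k skips certificate verification, since the VIP may not be listed in the
  # apiserver certificate; without --fail, curl exits 0 on any HTTP status.
  curl -k -s -o /dev/null --connect-timeout 3 "https://${endpoint}/version"
}
```

For example, apiserver_reachable 192.168.100.70:6443 && echo "apiserver reachable through the VIP" can be run from a node, and repeated after stopping nginx on 192.168.100.15 to observe the failover.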
