kubernetes: adding and removing master nodes and etcd nodes


Preface: This article was compiled by the editors of 小常识网 (cha138.com). It covers adding and removing Kubernetes master nodes and etcd nodes; we hope you find it useful.

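Scenario: in our test environment, resources were limited at first, so the master and etcd were each deployed as a single node on virtual machines. As usage and workload grew, we bought new servers, migrated the single master and etcd node onto them, and expanded both to three nodes for high availability.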

Environment:

Old etcd node IP: 192.168.30.31

New etcd node IPs: 192.168.30.17, 192.168.30.18, 192.168.30.19

Old kube-apiserver node IP: 192.168.30.32

New kube-apiserver node IPs: 192.168.30.17, 192.168.30.18, 192.168.30.19

kube-apiserver VIP: 192.168.30.254

Services running on each master: kube-apiserver, kube-controller-manager, kube-scheduler

Hostnames of the new nodes: node03, node4, node5

etcd node addition

# Run on: 192.168.30.31

# Set up the etcdctl environment (API v3)

Append to /etc/profile:

export ETCDCTL_API=3

export ENDPOINTS=https://192.168.30.31:2379

source /etc/profile

Append to ~/.bashrc:

alias etcdctl='/apps/etcd/bin/etcdctl --endpoints=$ENDPOINTS --cacert=/apps/etcd/ssl/etcd-ca.pem --cert=/apps/etcd/ssl/etcd_client.pem --key=/apps/etcd/ssl/etcd_client-key.pem'

source ~/.bashrc
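The alias only takes effect in interactive shells (bash does not expand aliases in non-interactive scripts by default). As a minimal alternative, assuming etcdctl 3.3 reads its global flags from `ETCDCTL_*` environment variables as its README describes, the same settings can be exported so that scripts and cron jobs see them too. Use this instead of the alias rather than together with it, since etcdctl may reject a setting given both as a flag and as a variable, and update `ETCDCTL_ENDPOINTS` wherever the steps below change `ENDPOINTS`.

```bash
# Same endpoint and TLS files as the alias above, expressed as environment
# variables (assumption: etcdctl v3 maps ETCDCTL_ENDPOINTS/CACERT/CERT/KEY
# onto --endpoints/--cacert/--cert/--key).
export ETCDCTL_API=3
export ETCDCTL_ENDPOINTS=https://192.168.30.31:2379
export ETCDCTL_CACERT=/apps/etcd/ssl/etcd-ca.pem
export ETCDCTL_CERT=/apps/etcd/ssl/etcd_client.pem
export ETCDCTL_KEY=/apps/etcd/ssl/etcd_client-key.pem
```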

Check that the configuration works:

etcdctl endpoint health

[root@etcd ~]# etcdctl endpoint health

https://192.168.30.31:2379 is healthy: successfully committed proposal: took = 20.258113ms

Output like this means the configuration is correct.
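The same check is repeated after every membership change below, so it can help to turn the health report into a pass/fail result. A minimal sketch, assuming the plain-text output format shown above (`<endpoint> is healthy: ...`); the function names are hypothetical:

```bash
# Count endpoints reported as healthy. Healthy lines look like
#   https://192.168.30.31:2379 is healthy: successfully committed proposal: ...
count_healthy() {
  grep -c ' is healthy:' || true   # grep -c prints 0 (and exits 1) on no match
}

# Succeed only if every endpoint line is healthy. Lines that do not start
# with "http" (e.g. "Error: unhealthy cluster") are ignored.
all_healthy() {
  local report total healthy
  report=$(cat)
  total=$(printf '%s\n' "$report" | grep -c '^http' || true)
  healthy=$(printf '%s\n' "$report" | grep '^http' | count_healthy)
  [ "$total" -gt 0 ] && [ "$total" -eq "$healthy" ]
}

# Usage: etcdctl endpoint health 2>&1 | all_healthy && echo "all endpoints healthy"
```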

# Back up the etcd data. Do not skip this: without a backup, a mistake means redeploying from scratch

etcdctl snapshot save snapshot.db

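# If something goes wrong, restore the data (stop etcd and move the existing data directory aside first; snapshot restore refuses to write into an existing --data-dir)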

etcdctl snapshot restore ./snapshot.db --name=etcd --initial-advertise-peer-urls=https://192.168.30.31:2380 --initial-cluster-token=etcd-cluster-0 --initial-cluster=etcd=https://192.168.30.31:2380 --data-dir=/apps/etcd/data/default.etcd
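Before any membership change it is worth confirming that the snapshot is actually readable. A minimal sketch, assuming etcdctl is on PATH and already pointed at the cluster (for example through `ETCDCTL_*` variables, since the alias is not visible inside scripts); the default directory `/apps/etcd/backup` is hypothetical:

```bash
# Write a timestamped snapshot, then verify it with `snapshot status`,
# which prints the snapshot's hash, revision, total keys and size.
backup_etcd() {
  local dir=${1:-/apps/etcd/backup}   # hypothetical default location
  local file
  file="$dir/snapshot-$(date +%Y%m%d-%H%M%S).db"
  mkdir -p "$dir" || return 1
  etcdctl snapshot save "$file" >&2 || return 1
  etcdctl snapshot status "$file" -w table >&2 || return 1
  printf '%s\n' "$file"   # only the path goes to stdout, so callers can capture it
}

# Usage: snap=$(backup_etcd) && echo "saved $snap"
```

Diagnostics go to stderr so that `$(backup_etcd)` captures nothing but the snapshot path.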

# Generate the new etcd certificates

## Create the ETCD Server configuration file

export ETCD_SERVER_IPS=" \"192.168.30.31\", \"192.168.30.17\", \"192.168.30.18\", \"192.168.30.19\" " && export ETCD_SERVER_HOSTNAMES=" \"etcd\", \"node03\", \"node4\", \"node5\" " && cat << EOF | tee /opt/k8s/cfssl/etcd/etcd_server.json
{
  "CN": "etcd",
  "hosts": [
    "127.0.0.1",
    $ETCD_SERVER_IPS,
    $ETCD_SERVER_HOSTNAMES
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "GuangDong",
      "L": "GuangZhou",
      "O": "niuke",
      "OU": "niuke"
    }
  ]
}
EOF

## Generate the ETCD Server certificate and private key

cfssl gencert -ca=/opt/k8s/cfssl/pki/etcd/etcd-ca.pem -ca-key=/opt/k8s/cfssl/pki/etcd/etcd-ca-key.pem -config=/opt/k8s/cfssl/ca-config.json -profile=kubernetes /opt/k8s/cfssl/etcd/etcd_server.json | cfssljson -bare /opt/k8s/cfssl/pki/etcd/etcd_server

## Create the ETCD Member 2 configuration file (node03)

Use the node hostnames (node03, node4, node5) for the members so the generated file names match the --peer-cert-file paths in the ETCD_OPTS lines below.

export ETCD_MEMBER_2_IP=" \"192.168.30.17\" " && export ETCD_MEMBER_2_HOSTNAMES="node03" && cat << EOF | tee /opt/k8s/cfssl/etcd/$ETCD_MEMBER_2_HOSTNAMES.json
{
  "CN": "etcd",
  "hosts": [
    "127.0.0.1",
    $ETCD_MEMBER_2_IP,
    "$ETCD_MEMBER_2_HOSTNAMES"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "GuangDong",
      "L": "GuangZhou",
      "O": "niuke",
      "OU": "niuke"
    }
  ]
}
EOF

## Generate the ETCD Member 2 certificate and private key

cfssl gencert -ca=/opt/k8s/cfssl/pki/etcd/etcd-ca.pem -ca-key=/opt/k8s/cfssl/pki/etcd/etcd-ca-key.pem -config=/opt/k8s/cfssl/ca-config.json -profile=kubernetes /opt/k8s/cfssl/etcd/$ETCD_MEMBER_2_HOSTNAMES.json | cfssljson -bare /opt/k8s/cfssl/pki/etcd/etcd_member_$ETCD_MEMBER_2_HOSTNAMES

## Create the ETCD Member 3 configuration file (node4)

export ETCD_MEMBER_3_IP=" \"192.168.30.18\" " && export ETCD_MEMBER_3_HOSTNAMES="node4" && cat << EOF | tee /opt/k8s/cfssl/etcd/$ETCD_MEMBER_3_HOSTNAMES.json
{
  "CN": "etcd",
  "hosts": [
    "127.0.0.1",
    $ETCD_MEMBER_3_IP,
    "$ETCD_MEMBER_3_HOSTNAMES"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "GuangDong",
      "L": "GuangZhou",
      "O": "niuke",
      "OU": "niuke"
    }
  ]
}
EOF

## Generate the ETCD Member 3 certificate and private key

cfssl gencert -ca=/opt/k8s/cfssl/pki/etcd/etcd-ca.pem -ca-key=/opt/k8s/cfssl/pki/etcd/etcd-ca-key.pem -config=/opt/k8s/cfssl/ca-config.json -profile=kubernetes /opt/k8s/cfssl/etcd/$ETCD_MEMBER_3_HOSTNAMES.json | cfssljson -bare /opt/k8s/cfssl/pki/etcd/etcd_member_$ETCD_MEMBER_3_HOSTNAMES

## Create the ETCD Member 4 configuration file (node5)

export ETCD_MEMBER_4_IP=" \"192.168.30.19\" " && export ETCD_MEMBER_4_HOSTNAMES="node5" && cat << EOF | tee /opt/k8s/cfssl/etcd/$ETCD_MEMBER_4_HOSTNAMES.json
{
  "CN": "etcd",
  "hosts": [
    "127.0.0.1",
    $ETCD_MEMBER_4_IP,
    "$ETCD_MEMBER_4_HOSTNAMES"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "GuangDong",
      "L": "GuangZhou",
      "O": "niuke",
      "OU": "niuke"
    }
  ]
}
EOF

## Generate the ETCD Member 4 certificate and private key

cfssl gencert -ca=/opt/k8s/cfssl/pki/etcd/etcd-ca.pem -ca-key=/opt/k8s/cfssl/pki/etcd/etcd-ca-key.pem -config=/opt/k8s/cfssl/ca-config.json -profile=kubernetes /opt/k8s/cfssl/etcd/$ETCD_MEMBER_4_HOSTNAMES.json | cfssljson -bare /opt/k8s/cfssl/pki/etcd/etcd_member_$ETCD_MEMBER_4_HOSTNAMES
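The three member files above differ only in IP and hostname. As a sketch (not the author's script), one function can emit them. It uses the node hostnames node03, node4 and node5 so that the output file names line up with the `--peer-cert-file` paths in the ETCD_OPTS lines further down:

```bash
# Emit the CSR JSON for one etcd member; fields mirror the heredocs above.
member_csr() {
  local name=$1 ip=$2
  cat <<EOF
{
  "CN": "etcd",
  "hosts": ["127.0.0.1", "$ip", "$name"],
  "key": {"algo": "rsa", "size": 2048},
  "names": [{"C": "CN", "ST": "GuangDong", "L": "GuangZhou", "O": "niuke", "OU": "niuke"}]
}
EOF
}

# Usage: write each JSON file, then sign it exactly as above.
# for pair in node03:192.168.30.17 node4:192.168.30.18 node5:192.168.30.19; do
#   name=${pair%%:*}; ip=${pair#*:}
#   member_csr "$name" "$ip" > "/opt/k8s/cfssl/etcd/$name.json"
#   cfssl gencert -ca=/opt/k8s/cfssl/pki/etcd/etcd-ca.pem -ca-key=/opt/k8s/cfssl/pki/etcd/etcd-ca-key.pem \
#     -config=/opt/k8s/cfssl/ca-config.json -profile=kubernetes "/opt/k8s/cfssl/etcd/$name.json" \
#     | cfssljson -bare "/opt/k8s/cfssl/pki/etcd/etcd_member_$name"
# done
```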

# Distribute the certificates to every new node (no space between "root@IP:" and the remote path)

scp -r /opt/k8s/cfssl/pki/etcd/etcd* root@192.168.30.17:/apps/etcd/ssl/

scp -r /opt/k8s/cfssl/pki/etcd/etcd* root@192.168.30.18:/apps/etcd/ssl/

scp -r /opt/k8s/cfssl/pki/etcd/etcd* root@192.168.30.19:/apps/etcd/ssl/
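The same distribution as a loop, sketched under the assumption that root can SSH to each node. The space matters: with `root@IP: /apps/etcd/ssl/`, scp treats `root@IP:` (the remote home directory) as an extra source and `/apps/etcd/ssl/` as the local destination, so nothing reaches the new node.

```bash
# Copy the etcd certificates to each new member, creating the target first.
distribute_etcd_certs() {
  local src=/opt/k8s/cfssl/pki/etcd dst=/apps/etcd/ssl ip
  for ip in "$@"; do
    ssh "root@$ip" "mkdir -p $dst" || return 1   # make sure the target exists
    scp "$src"/etcd* "root@$ip:$dst/" || return 1 # host:path, no space
  done
}

# Usage: distribute_etcd_certs 192.168.30.17 192.168.30.18 192.168.30.19
```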

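# With the data backed up, add the first new member. From this point the cluster counts node03 toward quorum (2 of 2 members), so start etcd on node03 promptly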

etcdctl member add node03 --peer-urls=https://192.168.30.17:2380

Example output (captured with the v2-style form etcdctl member add etcd03 https://192.168.30.17:2380, which is why the name reads etcd03; whatever name is passed to member add must be reused as --name when the new member starts):

Added member named etcd03 with ID 92bf7d7f20e298fc to cluster

ETCD_NAME="etcd03"

ETCD_INITIAL_CLUSTER="etcd03=https://192.168.30.17:2380,etcd=https://192.168.30.31:2380"

ETCD_INITIAL_CLUSTER_STATE="existing"

# Edit the etcd startup options on node03 (192.168.30.17)

ETCD_OPTS="--name=node03 --data-dir=/apps/etcd/data/default.etcd --listen-peer-urls=https://192.168.30.17:2380 --listen-client-urls=https://192.168.30.17:2379,https://127.0.0.1:2379 --advertise-client-urls=https://192.168.30.17:2379 --initial-advertise-peer-urls=https://192.168.30.17:2380 --initial-cluster=etcd=https://192.168.30.31:2380,node03=https://192.168.30.17:2380 --initial-cluster-token=etcd-cluster-0 --initial-cluster-state=existing --heartbeat-interval=6000 --election-timeout=30000 --snapshot-count=5000 --auto-compaction-retention=1 --max-request-bytes=33554432 --quota-backend-bytes=17179869184 --trusted-ca-file=/apps/etcd/ssl/etcd-ca.pem --cert-file=/apps/etcd/ssl/etcd_server.pem --key-file=/apps/etcd/ssl/etcd_server-key.pem --peer-cert-file=/apps/etcd/ssl/etcd_member_node03.pem --peer-key-file=/apps/etcd/ssl/etcd_member_node03-key.pem --peer-client-cert-auth --peer-trusted-ca-file=/apps/etcd/ssl/etcd-ca.pem"

# Start etcd on node03

service etcd start
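Each `--initial-cluster` list must name exactly the members that exist at that point, using the same names given to `etcdctl member add`. A small helper (hypothetical, not part of etcd) can build the value from name=IP pairs, assuming every peer listens on port 2380 as in this setup:

```bash
# Build an --initial-cluster value from name=IP pairs, assuming each peer
# listens on https://IP:2380 as in the ETCD_OPTS lines of this article.
initial_cluster() {
  local out="" pair
  for pair in "$@"; do
    out="${out:+$out,}${pair%%=*}=https://${pair#*=}:2380"
  done
  printf '%s\n' "$out"
}

# Usage:
# initial_cluster etcd=192.168.30.31 node03=192.168.30.17
# initial_cluster etcd=192.168.30.31 node03=192.168.30.17 node4=192.168.30.18
```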

Add the new member to ENDPOINTS in /etc/profile:

export ENDPOINTS=https://192.168.30.17:2379,https://192.168.30.31:2379

source /etc/profile

etcdctl endpoint status

# Check that the DB sizes match; if they do, add the next member
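The size comparison can be scripted. A rough sketch that parses the default (simple) output of etcdctl 3.3's `endpoint status`, in which the fourth comma-separated field is the DB size. Sizes can legitimately drift a little between members (defragmentation, for one, runs per member), so treat a mismatch as a prompt to look closer rather than a hard failure:

```bash
# Succeed only if all endpoint lines report the same DB size (field 4).
# Line format: endpoint, member ID, version, DB size, is leader, raft term, raft index
same_db_size() {
  awk -F', ' 'NF >= 7 { sizes[$4] = 1; n++ }
    END { distinct = 0; for (s in sizes) distinct++; exit !(n > 0 && distinct == 1) }'
}

# Usage: etcdctl endpoint status | same_db_size && echo "DB sizes match"
```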

etcdctl member add node4 --peer-urls=https://192.168.30.18:2380

Then, on node4 (192.168.30.18), set the startup options and run service etcd start:

ETCD_OPTS="--name=node4 --data-dir=/apps/etcd/data/default.etcd --listen-peer-urls=https://192.168.30.18:2380 --listen-client-urls=https://192.168.30.18:2379,https://127.0.0.1:2379 --advertise-client-urls=https://192.168.30.18:2379 --initial-advertise-peer-urls=https://192.168.30.18:2380 --initial-cluster=node4=https://192.168.30.18:2380,etcd=https://192.168.30.31:2380,node03=https://192.168.30.17:2380 --initial-cluster-token=etcd-cluster-0 --initial-cluster-state=existing --heartbeat-interval=6000 --election-timeout=30000 --snapshot-count=5000 --auto-compaction-retention=1 --max-request-bytes=33554432 --quota-backend-bytes=17179869184 --trusted-ca-file=/apps/etcd/ssl/etcd-ca.pem --cert-file=/apps/etcd/ssl/etcd_server.pem --key-file=/apps/etcd/ssl/etcd_server-key.pem --peer-cert-file=/apps/etcd/ssl/etcd_member_node4.pem --peer-key-file=/apps/etcd/ssl/etcd_member_node4-key.pem --peer-client-cert-auth --peer-trusted-ca-file=/apps/etcd/ssl/etcd-ca.pem"

Once node4 is in sync, add node5 the same way and start etcd on node5 (192.168.30.19):

etcdctl member add node5 --peer-urls=https://192.168.30.19:2380

ETCD_OPTS="--name=node5 --data-dir=/apps/etcd/data/default.etcd --listen-peer-urls=https://192.168.30.19:2380 --listen-client-urls=https://192.168.30.19:2379,https://127.0.0.1:2379 --advertise-client-urls=https://192.168.30.19:2379 --initial-advertise-peer-urls=https://192.168.30.19:2380 --initial-cluster=node4=https://192.168.30.18:2380,etcd=https://192.168.30.31:2380,node5=https://192.168.30.19:2380,node03=https://192.168.30.17:2380 --initial-cluster-token=etcd-cluster-0 --initial-cluster-state=existing --heartbeat-interval=6000 --election-timeout=30000 --snapshot-count=5000 --auto-compaction-retention=1 --max-request-bytes=33554432 --quota-backend-bytes=17179869184 --trusted-ca-file=/apps/etcd/ssl/etcd-ca.pem --cert-file=/apps/etcd/ssl/etcd_server.pem --key-file=/apps/etcd/ssl/etcd_server-key.pem --peer-cert-file=/apps/etcd/ssl/etcd_member_node5.pem --peer-key-file=/apps/etcd/ssl/etcd_member_node5-key.pem --peer-client-cert-auth --peer-trusted-ca-file=/apps/etcd/ssl/etcd-ca.pem"

After node5 has started, point ENDPOINTS in /etc/profile at the three new members:

export ENDPOINTS=https://192.168.30.17:2379,https://192.168.30.18:2379,https://192.168.30.19:2379

source /etc/profile

# Verify that the etcd cluster is healthy

[root@node03 ~]# etcdctl endpoint status

https://192.168.30.17:2379, 92bf7d7f20e298fc, 3.3.13, 30 MB, false, 16, 3963193

https://192.168.30.18:2379, 127f6360c5080113, 3.3.13, 30 MB, true, 16, 3963193

https://192.168.30.19:2379, 5a0a05654c847f54, 3.3.13, 30 MB, false, 16, 3963193

All members are healthy.

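# Then, on every new member, update these two settings so they list only the new members:

--initial-cluster=node4=https://192.168.30.18:2380,node5=https://192.168.30.19:2380,node03=https://192.168.30.17:2380 --initial-cluster-token=etcd-cluster-0

(Once a member has joined and has a data directory, etcd ignores the --initial-cluster* flags on restart, so this is clean-up rather than a functional change.)

kube-apiserver node addition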

# Create the certificate for the new masters

## Create the Kubernetes API Server configuration file

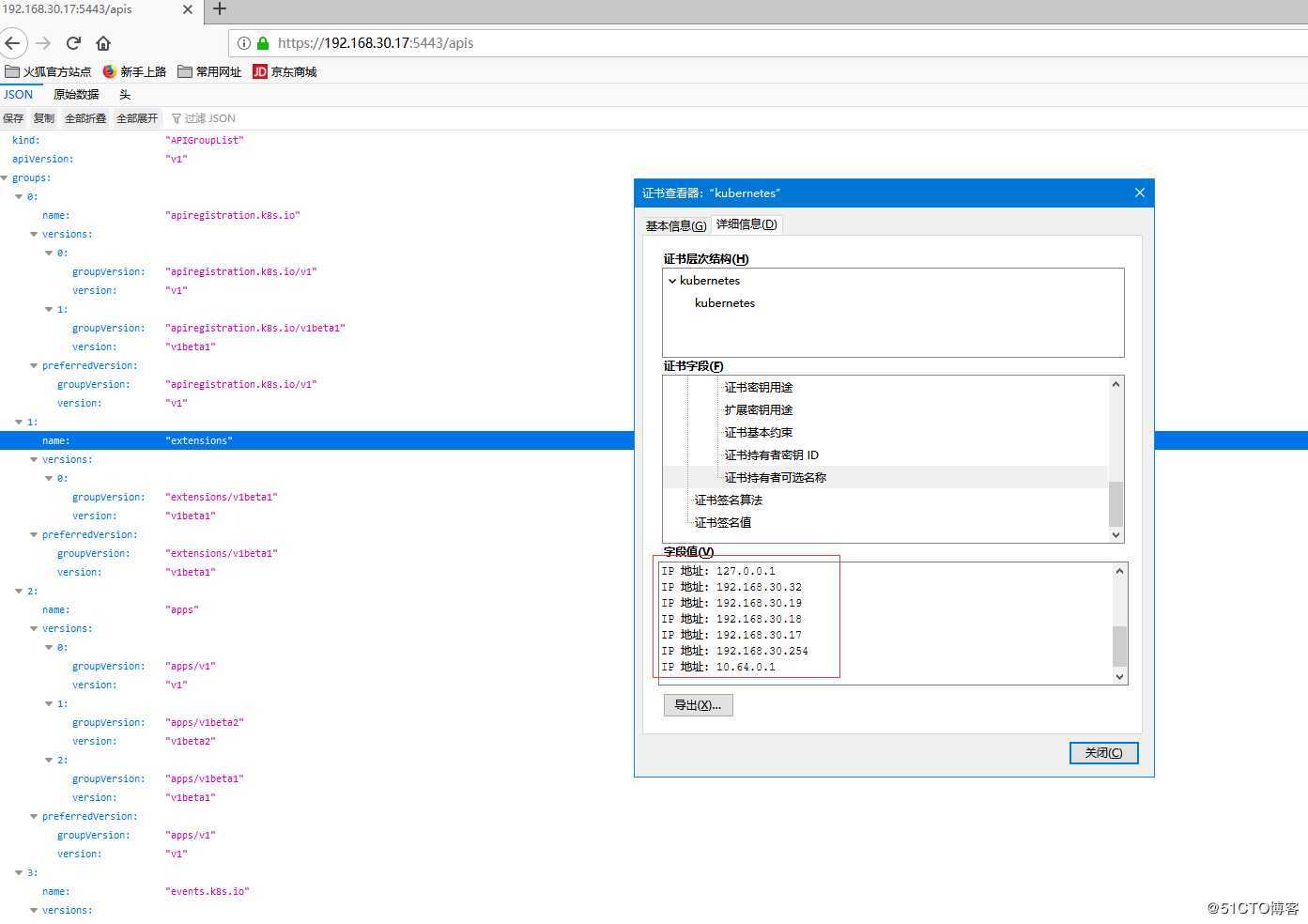

export K8S_APISERVER_VIP=" \"192.168.30.32\", \"192.168.30.17\", \"192.168.30.18\", \"192.168.30.19\", \"192.168.30.254\", " && export K8S_APISERVER_SERVICE_CLUSTER_IP="10.64.0.1" && export K8S_APISERVER_HOSTNAME="api.k8s.niuke.local" && export K8S_CLUSTER_DOMAIN_SHORTNAME="niuke" && export K8S_CLUSTER_DOMAIN_FULLNAME="niuke.local" && cat << EOF | tee /opt/k8s/cfssl/k8s/k8s_apiserver.json
{
  "CN": "kubernetes",
  "hosts": [
    "127.0.0.1",
    $K8S_APISERVER_VIP
    "$K8S_APISERVER_SERVICE_CLUSTER_IP",
    "$K8S_APISERVER_HOSTNAME",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.$K8S_CLUSTER_DOMAIN_SHORTNAME",
    "kubernetes.default.svc.$K8S_CLUSTER_DOMAIN_FULLNAME"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "GuangDong",
      "L": "GuangZhou",
      "O": "niuke",
      "OU": "niuke"
    }
  ]
}
EOF

## Generate the Kubernetes API Server certificate and private key

cfssl gencert -ca=/opt/k8s/cfssl/pki/k8s/k8s-ca.pem -ca-key=/opt/k8s/cfssl/pki/k8s/k8s-ca-key.pem -config=/opt/k8s/cfssl/ca-config.json -profile=kubernetes /opt/k8s/cfssl/k8s/k8s_apiserver.json | cfssljson -bare /opt/k8s/cfssl/pki/k8s/k8s_server
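A VIP or master IP missing from the certificate's SAN list is a common cause of TLS errors behind a load balancer. A sketch that reports which required IPs are absent, given the SAN line printed by `openssl x509 -noout -text` (entries of the form `IP Address:...`); the helper name is hypothetical:

```bash
# Print each required IP that is absent from a certificate's SAN list.
# $1 is the SAN line from openssl, e.g.
#   DNS:kubernetes, DNS:kubernetes.default, IP Address:127.0.0.1, IP Address:192.168.30.17
missing_sans() {
  local sans="$1," ip
  shift
  for ip in "$@"; do
    case "$sans" in
      *"IP Address:$ip,"*) ;;        # present
      *) printf '%s\n' "$ip" ;;      # missing
    esac
  done
}

# Usage:
# san=$(openssl x509 -in /opt/k8s/cfssl/pki/k8s/k8s_server.pem -noout -text | grep -A1 'Subject Alternative Name' | tail -n 1)
# missing_sans "$san" 192.168.30.17 192.168.30.18 192.168.30.19 192.168.30.254
```

Matching on the trailing comma keeps `192.168.30.1` from being mistaken for `192.168.30.17`.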

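# Distribute the SSL certificates to the new masters

scp -r /opt/k8s/cfssl/pki/k8s/ root@192.168.30.17:/apps/kubernetes/ssl/k8s

scp -r /opt/k8s/cfssl/pki/k8s/ root@192.168.30.18:/apps/kubernetes/ssl/k8s

scp -r /opt/k8s/cfssl/pki/k8s/ root@192.168.30.19:/apps/kubernetes/ssl/k8s

If /apps/kubernetes/ssl/k8s already exists on a target, scp -r copies the directory into it as a nested k8s/ subdirectory, so check where the files landed. The options below also reference files under /apps/kubernetes/ssl/etcd/ (etcd client certificate) and /apps/kubernetes/config/ (kubeconfig files and encryption-config.yaml); these must be present on each new master as well, for example copied from the old master.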

# Edit the configuration files (values shown for 192.168.30.17)

### kube-apiserver

KUBE_APISERVER_OPTS="--logtostderr=false --bind-address=192.168.30.17 --advertise-address=192.168.30.17 --secure-port=5443 --insecure-port=0 --service-cluster-ip-range=10.64.0.0/16 --service-node-port-range=30000-65000 --etcd-cafile=/apps/kubernetes/ssl/etcd/etcd-ca.pem --etcd-certfile=/apps/kubernetes/ssl/etcd/etcd_client.pem --etcd-keyfile=/apps/kubernetes/ssl/etcd/etcd_client-key.pem --etcd-prefix=/registry --etcd-servers=https://192.168.30.17:2379,https://192.168.30.18:2379,https://192.168.30.19:2379 --client-ca-file=/apps/kubernetes/ssl/k8s/k8s-ca.pem --tls-cert-file=/apps/kubernetes/ssl/k8s/k8s_server.pem --tls-private-key-file=/apps/kubernetes/ssl/k8s/k8s_server-key.pem --kubelet-client-certificate=/apps/kubernetes/ssl/k8s/k8s_server.pem --kubelet-client-key=/apps/kubernetes/ssl/k8s/k8s_server-key.pem --service-account-key-file=/apps/kubernetes/ssl/k8s/k8s-ca.pem --requestheader-client-ca-file=/apps/kubernetes/ssl/k8s/k8s-ca.pem --proxy-client-cert-file=/apps/kubernetes/ssl/k8s/aggregator.pem --proxy-client-key-file=/apps/kubernetes/ssl/k8s/aggregator-key.pem --requestheader-allowed-names=aggregator --requestheader-group-headers=X-Remote-Group --requestheader-extra-headers-prefix=X-Remote-Extra- --requestheader-username-headers=X-Remote-User --enable-aggregator-routing=true --anonymous-auth=false --experimental-encryption-provider-config=/apps/kubernetes/config/encryption-config.yaml --enable-admission-plugins=AlwaysPullImages,DefaultStorageClass,DefaultTolerationSeconds,LimitRanger,NamespaceExists,NamespaceLifecycle,NodeRestriction,OwnerReferencesPermissionEnforcement,PodNodeSelector,PersistentVolumeClaimResize,PodPreset,PodTolerationRestriction,ResourceQuota,ServiceAccount,StorageObjectInUseProtection,MutatingAdmissionWebhook,ValidatingAdmissionWebhook --disable-admission-plugins=DenyEscalatingExec,ExtendedResourceToleration,ImagePolicyWebhook,LimitPodHardAntiAffinityTopology,NamespaceAutoProvision,Priority,EventRateLimit,PodSecurityPolicy --cors-allowed-origins=.* --enable-swagger-ui --runtime-config=api/all=true --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname --authorization-mode=Node,RBAC --allow-privileged=true --apiserver-count=1 --audit-log-maxage=30 --audit-log-maxbackup=3 --audit-log-maxsize=100 --kubelet-https --event-ttl=1h --feature-gates=RotateKubeletServerCertificate=true,RotateKubeletClientCertificate=true --enable-bootstrap-token-auth=true --audit-log-path=/apps/kubernetes/log/api-server-audit.log --alsologtostderr=true --log-dir=/apps/kubernetes/log --v=2 --endpoint-reconciler-type=lease --max-mutating-requests-inflight=100 --max-requests-inflight=500 --target-ram-mb=6000"

### kube-controller-manager

KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=false --leader-elect=true --address=0.0.0.0 --service-cluster-ip-range=10.64.0.0/16 --cluster-cidr=10.65.0.0/16 --node-cidr-mask-size=24 --cluster-name=kubernetes --allocate-node-cidrs=true --kubeconfig=/apps/kubernetes/config/kube_controller_manager.kubeconfig --authentication-kubeconfig=/apps/kubernetes/config/kube_controller_manager.kubeconfig --authorization-kubeconfig=/apps/kubernetes/config/kube_controller_manager.kubeconfig --use-service-account-credentials=true --client-ca-file=/apps/kubernetes/ssl/k8s/k8s-ca.pem --requestheader-client-ca-file=/apps/kubernetes/ssl/k8s/k8s-ca.pem --node-monitor-grace-period=40s --node-monitor-period=5s --pod-eviction-timeout=5m0s --terminated-pod-gc-threshold=50 --alsologtostderr=true --cluster-signing-cert-file=/apps/kubernetes/ssl/k8s/k8s-ca.pem --cluster-signing-key-file=/apps/kubernetes/ssl/k8s/k8s-ca-key.pem --deployment-controller-sync-period=10s --experimental-cluster-signing-duration=86700h0m0s --enable-garbage-collector=true --root-ca-file=/apps/kubernetes/ssl/k8s/k8s-ca.pem --service-account-private-key-file=/apps/kubernetes/ssl/k8s/k8s-ca-key.pem --feature-gates=RotateKubeletServerCertificate=true,RotateKubeletClientCertificate=true --controllers=*,bootstrapsigner,tokencleaner --horizontal-pod-autoscaler-use-rest-clients=true --horizontal-pod-autoscaler-sync-period=10s --flex-volume-plugin-dir=/apps/kubernetes/kubelet-plugins/volume --tls-cert-file=/apps/kubernetes/ssl/k8s/k8s_controller_manager.pem --tls-private-key-file=/apps/kubernetes/ssl/k8s/k8s_controller_manager-key.pem --kube-api-qps=100 --kube-api-burst=100 --log-dir=/apps/kubernetes/log --v=2"

### kube-scheduler

KUBE_SCHEDULER_OPTS=" --logtostderr=false --address=0.0.0.0 --leader-elect=true --kubeconfig=/apps/kubernetes/config/kube_scheduler.kubeconfig --authentication-kubeconfig=/apps/kubernetes/config/kube_scheduler.kubeconfig --authorization-kubeconfig=/apps/kubernetes/config/kube_scheduler.kubeconfig --alsologtostderr=true --kube-api-qps=100 --kube-api-burst=100 --log-dir=/apps/kubernetes/log --v=2"

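# Configure the other two masters the same way as .17, changing --bind-address and --advertise-address to each node's own IP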
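Since only the addresses differ between masters, the .17 apiserver options can be rewritten for the other nodes with sed. A sketch, assuming the options are kept in a file (the name `kube-apiserver.conf` in the usage line is hypothetical); it touches only `--bind-address` and `--advertise-address`, because kube-controller-manager and kube-scheduler already use `--address=0.0.0.0`:

```bash
# Rewrite --bind-address and --advertise-address to a new IP, reading the
# .17 options on stdin and writing the result to stdout.
retarget_apiserver_opts() {
  local new_ip=$1
  sed -e "s/--bind-address=[0-9.]*/--bind-address=$new_ip/" \
      -e "s/--advertise-address=[0-9.]*/--advertise-address=$new_ip/"
}

# Usage (hypothetical file name):
# retarget_apiserver_opts 192.168.30.18 < kube-apiserver.conf > kube-apiserver.conf.18
```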

service kube-apiserver start

service kube-controller-manager start

service kube-scheduler start

Verify that the new masters are working:

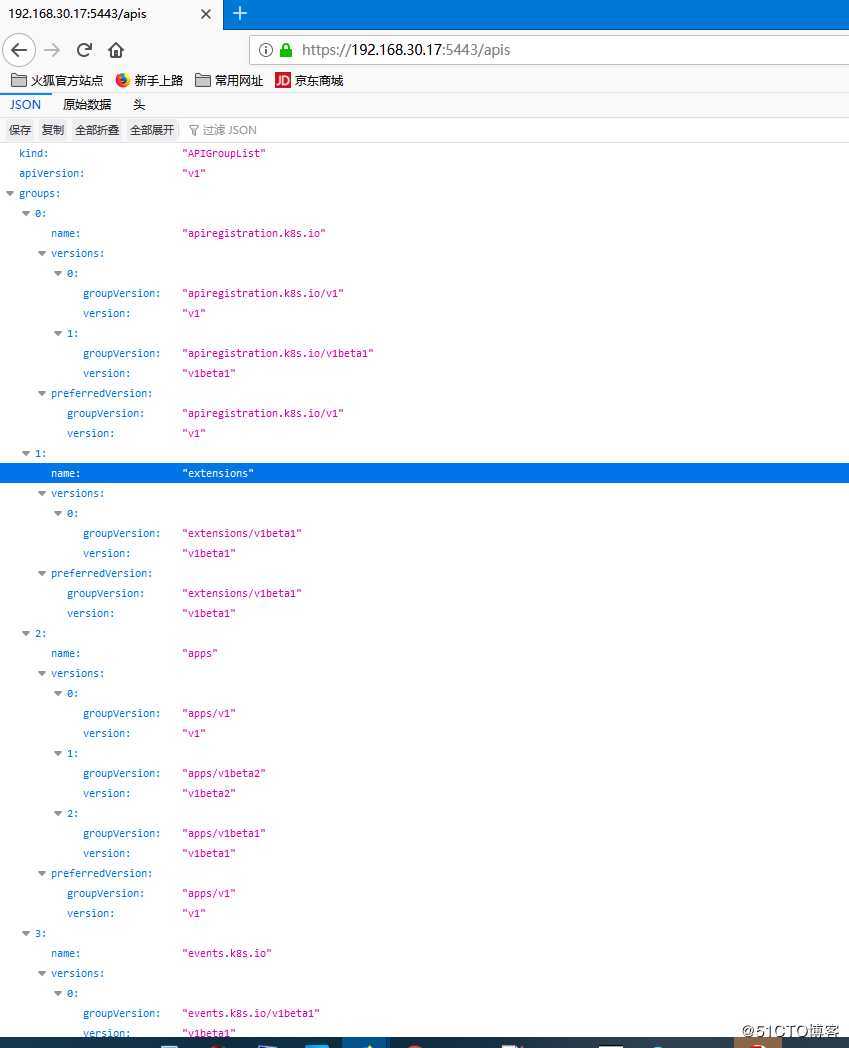

https://192.168.30.17:5443/apis

https://192.168.30.18:5443/apis

https://192.168.30.19:5443/apis

Signed certificate

Install haproxy and keepalived

yum install -y haproxy keepalived

Edit the haproxy configuration, adding the following to /etc/haproxy/haproxy.cfg (the apiservers listen on 5443, which leaves 6443 free for haproxy on the same hosts):

frontend kube-apiserver-https
    mode tcp
    bind :6443
    default_backend kube-apiserver-backend

backend kube-apiserver-backend
    mode tcp
    server 192.168.30.17-api 192.168.30.17:5443 check
    server 192.168.30.18-api 192.168.30.18:5443 check
    server 192.168.30.19-api 192.168.30.19:5443 check

# Start haproxy

service haproxy start

The configuration is identical on all three nodes.
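To keep the three haproxy files identical, the stanza above can be generated from the list of masters. A sketch with a hypothetical helper name; after writing the file, `haproxy -c -f /etc/haproxy/haproxy.cfg` checks its syntax before the service is restarted:

```bash
# Print the frontend/backend stanza for the given master IPs
# (haproxy listens on 6443, apiservers on --secure-port 5443).
haproxy_apiserver_cfg() {
  local ip
  printf 'frontend kube-apiserver-https\n    mode tcp\n    bind :6443\n    default_backend kube-apiserver-backend\n\n'
  printf 'backend kube-apiserver-backend\n    mode tcp\n'
  for ip in "$@"; do
    printf '    server %s-api %s:5443 check\n' "$ip" "$ip"
  done
}

# Usage: haproxy_apiserver_cfg 192.168.30.17 192.168.30.18 192.168.30.19
```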

# Configure keepalived

### 192.168.30.19 configuration

cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived
global_defs {
    router_id LVS_DEVEL
}

vrrp_script check_haproxy {
    script "killall -0 haproxy"
    interval 3
    weight -2
    fall 10
    rise 2
}

vrrp_instance VI_1 {
    state MASTER
    interface br0
    virtual_router_id 51
    priority 250
    advert_int 2
    authentication {
        auth_type PASS
        auth_pass 99ce6e3381dc326633737ddaf5d904d2
    }
    virtual_ipaddress {
        192.168.30.254/24
    }
    track_script {
        check_haproxy
    }
}

### 192.168.30.18 configuration

cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived
global_defs {
    router_id LVS_DEVEL
}

vrrp_script check_haproxy {
    script "killall -0 haproxy"
    interval 3
    weight -2
    fall 10
    rise 2
}

vrrp_instance VI_1 {
    state BACKUP
    interface br0
    virtual_router_id 51
    priority 249
    advert_int 2
    authentication {
        auth_type PASS
        auth_pass 99ce6e3381dc326633737ddaf5d904d2
    }
    virtual_ipaddress {
        192.168.30.254/24
    }
    track_script {
        check_haproxy
    }
}

### 192.168.30.17 configuration

cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived
global_defs {
    router_id LVS_DEVEL
}

vrrp_script check_haproxy {
    script "killall -0 haproxy"
    interval 3
    weight -2
    fall 10
    rise 2
}

vrrp_instance VI_1 {
    state BACKUP
    interface br0
    virtual_router_id 51
    priority 248
    advert_int 2
    authentication {
        auth_type PASS
        auth_pass 99ce6e3381dc326633737ddaf5d904d2
    }
    virtual_ipaddress {
        192.168.30.254/24
    }
    track_script {
        check_haproxy
    }
}

### Start keepalived on all three nodes

service keepalived start
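How the VIP moves can be worked out from the settings above: when `killall -0 haproxy` fails `fall 10` times in a row at `interval 3` (about 30 seconds), keepalived lowers that node's priority by 2 (`weight -2`), and the node advertising the highest priority holds the VIP. A small sketch of that arithmetic; the helper names are hypothetical, and ties (which VRRP breaks in favor of the higher IP address) are not modeled:

```bash
# effective_priority BASE WEIGHT CHECK_OK -> priority the node advertises.
# CHECK_OK is 1 while check_haproxy passes, 0 once it is considered failed.
effective_priority() {
  if [ "$3" = 1 ]; then echo "$1"; else echo $(( $1 + $2 )); fi
}

# vip_holder NAME:PRIORITY ... -> name with the highest priority.
vip_holder() {
  local best="" best_p=-1 pair p
  for pair in "$@"; do
    p=${pair#*:}
    if [ "$p" -gt "$best_p" ]; then best=${pair%%:*}; best_p=$p; fi
  done
  echo "$best"
}

# haproxy dies on node5 (192.168.30.19): 250 - 2 = 248 < 249, so node4 takes the VIP.
# vip_holder node5:"$(effective_priority 250 -2 0)" node4:249 node03:248
```

This is why the priorities are spaced by 1 with a weight of -2: a single haproxy failure on the MASTER is enough to hand the VIP to the next node.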

192.168.30.19 is configured as MASTER, so it holds the VIP:

[root@node5 ~]# ip a | grep br0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master br0 state UP group default qlen 1000

6: br0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

inet 192.168.30.19/24 brd 192.168.30.255 scope global br0

inet 192.168.30.254/24 scope global secondary br0

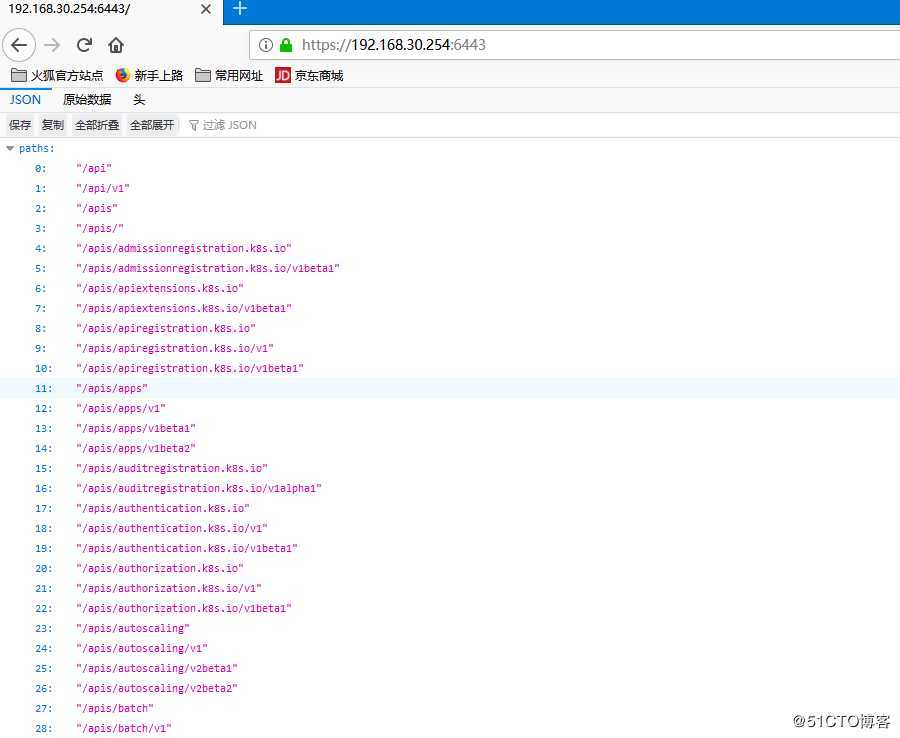

# Test whether 192.168.30.254 is reachable

https://192.168.30.254:6443

It opens normally.

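Update the worker nodes: on every node, change the apiserver address in the two files

bootstrap.kubeconfig

kubelet.kubeconfig

and in the local ~/.kube/config file (editing with vim is fine) to:

server: https://192.168.30.254:6443

After the change, restart kubelet on each node: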

service kubelet restart
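The edit can also be done with sed instead of vim. A sketch that rewrites the `server:` line of each given kubeconfig and keeps a `.bak` copy. The paths in the usage line are assumptions modeled on the `/apps/kubernetes/config` directory used for the master kubeconfigs, and any other kubeconfig that still points at the old apiserver (a kube-proxy kubeconfig, if one exists) would need the same change:

```bash
# Point every given kubeconfig at the apiserver VIP by rewriting its
# "server:" line in place; the original is kept as FILE.bak.
point_to_vip() {
  local vip_url=$1 f
  shift
  for f in "$@"; do
    sed -i.bak -E "s#^([[:space:]]*server:[[:space:]]*).*#\1${vip_url}#" "$f" || return 1
  done
}

# Usage (paths are assumptions):
# point_to_vip https://192.168.30.254:6443 /apps/kubernetes/config/bootstrap.kubeconfig \
#   /apps/kubernetes/config/kubelet.kubeconfig ~/.kube/config
# service kubelet restart
```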

Verify that the nodes are healthy:

kubectl get node

[root@~]# kubectl get node

NAME         STATUS   ROLES         AGE   VERSION
ingress      Ready    k8s-ingress   60d   v1.14.6
ingress-01   Ready    k8s-ingress   29d   v1.14.6
node01       Ready    k8s-node      60d   v1.14.6
node02       Ready    k8s-node      60d   v1.14.6
node03       Ready    k8s-node      12d   v1.14.6
node4        Ready    k8s-node      12d   v1.14.6
node5        Ready    k8s-node      12d   v1.14.6
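With more nodes, eyeballing the STATUS column gets error-prone. A sketch that fails if any node is not exactly `Ready`, assuming the default `kubectl get node` column layout with a header row; the function name is hypothetical:

```bash
# Succeed only if every node row (header skipped) has STATUS exactly "Ready";
# NotReady or Ready,SchedulingDisabled make it fail.
all_nodes_ready() {
  awk 'NR > 1 && NF >= 2 { n++; if ($2 != "Ready") bad++ }
       END { exit !(n > 0 && bad == 0) }'
}

# Usage: kubectl get node | all_nodes_ready && echo "all nodes Ready"
```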

All nodes are Ready.

Remove the old etcd node

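On the old etcd node (192.168.30.31), stop etcd:

service etcd stop

Then, on a new member, look up the old member's ID:

etcdctl member list

etcdctl endpoint status

Verify that the Kubernetes cluster is still working.

### Remove the old member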

etcdctl member remove 7994ca589d94dceb
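Rather than copying the ID by hand, it can be looked up by the old member's peer URL. A sketch that parses etcdctl 3.3's simple `member list` output (ID, status, name, peer URLs, client URLs) and assumes each member has a single peer URL; the function name is hypothetical:

```bash
# Print the member ID whose peer URL (field 4) equals $1.
member_id_by_peer() {
  awk -F', ' -v url="$1" '$4 == url { print $1 }'
}

# Usage:
# id=$(etcdctl member list | member_id_by_peer https://192.168.30.31:2380)
# [ -n "$id" ] && etcdctl member remove "$id"
```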

Verify the cluster again:

[root@node03 ~]# etcdctl member list

127f6360c5080113, started, node4, https://192.168.30.18:2380, https://192.168.30.18:2379

5a0a05654c847f54, started, node5, https://192.168.30.19:2380, https://192.168.30.19:2379

92bf7d7f20e298fc, started, node03, https://192.168.30.17:2380, https://192.168.30.17:2379

[root@node03 ~]# etcdctl endpoint status

https://192.168.30.17:2379, 92bf7d7f20e298fc, 3.3.13, 30 MB, false, 16, 3976114

https://192.168.30.18:2379, 127f6360c5080113, 3.3.13, 30 MB, true, 16, 3976114

https://192.168.30.19:2379, 5a0a05654c847f54, 3.3.13, 30 MB, false, 16, 3976114

[root@node03 ~]# etcdctl endpoint hashkv

https://192.168.30.17:2379, 189505982

https://192.168.30.18:2379, 189505982

https://192.168.30.19:2379, 189505982

[root@node03 ~]# etcdctl endpoint health

https://192.168.30.17:2379 is healthy: successfully committed proposal: took = 2.671314ms

https://192.168.30.18:2379 is healthy: successfully committed proposal: took = 2.2904ms

https://192.168.30.19:2379 is healthy: successfully committed proposal: took = 3.555137ms

[root@~]# kubectl get node

NAME         STATUS   ROLES         AGE   VERSION
ingress      Ready    k8s-ingress   60d   v1.14.6
ingress-01   Ready    k8s-ingress   29d   v1.14.6
node01       Ready    k8s-node      60d   v1.14.6
node02       Ready    k8s-node      60d   v1.14.6
node03       Ready    k8s-node      12d   v1.14.6
node4        Ready    k8s-node      12d   v1.14.6
node5        Ready    k8s-node      12d   v1.14.6

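Everything is working.

Disable etcd at boot on the old node:

chkconfig etcd off

Remove the old kube-apiserver node

On the old master (192.168.30.32), stop the control-plane services: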

service kube-controller-manager stop

service kube-scheduler stop

service kube-apiserver stop

Disable them at boot:

chkconfig kube-controller-manager off

chkconfig kube-scheduler off

chkconfig kube-apiserver off

Verify again:

kubectl get node

[root@~]# kubectl get node

NAME         STATUS   ROLES         AGE   VERSION
ingress      Ready    k8s-ingress   60d   v1.14.6
ingress-01   Ready    k8s-ingress   29d   v1.14.6
node01       Ready    k8s-node      60d   v1.14.6
node02       Ready    k8s-node      60d   v1.14.6
node03       Ready    k8s-node      12d   v1.14.6
node4        Ready    k8s-node      12d   v1.14.6
node5        Ready    k8s-node      12d   v1.14.6

[root@~]# kubectl get cs

NAME                 STATUS    MESSAGE             ERROR
scheduler            Healthy   ok
controller-manager   Healthy   ok
etcd-0               Healthy   {"health":"true"}
etcd-1               Healthy   {"health":"true"}
etcd-2               Healthy   {"health":"true"}

If the workloads running in the Kubernetes cluster are all reachable, the node addition and removal were carried out correctly.