Kubernetes 学习19基于canel的网络策略

Posted presley-lpc

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了Kubernetes 学习19基于canel的网络策略相关的知识,希望对你有一定的参考价值。

一、概述

1、我们说过,k8s的可用插件有很多,除了flannel之外,还有一个流行的叫做calico的组件,不过calico在很多项目中都会有这个名字被应用,所以他们把自己称为project calico,但是很多时候我们在kubernets的语境中通常会单独称呼他为calico。其本身支持bgp的方式来构建pod网络。通过bgp协议的路由学习能使得去每一节点上生成到达另一节点上pod之间的路由表信息。会在变动时自动执行改变和修改,另外其也支持IP-IP,就是基于IP报文来承载一个IP报文,不像我们VXLAN是通过一个所谓的以太网帧来承载另外一个以太网帧的报文的方式来实现的。因此从这个角度来讲他是一个三层隧道,也就意外着说如果我们期望在calico的网络中实现隧道的方式进行应用,来实现更为强大的控制逻辑,他也支持隧道,不过他是IP-IP隧道,和LVS中所谓的IP-IP的方式很相像。不过calico的配置依赖于我们对bgp等协议的理解工作起来才能更好的去了解他。我们这儿就不再讲calico怎么去作为网络插件去提供网络功能的,而是把他重点集中在calico如何去提供网络策略。因为flannel本身提供不了网络策略。而flannel和calico二者本身已经合二为一了,即canel。事实上,就算我们此前不了解canel的时候类似于使用kubectl的提示根据很多文章的提示直接安装并部署了flannel这样的网络插件都想把flannel换掉。但在这基础之上我们又想去使用网络策略。其实也是有解决方案的,我们可以在flannel提供网络功能的基础之上在额外去给他提供calico去提供网络策略。

2、在实现部署之前要先知道,calico默认使用的网段不是10.244.0.0,如果要拿calico作为网络插件使用的话它工作于192.168.0.0网络。而且是16位掩码。每一个节点网络分配是按照192.168.0.0/24,192.168.1.0/24来分配的。但此处我们不把其当做网络插件提供者而是当做网络策略提供者,我们仍然使用10.244.0.0网段。

二、安装calico

1、可以在官方文档中看到calico有多种安装方式(目前为止calico还不支持ipvs模式)

2、安装教程链接https://docs.projectcalico.org/v3.1/getting-started/kubernetes/installation/flannel

3、calico新版本中部署比较复杂,calico对集群节点的所有的地址分配都没有自己介入,而是需要依赖于etcd来介入,这就比较麻烦了,首先,大家知道我们k8s自己有etcd database,而calico也需要etcd database,它是两套集群,各自是各自的etcd,这样子分裂起来进行管理来讲对我们k8s工程师都不是一个轻松的事情,那我们应该怎么做呢?后来的calico也支持不把数据放在自己专用的etcd集群中,而是掉apiserver的功能,直接把所有的设置都发给apiserver,由apiserver再存储在etcd中,因为整个k8s集群任何节点的功能,任何组件都不能直接写k8s的etcd,必须apiserver写,主要是确保数据一致性。这样一来也就意外着说我们calico部署有两种方式。第一就是和k8s的etcd分开;第二,直接使用k8s的etcd,不过是要通过apiserver去调用。这儿我们直接使用第二种方式部署。官网对部署方式描述如下

Installing with the Kubernetes API datastore (recommended) Ensure that the Kubernetes controller manager has the following flags set: --cluster-cidr=10.244.0.0/16 and --allocate-node-cidrs=true. Tip: If you’re using kubeadm, you can pass --pod-network-cidr=10.244.0.0/16 to kubeadm to set the Kubernetes controller flags. If your cluster has RBAC enabled, issue the following command to configure the roles and bindings that Calico requires. kubectl apply -f https://docs.projectcalico.org/v3.1/getting-started/kubernetes/installation/hosted/canal/rbac.yaml Note: You can also view the manifest in your browser. Issue the following command to install Calico. kubectl apply -f https://docs.projectcalico.org/v3.1/getting-started/kubernetes/installation/hosted/canal/canal.yaml Note: You can also view the manifest in your browser.

4、现在我们来部署

a、首先我们部署一个rbac.yaml配置文件

[root@k8smaster flannel]# kubectl apply -f https://docs.projectcalico.org/v3.1/getting-started/kubernetes/installation/hosted/canal/rbac.yaml clusterrole.rbac.authorization.k8s.io/calico created clusterrole.rbac.authorization.k8s.io/flannel configured clusterrolebinding.rbac.authorization.k8s.io/canal-flannel created clusterrolebinding.rbac.authorization.k8s.io/canal-calico created

b、第二步我们部署canal.yaml

[root@k8smaster flannel]# kubectl apply -f > https://docs.projectcalico.org/v3.1/getting-started/kubernetes/installation/hosted/canal/canal.yaml configmap/canal-config created daemonset.extensions/canal created customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

c、接下来我们来看一下对应的组件是否已经启动起来了

三、canel的使用

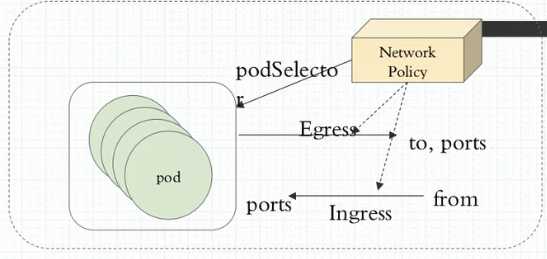

1、部署完canel后我们怎么去控制我们对应的pod间通信呢?现在我们在我们的cluster上创建两个namespaces,一个叫dev,一个叫pro,然后在两个名称空间中创建两个pod,我们看看两个名称空间中跨名称空间通信能不能直接进行,如果能进行的话我们如何让他不能进行。并且我们还可以这样来设计,我们可以为一个名称空间设置一个默认通信策略,任何到达此名称空间的通信流量都不被允许。简单来讲我们所用的网络控制策略是通过这种方式来定义的。如图,我们网络控制策略(Network Policy)他通过Egress或Ingress规则分别来控制不同的通信需求,什么意思呢?Egress即出栈的意思,Ingress即入栈的意思(此处Ingress和我们k8sIngress是两回事)。Egress表示我们pod作为客户端去访问别人的。即自己作为源地址对方作为目标地址来进行通信,Ingress表示自己是目标,远程是源。所以我们要控制这两个方向的不同的通信,而控制Egress的时候客户端端口是随机的而服务端端口是固定的,因此作为出栈的时候去请求别人很显然对方是服务端,对方的端口可预测,对方的地址也可预测,但自己的地址能预测端口却不能预测。同样的逻辑,如果别人访问自己,自己的地址能预测,自己的端口也能预测,但对方的端口是不能预测的。因为对方是客户端。

2、因此去定义规则时如果定义的是Egress规则(出栈的),那么我们可以定义目标地址和目标端口。如果我们定义的是Ingress规则(入栈的),我们能限制对方的地址,能限制自己的端口,那我们这种限制是针对于哪一个Pod来说的呢?这个网络策略规则是控制哪个pod和别人通信或接受别人通信的呢?我们使用podSelector(pod选择器)去选择pod,意思是这个规则生效在哪个pod上,我们一般使用单个pod进行控制,也可以控制一组pod,所以我们使用podSelector就相当于说我这一组pod都受控于这个Egress和Ingress规则,而且更重要的是我们将来定义规则时还可以指定单方向。意思是入栈我们做控制出栈都允许或出栈做控制入栈都允许。因此定义时可以很灵活的去定义,可以发现他和iptables没有太大的区别。那种方式最安全呢?肯定是拒绝所有放行已知。甚至于如果说你是托管了每一个名称空间托管了不同项目的,甚至不同客户的项目。我们名称空间直接设置默认策略。我们在名称空间内所有pod可以无障碍的通信,但是跨名称空间都不被允许。这种都可以叫一个名称空间的默认策略。

3、接下来我们看这些策略怎么工作起来

a、我们可以看到我们加载的canal pod已经启动起来了

[root@k8smaster ~]# kubectl get pods -n kube-system |grep canal canal-hmc47 3/3 Running 0 45m canal-sw5q6 3/3 Running 0 45m canal-xxvzk 3/3 Running 0 45m

b、我们看我们网络策略怎么定义

[root@k8smaster ~]# kubectl explain networkpolicy KIND: NetworkPolicy VERSION: extensions/v1beta1 DESCRIPTION: DEPRECATED 1.9 - This group version of NetworkPolicy is deprecated by networking/v1/NetworkPolicy. NetworkPolicy describes what network traffic is allowed for a set of Pods FIELDS: apiVersion <string> APIVersion defines the versioned schema of this representation of an object. Servers should convert recognized schemas to the latest internal value, and may reject unrecognized values. More info: https://git.k8s.io/community/contributors/devel/api-conventions.md#resources kind <string> Kind is a string value representing the REST resource this object represents. Servers may infer this from the endpoint the client submits requests to. Cannot be updated. In CamelCase. More info: https://git.k8s.io/community/contributors/devel/api-conventions.md#types-kinds metadata <Object> Standard object‘s metadata. More info: https://git.k8s.io/community/contributors/devel/api-conventions.md#metadata spec <Object> Specification of the desired behavior for this NetworkPolicy.

[root@k8smaster ~]# kubectl explain networkpolicy.spec KIND: NetworkPolicy VERSION: extensions/v1beta1 RESOURCE: spec <Object> DESCRIPTION: Specification of the desired behavior for this NetworkPolicy. DEPRECATED 1.9 - This group version of NetworkPolicySpec is deprecated by networking/v1/NetworkPolicySpec. FIELDS: egress <[]Object> #出栈规则 List of egress rules to be applied to the selected pods. Outgoing traffic is allowed if there are no NetworkPolicies selecting the pod (and cluster policy otherwise allows the traffic), OR if the traffic matches at least one egress rule across all of the NetworkPolicy objects whose podSelector matches the pod. If this field is empty then this NetworkPolicy limits all outgoing traffic (and serves solely to ensure that the pods it selects are isolated by default). This field is beta-level in 1.8 ingress <[]Object> #入栈规则 List of ingress rules to be applied to the selected pods. Traffic is allowed to a pod if there are no NetworkPolicies selecting the pod OR if the traffic source is the pod‘s local node, OR if the traffic matches at least one ingress rule across all of the NetworkPolicy objects whose podSelector matches the pod. If this field is empty then this NetworkPolicy does not allow any traffic (and serves solely to ensure that the pods it selects are isolated by default). podSelector <Object> -required- #规则应用在哪个pod上 Selects the pods to which this NetworkPolicy object applies. The array of ingress rules is applied to any pods selected by this field. Multiple network policies can select the same set of pods. In this case, the ingress rules for each are combined additively. This field is NOT optional and follows standard label selector semantics. An empty podSelector matches all pods in this namespace. policyTypes <[]string> #策略类型,指的是假如我在当前这个策略中即定义了Egress又定义了Ingress,那么谁生效呢?虽然他们并不冲突,但是你可以定义在某个时候某一方向的规则生效。 List of rule types that the NetworkPolicy relates to. Valid options are Ingress, Egress, or Ingress,Egress. If this field is not specified, it will default based on the existence of Ingress or Egress rules; policies that contain an Egress section are assumed to affect Egress, and all policies (whether or not they contain an Ingress section) are assumed to affect Ingress. If you want to write an egress-only policy, you must explicitly specify policyTypes [ "Egress" ]. Likewise, if you want to write a policy that specifies that no egress is allowed, you must specify a policyTypes value that include "Egress" (since such a policy would not include an Egress section and would otherwise default to just [ "Ingress" ]). This field is beta-level in 1.8

我们来看egress定义

[root@k8smaster ~]# kubectl explain networkpolicy.spec.egress KIND: NetworkPolicy VERSION: extensions/v1beta1 RESOURCE: egress <[]Object> DESCRIPTION: List of egress rules to be applied to the selected pods. Outgoing traffic is allowed if there are no NetworkPolicies selecting the pod (and cluster policy otherwise allows the traffic), OR if the traffic matches at least one egress rule across all of the NetworkPolicy objects whose podSelector matches the pod. If this field is empty then this NetworkPolicy limits all outgoing traffic (and serves solely to ensure that the pods it selects are isolated by default). This field is beta-level in 1.8 DEPRECATED 1.9 - This group version of NetworkPolicyEgressRule is deprecated by networking/v1/NetworkPolicyEgressRule. NetworkPolicyEgressRule describes a particular set of traffic that is allowed out of pods matched by a NetworkPolicySpec‘s podSelector. The traffic must match both ports and to. This type is beta-level in 1.8 FIELDS: ports <[]Object> List of destination ports for outgoing traffic. Each item in this list is combined using a logical OR. If this field is empty or missing, this rule matches all ports (traffic not restricted by port). If this field is present and contains at least one item, then this rule allows traffic only if the traffic matches at least one port in the list. to <[]Object> List of destinations for outgoing traffic of pods selected for this rule. Items in this list are combined using a logical OR operation. If this field is empty or missing, this rule matches all destinations (traffic not restricted by destination). If this field is present and contains at least one item, this rule allows traffic only if the traffic matches at least one item in the to list.

以上是关于Kubernetes 学习19基于canel的网络策略的主要内容,如果未能解决你的问题,请参考以下文章