Splitting Chinese Characters out of Stele Calligraphy Images

Posted by Phil Chow

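Foreword: this article was compiled by the editors of 小常识网 (cha138.com) and covers splitting Chinese characters out of stele calligraphy images. We hope you find it useful.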

Without further ado, here are the results:

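The main purpose of this program is to crop every Chinese character out of a stele image and strip the excess margin around it, as preparation for later pattern recognition.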
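A minimal sketch of the margin-trimming idea, assuming the character has already been binarized so that ink pixels are non-zero and background pixels are 0. It crops to the rows and columns that contain any ink, which is a simpler projection-based alternative to the contour approach used in `single_cut` in the program below; the helper name `trim_margin` is hypothetical.

```python
import numpy as np


def trim_margin(binary, min_pixels=1):
    """Crop a binarized image (ink > 0) to the tight bounding box of its ink.
    Returns None when the image contains no ink at all."""
    ink = binary > 0
    rows = np.where(ink.sum(axis=1) >= min_pixels)[0]   # rows containing ink
    cols = np.where(ink.sum(axis=0) >= min_pixels)[0]   # columns containing ink
    if rows.size == 0 or cols.size == 0:
        return None
    return binary[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]


# Toy example: a 3x2 block of "ink" inside a 6x6 blank image
img = np.zeros((6, 6), dtype=np.uint8)
img[2:5, 1:3] = 255
print(trim_margin(img).shape)  # (3, 2)
```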

The program uses the OpenCV library, which makes noise removal and binarization a little more convenient.

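It also covers some problems you may run into with OpenCV, such as how to draw a bounding rectangle around a contour and, once OpenCV has extracted the contours, how to decide which ones to keep and which to discard.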

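Each character is segmented with the method illustrated in the figure: count the ink pixels in every column (and later every row) and cut at each valley point of that projection.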

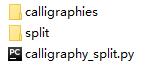
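The valley search can be sketched as follows. This is a simplified variant, not the author's exact rule (the `cut_col` method below accepts a column as a cut when it has fewer than 40 ink pixels and is lower than the following columns); here a cut is placed in the middle of every run of columns whose ink count is at most `max_ink`. It assumes ink pixels are non-zero, whereas the author's `hist_col` counts pixels below 200, so invert first if your ink is black. `column_profile` and `find_valleys` are hypothetical names.

```python
import numpy as np


def column_profile(binary):
    """Number of ink (non-zero) pixels in each column."""
    return (binary > 0).sum(axis=0)


def find_valleys(profile, max_ink=0, min_gap=1):
    """Return the middle index of every run of at least min_gap consecutive
    positions whose ink count is <= max_ink."""
    low = np.asarray(profile) <= max_ink
    cuts, start = [], None
    for i, is_low in enumerate(low):
        if is_low and start is None:
            start = i                      # a gap begins
        elif not is_low and start is not None:
            if i - start >= min_gap:       # the gap covered start .. i-1
                cuts.append((start + i - 1) // 2)
            start = None
    if start is not None and len(low) - start >= min_gap:
        cuts.append((start + len(low) - 1) // 2)   # gap running to the edge
    return cuts


# Toy page: two text columns of ink at x = 3..6 and x = 12..15
page = np.zeros((10, 20), dtype=np.uint8)
page[:, 3:7] = 255
page[:, 12:16] = 255
cuts = find_valleys(column_profile(page))
print(cuts)  # [1, 9, 17]
pieces = [page[:, a:b] for a, b in zip(cuts, cuts[1:])]
```

Applying `find_valleys` to the row profile `(piece > 0).sum(axis=1)` of each piece would split a text column into single characters in the same way.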

The directory layout is as follows:

`calligraphies` holds the original stele images, and `split` holds the cropped results, with one subfolder per original image, named after that image.

`calligraphy_split.py` is the main program.
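A small helper for this layout, assuming every input name carries a file extension; `output_dir_for` and the file name `stele01.jpg` are hypothetical. It relies on `os.makedirs(..., exist_ok=True)`, so rerunning the script does not fail on folders that already exist, and the demo writes only into a temporary directory.

```python
import os
import tempfile


def output_dir_for(image_path, split_root):
    """Create (if needed) and return split_root/<image name without extension>."""
    stem = os.path.splitext(os.path.basename(image_path))[0]
    out_dir = os.path.join(split_root, stem)
    os.makedirs(out_dir, exist_ok=True)   # no error if it already exists
    return out_dir


# Demo inside a throwaway directory
with tempfile.TemporaryDirectory() as root:
    out = output_dir_for(os.path.join("calligraphies", "stele01.jpg"),
                         os.path.join(root, "split"))
    print(os.path.basename(out), os.path.isdir(out))  # stele01 True
```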

The code is below. It is a bit long, but the steps should be reasonably clear, and the comments are in English:

```python
import os

import cv2
import numpy as np
from matplotlib import pyplot as plt


class PrCalligraph(object):

    filename = 0
    dirname = ""

    # scan inwards from each edge along the middle row/column until the
    # first pixel that is no brighter than flag_pi
    def cut_img(self, img, flag_pi):
        row, col = img.shape
        # fall back to the full image on any side where no such pixel is found
        new_up_row, new_left_col, new_down_row, new_right_col = 0, 0, row, col
        for i in range(row - 1):
            if img[i, col // 2] <= flag_pi:
                new_up_row = i
                break
        for i in range(col - 1):
            if img[row // 2, i] <= flag_pi:
                new_left_col = i
                break
        for i in range(row - 1, 0, -1):
            if img[i, col // 2] <= flag_pi:
                new_down_row = i
                break
        for i in range(col - 1, 0, -1):
            if img[row // 2, i] <= flag_pi:
                new_right_col = i
                break
        print(new_up_row, new_left_col, new_down_row, new_right_col)
        return new_up_row, new_left_col, new_down_row, new_right_col

    # binarize the image
    def thresh_binary(self, img):
        blur = cv2.GaussianBlur(img, (9, 9), 0)
        # Otsu's binarization
        ret3, th3 = cv2.threshold(blur, 0, 255, cv2.THRESH_BINARY_INV + cv2.THRESH_OTSU)
        kernel = np.ones((2, 2), np.uint8)
        opening = cv2.morphologyEx(th3, cv2.MORPH_OPEN, kernel)
        return opening

    # count the black pixels in each column
    def hist_col(self, img):
        counts = []
        row, col = img.shape
        for i in range(col):
            counts.append((img[:, i] < 200).sum())
        return counts

    # find each segmentation column
    def cut_col(self, img, hist_list):
        minlist = []
        images = []
        row, col = img.shape
        np_list = np.array(hist_list)
        avg = col // 8   # jump ahead this many columns after each cut
        i = 0
        while i < col:
            if i == col - 1:
                # reached the right edge: close the last text column here
                minlist.append(i)
                break
            if i >= col - 10:
                if np_list[i] < 40 and np_list[i] <= np_list[i + 1:].min():
                    minlist.append(i)
                    break
            elif np_list[i] < 40 and np_list[i] < np_list[i + 1:i + 10].min():
                minlist.append(i)
                i += avg
            i += 1
        print(minlist)
        for j in range(len(minlist) - 1):
            images.append(img[0:row, minlist[j]:minlist[j + 1]])
        return images

    # count the black pixels in each row, then split the piece on them
    def hist_row(self, img):
        counts = []
        row, col = img.shape
        for i in range(row):
            counts.append((img[i, :] < 200).sum())
        return self.cut_row(img, counts)

    # find each segmentation row
    def cut_row(self, img, row_list):
        minlist = []
        single_images_with_rect = []
        row, col = img.shape
        np_list = np.array(row_list)
        avg = row // 16
        i = 0
        while i <= row - 1:
            if i >= row - 10 and np_list[i] == 0:
                minlist.append(i)
                break
            elif np_list[i] == 0 and (np_list[i + 1:i + 10] < 200).sum() >= 5:
                minlist.append(i)
                i += avg
            i += 1
        print(minlist)
        for j in range(len(minlist) - 1):
            single_img = img[minlist[j]:minlist[j + 1], 0:col]
            single_img_with_rect = self.single_cut(single_img)
            if single_img_with_rect is not None:
                single_images_with_rect.append(single_img_with_rect)
        return single_images_with_rect

    # find the single word's contours and take off the redundant margin
    def single_cut(self, img):
        blur = cv2.GaussianBlur(img, (9, 9), 0)
        ret3, th3 = cv2.threshold(blur, 0, 255, cv2.THRESH_BINARY_INV + cv2.THRESH_OTSU)
        # OpenCV 3.x returns (image, contours, hierarchy), 4.x (contours, hierarchy)
        contours = cv2.findContours(th3, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)[-2]
        # x0/y0 start at the largest possible value, x1/y1 at the smallest
        height, width = img.shape
        x0, y0, x1, y1 = width, height, 0, 0
        for cnt in contours:
            x, y, w, h = cv2.boundingRect(cnt)
            # ignore tiny specks of noise
            if w < 6 and h < 6:
                continue
            x0 = min(x0, x)
            y0 = min(y0, y)
            x1 = max(x1, x + w)
            y1 = max(y1, y + h)
        if x1 - x0 >= 40 and y1 - y0 >= 40:
            word = img[y0:y1, x0:x1]
            cv2.imwrite(os.path.join(self.dirname, str(self.filename) + '.png'), word)
            # on a single-channel image only the first colour value (0) is used,
            # so the box is drawn in black
            cv2.rectangle(img, (x0, y0), (x1, y1), (0, 255, 0), 2)
            self.filename += 1
            return img
        else:
            return None


if __name__ == '__main__':

    prcaligraphy = PrCalligraph()
    # list the original images
    origin_images = os.listdir('./calligraphies/')
    # handle each picture
    for im in origin_images:
        # read the image as grayscale; skip files OpenCV cannot decode
        img = cv2.imread(os.path.join('./calligraphies', im), cv2.IMREAD_GRAYSCALE)
        if img is None:
            continue
        # restart the single-word file numbering for each picture
        prcaligraphy.filename = 0
        # take out the original picture's name
        outdir = os.path.splitext(im)[0]
        # create the output dir (makedirs' second positional argument is mode,
        # so exist_ok must be passed by keyword)
        prcaligraphy.dirname = os.path.join('./split', outdir)
        os.makedirs(prcaligraphy.dirname, exist_ok=True)
        # preprocess the original picture: cut out the redundant margin
        row, col = img.shape
        middle_pi = int(img[row // 2, col // 2])
        if middle_pi > 220:
            middle_pi = 220
        else:
            middle_pi += 10
        new_up_row, new_left_col, new_down_row, new_right_col = prcaligraphy.cut_img(img, middle_pi)
        cutedimg = img[new_up_row:new_down_row, new_left_col:new_right_col]
        # binarize the image
        opening = prcaligraphy.thresh_binary(cutedimg)
        # split the image into pieces by columns
        hist_list = prcaligraphy.hist_col(opening)
        images = prcaligraphy.cut_col(opening, hist_list)
        if not images:
            continue
        # squeeze=False keeps the axes 2-D even when there is only one piece
        fig, axes = plt.subplots(1, len(images), sharex=True, sharey=True, squeeze=False)
        for i in range(len(images)):
            axes[0, i].imshow(images[i], cmap='gray')
        # split the pieces into single words by rows
        words = [prcaligraphy.hist_row(piece) for piece in images]
        ncols = max(1, max(len(w) for w in words))
        fig2, axes2 = plt.subplots(len(images), ncols, sharex=True, sharey=True, squeeze=False)
        for i in range(len(images)):
            for j in range(len(words[i])):
                axes2[i, j].imshow(words[i][j], cmap='gray')
        fig.savefig(os.path.join(prcaligraphy.dirname, 'cut_col.png'))
        fig2.savefig(os.path.join(prcaligraphy.dirname, 'single.png'))
        # close both figures so they do not pile up across images
        plt.close(fig)
        plt.close(fig2)
        # plt.show()
```
