Flink: A First Look (01)

Posted by yin-fei


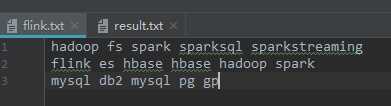

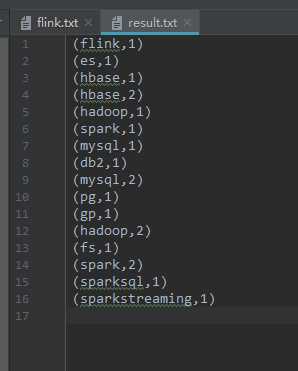

1. Flink WordCount

    package flink

    import org.apache.flink.api.scala._
    import org.apache.flink.core.fs.FileSystem.WriteMode
    import org.apache.flink.streaming.api.scala.{DataStream, StreamExecutionEnvironment}

    object FlinkDemo {
      def main(args: Array[String]): Unit = {
        val env = StreamExecutionEnvironment.getExecutionEnvironment

        // source: read the input file line by line
        val text: DataStream[String] =
          env.readTextFile("file:///E:\\sparkproject\\src\\test\\data\\flink.txt")

        // transformation: split lines into words, pair each word with 1,
        // key by the word (tuple field 0) and sum the counts (tuple field 1)
        val result = text
          .flatMap { x => x.split("\\s") }
          .map { x => (x, 1) }
          .keyBy(0)
          .sum(1)

        // sink: print to stdout and overwrite the result file, both with parallelism 1
        result.setParallelism(1).print()
        result
          .writeAsText("file:///E:\\sparkproject\\src\\test\\data\\result.txt", WriteMode.OVERWRITE)
          .setParallelism(1)

        // nothing runs until execute() is called
        env.execute("flink wordcount demo")
      }
    }
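To see what the flatMap, map, keyBy, sum chain produces, here is a minimal sketch using plain Scala collections, with no Flink dependency. It assumes the keyed `sum` emits an updated count for every incoming word (a rolling aggregate) rather than one final total per word. `WordCountSketch` and `runningCounts` are hypothetical names made up for this illustration; they are not part of the Flink API.

```scala
object WordCountSketch {
  /** Emulates flatMap -> map -> keyBy -> sum on an in-memory sequence of lines.
    * Returns one (word, runningCount) update per word, in arrival order.
    * Hypothetical helper for illustration only, not Flink code. */
  def runningCounts(lines: Seq[String]): Seq[(String, Int)] = {
    // Same split as the job: one token per whitespace-separated piece.
    val words = lines.flatMap(_.split("\\s"))

    // Thread a per-word counter through the words and record every update.
    val (_, updates) =
      words.foldLeft((Map.empty[String, Int], Vector.empty[(String, Int)])) {
        case ((counts, out), word) =>
          val next = counts.getOrElse(word, 0) + 1
          (counts.updated(word, next), out :+ (word -> next))
      }
    updates
  }

  def main(args: Array[String]): Unit =
    runningCounts(Seq("hello flink", "hello world")).foreach(println)
}
```

For the two sample lines this prints (hello,1), (flink,1), (hello,2), (world,1). In the real job the order across different words can vary when the parallelism is above 1, so treat the sketch as a picture of the per-key behaviour only.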

POM file

    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
      <modelVersion>4.0.0</modelVersion>

      <groupId>com.cnsuning.sparkproject</groupId>
      <artifactId>sparkcode</artifactId>
      <version>1.0-SNAPSHOT</version>

      <properties>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <project.reporting.outputEncoding>UTF-8</project.reporting.outputEncoding>
        <scala.version>2.11.8</scala.version>
        <spark.version>2.1.0.9</spark.version>
        <spark.artifactId.version>2.11</spark.artifactId.version>
      </properties>

      <dependencies>
        <!-- https://mvnrepository.com/artifact/org.apache.hive/hive-common -->
        <dependency>
          <groupId>org.apache.hive</groupId>
          <artifactId>hive-common</artifactId>
          <version>1.2.2</version>
        </dependency>
        <dependency>
          <groupId>commons-logging</groupId>
          <artifactId>commons-logging</artifactId>
          <version>1.1.1</version>
          <type>jar</type>
        </dependency>
        <dependency>
          <groupId>org.apache.commons</groupId>
          <artifactId>commons-lang3</artifactId>
          <version>3.1</version>
        </dependency>
        <dependency>
          <groupId>org.slf4j</groupId>
          <artifactId>slf4j-log4j12</artifactId>
          <version>1.7.7</version>
          <scope>runtime</scope>
        </dependency>
        <dependency>
          <groupId>log4j</groupId>
          <artifactId>log4j</artifactId>
          <version>1.2.17</version>
        </dependency>
        <dependency>
          <groupId>org.apache.hadoop</groupId>
          <artifactId>hadoop-common</artifactId>
          <version>2.6.2</version>
        </dependency>
        <!--
        <dependency>
          <groupId>mysql</groupId>
          <artifactId>mysql-connector-java</artifactId>
          <version>5.1.21</version>
        </dependency>
        -->
        <dependency>
          <groupId>org.apache.spark</groupId>
          <artifactId>spark-core_2.11</artifactId>
          <version>2.1.0</version>
        </dependency>
        <dependency>
          <groupId>org.apache.spark</groupId>
          <artifactId>spark-streaming_2.11</artifactId>
          <version>2.1.0</version>
        </dependency>
        <dependency>
          <groupId>org.apache.spark</groupId>
          <artifactId>spark-streaming-kafka-0-8_2.11</artifactId>
          <version>2.1.0</version>
        </dependency>
        <!--
        <dependency>
          <groupId>com.google.code.gson</groupId>
          <artifactId>gson</artifactId>
          <version>2.8.2</version>
        </dependency>
        -->
        <dependency>
          <groupId>org.apache.spark</groupId>
          <artifactId>spark-sql_2.11</artifactId>
          <version>2.1.0</version>
        </dependency>
        <dependency>
          <groupId>com.alibaba</groupId>
          <artifactId>fastjson</artifactId>
          <version>1.2.29</version>
        </dependency>
        <dependency>
          <groupId>org.apache.spark</groupId>
          <artifactId>spark-hive_2.11</artifactId>
          <version>2.1.0</version>
          <!--<scope>provided</scope>-->
        </dependency>

        <!-- flink dependency -->
        <dependency>
          <groupId>org.apache.flink</groupId>
          <artifactId>flink-java</artifactId>
          <version>1.6.1</version>
        </dependency>
        <dependency>
          <groupId>org.apache.flink</groupId>
          <artifactId>flink-streaming-java_2.11</artifactId>
          <version>1.6.1</version>
        </dependency>
        <!-- https://mvnrepository.com/artifact/org.apache.flink/flink-core -->
        <dependency>
          <groupId>org.apache.flink</groupId>
          <artifactId>flink-core</artifactId>
          <version>1.6.1</version>
        </dependency>
        <!-- https://mvnrepository.com/artifact/org.apache.flink/flink-cep -->
        <dependency>
          <groupId>org.apache.flink</groupId>
          <artifactId>flink-cep_2.11</artifactId>
          <version>1.6.1</version>
        </dependency>
        <dependency>
          <groupId>org.apache.flink</groupId>
          <artifactId>flink-clients_2.11</artifactId>
          <version>1.6.1</version>
        </dependency>
        <dependency>
          <groupId>org.apache.flink</groupId>
          <artifactId>flink-connector-wikiedits_2.11</artifactId>
          <version>1.6.1</version>
        </dependency>
        <!-- https://mvnrepository.com/artifact/org.apache.flink/flink-streaming-scala -->
        <dependency>
          <groupId>org.apache.flink</groupId>
          <artifactId>flink-streaming-scala_2.11</artifactId>
          <version>1.6.1</version>
        </dependency>
        <!-- https://mvnrepository.com/artifact/org.apache.flink/flink-connector-kafka-0.9 -->
        <!-- https://mvnrepository.com/artifact/org.apache.flink/flink-connector-kafka-0.11 -->
        <dependency>
          <groupId>org.apache.flink</groupId>
          <artifactId>flink-connector-kafka-0.11_2.11</artifactId>
          <version>1.6.1</version>
        </dependency>
        <dependency>
          <groupId>org.apache.flink</groupId>
          <artifactId>flink-scala_2.11</artifactId>
          <version>1.6.1</version>
          <!--<scope>provided</scope>-->
        </dependency>
        <dependency>
          <groupId>org.apache.flink</groupId>
          <artifactId>flink-table_2.11</artifactId>
          <version>1.6.1</version>
          <scope>provided</scope>
        </dependency>

        <!-- hbase dependency -->
        <!--
        <dependency>
          <groupId>org.apache.hbase</groupId>
          <artifactId>hbase</artifactId>
          <version>0.98.8-hadoop2</version>
          <type>pom</type>
        </dependency>
        <dependency>
          <groupId>org.apache.hbase</groupId>
          <artifactId>hbase-client</artifactId>
          <version>0.98.8-hadoop2</version>
        </dependency>
        <dependency>
          <groupId>org.apache.hbase</groupId>
          <artifactId>hbase-common</artifactId>
          <version>0.98.8-hadoop2</version>
        </dependency>
        <dependency>
          <groupId>org.apache.hbase</groupId>
          <artifactId>hbase-server</artifactId>
          <version>0.98.8-hadoop2</version>
        </dependency>
        -->
        <dependency>
          <groupId>org.elasticsearch</groupId>
          <artifactId>elasticsearch-spark-20_${spark.artifactId.version}</artifactId>
          <version>6.7.1</version>
        </dependency>
        <!-- https://mvnrepository.com/artifact/org.elasticsearch/elasticsearch -->
      </dependencies>

      <build>
        <plugins>
          <plugin>
            <artifactId>maven-assembly-plugin</artifactId>
            <configuration>
              <descriptorRefs>
                <descriptorRef>jar-with-dependencies</descriptorRef>
              </descriptorRefs>
            </configuration>
          </plugin>
          <plugin>
            <groupId>org.codehaus.mojo</groupId>
            <artifactId>build-helper-maven-plugin</artifactId>
            <version>1.8</version>
            <executions>
              <execution>
                <id>add-source</id>
                <phase>generate-sources</phase>
                <goals>
                  <goal>add-source</goal>
                </goals>
                <configuration>
                  <sources>
                    <source>src/main/scala</source>
                    <source>src/test/scala</source>
                  </sources>
                </configuration>
              </execution>
              <execution>
                <id>add-test-source</id>
                <phase>generate-sources</phase>
                <goals>
                  <goal>add-test-source</goal>
                </goals>
                <configuration>
                  <sources>
                    <source>src/test/scala</source>
                  </sources>
                </configuration>
              </execution>
            </executions>
          </plugin>
          <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-compiler-plugin</artifactId>
            <version>2.3.2</version>
            <configuration>
              <source>1.7</source>
              <target>1.7</target>
              <encoding>${project.build.sourceEncoding}</encoding>
            </configuration>
          </plugin>
          <plugin>
            <groupId>org.scala-tools</groupId>
            <artifactId>maven-scala-plugin</artifactId>
            <executions>
              <execution>
                <goals>
                  <goal>compile</goal>
                  <goal>add-source</goal>
                  <goal>testCompile</goal>
                </goals>
              </execution>
            </executions>
            <configuration>
              <scalaVersion>2.11.8</scalaVersion>
              <sourceDir>src/main/scala</sourceDir>
              <jvmArgs>
                <jvmArg>-Xms64m</jvmArg>
                <jvmArg>-Xmx1024m</jvmArg>
              </jvmArgs>
            </configuration>
          </plugin>
          <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-release-plugin</artifactId>
            <version>2.5.3</version>
          </plugin>
          <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-deploy-plugin</artifactId>
            <configuration>
              <skip>false</skip>
            </configuration>
          </plugin>
          <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-shade-plugin</artifactId>
            <version>2.4.1</version>
            <executions>
              <execution>
                <phase>package</phase>
                <goals>
                  <goal>shade</goal>
                </goals>
                <configuration>
                  <filters>
                    <filter>
                      <artifact>*:*</artifact>
                      <excludes>
                        <exclude>META-INF/*.SF</exclude>
                        <exclude>META-INF/*.DSA</exclude>
                        <exclude>META-INF/*.RSA</exclude>
                      </excludes>
                    </filter>
                  </filters>
                  <minimizeJar>false</minimizeJar>
                </configuration>
              </execution>
            </executions>
          </plugin>
        </plugins>

        <resources>
          <resource>
            <directory>src/main/resources</directory>
            <filtering>true</filtering>
          </resource>
          <resource>
            <directory>src/main/resources/${profiles.active}</directory>
          </resource>
        </resources>

        <!-- Fix for "Plugin execution not covered by lifecycle configuration" -->
        <pluginManagement>
          <plugins>
            <plugin>
              <groupId>org.eclipse.m2e</groupId>
              <artifactId>lifecycle-mapping</artifactId>
              <version>1.0.0</version>
              <configuration>
                <lifecycleMappingMetadata>
                  <pluginExecutions>
                    <pluginExecution>
                      <pluginExecutionFilter>
                        <groupId>org.codehaus.mojo</groupId>
                        <artifactId>build-helper-maven-plugin</artifactId>
                        <versionRange>[1.8,)</versionRange>
                        <goals>
                          <goal>add-source</goal>
                          <goal>add-test-source</goal>
                        </goals>
                      </pluginExecutionFilter>
                      <action>
                        <ignore></ignore>
                      </action>
                    </pluginExecution>
                    <pluginExecution>
                      <pluginExecutionFilter>
                        <groupId>org.scala-tools</groupId>
                        <artifactId>maven-scala-plugin</artifactId>
                        <versionRange>[1.8,)</versionRange>
                        <goals>
                          <goal>compile</goal>
                          <goal>add-source</goal>
                          <goal>testCompile</goal>
                        </goals>
                      </pluginExecutionFilter>
                      <action>
                        <ignore></ignore>
                      </action>
                    </pluginExecution>
                  </pluginExecutions>
                </lifecycleMappingMetadata>
              </configuration>
            </plugin>
          </plugins>
        </pluginManagement>
      </build>
    </project>
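Most of the POM above belongs to the surrounding Spark project. For the WordCount job alone, a much smaller dependency set is probably enough. The fragment below is a sketch under that assumption: the artifact names and the 1.6.1 version are taken from the POM above, while the `flink.version` property is a hypothetical name introduced here only to avoid repeating the version.

```xml
<properties>
  <!-- hypothetical property name, not in the original POM -->
  <flink.version>1.6.1</flink.version>
</properties>

<dependencies>
  <!-- Scala API basics (implicit TypeInformation from org.apache.flink.api.scala._) -->
  <dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-scala_2.11</artifactId>
    <version>${flink.version}</version>
  </dependency>
  <!-- DataStream API for Scala (StreamExecutionEnvironment, DataStream) -->
  <dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-streaming-scala_2.11</artifactId>
    <version>${flink.version}</version>
  </dependency>
  <!-- needed to run the job locally, for example from the IDE -->
  <dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-clients_2.11</artifactId>
    <version>${flink.version}</version>
  </dependency>
</dependencies>
```

The `_2.11` suffix has to match the Scala version the project compiles with (2.11.8 in the POM above).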
