Deploying kube-router with hostPort support


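1. In some cases it is useful to reach a workload directly through a hostPort, for example an Ingress service.

2. Prepare the kube-router YAML configuration

When kube-router is deployed directly as a binary it does not pick up the conflist file, so it is deployed as a DaemonSet here.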

2.1 Create the kube-router ConfigMap

vi kube-router-configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: kube-router-cfg
  namespace: kube-system
  labels:
    tier: node
    k8s-app: kube-router
data:
  cni-conf.json: |
    {
      "cniVersion": "0.3.0",
      "name": "mynet",
      "plugins": [
        {
          "name": "kubernetes",
          "type": "bridge",
          "bridge": "kube-bridge",
          "isDefaultGateway": true,
          "ipam": {
            "type": "host-local"
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "snat": true,
            "portMappings": true
          }
        }
      ]
    }
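hostPort handling depends on the portmap plugin being chained after the bridge plugin, so it can help to check the file on a node once the init container has copied it into place. The following is a minimal sketch, not part of the original procedure; the function name has_portmap is made up for illustration, and it does a plain text match rather than parsing the JSON.

```shell
#!/bin/sh
# has_portmap FILE  (hypothetical helper, not part of kube-router)
# Returns 0 when FILE mentions both the portmap plugin type and the
# portMappings capability, 1 otherwise. This is a text match only,
# so it catches a missing or misspelled entry, not malformed JSON.
has_portmap() {
    file="$1"
    [ -r "$file" ] || return 1
    grep -Eq '"type"[[:space:]]*:[[:space:]]*"portmap"' "$file" &&
        grep -Eq '"portMappings"[[:space:]]*:[[:space:]]*true' "$file"
}
```

On a node it could be called as: has_portmap /etc/cni/net.d/10-kuberouter.conflist && echo "portmap configured"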

2.2 Create the kube-router DaemonSet

vi kube-router-ds.yaml

# extensions/v1beta1 DaemonSet was removed in Kubernetes 1.16;
# on newer clusters use apps/v1 and add spec.selector.
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  labels:
    k8s-app: kube-router
    tier: node
  name: kube-router
  namespace: kube-system
spec:
  template:
    metadata:
      labels:
        k8s-app: kube-router
        tier: node
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      serviceAccountName: kube-router
      serviceAccount: kube-router
      containers:
      - name: kube-router
        image: docker.io/cloudnativelabs/kube-router
        imagePullPolicy: Always
        args:
        - --run-router=true
        - --run-firewall=true
        - --run-service-proxy=true
        - --advertise-cluster-ip=true
        - --advertise-loadbalancer-ip=true
        - --advertise-pod-cidr=true
        - --advertise-external-ip=true
        - --cluster-asn=64512
        - --metrics-path=/metrics
        - --metrics-port=20244
        - --enable-cni=true
        - --enable-ibgp=true
        - --enable-overlay=true
        - --nodeport-bindon-all-ip=true
        - --nodes-full-mesh=true
        - --enable-pod-egress=true
        - --cluster-cidr=10.65.0.0/16 # pod IP range; adjust to your cluster
        - --v=2
        - --kubeconfig=/var/lib/kube-router/kubeconfig
        env:
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        - name: KUBE_ROUTER_CNI_CONF_FILE
          value: /etc/cni/net.d/10-kuberouter.conflist
        livenessProbe:
          httpGet:
            path: /healthz
            port: 20244
          initialDelaySeconds: 10
          periodSeconds: 3
        resources:
          requests:
            cpu: 250m
            memory: 250Mi
        securityContext:
          privileged: true
        volumeMounts:
        - name: lib-modules
          mountPath: /lib/modules
          readOnly: true
        - name: cni-conf-dir
          mountPath: /etc/cni/net.d
        - name: kubeconfig
          mountPath: /var/lib/kube-router
          readOnly: true
      initContainers:
      - name: install-cni
        image: busybox
        imagePullPolicy: Always
        command:
        - /bin/sh
        - -c
        - set -e -x;
          if [ ! -f /etc/cni/net.d/10-kuberouter.conflist ]; then
            if [ -f /etc/cni/net.d/*.conf ]; then
              rm -f /etc/cni/net.d/*.conf;
            fi;
            TMP=/etc/cni/net.d/.tmp-kuberouter-cfg;
            cp /etc/kube-router/cni-conf.json $TMP;
            mv $TMP /etc/cni/net.d/10-kuberouter.conflist;
          fi
        volumeMounts:
        - name: cni-conf-dir
          mountPath: /etc/cni/net.d
        - name: kube-router-cfg
          mountPath: /etc/kube-router
      hostNetwork: true
      tolerations:
      - key: CriticalAddonsOnly
        operator: Exists
      - effect: NoSchedule
        key: node-role.kubernetes.io/master
        operator: Exists
      - effect: NoSchedule
        key: node.kubernetes.io/not-ready
        operator: Exists
      # Tolerate nodes that serve external traffic, tainted with:
      # kubectl taint nodes k8s-ingress-01 node-role.kubernetes.io/ingress=:NoSchedule
      - effect: NoSchedule
        key: node-role.kubernetes.io/ingress
        operator: Equal
      # Tolerate VIP nodes, tainted with:
      # kubectl taint nodes k8s-vip-01 node-role.kubernetes.io/vip=:NoSchedule
      - effect: NoSchedule
        key: node-role.kubernetes.io/vip
        operator: Equal
      volumes:
      - name: lib-modules
        hostPath:
          path: /lib/modules
      - name: cni-conf-dir
        hostPath:
          path: /etc/cni/net.d
      - name: kube-router-cfg
        configMap:
          name: kube-router-cfg
      - name: kubeconfig
        configMap:
          name: kube-proxy
          items:
          - key: kubeconfig.conf
            path: kubeconfig

2.3 Create the kube-router ServiceAccount

vi kube-router-account.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: kube-router
  namespace: kube-system

2.4 Create the kube-router ClusterRole

vi kube-router-clusterrole.yaml

kind: ClusterRole
# rbac.authorization.k8s.io/v1beta1 was removed in Kubernetes 1.22;
# on newer clusters use rbac.authorization.k8s.io/v1.
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: kube-router
  namespace: kube-system
rules:
  - apiGroups:
    - ""
    resources:
      - namespaces
      - pods
      - services
      - nodes
      - endpoints
    verbs:
      - list
      - get
      - watch
  - apiGroups:
    - "networking.k8s.io"
    resources:
      - networkpolicies
    verbs:
      - list
      - get
      - watch
  - apiGroups:
    - extensions
    resources:
      - networkpolicies
    verbs:
      - get
      - list
      - watch

2.5 Create the kube-router ClusterRoleBinding

vi kube-router-clusterrolebinding.yaml

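kind: ClusterRoleBinding
# rbac.authorization.k8s.io/v1beta1 was removed in Kubernetes 1.22;
# on newer clusters use rbac.authorization.k8s.io/v1.
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: kube-router
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kube-router
subjects:
- kind: ServiceAccount
  name: kube-router
  namespace: kube-system

2.6 Create the certificate kube-router uses to access the Kubernetes API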

cat << EOF | tee /apps/work/k8s/cfssl/k8s/kube-router.json
{
  "CN": "kube-router",
  "hosts": [""],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "GuangDong",
      "L": "GuangZhou",
      "O": "system:masters",
      "OU": "Kubernetes-manual"
    }
  ]
}
EOF

## Generate the kube-router certificate and private key

cfssl gencert \
  -ca=/apps/work/k8s/cfssl/pki/k8s/k8s-ca.pem \
  -ca-key=/apps/work/k8s/cfssl/pki/k8s/k8s-ca-key.pem \
  -config=/apps/work/k8s/cfssl/ca-config.json \
  -profile=kubernetes \
  /apps/work/k8s/cfssl/k8s/kube-router.json | cfssljson -bare /apps/work/k8s/cfssl/pki/k8s/kube-router

2.7 Generate kubeconfig.conf

KUBE_APISERVER="https://api.k8s.niuke.local:6443"

kubectl config set-cluster kubernetes \
  --certificate-authority=/apps/work/k8s/cfssl/pki/k8s/k8s-ca.pem \
  --embed-certs=true \
  --server=$KUBE_APISERVER \
  --kubeconfig=kubeconfig.conf

kubectl config set-credentials kube-router \
  --client-certificate=/apps/work/k8s/cfssl/pki/k8s/kube-router.pem \
  --client-key=/apps/work/k8s/cfssl/pki/k8s/kube-router-key.pem \
  --embed-certs=true \
  --kubeconfig=kubeconfig.conf

kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-router \
  --kubeconfig=kubeconfig.conf

kubectl config use-context default --kubeconfig=kubeconfig.conf

2.8 Create the ConfigMap that holds the kube-router kubeconfig

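The DaemonSet mounts a ConfigMap named kube-proxy, and a pod can only mount ConfigMaps from its own namespace, so create it in kube-system:

kubectl create configmap "kube-proxy" --from-file=kubeconfig.conf -n kube-system

3. Deploy kube-router

kubectl apply -f .

4. Verify kube-router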

kubectl get all -A | grep kube-router

kube-system   pod/kube-router-hk85f          1/1   Running   0   5h56m
kube-system   pod/kube-router-hpnbq          1/1   Running   0   5h56m
kube-system   pod/kube-router-hrspb          1/1   Running   0   5h56m
kube-system   service/kube-router            ClusterIP   None   <none>   20244/TCP   4h11m
kube-system   daemonset.apps/kube-router     3   3   3   3   3   <none>   5h56m
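The check above is done by eye. As a rough sketch of how it could be scripted, the helper below counts kube-router pods that are not in the Running state. The function name not_running_count is an assumption for illustration, and it expects the column layout NAME READY STATUS RESTARTS AGE produced by kubectl get pods for a single namespace.

```shell
#!/bin/sh
# not_running_count  (hypothetical helper)
# Reads pod lines (NAME READY STATUS RESTARTS AGE) on stdin and prints
# how many pods whose name starts with "kube-router-" have a STATUS
# other than Running. Lines for other pods are ignored.
not_running_count() {
    awk '$1 ~ /^kube-router-/ && $3 != "Running" { n++ } END { print n + 0 }'
}
```

For example: kubectl get pods -n kube-system --no-headers | not_running_count
A result of 0 means every kube-router pod listed is Running.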

Log in to any host and inspect the interfaces and routes:

ip a

4: kube-bridge: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether 52:c7:ea:9b:5a:d0 brd ff:ff:ff:ff:ff:ff
    inet 10.65.0.1/24 brd 10.65.0.255 scope global kube-bridge
       valid_lft forever preferred_lft forever
5: [email protected]: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master kube-bridge state UP group default
    link/ether ca:14:4e:fe:dc:ce brd ff:ff:ff:ff:ff:ff link-netnsid 0
6: dummy0: <BROADCAST,NOARP> mtu 1500 qdisc noop state DOWN group default qlen 1000
    link/ether 5e:2f:24:66:2a:8a brd ff:ff:ff:ff:ff:ff
7: kube-dummy-if: <BROADCAST,NOARP,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default
    link/ether 1a:3a:61:1a:cb:39 brd ff:ff:ff:ff:ff:ff
    inet 10.64.160.74/32 brd 10.64.160.74 scope link kube-dummy-if
       valid_lft forever preferred_lft forever
    inet 10.64.0.2/32 brd 10.64.0.2 scope link kube-dummy-if

route -n

Kernel IP routing table
Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
0.0.0.0         192.168.30.1    0.0.0.0         UG    0      0        0 eth0
10.65.0.0       0.0.0.0         255.255.255.0   U     0      0        0 kube-bridge
10.65.1.0       192.168.30.34   255.255.255.0   UG    0      0        0 eth0
10.65.2.0       192.168.30.33   255.255.255.0   UG    0      0        0 eth0
169.254.0.0     0.0.0.0         255.255.0.0     U     1002   0        0 eth0
172.17.0.0      0.0.0.0         255.255.0.0     U     0      0        0 docker0
192.168.30.0    0.0.0.0         255.255.255.0   U     0      0        0 eth0

5. Deploy a test application to verify that hostPort works

vi nginx.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      name: nginx
  template:
    metadata:
      labels:
        name: nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          imagePullPolicy: IfNotPresent
          ports:
            - name: http
              containerPort: 80
              hostPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-service-nodeport
spec:
  ports:
    - port: 80
      targetPort: 80
      protocol: TCP
  type: NodePort
  selector:
    name: nginx

kubectl apply -f nginx.yaml

kubectl get pod -o wide

NAME                     READY   STATUS    RESTARTS   AGE    IP           NODE     NOMINATED NODE   READINESS GATES
nginx-867cddf7f9-pkl6b   1/1     Running   0          6m8s   10.65.2.20   node01   <none>           <none>

http://192.168.30.33/

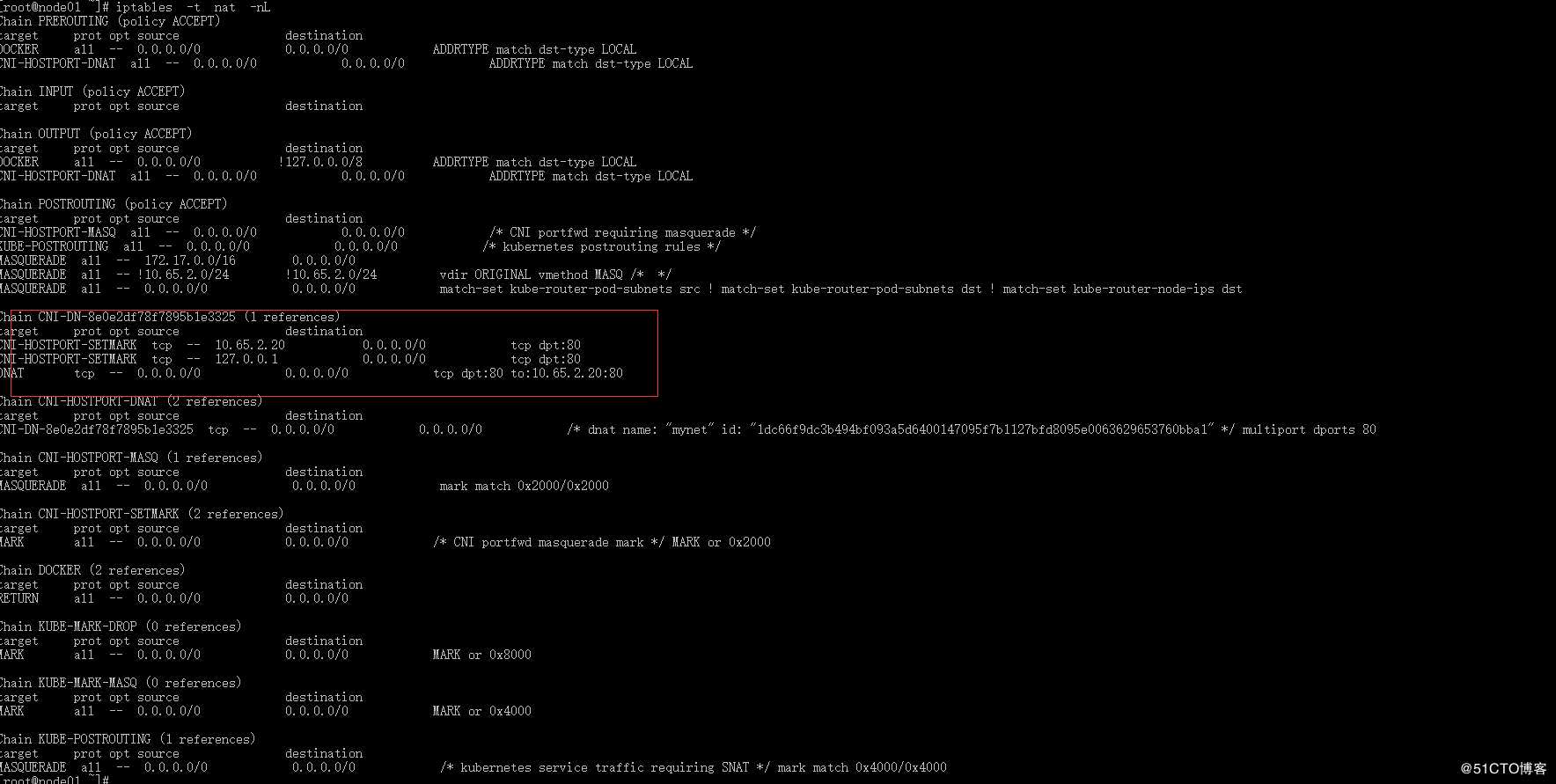

The page opens normally. Log in to the host and check the mapped port:

iptables -t nat -nL

The host port is mapped to the corresponding container IP and port.
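The full NAT table can be long, so it may be easier to filter it down to the DNAT rules for the port under test. The sketch below is an illustration only: the function name hostport_dnat_rules is made up, and it assumes the usual iptables -nL line layout in which a DNAT rule carries dpt:PORT and to:IP:PORT fields.

```shell
#!/bin/sh
# hostport_dnat_rules PORT  (hypothetical helper)
# Reads `iptables -t nat -nL` output on stdin and prints only the DNAT
# lines whose destination port is exactly PORT (so 80 does not match 8080).
hostport_dnat_rules() {
    port="$1"
    grep 'DNAT' | grep -E "dpt:${port}([^0-9]|\$)"
}
```

For example: iptables -t nat -nL | hostport_dnat_rules 80
With the nginx pod above, a matching line would be expected to point at the pod address 10.65.2.20.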
