Scraping Beike (ke.com) rental listings with Python

Posted technology178


Preface: This article was compiled by the editors of 小常识网 (cha138.com). It covers scraping Beike rental listings with Python, and we hope you find it useful.

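I've been planning to move, so I spent some time browsing listings on rental sites, and the sheer volume of information was overwhelming. I wondered whether I could gather the basic details in one place to make them easier to look through, so I used Python to scrape them into an Excel file, which makes searching much easier.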

1. Extracting information with XPath in lxml

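XPath is a language for locating information in XML documents: it lets you traverse the elements and attributes of a document. Regular expressions (Python's re module) can often do the same job, but for this kind of task XPath has clear advantages: (1) it queries the structure of an XML document rather than raw text; (2) it also works on HTML, since lxml parses even imperfect HTML into an element tree; (3) it navigates by elements and attributes, so the expressions are usually easier to read and less fragile than equivalent regexes.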
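
To illustrate, here is a minimal sketch that runs XPath queries on a small hand-written HTML fragment. The markup and class names only loosely imitate a listing page and are assumptions, not Beike's actual HTML; the point is how etree.HTML builds a tree and how xpath() returns a list of elements or strings, with "./" paths searching only inside the current node.

```python
from lxml import etree

# Hand-written HTML fragment; the class names are illustrative only.
HTML = """
<div class="content__list">
  <div class="item">
    <p class="title"><a>  Sunshine Garden  </a></p>
    <span class="price"><em>5800</em></span>
  </div>
  <div class="item">
    <p class="title"><a>Riverside Court</a></p>
    <span class="price"><em>4200</em></span>
  </div>
</div>
"""


def extract_listings(html):
    """Return a list of (title, price) tuples extracted with XPath."""
    tree = etree.HTML(html)  # parse (possibly malformed) HTML into an element tree
    listings = []
    # Select every listing node by its class attribute.
    for item in tree.xpath('//div[@class="item"]'):
        # Relative paths ("./...") search only inside the current listing node;
        # text() makes xpath() return a list of strings.
        title = item.xpath('./p[@class="title"]/a/text()')[0].strip()
        price = item.xpath('./span[@class="price"]/em/text()')[0]
        listings.append((title, price))
    return listings


if __name__ == "__main__":
    for title, price in extract_listings(HTML):
        print(title, price)
```

Note that xpath() always returns a list, which is why the full script indexes results with [0] and guards against empty lists.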

2. Saving the data to Excel with the xlsxwriter module

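xlsxwriter is a library for producing Excel files; it lets us generate them quickly, in bulk, and automatically. It can write data, draw charts, and handle most common Excel tasks. Its drawback is that it can only create new files, not modify existing ones: if a new file has the same name as an existing one, the existing file is overwritten.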
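
A minimal sketch of the write pattern the script relies on: a bold header row, then one row per record, addressed by zero-based (row, col) indices. The helper name write_rows, the file name demo.xlsx and the sample records are made up for illustration. Because xlsxwriter always creates a new file, running it twice simply overwrites demo.xlsx.

```python
import xlsxwriter


def write_rows(path, headers, rows):
    """Create a new .xlsx file (overwriting any existing one) with a bold header row."""
    workbook = xlsxwriter.Workbook(path)
    worksheet = workbook.add_worksheet()
    bold = workbook.add_format({"bold": True})
    # Row 0 holds the headers; xlsxwriter uses zero-based (row, col) indices.
    for col, title in enumerate(headers):
        worksheet.write(0, col, title, bold)
    # Data rows start at row 1, directly below the header.
    for row, record in enumerate(rows, start=1):
        for col, value in enumerate(record):
            worksheet.write_string(row, col, value)
    workbook.close()  # the file is only written to disk on close()


if __name__ == "__main__":
    write_rows("demo.xlsx", ["Community", "Price"],
               [["Sunshine Garden", "¥5800"], ["Riverside Court", "¥4200"]])
```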

3. Crawling approach

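At the time of writing, the Beike rental listings span 100 pages in total. The plan is to fetch the HTML of each page, extract the fields we need into one dict per listing, collect the dicts from all pages into a single list, and finally write that list to Excel.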
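
The page-by-page plan can be sketched independently of the network. Here fetch and parse are hypothetical stand-ins passed in as functions (in the real script they correspond to get_html and parse_html), and the URL pattern with a {} page placeholder is reconstructed from the script's own URL and may no longer match the live site. Covering pages 1 through 100 needs range(1, 101), since range excludes its end.

```python
import time

# URL pattern reconstructed from the original script; the live site may differ.
BASE_URL = "https://bj.zu.ke.com/zufang/pg{}/#contentList"


def page_urls(total_pages):
    """Build the listing URL for pages 1..total_pages (inclusive)."""
    return [BASE_URL.format(page) for page in range(1, total_pages + 1)]


def crawl(total_pages, fetch, parse, delay=0.0):
    """Fetch every page, parse it into dicts and collect all records in one list.

    fetch(url) -> HTML string and parse(html) -> list of dicts are supplied by the caller.
    """
    records = []
    for url in page_urls(total_pages):
        html = fetch(url)
        records.extend(parse(html))
        if delay:
            time.sleep(delay)  # pause between requests to go easy on the server
    return records


if __name__ == "__main__":
    # Offline demo with fake fetch/parse functions.
    fake_fetch = lambda url: url
    fake_parse = lambda html: [{"source": html}]
    for record in crawl(3, fake_fetch, fake_parse):
        print(record)
```

Passing fetch and parse in as arguments keeps the pagination logic testable without touching the network.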

4. Crawler source code

```python
# @Author: Rainbowhhy
# @Date  : 19-6-25 6:35 PM
import time

import requests
import xlsxwriter
from lxml import etree


def get_html(page):
    """Fetch the HTML of one listing page."""
    url = "https://bj.zu.ke.com/zufang/pg{}/#contentList".format(page)
    headers = {
        'user-agent': 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 '
                      '(KHTML, like Gecko) Chrome/70.0.3538.77 Safari/537.36'
    }
    response = requests.get(url, headers=headers, timeout=10).text
    return response


def parse_html(htmlcode, data):
    """Parse one page of HTML and append one dict per listing to data."""
    content = etree.HTML(htmlcode)
    results = content.xpath('//div[@class="content__article"]/div[1]/div')
    for result in results:
        community = result.xpath(
            './div[1]/p[@class="content__list--item--title twoline"]/a/text()'
        )[0].replace('\n', '').strip().split()[0]
        address = "-".join(result.xpath('./div/p[@class="content__list--item--des"]/a/text()'))
        brand = result.xpath('./div/p[@class="content__list--item--brand oneline"]/text()')
        landlord = brand[0].replace('\n', '').strip() if len(brand) > 0 else ""
        postime = result.xpath('./div/p[@class="content__list--item--time oneline"]/text()')[0]
        introduction = ",".join(result.xpath('./div/p[@class="content__list--item--bottom oneline"]/i/text()'))
        price = result.xpath('./div/span/em/text()')[0]
        description = "".join(result.xpath('./div/p[2]/text()')) \
            .replace('\n', '').replace('-', '').strip().split()
        area = description[0]
        count = len(description)
        # Tokens between the area (first) and the layout (last) make up the orientation
        orientation = "".join(description[1:-1]) if 3 <= count <= 6 else ""
        pattern = description[-1]
        spans = result.xpath('./div/p[2]/span/text()')
        floor = "".join(spans[1].replace('\n', '').strip().split()).strip() if len(spans) > 1 else ""
        date_time = time.strftime("%Y-%m-%d", time.localtime())
        # Store the fields of one listing in a dict
        data_dict = {
            "community": community,
            "address": address,
            "landlord": landlord,
            "postime": postime,
            "introduction": introduction,
            "price": '¥' + price,
            "area": area,
            "orientation": orientation,
            "pattern": pattern,
            "floor": floor,
            "date_time": date_time,
        }
        data.append(data_dict)


# Column headers paired with the dict keys filled in by parse_html
COLUMNS = [
    ('Community', 'community'),
    ('Address', 'address'),
    ('Listed by', 'landlord'),
    ('Posted', 'postime'),
    ('Features', 'introduction'),
    ('Price', 'price'),
    ('Area', 'area'),
    ('Orientation', 'orientation'),
    ('Layout', 'pattern'),
    ('Floor', 'floor'),
    ('Date viewed', 'date_time'),
]


def excel_storage(response):
    """Write the list of dicts to an Excel file."""
    workbook = xlsxwriter.Workbook('./beikeHouse.xlsx')
    worksheet = workbook.add_worksheet()
    # Bold header row
    bold_format = workbook.add_format({'bold': True})
    for col, (title, _) in enumerate(COLUMNS):
        worksheet.write(0, col, title, bold_format)
    # One row per listing, starting below the header
    for row, item in enumerate(response, start=1):
        for col, (_, key) in enumerate(COLUMNS):
            worksheet.write_string(row, col, item[key])
    workbook.close()


def main():
    all_datas = []
    # The site has 100 pages: range(1, 101) covers pages 1 to 100
    for page in range(1, 101):
        html = get_html(page)
        parse_html(html, all_datas)
        time.sleep(1)  # pause between requests to go easy on the server
    excel_storage(all_datas)


if __name__ == '__main__':
    main()
```
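
Much of parse_html repeats the pattern "take the first XPath result, or an empty string if there is none", and it derives the orientation from however many tokens the description line splits into. Below is a sketch of both ideas as plain functions; first_text and split_description are hypothetical helper names, and the sample tokens are invented to mimic the area / orientation / layout shape the script assumes (area first, layout last). Unlike the script, split_description returns empty strings for an empty token list instead of raising IndexError.

```python
def first_text(values, default=""):
    """Return the first XPath text result without newlines and outer spaces, or a default."""
    if not values:
        return default
    return values[0].replace("\n", "").strip()


def split_description(tokens):
    """Split description tokens into (area, orientation, pattern).

    Mirrors the script's rule: area is the first token, pattern (layout) the last,
    and when there are 3 to 6 tokens the ones in between are joined as orientation.
    """
    if not tokens:
        return "", "", ""
    area, pattern = tokens[0], tokens[-1]
    orientation = "".join(tokens[1:-1]) if 3 <= len(tokens) <= 6 else ""
    return area, orientation, pattern


if __name__ == "__main__":
    print(first_text(["\n   Sunshine Garden  "]))
    print(first_text([]))
    # Invented sample: '南' = south, '北' = north, '2室1厅1卫' = 2 bed / 1 living / 1 bath
    print(split_description(["60㎡", "南", "北", "2室1厅1卫"]))
```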

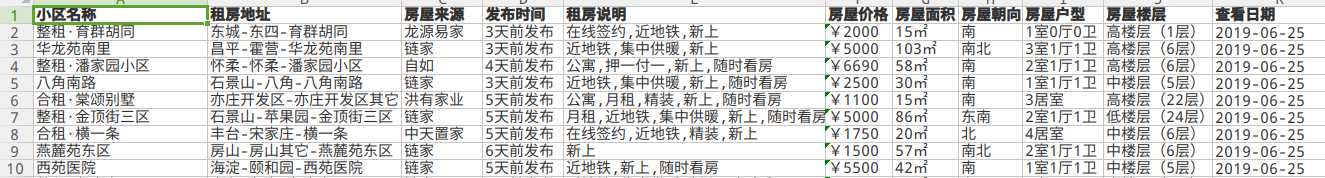

5. 信息截图
