[Paper Reading] Show, Attend and Tell: Neural Image Caption Generation with Visual Attention

Posted by zlian2016

Paper link: https://arxiv.org/pdf/1502.03044.pdf

Code links: https://github.com/kelvinxu/arctic-captions & https://github.com/yunjey/show-attend-and-tell & https://github.com/jazzsaxmafia/show_attend_and_tell.tensorflow

Main Contributions

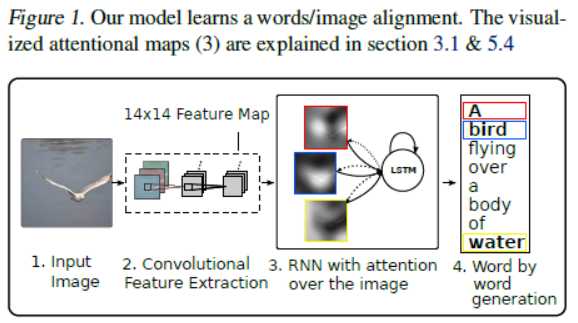

In this paper, the authors bring the attention mechanism into neural image caption generation (Neural Image Captioning) and propose two attention models: 'Soft' Deterministic Attention and 'Hard' Stochastic Attention. The accompanying figure shows the overall framework of the Show, Attend and Tell model.

- Stochastic 'Hard' Attention

- Deterministic 'Soft' Attention
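
The difference between the two variants can be illustrated with a minimal NumPy sketch. It is not taken from any of the repositories linked above. The scoring MLP, the parameter names (`W_f`, `W_h`, `v`), and all sizes are assumptions chosen for illustration. Given attention weights over the image locations, the soft variant takes the weighted average of the annotation vectors, while the hard variant samples a single location from the same distribution.

```python
import numpy as np

def attention_weights(features, hidden, W_f, W_h, v):
    """Score every image location with a small MLP, then apply softmax.

    features: (L, D) annotation vectors, one per image location
    hidden:   (H,)   previous decoder hidden state
    W_f, W_h, v: hypothetical MLP parameters (illustrative only)
    """
    scores = np.tanh(features @ W_f + hidden @ W_h) @ v  # shape (L,)
    scores = scores - scores.max()                       # numerical stability
    exp = np.exp(scores)
    return exp / exp.sum()

def soft_context(features, alpha):
    """Deterministic 'soft' attention: expectation of the features under alpha."""
    return alpha @ features  # shape (D,)

def hard_context(features, alpha, rng):
    """Stochastic 'hard' attention: sample one location according to alpha."""
    idx = rng.choice(len(alpha), p=alpha)
    return features[idx], idx

# Illustrative sizes (assumed): L locations, D feature dims, H hidden dims, K MLP width.
rng = np.random.default_rng(0)
L, D, H, K = 196, 512, 256, 128
features = rng.standard_normal((L, D))
hidden = rng.standard_normal(H)
W_f = rng.standard_normal((D, K)) * 0.01
W_h = rng.standard_normal((H, K)) * 0.01
v = rng.standard_normal(K)

alpha = attention_weights(features, hidden, W_f, W_h, v)
z_soft = soft_context(features, alpha)
z_hard, picked = hard_context(features, alpha, rng)
```

Because `soft_context` is a smooth function of `alpha`, it can be trained end to end with standard backpropagation. The sampling step in `hard_context` is not differentiable, so the hard variant needs a different training procedure, such as the sampling-based gradient estimate the paper describes.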

Experimental Details
