Kubernetes Cluster Deployment

Posted by zoujiaojiao


Environment

JiaoJiao_Centos7-1(152.112) 192.168.152.112

JiaoJiao_Centos7-2(152.113) 192.168.152.113

JiaoJiao_Centos7-3(152.114) 192.168.152.114

Each machine is provisioned with 4 CPU cores, 8 GB of memory, and an 80 GB disk.

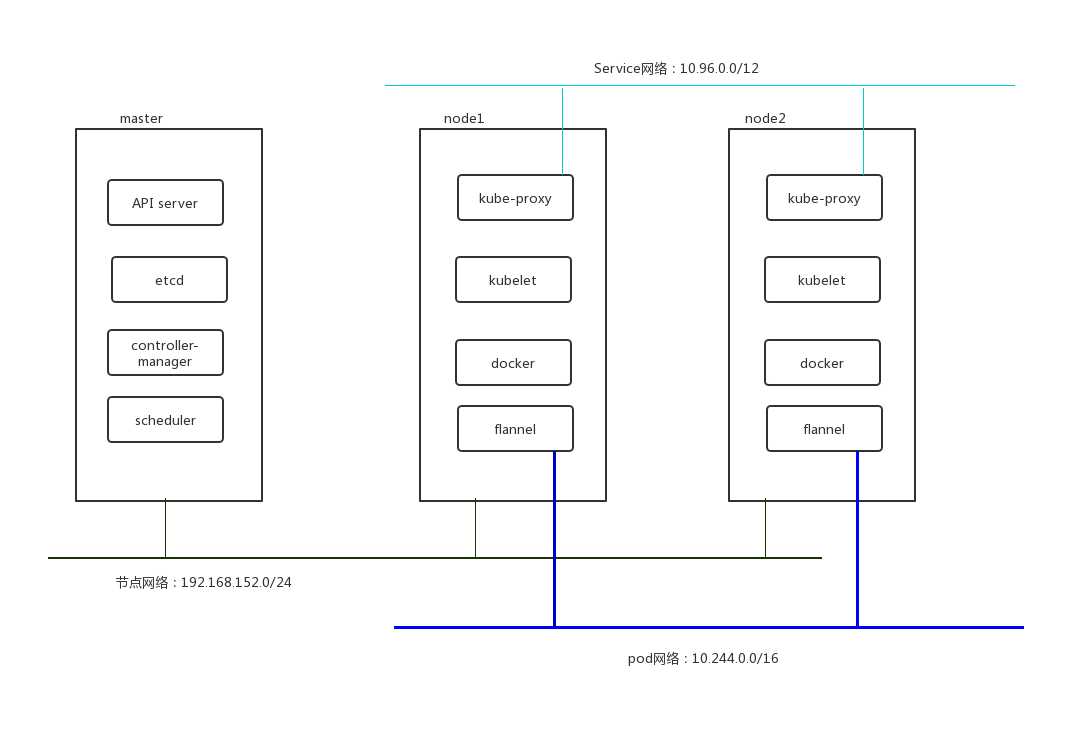

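Cluster planning

Deployment methods

Prerequisites: the hosts communicate by hostname, their clocks are synchronized, and firewalld and iptables.service are stopped.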

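Method 1: install from yum/rpm packages. This is the complex route.

1. Install the etcd cluster on the master node and flannel on all nodes.

2. Install and configure the master:

kube-apiserver, kube-scheduler, kube-controller-manager

3. Install and configure the nodes:

kube-proxy

kubelet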

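Method 2: install with the kubeadm tool. This is the simple route.

1. On the master and on every node, install kubelet, kubeadm, and docker with yum.

2. Initialize the master: kubeadm init

3. Start a flannel pod on the master.

4. Join each node to the cluster: kubeadm join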

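Deployment steps

This walkthrough uses method 2, installation with kubeadm.

Preparing the environment

Set the hostname (required on all 3 machines; use node01 and node02 on the other two)

# hostnamectl set-hostname master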

Configure hosts and DNS resolution (required on all 3 machines)

The listing below was taken on node02:

# cat /etc/hosts
127.0.0.1 node02 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 node02 localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.152.112 master
192.168.152.113 node01
192.168.152.114 node02
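The three cluster entries can be appended the same way on every machine. The following is a minimal sketch, not part of the original procedure: add_host_entry is a hypothetical helper, and HOSTS_FILE defaults to a scratch file so the script can be tried without touching /etc/hosts.

```shell
# Sketch: append the cluster's host entries to a hosts file, skipping
# entries that are already present. add_host_entry is a hypothetical helper.

# Defaults to a scratch file; set HOSTS_FILE=/etc/hosts to apply it for real.
HOSTS_FILE="${HOSTS_FILE:-./hosts.test}"

add_host_entry() {
    local ip="$1" name="$2"
    touch "$HOSTS_FILE"
    # -x matches the whole line, -F treats the pattern as a fixed string.
    if ! grep -qxF "$ip $name" "$HOSTS_FILE"; then
        echo "$ip $name" >> "$HOSTS_FILE"
    fi
}

add_host_entry 192.168.152.112 master
add_host_entry 192.168.152.113 node01
add_host_entry 192.168.152.114 node02
```

Because each entry is checked before it is written, running the script a second time leaves the file unchanged.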

The default DNS server could not resolve opsx.alibaba.com or mirrors.aliyun.com. Using nameserver 192.168.?.? (set this according to your own company's network) solved the problem.

# cat /etc/resolv.conf
# Generated by NetworkManager
nameserver 192.168.?.?

Stop the firewall and iptables, and check that the bridge netfilter settings are 1 (required on all 3 machines)

# systemctl stop firewalld.service
# systemctl stop iptables.service
# systemctl disable firewalld.service
# cat /proc/sys/net/bridge/bridge-nf-call-ip6tables
1
# cat /proc/sys/net/bridge/bridge-nf-call-iptables
1
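The two checks above can be scripted so that the same test runs on each machine. This is a sketch under assumptions: check_flag is a hypothetical helper that only reads the file it is given and reports whether it contains 1.

```shell
# Sketch: verify that a kernel flag file contains 1.
# check_flag is a hypothetical helper; it never modifies the file.
check_flag() {
    local file="$1" value
    if [ ! -r "$file" ]; then
        echo "MISSING $file"
        return 1
    fi
    value="$(cat "$file")"
    if [ "$value" = "1" ]; then
        echo "OK $file"
    else
        echo "BAD $file=$value"
        return 1
    fi
}

for f in /proc/sys/net/bridge/bridge-nf-call-iptables \
         /proc/sys/net/bridge/bridge-nf-call-ip6tables; do
    # "|| true" keeps the loop going so both files are always reported.
    check_flag "$f" || true
done
```

If a file is reported as MISSING, the bridge netfilter kernel module is probably not loaded yet; on CentOS 7 that is usually fixed with modprobe br_netfilter, though this depends on the kernel in use.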

Configure the Docker yum repository

# yum update
# yum install -y yum-utils device-mapper-persistent-data lvm2 wget
# cd /etc/yum.repos.d
# wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Configure the Kubernetes yum repository

# wget https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
# wget https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
# rpm --import rpm-package-key.gpg
# rpm --import yum-key.gpg
# cat kubernetes.repo
[kubernetes]
name=Kubernetes Repo
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
enabled=1
# yum repolist
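The text shows the contents of kubernetes.repo but not how the file was created. One way to create it is with a here-document, sketched below. write_k8s_repo and REPO_DIR are hypothetical names; REPO_DIR defaults to the current directory so that nothing under /etc is changed unless you ask for it.

```shell
# Sketch: write the repository definition shown above with a here-document.
# REPO_DIR defaults to the current directory; set REPO_DIR=/etc/yum.repos.d
# to apply it for real.
REPO_DIR="${REPO_DIR:-.}"

write_k8s_repo() {
    # The quoted 'EOF' prevents any shell expansion inside the file body.
    cat > "$REPO_DIR/kubernetes.repo" <<'EOF'
[kubernetes]
name=Kubernetes Repo
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
enabled=1
EOF
}

write_k8s_repo
```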

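Install kubelet, kubeadm, and docker on the master and on every node

# yum install docker-ce kubelet kubeadm

Install kubectl on the master; installing it on the nodes is optional

kubectl is the client program for the API server. It connects to the API server on the master to create, delete, update, and query Kubernetes resource objects.

# yum install kubectl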
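The install commands above take whatever version the repository currently offers, while the later kubeadm init step asks for v1.14.2. Pinning the package versions avoids a mismatch. The sketch below assumes the repository provides 1.14.2 packages. run is a hypothetical wrapper that only prints each command unless DRY_RUN=0 is set.

```shell
# Sketch: pin the Kubernetes packages to the version passed to kubeadm init.
# run is a hypothetical wrapper: it prints commands unless DRY_RUN=0.
K8S_VERSION="${K8S_VERSION:-1.14.2}"

run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

install_k8s_packages() {
    # yum accepts name-version to select a specific package version.
    run yum install -y docker-ce \
        "kubelet-$K8S_VERSION" "kubeadm-$K8S_VERSION" "kubectl-$K8S_VERSION"
    # kubeadm's preflight checks warn when the kubelet service is not enabled.
    run systemctl enable kubelet.service
}

install_k8s_packages
```

Run it once as shown to review the commands, then again with DRY_RUN=0 to execute them.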

Docker configuration

Configure the private registry and the registry mirror address

# cat /etc/docker/daemon.json
{
  "registry-mirrors": ["http://295c6a59.m.daocloud.io"],
  "insecure-registries": ["<address of your own private registry>"]
}

Set the proxy

The Environment line in the [Service] section sets the proxy address and the addresses that bypass the proxy.

# cat /usr/lib/systemd/system/docker.service | grep -v "^#"
[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
BindsTo=containerd.service
After=network-online.target firewalld.service containerd.service
Wants=network-online.target
Requires=docker.socket

[Service]
Type=notify
ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock
ExecReload=/bin/kill -s HUP $MAINPID
TimeoutSec=0
RestartSec=2
Restart=always
Environment="HTTPS_PROXY=http://www.ik8s.io:10080" "NO_PROXY=localhost,127.0.0.1,192.168.152.0/24"
StartLimitBurst=3
StartLimitInterval=60s
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
TasksMax=infinity
Delegate=yes
KillMode=process

[Install]
WantedBy=multi-user.target
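An alternative to editing the packaged unit file is a systemd drop-in. This is the approach described in Docker's own documentation, and it survives package upgrades that replace docker.service. The sketch below writes the same Environment line into a drop-in file. write_proxy_dropin and DROPIN_DIR are hypothetical names, and DROPIN_DIR defaults to a scratch directory rather than the real location, /etc/systemd/system/docker.service.d.

```shell
# Sketch: put the proxy settings in a systemd drop-in instead of editing
# /usr/lib/systemd/system/docker.service directly.
# DROPIN_DIR defaults to a scratch directory; the real location is
# /etc/systemd/system/docker.service.d.
DROPIN_DIR="${DROPIN_DIR:-./docker.service.d}"

write_proxy_dropin() {
    mkdir -p "$DROPIN_DIR"
    cat > "$DROPIN_DIR/http-proxy.conf" <<'EOF'
[Service]
Environment="HTTPS_PROXY=http://www.ik8s.io:10080" "NO_PROXY=localhost,127.0.0.1,192.168.152.0/24"
EOF
}

write_proxy_dropin
# After writing to the real location:
#   systemctl daemon-reload && systemctl restart docker
```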

Start Docker

# systemctl daemon-reload
# systemctl start docker
# docker info
Containers: 0
 Running: 0
 Paused: 0
 Stopped: 0
Images: 0
Server Version: 18.09.6
Storage Driver: overlay2
 Backing Filesystem: xfs
 Supports d_type: true
 Native Overlay Diff: false
Logging Driver: json-file
Cgroup Driver: cgroupfs
Plugins:
 Volume: local
 Network: bridge host macvlan null overlay
 Log: awslogs fluentd gcplogs gelf journald json-file local logentries splunk syslog
Swarm: inactive
Runtimes: runc
Default Runtime: runc
Init Binary: docker-init
containerd version: bb71b10fd8f58240ca47fbb579b9d1028eea7c84
runc version: 2b18fe1d885ee5083ef9f0838fee39b62d653e30
init version: fec3683
Security Options:
 seccomp
  Profile: default
Kernel Version: 3.10.0-514.el7.x86_64
Operating System: CentOS Linux 7 (Core)
OSType: linux
Architecture: x86_64
CPUs: 4
Total Memory: 7.64GiB
Name: master
ID: KEBQ:4XXU:LTA7:J5QH:HK4F:JS26:RKI4:YFPJ:RY45:Q647:SJI6:VZDQ
Docker Root Dir: /var/lib/docker
Debug Mode (client): false
Debug Mode (server): false
HTTPS Proxy: http://www.ik8s.io:10080    # proxy address
No Proxy: localhost,127.0.0.1,192.168.152.0/24
Registry: https://index.docker.io/v1/
Labels:
Experimental: false
Insecure Registries:
 XXXXX    # the company's private registry address
 127.0.0.0/8
Registry Mirrors:
 http://295c6a59.m.daocloud.io/    # registry mirror address
Live Restore Enabled: false
Product License: Community Engine
# systemctl enable docker

Initializing the master: kubeadm init

Edit the kubelet configuration file so that it ignores the swap error

# cat /etc/sysconfig/kubelet
KUBELET_EXTRA_ARGS="--fail-swap-on=false"

Initialize

You can look at the help first:

# kubeadm init --help

The command used here:

# kubeadm init --kubernetes-version=v1.14.2 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12 --ignore-preflight-errors=Swap

--kubernetes-version=v1.14.2      # the Kubernetes version; pick the one you need from https://github.com/kubernetes/kubernetes/releases
--pod-network-cidr=10.244.0.0/16  # the pod network; 10.244.0.0/16 is flannel's default
--service-cidr=10.96.0.0/12       # the service network; this is also the default value
--ignore-preflight-errors=Swap    # ignore the swap preflight error

Running it on the master:

# kubeadm init --kubernetes-version=v1.14.2 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12 --ignore-preflight-errors=Swap
[init] Using Kubernetes version: v1.14.2
[preflight] Running pre-flight checks
    [WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/
    [WARNING Swap]: running with swap on is not supported. Please disable swap
    [WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'
[preflight] Pulling images required for setting up a Kubernetes cluster
[preflight] This might take a minute or two, depending on the speed of your internet connection
[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'
error execution phase preflight: [preflight] Some fatal errors occurred:
    [ERROR ImagePull]: failed to pull image k8s.gcr.io/kube-apiserver:v1.14.2: output: Error response from daemon: Get https://k8s.gcr.io/v2/: proxyconnect tcp: dial tcp 172.96.236.117:10080: connect: connection refused, error: exit status 1
    [ERROR ImagePull]: failed to pull image k8s.gcr.io/kube-controller-manager:v1.14.2: output: Error response from daemon: Get https://k8s.gcr.io/v2/: proxyconnect tcp: dial tcp 172.96.236.117:10080: connect: connection refused, error: exit status 1
    [ERROR ImagePull]: failed to pull image k8s.gcr.io/kube-scheduler:v1.14.2: output: Error response from daemon: Get https://k8s.gcr.io/v2/: proxyconnect tcp: dial tcp 172.96.236.117:10080: connect: connection refused, error: exit status 1

The initialization failed because the images could not be pulled.

Solution: Docker Hub hosts mirrors of the Google container images, so the required images can be pulled with the following commands:

# docker pull mirrorgooglecontainers/kube-apiserver:v1.14.2
# docker pull mirrorgooglecontainers/kube-controller-manager:v1.14.2
# docker pull mirrorgooglecontainers/kube-scheduler:v1.14.2
# docker pull mirrorgooglecontainers/kube-proxy:v1.14.2
# docker pull mirrorgooglecontainers/pause:3.1
# docker pull mirrorgooglecontainers/etcd:3.3.10
# docker pull coredns/coredns:1.3.1

Then use docker tag to retag the images under the names kubeadm expects:

# docker tag mirrorgooglecontainers/kube-apiserver:v1.14.2 k8s.gcr.io/kube-apiserver:v1.14.2
# docker tag mirrorgooglecontainers/kube-controller-manager:v1.14.2 k8s.gcr.io/kube-controller-manager:v1.14.2
# docker tag mirrorgooglecontainers/kube-scheduler:v1.14.2 k8s.gcr.io/kube-scheduler:v1.14.2
# docker tag mirrorgooglecontainers/kube-proxy:v1.14.2 k8s.gcr.io/kube-proxy:v1.14.2
# docker tag mirrorgooglecontainers/pause:3.1 k8s.gcr.io/pause:3.1
# docker tag mirrorgooglecontainers/etcd:3.3.10 k8s.gcr.io/etcd:3.3.10
# docker tag coredns/coredns:1.3.1 k8s.gcr.io/coredns:1.3.1
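The pull and tag commands follow one pattern: the part of the image name after the last "/" stays the same, and only the registry prefix changes to k8s.gcr.io. A loop can express that, as sketched below. The image list is copied from the commands above. run is a hypothetical wrapper that only prints each command unless DRY_RUN=0 is set.

```shell
# Sketch: pull each image from its Docker Hub mirror and retag it under
# k8s.gcr.io. run is a hypothetical wrapper: it prints unless DRY_RUN=0.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Mirror image names, one per line, taken from the commands above.
IMAGES="mirrorgooglecontainers/kube-apiserver:v1.14.2
mirrorgooglecontainers/kube-controller-manager:v1.14.2
mirrorgooglecontainers/kube-scheduler:v1.14.2
mirrorgooglecontainers/kube-proxy:v1.14.2
mirrorgooglecontainers/pause:3.1
mirrorgooglecontainers/etcd:3.3.10
coredns/coredns:1.3.1"

mirror_images() {
    local src name_tag
    for src in $IMAGES; do
        # Strip everything up to the last "/" to keep only "name:tag".
        name_tag="${src##*/}"
        run docker pull "$src"
        run docker tag "$src" "k8s.gcr.io/$name_tag"
    done
}

mirror_images
```

For a different Kubernetes version, kubeadm config images list --kubernetes-version <version> prints the image names and tags that kubeadm expects, which can be used to adjust the list.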

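With the images in place locally, run the kubeadm init command again. The output of a successful initialization includes follow-up instructions. Carry them out, and save the join command that it prints:

kubeadm join 192.168.152.112:6443 --token qlu35g.rfxl6k8mvry8nxfp \
    --discovery-token-ca-cert-hash sha256:8febc1b1402b471187e55186b4c3937ac23cfe830fb6ccfbd2e9f212e011218d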
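The join command has three variable parts: the API server endpoint, the bootstrap token, and the CA certificate hash. Keeping them as separate arguments makes the command easier to store and reuse on each node. The following sketch assumes a hypothetical helper named build_join_cmd. It only prints the command, and it appends the --ignore-preflight-errors=Swap flag that this walkthrough uses when joining the nodes.

```shell
# Sketch: assemble the join command from its three parts.
# build_join_cmd is a hypothetical helper; it prints and does not execute.
build_join_cmd() {
    local endpoint="$1" token="$2" ca_hash="$3"
    echo "kubeadm join $endpoint --token $token --discovery-token-ca-cert-hash $ca_hash --ignore-preflight-errors=Swap"
}

build_join_cmd 192.168.152.112:6443 qlu35g.rfxl6k8mvry8nxfp \
    sha256:8febc1b1402b471187e55186b4c3937ac23cfe830fb6ccfbd2e9f212e011218d
```

Bootstrap tokens expire (after 24 hours by default). If the saved token no longer works, running kubeadm token create --print-join-command on the master prints a fresh join command.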

Deploying the network: start a flannel pod on the master

Download the image. The official image could not be pulled directly, so I used a mirror found through a Baidu search:

# docker pull jmgao1983/flannel:v0.11.0-amd64
# docker tag jmgao1983/flannel:v0.11.0-amd64 quay.io/coreos/flannel:v0.11.0-amd64
# docker rmi jmgao1983/flannel:v0.11.0-amd64

Start it:

# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Check the result:

# kubectl get pods -n kube-system
NAME                             READY   STATUS    RESTARTS   AGE
coredns-fb8b8dccf-f7db6          1/1     Running   0          53m
coredns-fb8b8dccf-sd8hk          1/1     Running   0          53m
etcd-master                      1/1     Running   0          52m
kube-apiserver-master            1/1     Running   0          52m
kube-controller-manager-master   1/1     Running   0          52m
kube-flannel-ds-amd64-zq5zr      1/1     Running   0          19m
kube-proxy-8mtbc                 1/1     Running   0          53m
kube-scheduler-master            1/1     Running   0          52m
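Scanning the STATUS column by eye gets tedious as the pod list grows. The sketch below filters the listing down to the pods that are not yet Running. not_running is a hypothetical helper that reads the kubectl get pods table on standard input, so it can be tried on pasted output without a cluster.

```shell
# Sketch: read `kubectl get pods` output on stdin and print the names of
# pods whose STATUS column is not "Running". not_running is hypothetical.
not_running() {
    # NR > 1 skips the header row; column 3 is STATUS.
    awk 'NR > 1 && $3 != "Running" { print $1 }'
}

# Typical use on the master:
#   kubectl get pods -n kube-system | not_running
```

An empty result means every listed pod is in the Running state.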

The master is now installed! Save the images to an archive so they can be reused on the nodes:

# docker save -o master.tar k8s.gcr.io/kube-proxy:v1.14.2 k8s.gcr.io/kube-apiserver:v1.14.2 k8s.gcr.io/kube-controller-manager:v1.14.2 k8s.gcr.io/kube-scheduler:v1.14.2 quay.io/coreos/flannel:v0.11.0-amd64 k8s.gcr.io/coredns:1.3.1 k8s.gcr.io/etcd:3.3.10 k8s.gcr.io/pause:3.1

Joining the nodes to the cluster

Copy the image archive saved on the master to the nodes (run on the master)

# scp master.tar node01:/root/
# scp master.tar node02:/root/

Load the images into the local Docker daemon (run on each node)

# docker load < master.tar
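Copying and loading can be driven from the master in one loop. The sketch below assumes root SSH access from the master to each node, which the scp commands above already rely on. NODES and distribute_images are hypothetical names, and run only prints each command unless DRY_RUN=0 is set.

```shell
# Sketch: copy the image archive to every node and load it there.
# run is a hypothetical wrapper: it prints commands unless DRY_RUN=0.
NODES="${NODES:-node01 node02}"

run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

distribute_images() {
    local archive="$1" node
    for node in $NODES; do
        run scp "$archive" "$node:/root/"
        # docker load -i reads the archive from a file instead of stdin.
        run ssh "$node" docker load -i "/root/$(basename "$archive")"
    done
}

distribute_images master.tar
```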

Edit the kubelet configuration on each node so that it ignores the swap error:

# cat /etc/sysconfig/kubelet
KUBELET_EXTRA_ARGS="--fail-swap-on=false"

Run the join command on each node

# kubeadm join 192.168.152.112:6443 --token qlu35g.rfxl6k8mvry8nxfp --discovery-token-ca-cert-hash sha256:8febc1b1402b471187e55186b4c3937ac23cfe830fb6ccfbd2e9f212e011218d --ignore-preflight-errors=Swap

Check the nodes from the master

# kubectl get nodes
NAME     STATUS   ROLES    AGE    VERSION
master   Ready    master   107m   v1.14.2
node01   Ready    <none>   23m    v1.14.2
node02   Ready    <none>   23m    v1.14.2
