Kubernetes Cluster Deployment (yum Installation)

Posted by bixiaoyu

Environment preparation

Kubernetes-Master: 192.168.37.134 # yum install kubernetes-master etcd flannel -y

Kubernetes-node1: 192.168.37.135 # yum install kubernetes-node etcd docker flannel *rhsm* -y

Kubernetes-node2: 192.168.37.136 # yum install kubernetes-node etcd docker flannel *rhsm* -y

OS version: CentOS 7.5

Disable the firewalld firewall on every node and make sure NTP time synchronization is working.
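The preparation step above can be sketched as a small script to run on each node. This is only a sketch under assumptions: the `run` helper, the `prepare_host` function and the `DRY_RUN` variable are hypothetical names introduced here, and by default the commands are printed rather than executed so the script can be reviewed first.

```shell
#!/usr/bin/env bash
# Print each command instead of executing it unless DRY_RUN=0 is set
# (hypothetical helper, not part of the original article).
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

prepare_host() {
  # Stop the firewall now and keep it off after a reboot.
  run systemctl stop firewalld
  run systemctl disable firewalld
  # timedatectl reports whether the system clock is NTP-synchronized.
  run timedatectl status
}

prepare_host
```

Running it with `DRY_RUN=0` as root executes the commands instead of printing them.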

【K8s-master etcd configuration】

[root@k8s-master ~]# egrep -v "#|^$" /etc/etcd/etcd.conf

ETCD_DATA_DIR="/data/etcd1"

ETCD_LISTEN_PEER_URLS="http://192.168.37.134:2380"

ETCD_LISTEN_CLIENT_URLS="http://192.168.37.134:2379,http://127.0.0.1:2379"

ETCD_MAX_SNAPSHOTS="5"

ETCD_NAME="etcd1"

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.37.134:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://192.168.37.134:2379"

ETCD_INITIAL_CLUSTER="etcd1=http://192.168.37.134:2380,etcd2=http://192.168.37.135:2380,etcd3=http://192.168.37.136:2380"

[root@k8s-master ~]# mkdir -p /data/etcd1/

[root@k8s-master ~]# chmod 757 -R /data/etcd1/

【K8s-node1 etcd configuration】

[root@k8s-node1 ~]# egrep -v "#|^$" /etc/etcd/etcd.conf

ETCD_DATA_DIR="/data/etcd2"

ETCD_LISTEN_PEER_URLS="http://192.168.37.135:2380"

ETCD_LISTEN_CLIENT_URLS="http://192.168.37.135:2379,http://127.0.0.1:2379"

ETCD_MAX_SNAPSHOTS="5"

ETCD_NAME="etcd2"

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.37.135:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://192.168.37.135:2379"

ETCD_INITIAL_CLUSTER="etcd1=http://192.168.37.134:2380,etcd2=http://192.168.37.135:2380,etcd3=http://192.168.37.136:2380"

[root@k8s-node1 ~]# mkdir -p /data/etcd2/

[root@k8s-node1 ~]# chmod 757 -R /data/etcd2/

【K8s-node2 etcd configuration】

[root@k8s-node2 ~]# egrep -v "#|^$" /etc/etcd/etcd.conf

ETCD_DATA_DIR="/data/etcd3"

ETCD_LISTEN_PEER_URLS="http://192.168.37.136:2380"

ETCD_LISTEN_CLIENT_URLS="http://192.168.37.136:2379,http://127.0.0.1:2379"

ETCD_MAX_SNAPSHOTS="5"

ETCD_NAME="etcd3"

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.37.136:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://192.168.37.136:2379"

ETCD_INITIAL_CLUSTER="etcd1=http://192.168.37.134:2380,etcd2=http://192.168.37.135:2380,etcd3=http://192.168.37.136:2380"

[root@k8s-node2 ~]# mkdir -p /data/etcd3/

[root@k8s-node2 ~]# chmod 757 -R /data/etcd3/
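The ETCD_INITIAL_CLUSTER value must be identical on all three members, and it is easy to mistype by hand. As a minimal sketch, the string can be generated from the member names and IPs used above; `build_initial_cluster` is a hypothetical helper name, and the peer port 2380 is taken from the configuration files shown.

```shell
#!/usr/bin/env bash
# Build the ETCD_INITIAL_CLUSTER value from "name=ip" pairs.
build_initial_cluster() {
  local result="" pair name ip
  for pair in "$@"; do
    name="${pair%%=*}"   # text before the first "="
    ip="${pair#*=}"      # text after the first "="
    # Join entries with commas; peers listen on port 2380.
    result="${result:+$result,}${name}=http://${ip}:2380"
  done
  printf '%s\n' "$result"
}

build_initial_cluster etcd1=192.168.37.134 etcd2=192.168.37.135 etcd3=192.168.37.136
```

The printed value can be pasted unchanged into /etc/etcd/etcd.conf on each member.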

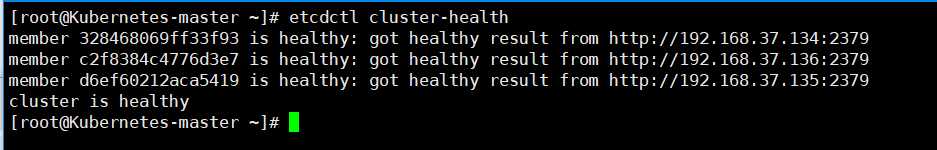

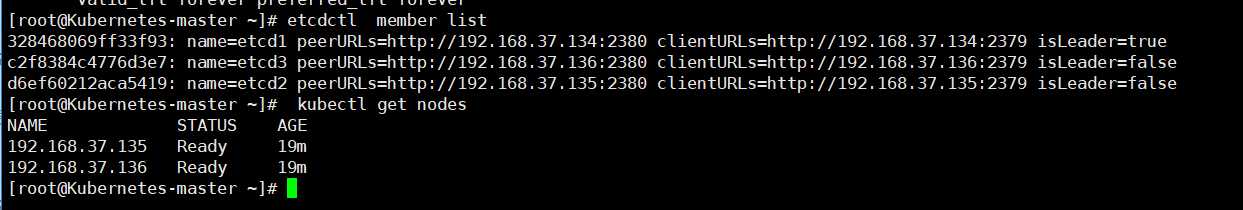

The etcd cluster is now configured. Next, start etcd and verify that the cluster is healthy.

[root@k8s-master ~]# systemctl start etcd.service # Note: start the etcd service on all three nodes and enable it at boot

[root@k8s-master ~]# systemctl enable etcd.service

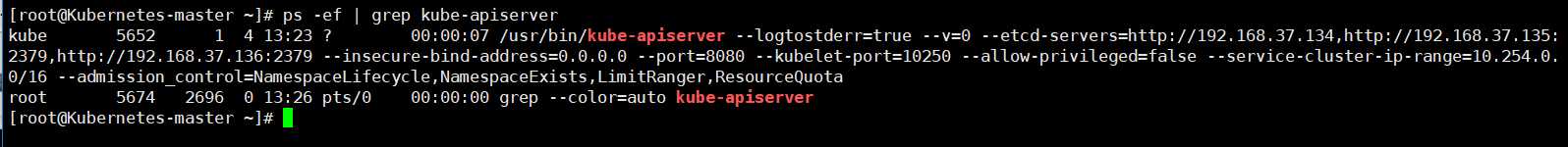
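One way to script the health check is to look for the summary line that `etcdctl cluster-health` (etcdctl v2 API) prints when every member responds. The sketch below assumes that output format; `cluster_is_healthy` is a hypothetical helper that reads the command output on stdin.

```shell
#!/usr/bin/env bash
# Succeeds when the text on stdin (the output of `etcdctl cluster-health`)
# contains the exact summary line "cluster is healthy".
cluster_is_healthy() {
  grep -qx 'cluster is healthy'
}

# On any cluster member one would run:
#   etcdctl cluster-health | cluster_is_healthy && echo "etcd OK"
#   etcdctl member list
```

`etcdctl member list` additionally shows which member is currently the leader.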

【K8s-master: apiserver and config files】

[root@k8s-master ~]# egrep -v "#|^$" /etc/kubernetes/apiserver

KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"

KUBE_API_PORT="--port=8080"

KUBELET_PORT="--kubelet-port=10250"

KUBE_ETCD_SERVERS="--etcd-servers=http://192.168.37.134:2379,http://192.168.37.135:2379,http://192.168.37.136:2379"

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16"

KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,ResourceQuota"

KUBE_API_ARGS=""

[root@k8s-master ~]# systemctl start kube-apiserver

[root@k8s-master ~]# systemctl enable kube-apiserver

[root@k8s-master ~]# egrep -v "#|^$" /etc/kubernetes/config

KUBE_LOGTOSTDERR="--logtostderr=true"

KUBE_LOG_LEVEL="--v=0"

KUBE_ALLOW_PRIV="--allow-privileged=false"

KUBE_MASTER="--master=http://192.168.37.134:8080"

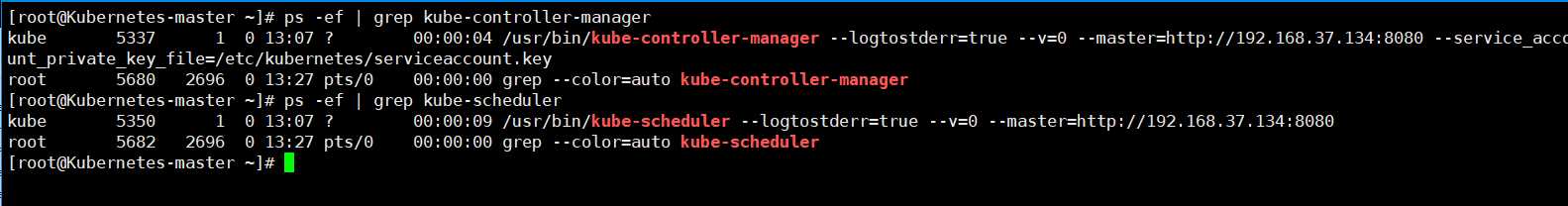

[root@k8s-master kubernetes]# systemctl start kube-controller-manager

[root@k8s-master kubernetes]# systemctl enable kube-controller-manager

[root@k8s-master kubernetes]# systemctl start kube-scheduler

[root@k8s-master kubernetes]# systemctl enable kube-scheduler
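After the three master services are running, the API server can be probed on the insecure port configured above. This is a minimal sketch assuming `curl` is installed; `apiserver_healthy` is a hypothetical helper that relies on the API server's `/healthz` endpoint answering with the body `ok`.

```shell
#!/usr/bin/env bash
# Succeeds when <base_url>/healthz answers with the body "ok".
apiserver_healthy() {
  local base_url="$1" body
  # -f: fail on HTTP errors, -sS: quiet but keep error messages, 5 s timeout.
  body="$(curl -fsS --max-time 5 "${base_url}/healthz" 2>/dev/null)" || return 1
  [ "$body" = "ok" ]
}

# Example, from any host that can reach the master:
#   apiserver_healthy http://192.168.37.134:8080 && echo "apiserver OK"
#   kubectl -s http://192.168.37.134:8080 get componentstatuses
```

`kubectl get componentstatuses` also reports the scheduler, the controller manager and the etcd members in one table.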

【k8s-node1】

kubelet configuration file

[root@k8s-node1 ~]# egrep -v "#|^$" /etc/kubernetes/kubelet

KUBELET_ADDRESS="--address=0.0.0.0"

KUBELET_HOSTNAME="--hostname-override=192.168.37.135"

KUBELET_API_SERVER="--api-servers=http://192.168.37.134:8080"

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"

KUBELET_ARGS=""

Main config file

[root@k8s-node1 ~]# egrep -v "#|^$" /etc/kubernetes/config

KUBE_LOGTOSTDERR="--logtostderr=true"

KUBE_LOG_LEVEL="--v=0"

KUBE_ALLOW_PRIV="--allow-privileged=false"

KUBE_MASTER="--master=http://192.168.37.134:8080"

[root@k8s-node1 ~]# systemctl start kubelet

[root@k8s-node1 ~]# systemctl enable kubelet

[root@k8s-node1 ~]# systemctl start kube-proxy

[root@k8s-node1 ~]# systemctl enable kube-proxy

【k8s-node2】

kubelet configuration file

[root@k8s-node2 ~]# egrep -v "#|^$" /etc/kubernetes/kubelet

KUBELET_ADDRESS="--address=0.0.0.0"

KUBELET_HOSTNAME="--hostname-override=192.168.37.136"

KUBELET_API_SERVER="--api-servers=http://192.168.37.134:8080"

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"

KUBELET_ARGS=""

Main config file

[root@k8s-node2 ~]# egrep -v "^$|#" /etc/kubernetes/config

KUBE_LOGTOSTDERR="--logtostderr=true"

KUBE_LOG_LEVEL="--v=0"

KUBE_ALLOW_PRIV="--allow-privileged=false"

KUBE_MASTER="--master=http://192.168.37.134:8080"

[root@k8s-node2 ~]# systemctl start kubelet

[root@k8s-node2 ~]# systemctl enable kubelet

[root@k8s-node2 ~]# systemctl start kube-proxy

[root@k8s-node2 ~]# systemctl enable kube-proxy
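The two kubelet files differ only in the `--hostname-override` value, so they can be rendered from one template. The following is a sketch, not the author's method; `render_kubelet_conf` is a hypothetical helper, and all flag values are copied from the files shown above.

```shell
#!/usr/bin/env bash
# Print the contents of /etc/kubernetes/kubelet for one node.
# $1 = node IP (used as the node name), $2 = master IP.
render_kubelet_conf() {
  local node_ip="$1" master_ip="$2"
  cat <<EOF
KUBELET_ADDRESS="--address=0.0.0.0"
KUBELET_HOSTNAME="--hostname-override=${node_ip}"
KUBELET_API_SERVER="--api-servers=http://${master_ip}:8080"
KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"
KUBELET_ARGS=""
EOF
}

render_kubelet_conf 192.168.37.136 192.168.37.134
```

Redirecting the output to /etc/kubernetes/kubelet on the matching node, followed by `systemctl restart kubelet`, applies it.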

【Kubernetes flanneld network configuration】

[root@k8s-master kubernetes]# egrep -v "#|^$" /etc/sysconfig/flanneld

FLANNEL_ETCD_ENDPOINTS="http://192.168.37.134:2379"

FLANNEL_ETCD_PREFIX="/atomic.io/network"

[root@k8s-node1 ~]# egrep -v "#|^$" /etc/sysconfig/flanneld

FLANNEL_ETCD_ENDPOINTS="http://192.168.37.134:2379"

FLANNEL_ETCD_PREFIX="/atomic.io/network"

[root@k8s-node2 ~]# egrep -v "#|^$" /etc/sysconfig/flanneld

FLANNEL_ETCD_ENDPOINTS="http://192.168.37.134:2379"

FLANNEL_ETCD_PREFIX="/atomic.io/network"

[root@k8s-master kubernetes]# etcdctl mk /atomic.io/network/config '{"Network":"172.17.0.0/16"}'

{"Network":"172.17.0.0/16"}

[root@k8s-master kubernetes]# etcdctl get /atomic.io/network/config

{"Network":"172.17.0.0/16"}

[root@k8s-master kubernetes]# systemctl restart flanneld

[root@k8s-master kubernetes]# systemctl enable flanneld

[root@k8s-node1 ~]# systemctl start flanneld

[root@k8s-node1 ~]# systemctl enable flanneld

[root@k8s-node2 ~]# systemctl start flanneld

[root@k8s-node2 ~]# systemctl enable flanneld

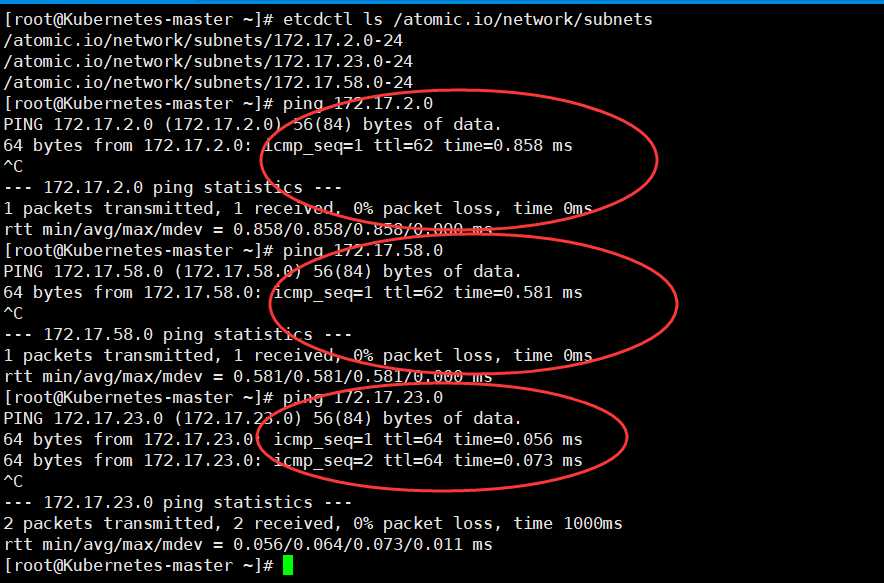

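Note: after flanneld is restarted, etcd lists one subnet per node, three in total. On each node, the docker bridge must be in the same subnet as that node's flanneld lease. If they differ, restarting the docker service fixes it; otherwise containers on the three subnets cannot ping each other.

[root@k8s-master ~]# etcdctl ls /atomic.io/network/subnets

/atomic.io/network/subnets/172.17.2.0-24

/atomic.io/network/subnets/172.17.23.0-24

/atomic.io/network/subnets/172.17.58.0-24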
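The subnet check described in the note can be sketched as follows. It assumes that flanneld has written its lease to /run/flannel/subnet.env (which defines FLANNEL_SUBNET) and that docker was started with that value as its bridge address, as the flannel package's docker integration normally arranges; `bridge_matches_flannel` and `check_node` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Succeeds when the docker0 address equals the flannel lease.
# Both arguments are in CIDR form, for example 172.17.23.1/24.
bridge_matches_flannel() {
  [ -n "$1" ] && [ "$1" = "$2" ]
}

check_node() {
  # Load FLANNEL_SUBNET from the file written by flanneld.
  . /run/flannel/subnet.env
  local docker_cidr
  # Field 4 of the one-line `ip` output is the address with prefix length.
  docker_cidr="$(ip -4 -o addr show docker0 | awk '{print $4}')"
  if bridge_matches_flannel "$docker_cidr" "$FLANNEL_SUBNET"; then
    echo "docker0 matches flannel subnet $FLANNEL_SUBNET"
  else
    echo "mismatch: docker0=$docker_cidr flannel=$FLANNEL_SUBNET (restart docker)"
  fi
}

# Run on each node after flanneld and docker are up:
#   check_node
```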

Check: view the Kubernetes node status on the master.

[root@k8s-master ~]# kubectl get nodes

NAME STATUS AGE

192.168.37.135 Ready 5m

192.168.37.136 Ready 5m
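When this check is scripted, the table can be filtered for nodes that are not yet Ready. This is a minimal sketch assuming the three-column NAME/STATUS/AGE layout shown above; `not_ready_nodes` is a hypothetical helper that reads the `kubectl get nodes` output on stdin.

```shell
#!/usr/bin/env bash
# Print the names of nodes whose STATUS column is not exactly "Ready".
# The first line (the header) is skipped.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# On the master:
#   kubectl get nodes | not_ready_nodes
# No output means every registered node reports Ready.
```

A node that was cordoned shows a compound status such as `Ready,SchedulingDisabled` and is therefore also listed, which is usually what one wants to notice.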

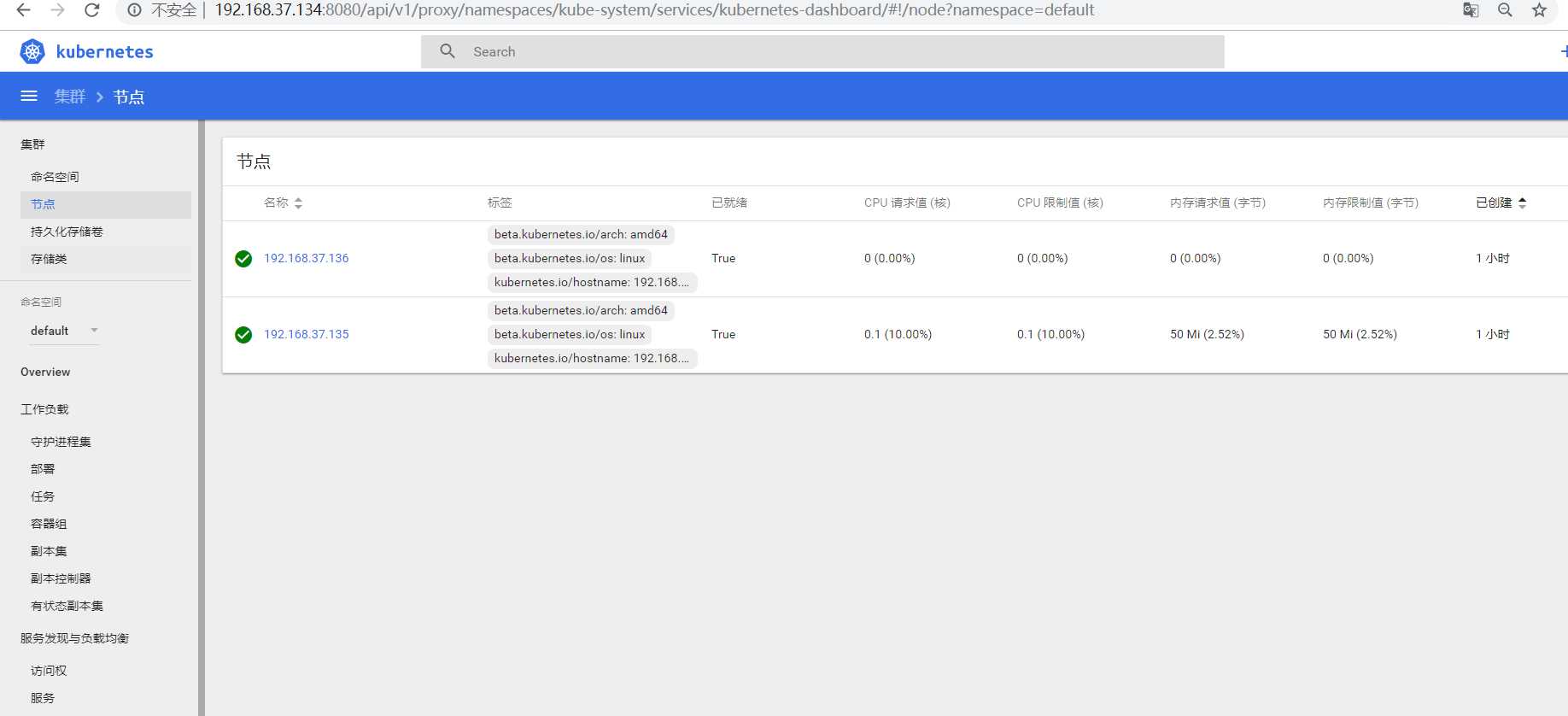

【K8s Dashboard UI】

Kubernetes manages and schedules Docker container clusters centrally; a web interface makes the cluster easier to manage and control.

Note: the images only need to be imported on node1.

[root@k8s-node1 ~]# docker load < pod-infrastructure.tgz

[root@k8s-node1 ~]# docker tag $(docker images | grep none | awk '{print $3}') registry.access.redhat.com/rhel7/pod-infrastructure

[root@k8s-node1 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.access.redhat.com/rhel7/pod-infrastructure latest 99965fb98423 18 months ago 209 MB

[root@k8s-node1 ~]# docker load < kubernetes-dashboard-amd64.tgz

[root@k8s-node1 ~]# docker tag $(docker images | grep none | awk '{print $3}') bestwu/kubernetes-dashboard-amd64:v1.6.3

[root@k8s-node1 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.access.redhat.com/rhel7/pod-infrastructure latest 99965fb98423 18 months ago 209 MB

bestwu/kubernetes-dashboard-amd64 v1.6.3 9595afede088 21 months ago 139 MB
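The `grep none` pipeline used above breaks as soon as more than one untagged image exists on the node. A slightly more defensive variant, offered as a sketch rather than the author's method, asks docker for dangling images directly and refuses to tag when the result is ambiguous; `tag_untagged_image` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Tag the single untagged (dangling) image with the given name.
# Fails if there is not exactly one such image.
tag_untagged_image() {
  local target="$1" ids count
  ids="$(docker images --quiet --filter dangling=true)"
  # Count non-empty lines, i.e. the number of dangling image IDs.
  count="$(printf '%s\n' "$ids" | grep -c .)"
  if [ "$count" -ne 1 ]; then
    echo "expected exactly one untagged image, found $count" >&2
    return 1
  fi
  docker tag "$ids" "$target"
}

# On node1, after each `docker load`:
#   tag_untagged_image registry.access.redhat.com/rhel7/pod-infrastructure
#   tag_untagged_image bestwu/kubernetes-dashboard-amd64:v1.6.3
```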

【Kubernetes-master】

Write the YAML files and create the Dashboard pod.

[root@k8s-master ~]# vim dashboard-controller.yaml

[root@k8s-master ~]# cat dashboard-controller.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
spec:
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
        scheduler.alpha.kubernetes.io/tolerations: '[{"key":"CriticalAddonsOnly", "operator":"Exists"}]'
    spec:
      containers:
      - name: kubernetes-dashboard
        image: bestwu/kubernetes-dashboard-amd64:v1.6.3
        resources:
          # keep request = limit to keep this container in guaranteed class
          limits:
            cpu: 100m
            memory: 50Mi
          requests:
            cpu: 100m
            memory: 50Mi
        ports:
        - containerPort: 9090
        args:
        - --apiserver-host=http://192.168.37.134:8080
        livenessProbe:
          httpGet:
            path: /
            port: 9090
          initialDelaySeconds: 30
          timeoutSeconds: 30

[root@k8s-master ~]# vim dashboard-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
spec:
  selector:
    k8s-app: kubernetes-dashboard
  ports:
  - port: 80
    targetPort: 9090

[root@k8s-master ~]# kubectl apply -f dashboard-controller.yaml

[root@k8s-master ~]# kubectl apply -f dashboard-service.yaml

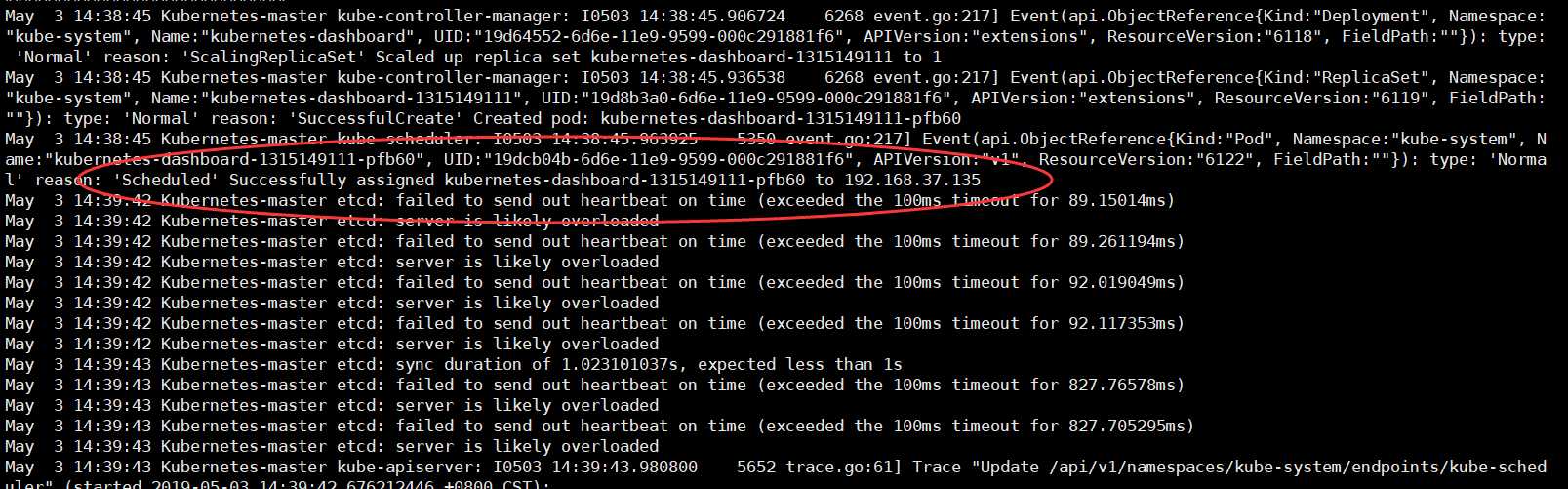

Note: while the pod is being created, watch the system log for errors.

[root@k8s-master ~]# tail -f /var/log/messages

On node1 you can see that the containers have started successfully:

[root@k8s-node1 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS

f118f845f19f bestwu/kubernetes-dashboard-amd64:v1.6.3 "/dashboard --inse..." 8 minutes ago Up 8 minutes 30dc9e7f_kubernetes-dashboard-1315149111-pfb60_kube-system_19dcb04b-6d6e-11e9-9599-000c291881f6_02fd5b8e

67b7746a6d23 registry.access.redhat.com/rhel7/pod-infrastructure:latest "/usr/bin/pod" 8 minutes ago Up 8 minutes es-dashboard-1315149111-pfb60_kube-system_19dcb04b-6d6e-11e9-9599-000c291881f6_4e2cb565

Finally, verify the Dashboard by opening it in a browser through the k8s-master node.
