Kaggle Cats vs. Dogs Classification with PyTorch

Posted by henuliulei


Preface: this article was compiled by the editors of cha138.com. It covers Kaggle cats vs. dogs classification with PyTorch, and we hope it is a useful reference.

Reference: https://blog.csdn.net/weixin_37813036/article/details/90718310

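Deep learning starts with data, so that is where we begin. The labeled cats-vs-dogs images used here total 25,000: 12,500 cats and 12,500 dogs. Download: https://www.kaggle.com/c/dogs-vs-cats-redux-kernels-edition/data. First, a quick look at the images. The download contains two folders, train and test1, used for training and testing respectively. The train folder holds the 25,000 cat and dog images, named cat.0.jpg, cat.1.jpg, ..., dog.0.jpg, dog.1.jpg, and so on.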
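The label is encoded in each file name, so a quick sanity check is to parse the names and count each class. The helpers below are a hypothetical sketch: `parse_name` and `count_labels` are our own names, not part of the original post. They assume every file is named `<class>.<index>.jpg`.

```python
from collections import Counter


def parse_name(filename):
    """Split a name like 'cat.123.jpg' into ('cat', 123)."""
    label, index, ext = filename.split(".")
    if ext != "jpg" or label not in ("cat", "dog"):
        raise ValueError(f"unexpected file name: {filename}")
    return label, int(index)


def count_labels(filenames):
    """Count how many files belong to each class."""
    return Counter(parse_name(name)[0] for name in filenames)


# Usage on the real data (not executed here):
#   import os
#   count_labels(os.listdir("path/to/train"))
```

On the Kaggle train folder, this should report 12,500 images for each class.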

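The images are loaded with torchvision's `ImageFolder`, which expects one subfolder per class under the root directory (here `cats` and `dogs`):

```python
import torch
from torchvision import datasets, transforms

batch_size = 4    # batch size (same value as in the complete listing)
num_workers = 0   # number of data-loading worker processes

# Resize the shorter side to 256, center-crop to 224x224, convert to a tensor,
# then normalize each channel with the ImageNet mean and standard deviation.
data_transform = transforms.Compose([
    transforms.Resize(256),
    transforms.CenterCrop(224),
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),
])

# ImageFolder assigns labels from the sorted subfolder names: cats -> 0, dogs -> 1.
train_dataset = datasets.ImageFolder(root='./data2/train/', transform=data_transform)
train_loader = torch.utils.data.DataLoader(train_dataset,
                                           batch_size=batch_size,
                                           shuffle=True,
                                           num_workers=num_workers)
```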
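Here is what the last two steps of `data_transform` do. `transforms.ToTensor()` turns an H×W×C `uint8` image into a C×H×W float tensor scaled to [0, 1]. `transforms.Normalize(mean, std)` then computes `(x - mean) / std` separately for each channel. The NumPy sketch below reproduces only those two steps and skips resizing and cropping. The function name `to_normalized_chw` is our own, for illustration.

```python
import numpy as np

MEAN = np.array([0.485, 0.456, 0.406])  # per-channel means passed to Normalize above
STD = np.array([0.229, 0.224, 0.225])   # per-channel standard deviations


def to_normalized_chw(image_hwc_uint8):
    """Mimic ToTensor() + Normalize(): HWC uint8 in [0, 255] -> CHW floats, standardized per channel."""
    x = image_hwc_uint8.astype(np.float32) / 255.0         # ToTensor: scale to [0, 1]
    x = x.transpose(2, 0, 1)                               # ToTensor: HWC -> CHW
    return (x - MEAN[:, None, None]) / STD[:, None, None]  # Normalize: per channel


img = np.random.randint(0, 256, size=(224, 224, 3), dtype=np.uint8)  # stand-in for a cropped RGB image
out = to_normalized_chw(img)
print(out.shape)  # (3, 224, 224)
```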

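To simplify training, the training set is reorganized first. The cat and dog images go into separate cats and dogs folders, and part of the images is held out as a test set. This test set is different from the downloaded test1 set, whose images are unlabeled. 90% of the images are used for training and 10% for testing. The code: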

```python
import os
import shutil

import numpy as np

np.random.seed(42)  # fixed seed so the split is reproducible

# Use 90% of the original training set for training and 10% for testing,
# copying cats and dogs into separate subfolders of the new train/test directories.
original_dataset_dir = 'D:\\Code\\Python\\Kaggle-Dogs_vs_Cats_PyTorch-master\\data\\train'  # Kaggle training images
base_dir = 'D:\\Code\\dogvscat\\data2'  # the split dataset is stored here

total_num = len(os.listdir(original_dataset_dir))  # 25000 images, cats and dogs together
half = total_num // 2                                # 12500 images per class
random_idx = np.random.permutation(total_num)        # shuffled image order

train_idx = random_idx[:int(total_num * 0.9)]  # 90% training set
test_idx = random_idx[int(total_num * 0.9):]   # 10% test set

for sub_dir, indices in zip(['train', 'test'], [train_idx, test_idx]):
    sub_path = os.path.join(base_dir, sub_dir)  # ...\data2\train or ...\data2\test
    for i in indices:
        if i < half:  # indices 0..12499 -> cat.0.jpg .. cat.12499.jpg
            animal, fname = 'cats', 'cat.{}.jpg'.format(i)
        else:         # indices 12500..24999 -> dog.0.jpg .. dog.12499.jpg
            animal, fname = 'dogs', 'dog.{}.jpg'.format(i - half)
        animal_dir = os.path.join(sub_path, animal)
        os.makedirs(animal_dir, exist_ok=True)
        shutil.copyfile(os.path.join(original_dataset_dir, fname),  # source
                        os.path.join(animal_dir, fname))            # destination

    # Check how many images ended up in this split
    n_images = sum(len(os.listdir(os.path.join(sub_path, a))) for a in ['cats', 'dogs'])
    print(sub_path + ' total images : %d' % n_images)
```
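The split can also be planned without touching the disk. That makes it easy to check that every generated file name is valid and that no image lands in both subsets. `plan_split` is a hypothetical helper of our own. It assumes Kaggle's naming: `cat.0.jpg` to `cat.12499.jpg` and `dog.0.jpg` to `dog.12499.jpg`.

```python
import numpy as np


def plan_split(per_class=12500, train_frac=0.9, seed=42):
    """Return {'train': [...], 'test': [...]} lists of (class_dir, filename) pairs."""
    total = 2 * per_class
    rng = np.random.RandomState(seed)
    order = rng.permutation(total)  # shuffled indices 0 .. total-1
    cut = int(total * train_frac)
    plan = {}
    for subset, indices in (("train", order[:cut]), ("test", order[cut:])):
        pairs = []
        for i in indices:
            i = int(i)
            if i < per_class:  # first half of the indices -> cats
                pairs.append(("cats", "cat.{}.jpg".format(i)))
            else:              # second half -> dogs, renumbered from 0
                pairs.append(("dogs", "dog.{}.jpg".format(i - per_class)))
        plan[subset] = pairs
    return plan


plan = plan_split()
print(len(plan["train"]), len(plan["test"]))  # 22500 2500
```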

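```python
import torch
import torch.nn as nn
import torch.nn.functional as F


# Build the model
class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(3, 6, 5)    # 3 input channels (RGB), 6 filters, 5x5 kernel
        self.maxpool = nn.MaxPool2d(2, 2)  # 2x2 window, stride 2
        self.conv2 = nn.Conv2d(6, 16, 5)   # 6 -> 16 channels, 5x5 kernel
        self.fc1 = nn.Linear(16 * 53 * 53, 1024)
        self.fc2 = nn.Linear(1024, 512)
        self.fc3 = nn.Linear(512, 2)       # two outputs: cat, dog

    def forward(self, x):
        x = self.maxpool(F.relu(self.conv1(x)))  # 3x224x224 -> 6x220x220 -> 6x110x110
        x = self.maxpool(F.relu(self.conv2(x)))  # -> 16x106x106 -> 16x53x53
        x = x.view(-1, 16 * 53 * 53)             # flatten for the fully connected layers
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = self.fc3(x)                          # raw scores (logits) for the two classes
        return x


class Net2(nn.Module):
    """Same as Net, with Dropout(0.5) after fc1 and fc2 to reduce overfitting."""

    def __init__(self):
        super(Net2, self).__init__()
        self.conv1 = nn.Conv2d(3, 6, 5)
        self.maxpool = nn.MaxPool2d(2, 2)
        self.conv2 = nn.Conv2d(6, 16, 5)
        self.fc1 = nn.Linear(16 * 53 * 53, 1024)
        self.fc2 = nn.Linear(1024, 512)
        self.fc3 = nn.Linear(512, 2)
        # Dropout only takes effect if it is stored on self and called in forward()
        self.dropout = nn.Dropout(0.5)

    def forward(self, x):
        x = self.maxpool(F.relu(self.conv1(x)))
        x = self.maxpool(F.relu(self.conv2(x)))
        x = x.view(-1, 16 * 53 * 53)
        x = self.dropout(F.relu(self.fc1(x)))  # active in net.train(), disabled in net.eval()
        x = self.dropout(F.relu(self.fc2(x)))
        x = self.fc3(x)
        return x
```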

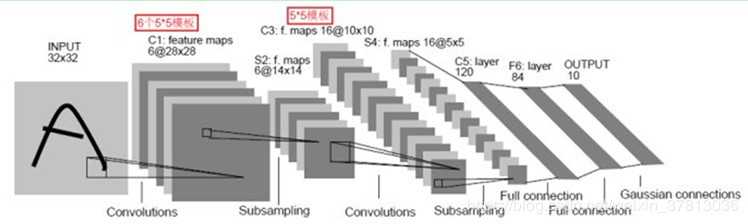

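Let's start with conv1, which defines a convolutional layer. What do its arguments 3, 6 and 5 mean?

- 3 is the number of input channels, that is, the depth of the input pixel array. The cat and dog images here are color images made of R, G and B channels, so the value is 3.
- 6 is the number of output channels. The layer learns 6 different filters. Each filter slides over the image and produces its own feature map, so the layer can capture 6 kinds of cat and dog features. The 6 feature maps are stacked into one 6-channel output, which becomes the input of the next layer.
- 5 is the kernel (filter) size. Each filter holds one 5×5 weight matrix per input channel. These weights are multiplied element-wise with the matching 5×5 patch of the input, and all the products, plus a bias, are summed into a single output value.

With the convolutional layer defined, we next define the pooling layer. The idea is simple. The pixel arrays produced by large images are too big, so we want to reduce their size without losing the object's features, and pooling does exactly that. nn.MaxPool2d(2, 2) starts at the top-left corner and replaces every non-overlapping 2×2 block of four values with their maximum. Each feature map's width and height are therefore halved, and its area drops to a quarter.

On the next line we meet a second convolutional layer, conv2. Like conv1, it takes a multi-channel array as input and outputs a multi-channel array, but it does more computation. Its arguments are 6, 16 and 5:

- The input is 6 because conv1 outputs 6 channels.
- 16 is the number of output channels of conv2, so this layer learns 16 feature maps of cats and dogs. More feature maps give the network more capacity, but they also add parameters. Try other values and see whether the results actually improve.
- conv2 uses the same 5×5 kernel size as conv1.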
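The NumPy sketch below makes the two operations concrete. It shows a single-channel "valid" convolution and a 2×2 max pooling with stride 2. The convolution is computed as cross-correlation (no kernel flip), which matches what nn.Conv2d does. The function names are illustrative only. A real layer also sums over all input channels and adds a bias.

```python
import numpy as np


def conv2d_single(image, kernel):
    """Valid cross-correlation of a 2-D image with a 2-D kernel (stride 1, no padding)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for r in range(out_h):
        for c in range(out_w):
            # multiply the patch element-wise with the kernel and sum to one value
            out[r, c] = np.sum(image[r:r + kh, c:c + kw] * kernel)
    return out


def max_pool_2x2(feature_map):
    """2x2 max pooling with stride 2; an odd trailing row/column is dropped, as in PyTorch's default."""
    h, w = feature_map.shape[0] // 2 * 2, feature_map.shape[1] // 2 * 2
    fm = feature_map[:h, :w]
    return fm.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))


fm = np.array([[1, 2, 5, 6],
               [3, 4, 7, 8],
               [9, 1, 2, 3],
               [0, 1, 4, 0]])
print(max_pool_2x2(fm).tolist())  # [[4, 8], [9, 4]]

image = np.random.rand(224, 224)  # one 224x224 channel
kernel = np.random.rand(5, 5)     # one 5x5 filter
feature = conv2d_single(image, kernel)
print(feature.shape, max_pool_2x2(feature).shape)  # (220, 220) (110, 110)
```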

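The number 53 comes from the output-size formula for convolution and pooling layers, $\left\lfloor \frac{n+2p-f}{s} \right\rfloor + 1$. Here $n$ is the input size, $p$ the padding, $f$ the kernel size and $s$ the stride. Starting from a 224×224 input, the sizes go as follows:

- conv1 ($f=5$, $p=0$, $s=1$) gives 220.
- Pooling ($f=2$, $s=2$) gives 110.
- conv2 gives 106.
- The second pooling gives 53.

The flattened vector therefore has $16 \times 53 \times 53 = 44944$ elements. The three fully connected layers work in a similar way: they map these 44944 features to 1024, then 512, and finally 2 outputs, one score per class (cat or dog). The forward method of the Net class defines the whole forward pass. It takes an input, passes it through the layers defined in __init__, and returns the network's output. F.relu is the ReLU activation function: it sets every negative value to zero and leaves positive values unchanged. The last key step of this image-recognition task is the actual training loop.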
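The size arithmetic above can be checked with a few lines of Python. `out_size` is a hypothetical helper that implements the formula. The layer settings mirror `Net`: no padding, 5×5 convolutions with stride 1, and 2×2 pooling with stride 2.

```python
def out_size(n, f, p=0, s=1):
    """Output width/height of a conv or pooling layer: floor((n + 2p - f) / s) + 1."""
    return (n + 2 * p - f) // s + 1


n = 224
n = out_size(n, f=5)       # conv1 -> 220
n = out_size(n, f=2, s=2)  # pool  -> 110
n = out_size(n, f=5)       # conv2 -> 106
n = out_size(n, f=2, s=2)  # pool  -> 53
flat = 16 * n * n          # 16 channels after conv2

fc1_params = flat * 1024 + 1024  # fc1 weights plus biases
print(n, flat, fc1_params)  # 53 44944 46023680
```

About 46 million weights sit in fc1 alone. That is likely one reason such a small network overfits quickly on roughly 22,500 training images.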

The training code:

```python
def train():
    for epoch in range(epochs):
        net.train()  # training mode: dropout is active
        running_loss = 0.0
        train_correct = 0
        train_total = 0
        for inputs, labels in train_loader:
            if use_gpu:
                inputs, labels = inputs.cuda(), labels.cuda()
            optimizer.zero_grad()
            outputs = net(inputs)
            # torch.max returns the maximum of each row and its index; the index is the predicted class
            _, train_predicted = torch.max(outputs, 1)
            train_correct += (train_predicted == labels).sum().item()
            loss = criterion(outputs, labels)
            loss.backward()
            optimizer.step()
            running_loss += loss.item() * labels.size(0)  # loss.item() is the batch mean
            train_total += labels.size(0)

        print('train %d epoch loss: %.3f acc: %.3f ' % (
            epoch + 1, running_loss / train_total, 100 * train_correct / train_total))

        # Evaluate the model on the held-out test set
        correct = 0
        test_loss = 0.0
        test_total = 0
        net.eval()             # evaluation mode: dropout is disabled
        with torch.no_grad():  # no gradients are needed for testing
            for images, labels in test_loader:
                if use_gpu:
                    images, labels = images.cuda(), labels.cuda()
                outputs = net(images)
                _, predicted = torch.max(outputs, 1)
                loss = criterion(outputs, labels)
                test_loss += loss.item() * labels.size(0)
                test_total += labels.size(0)
                correct += (predicted == labels).sum().item()
        print('test %d epoch loss: %.3f acc: %.3f ' % (
            epoch + 1, test_loss / test_total, 100 * correct / test_total))
```
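`nn.CrossEntropyLoss` takes the raw scores from `fc3` together with integer class labels. It applies log-softmax and averages the negative log-likelihood of the true class over the batch. Accuracy counts how often the index of the largest score equals the label, which is what `torch.max(outputs, 1)` provides. The NumPy sketch below shows both computations for intuition only. The function names are our own.

```python
import numpy as np


def cross_entropy(logits, labels):
    """Mean negative log-softmax probability of the true class (like nn.CrossEntropyLoss)."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # subtract the row max for numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels].mean()


def accuracy(logits, labels):
    """Fraction of rows whose largest score is at the true label."""
    predicted = logits.argmax(axis=1)
    return (predicted == labels).mean()


logits = np.array([[2.0, 0.0],    # predicts class 0 (cat)
                   [0.0, 3.0],    # predicts class 1 (dog)
                   [1.0, 0.5]])   # predicts class 0, but the label is 1
labels = np.array([0, 1, 1])
print(accuracy(logits, labels))   # 2/3, about 0.667
print(cross_entropy(logits, labels))
```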

The complete code:

```python
# coding=utf-8
import os
import shutil

import numpy as np
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
from matplotlib import pyplot as plt
from torchvision import datasets, transforms

# Split the original Kaggle training set: 90% for training, 10% for testing,
# with cats and dogs copied into separate subfolders of the new train/test directories.
original_dataset_dir = 'D:\\Code\\Python\\Kaggle-Dogs_vs_Cats_PyTorch-master\\data\\train'  # Kaggle training images
base_dir = 'D:\\Code\\dogvscat\\data2'  # the split dataset is stored here


def split_dataset(seed=42):
    np.random.seed(seed)  # fixed seed so the split is reproducible
    total_num = len(os.listdir(original_dataset_dir))  # 25000 images, cats and dogs together
    half = total_num // 2                                # 12500 images per class
    random_idx = np.random.permutation(total_num)        # shuffled image order
    train_idx = random_idx[:int(total_num * 0.9)]        # 90% training set
    test_idx = random_idx[int(total_num * 0.9):]         # 10% test set
    for sub_dir, indices in zip(['train', 'test'], [train_idx, test_idx]):
        sub_path = os.path.join(base_dir, sub_dir)
        for i in indices:
            if i < half:  # cat.0.jpg .. cat.12499.jpg
                animal, fname = 'cats', 'cat.{}.jpg'.format(i)
            else:         # dog.0.jpg .. dog.12499.jpg
                animal, fname = 'dogs', 'dog.{}.jpg'.format(i - half)
            animal_dir = os.path.join(sub_path, animal)
            os.makedirs(animal_dir, exist_ok=True)
            shutil.copyfile(os.path.join(original_dataset_dir, fname),
                            os.path.join(animal_dir, fname))
        # Check how many images ended up in this split
        n_images = sum(len(os.listdir(os.path.join(sub_path, a))) for a in ['cats', 'dogs'])
        print(sub_path + ' total images : %d' % n_images)


if not os.path.exists(base_dir):  # split only once
    split_dataset()

# Configuration
random_state = 1
torch.manual_seed(random_state)           # set the random seed so results are repeatable
torch.cuda.manual_seed(random_state)      # seed the current GPU
torch.cuda.manual_seed_all(random_state)  # seed all GPUs
np.random.seed(random_state)

epochs = 10       # number of training epochs
batch_size = 4    # batch size
num_workers = 0   # number of data-loading worker processes
use_gpu = torch.cuda.is_available()
PATH = 'D:\\Code\\dogvscat\\model.pt'

# Resize, center-crop to 224x224x3 and normalize the loaded images
data_transform = transforms.Compose([
    transforms.Resize(256),      # shorter side -> 256 pixels
    transforms.CenterCrop(224),  # center crop
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),  # normalize
])

train_dataset = datasets.ImageFolder(root=os.path.join(base_dir, 'train'), transform=data_transform)
print(train_dataset)
train_loader = torch.utils.data.DataLoader(train_dataset, batch_size=batch_size,
                                           shuffle=True, num_workers=num_workers)
test_dataset = datasets.ImageFolder(root=os.path.join(base_dir, 'test'), transform=data_transform)
test_loader = torch.utils.data.DataLoader(test_dataset, batch_size=batch_size,
                                          shuffle=False, num_workers=num_workers)


# Build the model
class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(3, 6, 5)
        self.maxpool = nn.MaxPool2d(2, 2)
        self.conv2 = nn.Conv2d(6, 16, 5)
        self.fc1 = nn.Linear(16 * 53 * 53, 1024)
        self.fc2 = nn.Linear(1024, 512)
        self.fc3 = nn.Linear(512, 2)

    def forward(self, x):
        x = self.maxpool(F.relu(self.conv1(x)))
        x = self.maxpool(F.relu(self.conv2(x)))
        x = x.view(-1, 16 * 53 * 53)
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        return self.fc3(x)


class Net2(nn.Module):
    def __init__(self):
        super(Net2, self).__init__()
        self.conv1 = nn.Conv2d(3, 6, 5)
        self.maxpool = nn.MaxPool2d(2, 2)
        self.conv2 = nn.Conv2d(6, 16, 5)
        self.fc1 = nn.Linear(16 * 53 * 53, 1024)
        self.fc2 = nn.Linear(1024, 512)
        self.fc3 = nn.Linear(512, 2)
        self.dropout = nn.Dropout(0.5)  # must be called in forward() to take effect

    def forward(self, x):
        x = self.maxpool(F.relu(self.conv1(x)))
        x = self.maxpool(F.relu(self.conv2(x)))
        x = x.view(-1, 16 * 53 * 53)
        x = self.dropout(F.relu(self.fc1(x)))
        x = self.dropout(F.relu(self.fc2(x)))
        return self.fc3(x)


net = Net2()
if os.path.exists(PATH):  # resume from previously saved weights
    net.load_state_dict(torch.load(PATH, map_location='cpu'))
if use_gpu:
    print('gpu is available')
    net = net.cuda()
else:
    print('gpu is unavailable')
print(net)

trainLoss, trainacc, testLoss, testacc = [], [], [], []

# Loss function and optimizer
criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(net.parameters(), lr=0.01, momentum=0.9)


def train():
    for epoch in range(epochs):
        net.train()  # training mode: dropout is active
        running_loss = 0.0
        train_correct = 0
        train_total = 0
        for inputs, labels in train_loader:
            if use_gpu:
                inputs, labels = inputs.cuda(), labels.cuda()
            optimizer.zero_grad()
            outputs = net(inputs)
            _, train_predicted = torch.max(outputs, 1)  # index of the largest score = predicted class
            train_correct += (train_predicted == labels).sum().item()
            loss = criterion(outputs, labels)
            loss.backward()
            optimizer.step()
            running_loss += loss.item() * labels.size(0)  # loss.item() is the batch mean
            train_total += labels.size(0)
        print('train %d epoch loss: %.3f acc: %.3f ' % (
            epoch + 1, running_loss / train_total, 100 * train_correct / train_total))

        # Evaluate the model on the held-out test set
        correct = 0
        test_loss = 0.0
        test_total = 0
        net.eval()             # evaluation mode: dropout is disabled
        with torch.no_grad():  # no gradients are needed for testing
            for images, labels in test_loader:
                if use_gpu:
                    images, labels = images.cuda(), labels.cuda()
                outputs = net(images)
                _, predicted = torch.max(outputs, 1)
                test_loss += criterion(outputs, labels).item() * labels.size(0)
                test_total += labels.size(0)
                correct += (predicted == labels).sum().item()
        print('test %d epoch loss: %.3f acc: %.3f ' % (
            epoch + 1, test_loss / test_total, 100 * correct / test_total))

        trainLoss.append(running_loss / train_total)
        trainacc.append(100 * train_correct / train_total)
        testLoss.append(test_loss / test_total)
        testacc.append(100 * correct / test_total)

    # Plot accuracy (red) and loss (blue) for each epoch
    x = np.arange(1, epochs + 1)
    plt.figure(1)
    plt.title('train')
    plt.plot(x, trainacc, 'r')
    plt.plot(x, trainLoss, 'b')
    plt.show()
    plt.figure(2)
    plt.title('test')
    plt.plot(x, testacc, 'r')
    plt.plot(x, testLoss, 'b')
    plt.show()

    torch.save(net.state_dict(), PATH)  # save the trained weights


train()
```

Here is the output of one run:

```text
D:\anaconda\anaconda\pythonw.exe D:/Code/Python/pytorch入门与实践/第六章_pytorch实战指南/猫和狗二分类.py
D:\Code\dogvscat\data2\train\cats total images : 11253
D:\Code\dogvscat\data2\test\dogs total images : 1253
Net(
  (conv1): Conv2d(3, 6, kernel_size=(5, 5), stride=(1, 1))
  (maxpool): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  (conv2): Conv2d(6, 16, kernel_size=(5, 5), stride=(1, 1))
  (fc1): Linear(in_features=44944, out_features=1024, bias=True)
  (fc2): Linear(in_features=1024, out_features=512, bias=True)
  (fc3): Linear(in_features=512, out_features=2, bias=True)
)
train 1 epoch loss: 0.162 acc: 61.000
test 1 epoch loss: 0.153 acc: 66.000
train 2 epoch loss: 0.148 acc: 68.000
test 2 epoch loss: 0.143 acc: 71.000
train 3 epoch loss: 0.138 acc: 71.000
test 3 epoch loss: 0.138 acc: 72.000
train 4 epoch loss: 0.130 acc: 74.000
test 4 epoch loss: 0.137 acc: 72.000
train 5 epoch loss: 0.119 acc: 77.000
test 5 epoch loss: 0.132 acc: 74.000
train 6 epoch loss: 0.104 acc: 81.000
test 6 epoch loss: 0.129 acc: 75.000
train 7 epoch loss: 0.085 acc: 85.000
test 7 epoch loss: 0.132 acc: 75.000
train 8 epoch loss: 0.060 acc: 90.000
test 8 epoch loss: 0.146 acc: 75.000
train 9 epoch loss: 0.036 acc: 94.000
test 9 epoch loss: 0.200 acc: 74.000
train 10 epoch loss: 0.022 acc: 97.000
test 10 epoch loss: 0.207 acc: 75.000

Process finished with exit code 0
```

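In this run, training accuracy keeps climbing, reaching 97% by epoch 10. Test accuracy, however, levels off at about 75%, and test loss starts rising after epoch 6. The Net class is therefore overfitting. Net2 adds Dropout (p=0.5) after the first two fully connected layers to counter this: during training it randomly zeroes activations, which acts as a regularizer. This program is about the most basic kind of classifier, so its accuracy is not high. I think beginners should first master it and then work on improving the network's accuracy.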
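`nn.Dropout(p)` implements what is usually called inverted dropout. In training mode, each activation is zeroed with probability `p` and the surviving values are scaled by `1/(1-p)`, so the expected value is unchanged. In eval mode, the layer returns its input untouched. This is why the training loop has to switch between `net.train()` and `net.eval()`. The NumPy sketch below shows that behaviour. The function name and its `rng` argument are our own, for illustration.

```python
import numpy as np


def dropout(x, p=0.5, training=True, rng=None):
    """Inverted dropout for 0 <= p < 1: zero each element with probability p, rescale the rest by 1/(1-p)."""
    if not training or p == 0.0:
        return x  # eval mode: identity
    rng = np.random.default_rng() if rng is None else rng
    keep = rng.random(x.shape) >= p  # True with probability 1 - p
    return x * keep / (1.0 - p)


x = np.ones(100_000)
y = dropout(x, p=0.5, rng=np.random.default_rng(0))
print(sorted(set(y.tolist())))           # [0.0, 2.0]
print(y.mean())                          # close to 1.0: the expected value is preserved
print(dropout(x, training=False) is x)   # True
```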
