importing-cleaning-data-in-r-case-studies

Posted by gaowenxingxing




Import the data

sales <- read.csv("sales.csv", stringsAsFactors = FALSE)

View the data structure

> # View dimensions of sales

> dim(sales)

[1] 5000 46

>

> # Inspect first 6 rows of sales

> head(sales)

X event_id primary_act_id secondary_act_id

1 1 abcaf1adb99a935fc661 43f0436b905bfa7c2eec b85143bf51323b72e53c

2 2 6c56d7f08c95f2aa453c 1a3e9aecd0617706a794 f53529c5679ea6ca5a48

3 3 c7ab4524a121f9d687d2 4b677c3f5bec71eec8d1 b85143bf51323b72e53c

4 4 394cb493f893be9b9ed1 b1ccea01ad6ef8522796 b85143bf51323b72e53c

5 5 55b5f67e618557929f48 91c03a34b562436efa3c b85143bf51323b72e53c

6 6 4f10fd8b9f550352bd56 ac4b847b3fde66f2117e 63814f3d63317f1b56c4

purch_party_lkup_id

1 7dfa56dd7d5956b17587

2 4f9e6fc637eaf7b736c2

3 6c2545703bd527a7144d

4 527d6b1eaffc69ddd882

5 8bd62c394a35213bdf52

6 3b3a628f83135acd0676

event_name

1 Xfinity Center Mansfield Premier Parking: Florida Georgia Line

2 Gorge Camping - dave matthews band - sept 3-7

3 Dodge Theatre Adams Street Parking - benise

4 Gexa Energy Pavilion Vip Parking : kid rock with sheryl crow

5 Premier Parking - motley crue

6 Fast Lane Access: Journey

primary_act_name secondary_act_name major_cat_name

1 XFINITY Center Mansfield Premier Parking NULL MISC

2 Gorge Camping Dave Matthews Band MISC

3 Parking Event NULL MISC

4 Gexa Energy Pavilion VIP Parking NULL MISC

5 White River Amphitheatre Premier Parking NULL MISC

6 Fast Lane Access Journey MISC

minor_cat_name la_event_type_cat

1 PARKING PARKING

2 CAMPING INVALID

3 PARKING PARKING

4 PARKING PARKING

5 PARKING PARKING

6 SPECIAL ENTRY (UPSELL) UPSELL

event_disp_name

1 Xfinity Center Mansfield Premier Parking: Florida Georgia Line

2 Gorge Camping - dave matthews band - sept 3-7

3 Dodge Theatre Adams Street Parking - benise

4 Gexa Energy Pavilion Vip Parking : kid rock with sheryl crow

5 Premier Parking - motley crue

6 Fast Lane Access: Journey

ticket_text

1 THIS TICKET IS VALID FOR PARKING ONLY GOOD THIS DAY ONLY PREMIER PARKING PASS XFINITY CENTER,LOTS 4 PM SAT SEP 12 2015 7:30 PM

2 %OVERNIGHT C A M P I N G%* * * * * *%GORGE CAMPGROUND%* GOOD THIS DATE ONLY *%SEP 3 - 6, 2009

3 ADAMS STREET GARAGE%PARKING FOR 4/21/06 ONLY%DODGE THEATRE PARKING PASS%ENTRANCE ON ADAMS STREET%BENISE%GARAGE OPENS AT 6:00PM

4 THIS TICKET IS VALID FOR PARKING ONLY GOOD FOR THIS DATE ONLY VIP PARKING PASS GEXA ENERGY PAVILION FRI SEP 02 2011 7:00 PM

5 THIS TICKET IS VALID%FOR PARKING ONLY%GOOD THIS DATE ONLY%PREMIER PARKING PASS%WHITE RIVER AMPHITHEATRE%SAT JUL 30, 2005 6:00PM

6 FAST LANE JOURNEY FAST LANE EVENT THIS IS NOT A TICKET SAN MANUEL AMPHITHEATER SAT JUL 21 2012 7:00 PM

tickets_purchased_qty trans_face_val_amt delivery_type_cd event_date_time

1 1 45 eTicket 2015-09-12 23:30:00

2 1 75 TicketFast 2009-09-05 01:00:00

3 1 5 TicketFast 2006-04-22 01:30:00

4 1 20 Mail 2011-09-03 00:00:00

5 1 20 Mail 2005-07-31 01:00:00

6 2 10 TicketFast 2012-07-22 02:00:00

event_dt presale_dt onsale_dt sales_ord_create_dttm sales_ord_tran_dt

1 2015-09-12 NULL 2015-05-15 2015-09-11 18:17:45 2015-09-11

2 2009-09-04 NULL 2009-03-13 2009-07-06 00:00:00 2009-07-05

3 2006-04-21 NULL 2006-02-25 2006-04-05 00:00:00 2006-04-05

4 2011-09-02 NULL 2011-04-22 2011-07-01 17:38:50 2011-07-01

5 2005-07-30 2005-03-02 2005-03-04 2005-06-18 00:00:00 2005-06-18

6 2012-07-21 NULL 2012-04-11 2012-07-21 17:20:18 2012-07-21

print_dt timezn_nm venue_city venue_state venue_postal_cd_sgmt_1

1 2015-09-12 EST MANSFIELD MASSACHUSETTS 02048

2 2009-09-01 PST QUINCY WASHINGTON 98848

3 2006-04-05 MST PHOENIX ARIZONA 85003

4 2011-07-06 CST DALLAS TEXAS 75210

5 2005-06-28 PST AUBURN WASHINGTON 98092

6 2012-07-21 PST SAN BERNARDINO CALIFORNIA 92407

sales_platform_cd print_flg la_valid_tkt_event_flg fin_mkt_nm

1 www.concerts.livenation.com T N Boston

2 NULL T N Seattle

3 NULL T N Arizona

4 NULL T N Dallas

5 NULL T N Seattle

6 www.livenation.com T N Los Angeles

web_session_cookie_val gndr_cd age_yr income_amt edu_val edu_1st_indv_val

1 7dfa56dd7d5956b17587 <NA> <NA> <NA> <NA> <NA>

2 4f9e6fc637eaf7b736c2 <NA> <NA> <NA> <NA> <NA>

3 6c2545703bd527a7144d <NA> <NA> <NA> <NA> <NA>

4 527d6b1eaffc69ddd882 <NA> <NA> <NA> <NA> <NA>

5 8bd62c394a35213bdf52 <NA> <NA> <NA> <NA> <NA>

6 3b3a628f83135acd0676 <NA> <NA> <NA> <NA> <NA>

edu_2nd_indv_val adults_in_hh_num married_ind child_present_ind

1 <NA> <NA> <NA> <NA>

2 <NA> <NA> <NA> <NA>

3 <NA> <NA> <NA> <NA>

4 <NA> <NA> <NA> <NA>

5 <NA> <NA> <NA> <NA>

6 <NA> <NA> <NA> <NA>

home_owner_ind occpn_val occpn_1st_val occpn_2nd_val dist_to_ven

1 <NA> <NA> <NA> <NA> NA

2 <NA> <NA> <NA> <NA> 59

3 <NA> <NA> <NA> <NA> NA

4 <NA> <NA> <NA> <NA> NA

5 <NA> <NA> <NA> <NA> NA

6 <NA> <NA> <NA> <NA> NA

>

> # View column names of sales

> names(sales)

[1] "X" "event_id" "primary_act_id"

[4] "secondary_act_id" "purch_party_lkup_id" "event_name"

[7] "primary_act_name" "secondary_act_name" "major_cat_name"

[10] "minor_cat_name" "la_event_type_cat" "event_disp_name"

[13] "ticket_text" "tickets_purchased_qty" "trans_face_val_amt"

[16] "delivery_type_cd" "event_date_time" "event_dt"

[19] "presale_dt" "onsale_dt" "sales_ord_create_dttm"

[22] "sales_ord_tran_dt" "print_dt" "timezn_nm"

[25] "venue_city" "venue_state" "venue_postal_cd_sgmt_1"

[28] "sales_platform_cd" "print_flg" "la_valid_tkt_event_flg"

[31] "fin_mkt_nm" "web_session_cookie_val" "gndr_cd"

[34] "age_yr" "income_amt" "edu_val"

[37] "edu_1st_indv_val" "edu_2nd_indv_val" "adults_in_hh_num"

[40] "married_ind" "child_present_ind" "home_owner_ind"

[43] "occpn_val" "occpn_1st_val" "occpn_2nd_val"

[46] "dist_to_ven"

The following commands are also for inspecting the structure of the data:

# Look at structure of sales

str(sales)

# View a summary of sales

summary(sales)

# Load dplyr

require(dplyr)

# Get a glimpse of sales

glimpse(sales)

Remove specified columns

The two forms below are equivalent:

# Remove the first column of sales: sales2

sales2 <- sales[, 2:ncol(sales)]

sales2 <- sales[, -1]

Next, create a vector called keep that contains the indices of the columns you want to save. Remember: you want to keep everything besides the first 4 and last 15 columns of sales2.
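To see why the two column-removal forms agree and how the keep indices work, here is a small base-R sketch on a made-up data frame rather than sales.csv. It also shows a likely origin of the X column: assuming the file was saved with write.csv() and its default row names, read.csv() reads that unnamed first column back as X. The toy column names and the k/m variables are hypothetical.

```r
# Toy data frame standing in for the real sales data (hypothetical columns)
toy <- data.frame(a = 1:3, b = c("x", "y", "z"), c = 4:6, d = 7:9,
                  e = 10:12, f = 13:15, stringsAsFactors = FALSE)

# write.csv() writes row names by default; read.csv() reads that unnamed
# first column back under the name "X"
path <- tempfile(fileext = ".csv")
write.csv(toy, path)
toy_in <- read.csv(path, stringsAsFactors = FALSE)
names(toy_in)  # "X" "a" "b" "c" "d" "e" "f"

# Two equivalent ways to drop the first column
drop_a <- toy_in[, 2:ncol(toy_in)]
drop_b <- toy_in[, -1]
identical(drop_a, drop_b)  # TRUE

# Keep everything except the first k and last m columns
# (hypothetical k = 1, m = 2 here; the notes use k = 4, m = 15 on sales2)
k <- 1
m <- 2
keep <- (k + 1):(ncol(drop_b) - m)
names(drop_b[, keep])  # "b" "c" "d"
```

With k = 4 and m = 15 the same formula gives 5:(ncol(sales2) - 15), which is the keep vector used in the notes.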

# Define a vector of column indices: keep

keep <- 5:(ncol(sales2) - 15)

# Subset sales2 using keep: sales3

sales3 <- sales2[, keep]

separate(): split one column into several

See the separate() help page (?separate) for details.
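A minimal sketch of separate() on made-up data (the values are hypothetical, not read from sales.csv). It splits a date-time string on the space, as in the sales4 step, and also shows that without an explicit sep, separate() splits on any run of non-alphanumeric characters, so a value shaped like "2007-01" becomes a year and a month. That default is presumably what the mbta6 line relies on.

```r
library(tidyr)

# Hypothetical date-time strings shaped like event_date_time
toy <- data.frame(id = 1:2,
                  event_date_time = c("2015-09-12 23:30:00", "2009-09-05 01:00:00"),
                  stringsAsFactors = FALSE)

# Split on the single space into two new character columns;
# the original column is dropped (remove = TRUE is the default)
toy2 <- separate(toy, event_date_time, c("event_dt", "event_time"), sep = " ")
toy2$event_dt    # "2015-09-12" "2009-09-05"
toy2$event_time  # "23:30:00" "01:00:00"

# Default sep: any run of non-alphanumeric characters, so "-" splits here
ym_toy <- data.frame(month = c("2007-01", "2007-02"), stringsAsFactors = FALSE)
ym <- separate(ym_toy, month, c("year", "month"))
ym
```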

# Load tidyr

require(tidyr)

# Split event_date_time: sales4

sales4 <- separate(sales3, event_date_time,

c("event_dt","event_time"), sep = " ")

# Split sales_ord_create_dttm: sales5

sales5 <- separate(sales4, sales_ord_create_dttm, c("ord_create_dt", "ord_create_time"), sep = " ")

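# Split month column into year and month: mbta6
# (mbta5 is not created anywhere in these notes; this line comes from the MBTA case study further down)

mbta6 <- separate(mbta5, month, c("year", "month"))

Read values at specific positions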

# Define an issues vector

issues<-c(2516, 3863, 4082, 4183)

# Print values of sales_ord_create_dttm at these indices

print(sales3$sales_ord_create_dttm[issues])

# Print a well-behaved value of sales_ord_create_dttm

print(sales3$sales_ord_create_dttm[2517])

Learning the stringr package

str_detect(): check whether strings match a pattern
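A short sketch of how str_detect() picks out date columns: it returns one TRUE/FALSE per string, and that logical vector can index names or columns directly. The column names below are made up, including "width", which is a hypothetical example of a name that contains "dt" by accident; an anchored pattern such as "_dt$" is a stricter alternative in that case.

```r
library(stringr)

# Hypothetical column names, a mix of date and non-date columns
cols <- c("event_dt", "presale_dt", "venue_city", "print_dt", "width")

# Unanchored pattern: TRUE wherever "dt" appears, including inside "width"
loose <- str_detect(cols, "dt")     # TRUE TRUE FALSE TRUE TRUE

# Anchored pattern: only names that end in "_dt"
strict <- str_detect(cols, "_dt$")  # TRUE TRUE FALSE TRUE FALSE

# The logical vector selects the matching names (or data frame columns)
cols[strict]  # "event_dt" "presale_dt" "print_dt"
```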

# Load stringr

require(stringr)

# Find columns of sales5 containing "dt": date_cols

date_cols<-str_detect(names(sales5),"dt")

# Load lubridate

require(lubridate)

# Coerce date columns into Date objects

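sales5[, date_cols] <- lapply(sales5[, date_cols], ymd)

Count the missing values

ymd() returns NA, with a warning, for any string it cannot parse, such as the literal "NULL" entries visible in the head(sales) output. Counting the NAs in each date column therefore shows how many values could not be converted.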
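The lapply()/sapply() pattern in the code below can be tried on a small made-up data frame first. The sketch also shows colSums(is.na(...)), a base-R shortcut that gives the same per-column counts for a data frame. The column names echo the sales data, but the values are hypothetical, and the intermediate list is named missing_flags here to avoid masking base R's missing().

```r
# Hypothetical date columns; NA marks a value that could not be parsed
toy <- data.frame(
  event_dt   = as.Date(c("2015-09-12", NA, "2006-04-21")),
  presale_dt = as.Date(c(NA, NA, "2005-03-02")),
  onsale_dt  = as.Date(c("2015-05-15", "2009-03-13", "2006-02-25"))
)

# Same two steps as the course code: logical NA vectors, then sum each one
missing_flags <- lapply(toy, is.na)
num_missing <- sapply(missing_flags, sum)
num_missing  # event_dt 1, presale_dt 2, onsale_dt 0

# Equivalent one-liner in base R
colSums(is.na(toy))
```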

# Find date columns (don't change)

date_cols <- str_detect(names(sales5), "dt")

# Create logical vectors indicating missing values (don't change)

missing <- lapply(sales5[, date_cols], is.na)

# Create a numerical vector that counts missing values: num_missing

num_missing<-sapply(missing,sum)

# Print num_missing

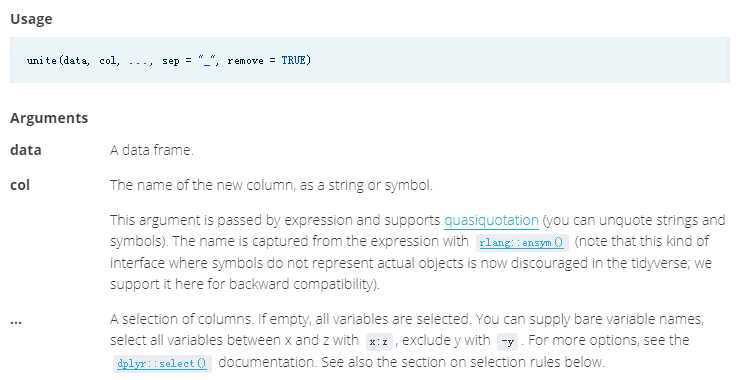

num_missingunite()
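unite() works in the opposite direction to separate(): it pastes several columns into one, joined by sep. A minimal sketch on two venue rows copied from the head(sales) output above, in a made-up data frame:

```r
library(tidyr)

toy <- data.frame(venue_city  = c("MANSFIELD", "QUINCY"),
                  venue_state = c("MASSACHUSETTS", "WASHINGTON"),
                  stringsAsFactors = FALSE)

# New column name first, then the columns to combine;
# the source columns are dropped by default (remove = TRUE)
toy2 <- unite(toy, venue_city_state, venue_city, venue_state, sep = ", ")
toy2$venue_city_state  # "MANSFIELD, MASSACHUSETTS" "QUINCY, WASHINGTON"
```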

# Combine the venue_city and venue_state columns

sales6 <- unite(sales5, venue_city_state, venue_city, venue_state, sep = ", ")

# View the head of sales6

head(sales6)

Read data from Excel, skipping the first row

The key is the skip argument.
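read_excel() needs a real .xlsx file, so this sketch illustrates the same idea with base R's read.csv() instead, whose skip argument likewise drops lines before the header. The title line, column names and numbers are made up.

```r
# A hypothetical CSV whose first line is a title, not the header
path <- tempfile(fileext = ".csv")
writeLines(c("Ridership report",
             "mode,2007-01,2007-02",
             "Bus,335,340"), path)

# skip = 1 discards the title line, so line 2 becomes the header;
# check.names = FALSE keeps names like "2007-01" unmodified
clean <- read.csv(path, skip = 1, check.names = FALSE)
names(clean)  # "mode" "2007-01" "2007-02"
clean
```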

# Load readxl

library(readxl)

# Import mbta.xlsx and skip first row: mbta

mbta <- read_excel("mbta.xlsx", skip = 1)

There is a very simple way to delete rows and columns: index them with negative numbers.
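A quick sketch of negative indexing on a made-up 12-row data frame: minus signs drop the listed positions, and c(-1, -7, -11) selects the same rows as -c(1, 7, 11). The toy has three columns so that dropping one still leaves a data frame; with only two, df[, -1] would collapse to a plain vector unless drop = FALSE is given.

```r
toy <- data.frame(id = 1:12, x = 12:1, y = letters[1:12],
                  stringsAsFactors = FALSE)

# Drop rows 1, 7 and 11; both spellings select the same rows
a <- toy[c(-1, -7, -11), ]
b <- toy[-c(1, 7, 11), ]
identical(a, b)  # TRUE
nrow(a)          # 9

# Drop the first column, keeping all rows
names(toy[, -1])  # "x" "y"
```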

# Remove rows 1, 7, and 11 of mbta: mbta2

mbta2<-mbta[c(-1,-7,-11),]

# Remove the first column of mbta2: mbta3

mbta3 <- mbta2[, -1]

gather(): reshape wide columns into long key-value pairs
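A minimal sketch of gather() on a made-up wide table with one column per month (column names and numbers are hypothetical). The call mirrors the mbta4 step: the key column is named month, the value column thou_riders, and -mode keeps mode as an identifier instead of gathering it.

```r
library(tidyr)

wide <- data.frame(mode = c("Bus", "Boat"),
                   `2007-01` = c(335, 4),
                   `2007-02` = c(340, 5),
                   check.names = FALSE, stringsAsFactors = FALSE)

# Each month column becomes rows: one (mode, month, thou_riders) row per cell
long <- gather(wide, month, thou_riders, -mode)
long
```

In tidyr 1.0.0 and later, gather() is superseded by pivot_longer(), although it still works.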

# Load tidyr

require(tidyr)

# Gather columns of mbta3: mbta4

mbta4<-gather(mbta3,month,thou_riders,-mode)

# View the head of mbta4

head(mbta4)

fread(): fast file import from the data.table package
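fread() comes from the data.table package, so library(data.table) has to be loaded first. It returns a data.table, which also inherits from data.frame; as.data.frame() converts it to a plain data frame if needed. A sketch with a made-up temporary file (the columns and values are hypothetical):

```r
library(data.table)

path <- tempfile(fileext = ".csv")
writeLines(c("product,calories", "apple,52", "bread,265"), path)

food <- fread(path)
class(food)     # "data.table" "data.frame"

food_df <- as.data.frame(food)
class(food_df)  # "data.frame"
```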

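# Load data.table, which provides fread()

library(data.table)

# Import food.csv: food (fread() returns a data.table, which also inherits from data.frame)

food <- fread("food.csv")

Read an .xls file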

# Load the gdata package (its read.xls() relies on an installed Perl interpreter)

library(gdata)

# Import the spreadsheet: att

att <- read.xls("attendance.xls")
