Recognizing the MNIST Dataset with a Keras-Based VGG16 Neural Network Model, with GPU Acceleration

Posted by hujinzhou


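A note up front: I had been using PyTorch all along and found it pleasant and convenient. While reading recent papers, though, I noticed that many experiments are built on Google's Keras, so I took some time to learn it. Having done so, I found that writing neural networks in Keras is even more convenient than in PyTorch: because Keras is so highly encapsulated, building a network takes very little code. Keras offers two ways to build a model, Sequential() and Model(). Sequential suits simple networks with few layers, where you just keep calling model.add; Model() suits more complex architectures. Each has its advantages.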
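To make the difference between the two styles concrete, here is a minimal sketch that builds the same tiny classifier both ways. The function names `build_sequential` and `build_functional` and the 64-unit hidden layer are hypothetical choices for illustration, not part of the original post; the sketch assumes a Keras installation that exposes `keras.layers` and `keras.models`.

```python
from keras.layers import Dense, Flatten, Input
from keras.models import Model, Sequential


def build_sequential(input_shape=(32, 32, 3), num_classes=10):
    """Sequential style: stack layers one after another with model.add()."""
    model = Sequential()
    model.add(Flatten(input_shape=input_shape))
    model.add(Dense(64, activation="relu"))  # hypothetical hidden size
    model.add(Dense(num_classes, activation="softmax"))
    return model


def build_functional(input_shape=(32, 32, 3), num_classes=10):
    """Model style: call each layer on the previous tensor, then wrap inputs/outputs."""
    inputs = Input(shape=input_shape)
    x = Flatten()(inputs)
    x = Dense(64, activation="relu")(x)  # hypothetical hidden size
    outputs = Dense(num_classes, activation="softmax")(x)
    return Model(inputs=inputs, outputs=outputs)


seq_model = build_sequential()
func_model = build_functional()

# Both models contain the same layers, so their parameter counts match.
print(seq_model.count_params(), func_model.count_params())
```

The Model() style costs a few more lines here, but because every intermediate tensor has a name, it can express branches, skip connections, and multiple inputs or outputs, which Sequential cannot.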

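On the importance of a GPU: before I had access to one, I trained on the CPU, and it was painfully slow. A single VGG16 run took 10 hours, which was both tedious and frustrating. Today I ran the same training on the lab's server, and it finished in under half an hour, a speedup of roughly 20x or more.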
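The post does not show how the GPU was enabled. With a GPU-enabled TensorFlow install, Keras uses a visible GPU automatically, so the model code itself does not change. The following is a small check, assuming the TensorFlow backend in version 2.1 or later; that is an assumption, since the original names neither its backend nor its version.

```python
import tensorflow as tf

# List the GPUs TensorFlow can see. An empty list means training will run on the CPU.
# Assumes TensorFlow 2.1+, which the original post does not confirm.
gpus = tf.config.list_physical_devices("GPU")
print("GPUs visible to TensorFlow:", len(gpus))
for gpu in gpus:
    print(" ", gpu.name)
```

If the count is zero on a machine that does have a GPU, the usual suspects are a CPU-only TensorFlow build or a driver/CUDA mismatch.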

The code is given below:

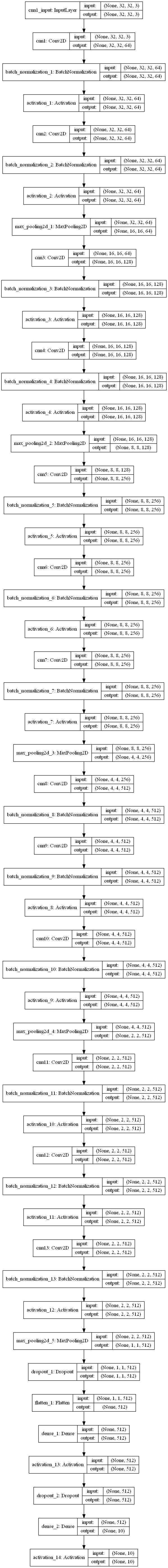

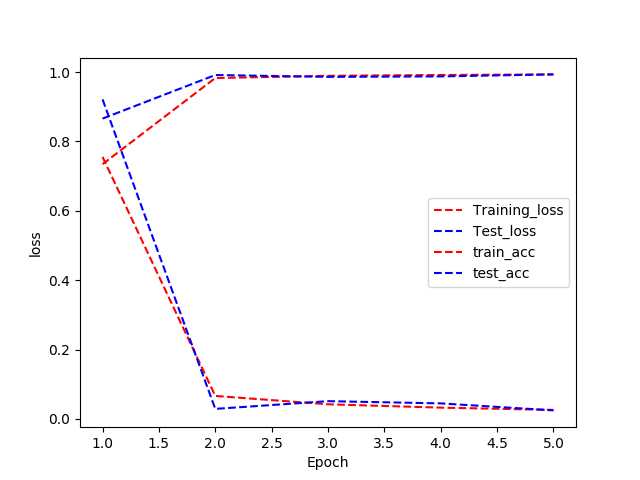

    #!/usr/bin/env python3
    # -*- coding: utf-8 -*-
    # @Time     : 2019/11/9 15:19
    # @Author   : hujinzhou
    # @FileName : My_frist_keras_moudel.py
    # @Software : PyCharm
    # Note: the import paths below match the standalone Keras 2.x releases of 2019.
    from keras.datasets import mnist
    # from call_back import LossHistory  # author's local helper module; unused in this script
    from keras.utils.np_utils import to_categorical
    import numpy as np
    import cv2
    from keras.optimizers import SGD
    from matplotlib import pyplot as plt
    from keras.utils.vis_utils import plot_model
    from keras.layers import Dense, Activation, Flatten, Convolution2D, MaxPool2D, Dropout, BatchNormalization
    from keras.models import Sequential

    np.random.seed(10)

    # Download MNIST. x_train / y_train are the training images and labels;
    # x_test / y_test are the same for the test set.
    (x_train, y_train), (x_test, y_test) = mnist.load_data()
    print(x_train.shape)
    print(len(x_train))
    print(y_train[0])

    # ---------------------------------------------------------------------
    # Resize every image to 32x32 and expand grayscale to 3 channels.
    x_train4D = [cv2.cvtColor(cv2.resize(i, (32, 32)), cv2.COLOR_GRAY2BGR) for i in x_train]
    x_train4D = np.concatenate([arr[np.newaxis] for arr in x_train4D]).astype('float32')

    x_test4D = [cv2.cvtColor(cv2.resize(i, (32, 32)), cv2.COLOR_GRAY2BGR) for i in x_test]
    x_test4D = np.concatenate([arr[np.newaxis] for arr in x_test4D]).astype('float32')
    print(x_test4D.shape)
    print(x_train4D.shape)

    # ---------------------------------------------------------------------
    # Show the first training image. Cast back to uint8 so that imshow does not
    # clip the 0-255 float values to the [0, 1] range it expects for float RGB.
    plt.imshow(x_train4D[0].astype('uint8'))
    plt.show()

    # Normalize pixel values to [0, 1].
    x_test4D_normalize = x_test4D / 255
    x_train4D_normalize = x_train4D / 255

    # One-hot encode the labels.
    y_trainOnehot = to_categorical(y_train)
    y_testOnehot = to_categorical(y_test)

    # ---------------------------------------------------------------------
    # Build the model. The commented-out blocks are the extra convolutions of
    # the full VGG16 layout, which the author disabled.
    model = Sequential()
    model.add(Convolution2D(filters=64,
                            kernel_size=(5, 5),
                            padding='same',
                            input_shape=(32, 32, 3),
                            kernel_initializer='he_normal',
                            name='cnn1'))  # output: 32x32x64
    model.add(BatchNormalization(axis=-1))
    model.add(Activation('relu'))
    # model.add(Convolution2D(filters=64,
    #                         kernel_size=(5, 5),
    #                         padding='same',
    #                         kernel_initializer='he_normal',
    #                         name='cnn2'))  # output: 32x32x64
    # model.add(BatchNormalization(axis=-1))
    # model.add(Activation('relu'))
    model.add(MaxPool2D(pool_size=(2, 2), strides=(2, 2)))  # output: 16x16x64

    model.add(Convolution2D(filters=128,
                            kernel_size=(5, 5),
                            padding='same',
                            kernel_initializer='he_normal',
                            name='cnn3'))  # output: 16x16x128
    model.add(BatchNormalization(axis=-1))
    model.add(Activation('relu'))
    # model.add(Convolution2D(filters=128,
    #                         kernel_size=(5, 5),
    #                         padding='same',
    #                         kernel_initializer='he_normal',
    #                         name='cnn4'))  # output: 16x16x128
    # model.add(BatchNormalization(axis=-1))
    # model.add(Activation('relu'))
    model.add(MaxPool2D(pool_size=(2, 2), strides=(2, 2)))  # output: 8x8x128

    model.add(Convolution2D(filters=256,
                            kernel_size=(5, 5),
                            padding='same',
                            kernel_initializer='he_normal',
                            name='cnn5'))  # output: 8x8x256
    model.add(BatchNormalization(axis=-1))
    model.add(Activation('relu'))
    # model.add(Convolution2D(filters=256,
    #                         kernel_size=(5, 5),
    #                         padding='same',
    #                         kernel_initializer='he_normal',
    #                         name='cnn6'))  # output: 8x8x256
    # model.add(BatchNormalization(axis=-1))
    # model.add(Activation('relu'))
    # model.add(Convolution2D(filters=256,
    #                         kernel_size=(5, 5),
    #                         padding='same',
    #                         kernel_initializer='he_normal',
    #                         name='cnn7'))  # output: 8x8x256
    # model.add(BatchNormalization(axis=-1))
    # model.add(Activation('relu'))
    model.add(MaxPool2D(pool_size=(2, 2), strides=(2, 2)))  # output: 4x4x256

    model.add(Convolution2D(filters=512,
                            kernel_size=(5, 5),
                            padding='same',
                            kernel_initializer='he_normal',
                            name='cnn8'))  # output: 4x4x512
    model.add(BatchNormalization(axis=-1))
    model.add(Activation('relu'))
    # model.add(Convolution2D(filters=512,
    #                         kernel_size=(5, 5),
    #                         padding='same',
    #                         kernel_initializer='he_normal',
    #                         name='cnn9'))  # output: 4x4x512
    # model.add(BatchNormalization(axis=-1))
    # model.add(Activation('relu'))
    # model.add(Convolution2D(filters=512,
    #                         kernel_size=(5, 5),
    #                         padding='same',
    #                         kernel_initializer='he_normal',
    #                         name='cnn10'))  # output: 4x4x512
    # model.add(BatchNormalization(axis=-1))
    # model.add(Activation('relu'))
    model.add(MaxPool2D(pool_size=(2, 2), strides=(2, 2)))  # output: 2x2x512

    model.add(Convolution2D(filters=512,
                            kernel_size=(5, 5),
                            padding='same',
                            kernel_initializer='he_normal',
                            name='cnn11'))  # output: 2x2x512
    model.add(BatchNormalization(axis=-1))
    model.add(Activation('relu'))
    # model.add(Convolution2D(filters=512,
    #                         kernel_size=(5, 5),
    #                         padding='same',
    #                         kernel_initializer='he_normal',
    #                         name='cnn12'))  # output: 2x2x512
    # model.add(BatchNormalization(axis=-1))
    # model.add(Activation('relu'))
    # model.add(Convolution2D(filters=512,
    #                         kernel_size=(5, 5),
    #                         padding='same',
    #                         kernel_initializer='he_normal',
    #                         name='cnn13'))  # output: 2x2x512
    # model.add(BatchNormalization(axis=-1))
    # model.add(Activation('relu'))
    model.add(MaxPool2D(pool_size=(2, 2), strides=(2, 2)))  # output: 1x1x512

    # Classifier head.
    model.add(Dropout(0.5))
    model.add(Flatten())
    model.add(Dense(512))
    model.add(Activation('relu'))
    model.add(Dropout(0.5))
    model.add(Dense(10))
    model.add(Activation('softmax'))
    model.summary()
    plot_model(model, to_file='model4.png', show_shapes=True, show_layer_names=True)
    # for layer in model.layers:
    #     layer.trainable = False

    # ---------------------------------------------------------------------
    # Train the model. The optimizer is plain SGD (the original variable was
    # misleadingly named "adam").
    sgd = SGD(lr=0.1)
    model.compile(optimizer=sgd, loss='categorical_crossentropy', metrics=['accuracy'])
    epoch = 5
    batchsize = 100
    # from keras.models import load_model
    # model = load_model('./My_keras_model_weight')
    history = model.fit(x=x_train4D_normalize,
                        y=y_trainOnehot,
                        epochs=epoch,
                        batch_size=batchsize,
                        validation_data=(x_test4D_normalize, y_testOnehot))

    # Save the model.
    model.save('./My_keras_model2_weight')

    # Plot the loss and accuracy curves.
    training_loss = history.history["loss"]
    test_loss = history.history["val_loss"]
    # Keras before 2.3 records accuracy under "acc"; later versions use "accuracy".
    acc_key = "acc" if "acc" in history.history else "accuracy"
    train_acc = history.history[acc_key]
    test_acc = history.history["val_" + acc_key]

    epoch_count = range(1, len(training_loss) + 1)
    plt.plot(epoch_count, training_loss, 'r--')
    plt.plot(epoch_count, test_loss, 'b--')
    plt.plot(epoch_count, train_acc, 'r-')  # solid lines distinguish accuracy from loss
    plt.plot(epoch_count, test_acc, 'b-')
    plt.legend(["Training_loss", "Test_loss", "train_acc", "test_acc"])
    plt.xlabel("Epoch")
    plt.ylabel("Loss / accuracy")
    plt.show()
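The output-size comments in the listing above can be checked with a few lines of plain Python. This is a sketch under two assumptions that match the listing: every convolution uses 'same' padding with stride 1, so it keeps height and width, and every 2x2 max pool with stride 2 halves them. The helper name `trace_shapes` is hypothetical.

```python
def trace_shapes(input_shape=(32, 32, 3), filters_per_stage=(64, 128, 256, 512, 512)):
    """Return the (height, width, channels) after each conv + max-pool stage."""
    h, w, _ = input_shape
    shapes = []
    for filters in filters_per_stage:
        # 'same' convolution, stride 1: height and width unchanged, channels = filters.
        c = filters
        # 2x2 max pool, stride 2, no padding: height and width are halved (floor).
        h, w = h // 2, w // 2
        shapes.append((h, w, c))
    return shapes


shapes = trace_shapes()
print(shapes)
# -> [(16, 16, 64), (8, 8, 128), (4, 4, 256), (2, 2, 512), (1, 1, 512)]

# With MNIST's native 28x28 size the fifth pool would be left with nothing.
native = trace_shapes(input_shape=(28, 28, 3))
print(native)
# -> [(14, 14, 64), (7, 7, 128), (3, 3, 256), (1, 1, 512), (0, 0, 512)]
```

A 32x32 input survives all five pooling stages and reaches 1x1x512, whereas a 28x28 input would collapse to zero size at the last pool. That is presumably why the images are resized to 32x32 before training, although the post does not say so explicitly.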

The results are as follows.

The final accuracy reaches about 0.993:

loss: 0.0261 - acc: 0.9932 - val_loss: 0.0246 - val_acc: 0.9933
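For a sense of scale, the log line can be turned into concrete counts. The sketch below uses the batch size and epoch count from the training code, plus the standard MNIST split sizes (60,000 training and 10,000 test images), which are known facts about the dataset rather than figures quoted in the post. Because the code passes the test set as `validation_data`, `val_acc` is the test-set accuracy.

```python
import math

TRAIN_IMAGES = 60_000  # standard MNIST training split (not stated in the post)
TEST_IMAGES = 10_000   # standard MNIST test split (not stated in the post)
BATCH_SIZE = 100       # batchsize in the training code
EPOCHS = 5             # epoch in the training code
VAL_ACC = 0.9933       # val_acc from the final log line

steps_per_epoch = math.ceil(TRAIN_IMAGES / BATCH_SIZE)
total_updates = steps_per_epoch * EPOCHS
misclassified = round((1 - VAL_ACC) * TEST_IMAGES)

print("weight updates per epoch:", steps_per_epoch)   # 600
print("weight updates in total:", total_updates)      # 3000
print("misclassified test images:", misclassified)    # 67
```

So the reported accuracy corresponds to about 67 wrong predictions out of 10,000 test images after 3,000 SGD updates.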
