Cleaning data with MapReduce

Posted by quyangzhangsiyuan



Continuing from the previous post.

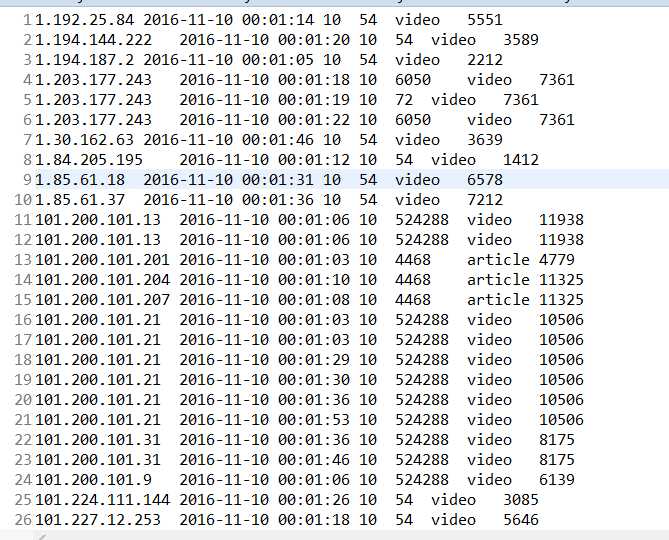

MapReduce data cleaning

    package mapreduce;

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
    import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

    public class CleanData {

        public static class Map extends Mapper<Object, Text, Text, IntWritable> {
            private static Text newKey = new Text();

            // Rewrites a timestamp such as day/Mon/year:HH:mm:ss into "year-MM-day HH:mm:ss".
            private static String chage(String data) {
                char[] str = data.toCharArray();
                String[] time = new String[7];
                int j = 0;
                int k = 0;
                // Split on '/', ':' and space (character code 32).
                for (int i = 0; i < str.length; i++) {
                    if (str[i] == '/' || str[i] == ':' || str[i] == ' ') {
                        time[k] = data.substring(j, i);
                        j = i + 1;
                        k++;
                    }
                }
                time[k] = data.substring(j, data.length());
                // Map the English month abbreviation to a two-digit number.
                switch (time[1]) {
                    case "Jan": time[1] = "01"; break;
                    case "Feb": time[1] = "02"; break;
                    case "Mar": time[1] = "03"; break;
                    case "Apr": time[1] = "04"; break;
                    case "May": time[1] = "05"; break;
                    case "Jun": time[1] = "06"; break;
                    case "Jul": time[1] = "07"; break;
                    case "Aug": time[1] = "08"; break;
                    case "Sep": time[1] = "09"; break;
                    case "Oct": time[1] = "10"; break;
                    case "Nov": time[1] = "11"; break;
                    case "Dec": time[1] = "12"; break;
                }
                data = time[2] + "-" + time[1] + "-" + time[0] + " "
                        + time[3] + ":" + time[4] + ":" + time[5];
                return data;
            }

            public void map(Object key, Text value, Context context)
                    throws IOException, InterruptedException {
                String line = value.toString();
                System.out.println(line);
                // Each input line holds six comma-separated fields.
                String[] arr = line.split(",");
                String ip = arr[0];
                String date = arr[1];
                String day = arr[2];
                String traffic = arr[3];
                String type = arr[4];
                String id = arr[5];
                date = chage(date);
                // Drop the last character of the traffic field.
                traffic = traffic.substring(0, traffic.length() - 1);
                newKey.set(ip + ' ' + date + ' ' + day + ' ' + traffic + ' ' + type);
                // newKey.set(ip + ',' + date + ',' + day + ',' + traffic + ',' + type);
                int click = Integer.parseInt(id);
                context.write(newKey, new IntWritable(click));
            }
        }

        public static class Reduce extends Reducer<Text, IntWritable, Text, IntWritable> {
            // Writes every (key, value) pair through unchanged.
            public void reduce(Text key, Iterable<IntWritable> values, Context context)
                    throws IOException, InterruptedException {
                for (IntWritable val : values) {
                    context.write(key, val);
                }
            }
        }

        public static void main(String[] args)
                throws IOException, ClassNotFoundException, InterruptedException {
            Configuration conf = new Configuration();
            System.out.println("start");
            // Job.getInstance replaces the deprecated new Job(conf, name) constructor.
            Job job = Job.getInstance(conf, "cleanData");
            job.setJarByClass(CleanData.class);
            job.setMapperClass(Map.class);
            job.setReducerClass(Reduce.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);
            job.setInputFormatClass(TextInputFormat.class);
            job.setOutputFormatClass(TextOutputFormat.class);
            Path in = new Path("hdfs://192.168.137.67:9000/mymapreducel/in/result.txt");
            Path out = new Path("hdfs://192.168.137.67:9000/mymapreducelShiYan/out1");
            FileInputFormat.addInputPath(job, in);
            FileOutputFormat.setOutputPath(job, out);
            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }
    }
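The hand-written timestamp conversion in the mapper could probably also be written with `java.time`. The sketch below is not the code used in the job. It assumes the date field looks like `10/Nov/2016:00:01:02 +0800` (a made-up sample, since the post does not show the input data), and the class name `LogDateDemo` and method `convert` are hypothetical.

```java
import java.time.OffsetDateTime;
import java.time.format.DateTimeFormatter;
import java.util.Locale;

// Hypothetical stand-alone demo; not part of the CleanData job.
class LogDateDemo {
    // Assumed input layout: day/Mon/year:hour:minute:second followed by a zone offset.
    private static final DateTimeFormatter IN =
            DateTimeFormatter.ofPattern("dd/MMM/yyyy:HH:mm:ss Z", Locale.ENGLISH);
    // Target layout, matching what the mapper emits.
    private static final DateTimeFormatter OUT =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

    // Parses the raw timestamp and formats its local date and time fields;
    // the zone offset is parsed but not written out, as in the mapper.
    static String convert(String raw) {
        return OffsetDateTime.parse(raw.trim(), IN).format(OUT);
    }

    public static void main(String[] args) {
        System.out.println(convert("10/Nov/2016:00:01:02 +0800")); // prints 2016-11-10 00:01:02
    }
}
```

Unlike the manual split, this version throws a `DateTimeParseException` on a malformed timestamp instead of silently producing a wrong string, so a real mapper would likely want to catch that and skip the record.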

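Today I ran into the following error:

java.lang.ClassCastException: class org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$GetFileInfoRequestProto cannot be cast to class com.google.protobuf.Message (org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$GetFileI....

I spent several hours on it without finding the cause. In the end, with no other options left, I removed all of the imported libraries from the project and imported them again, which resolved the problem.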
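A `ClassCastException` of this shape is often a sign that classes were loaded from mismatched JARs, though the post does not confirm that this was the cause here. One way to check, sketched below, is to print where a class was loaded from. The class name `WhichJar` and method `locate` are hypothetical helpers, and the two class names in `main` are simply the ones taken from the error message.

```java
import java.security.CodeSource;

// Hypothetical diagnostic helper; not part of the CleanData job.
class WhichJar {
    // Returns the location (usually a JAR path) a class was loaded from.
    static String locate(String className) {
        try {
            // Load without running static initializers.
            Class<?> c = Class.forName(className, false, WhichJar.class.getClassLoader());
            CodeSource src = c.getProtectionDomain().getCodeSource();
            // Classes from the JDK itself have no code source.
            return src == null ? "(JDK/bootstrap class)" : src.getLocation().toString();
        } catch (ClassNotFoundException | LinkageError e) {
            return "(not on classpath)";
        }
    }

    public static void main(String[] args) {
        String[] names = {
            "com.google.protobuf.Message",
            "org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos"
        };
        for (String name : names) {
            System.out.println(name + " -> " + locate(name));
        }
    }
}
```

Run with the same classpath as the job, this shows which JAR supplies each class. If the locations point at JARs from different Hadoop or protobuf versions, that would be consistent with removing and re-importing the libraries having fixed the error.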
