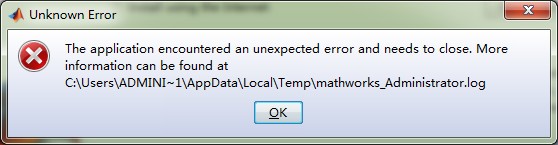

Ran into this problem while installing MATLAB and found no solution online

The application encountered an unexpected error and needs to close. More information can be found at C:\Users\ADMINI~1\AppData\Local\Temp\mathworks_Administrator.log

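I solved it myself: just rename the file "licence.dat" in the directory that contains the installer's setup.exe to "licence.txt" (that is, change its extension). I tried this because of a hint in the log file named in the error message.

I'm not sure about this one.

Follow-up answer: try installing a different version of the software.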

Implementing a Neural Network in Matlab/Octave

【Title】: Implementing a Neural Network in Matlab/Octave

【Posted】: 2016-05-07 18:29:31

【Question】: I am trying to solve the problem shown at http://postimg.org/image/4bmfha8m7/

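I am having trouble implementing the weight matrix for the 36 inputs.

I have a hidden layer of 3 neurons.

I use the backpropagation algorithm for learning.

What I have tried so far is: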

% Sigmoid Function Definition

function [result] = sigmoid(x)

result = 1.0 ./ (1.0 + exp(-x));

end

% Inputs

input = [1 1 0 1 1 1 0 1 0 1 0 1 1 1 0 0 0 0 0 0 0 1 1 1 0 0 1 1 1 0 0 1 1 1 0;

0 0 0 0 1 0 1 1 1 0 0 1 1 1 0 0 0 0 0 1 0 1 1 1 0 0 1 1 1 0 0 0 0 0 1;

0 0 0 0 0 0 1 1 1 1 0 1 1 1 1 0 1 1 1 1 0 1 1 1 1 0 1 1 1 1 0 0 0 0 0;

0 0 0 0 1 0 1 1 1 0 0 1 1 1 0 0 1 1 1 0 0 1 1 1 0 0 1 1 1 0 0 0 0 0 1];

% Desired outputs

output = [1;1;1;1];

% Initializing the bias (Bias or threshold are the same thing, essential for learning, to translate the curve)

% Also, the first column of the weight matrix is the weight of the bias values

bias = [-1 -1 -1 -1];

% Learning coefficient

coeff = 1.0;

% Number of learning iterations

iterations = 100;

disp('No. Of Learning Iterations = ');

disp(iterations);

% Initial weights

weights = ones(36,36);

% Main Algorithm Begins

for i = 1:iterations

out = zeros(4,1);

numIn = length (input(:,1));

for j = 1:numIn

% 1st neuron in the hidden layer

H1 = bias(1,1)*weights(1,1) + input(j,1)*weights(1,2) + input(j,2)*weights(1,3) + input(j,3)*weights(1,4)+ input(j,4)*weights(1,5) + input(j,5)*weights(1,6) + input(j,6)*weights(1,7) ...
+ input(j,7)*weights(1,8) + input(j,8)*weights(1,9) + input(j,9)*weights(1,10)+ input(j,10)*weights(1,11) + input(j,11)*weights(1,12) + input(j,12)*weights(1,13) ...
+ input(j,13)*weights(1,14) + input(j,14)*weights(1,15) + input(j,15)*weights(1,16)+ input(j,16)*weights(1,17) + input(j,17)*weights(1,18) + input(j,18)*weights(1,19) ...
+ input(j,19)*weights(1,20) + input(j,20)*weights(1,21) + input(j,21)*weights(1,22)+ input(j,22)*weights(1,23) + input(j,23)*weights(1,24) + input(j,24)*weights(1,25) ...
+ input(j,25)*weights(1,26) + input(j,26)*weights(1,27) + input(j,27)*weights(1,28)+ input(j,28)*weights(1,29) + input(j,29)*weights(1,30) + input(j,30)*weights(1,31) ...
+ input(j,31)*weights(1,32) + input(j,32)*weights(1,33) + input(j,33)*weights(1,34)+ input(j,34)*weights(1,35) + input(j,35)*weights(1,36);

x2(1) = sigmoid(H1);

% 2nd neuron in the hidden layer

H2 = bias(1,2)*weights(2,1) + input(j,1)*weights(2,2) + input(j,2)*weights(2,3) + input(j,3)*weights(2,4)+ input(j,4)*weights(2,5) + input(j,5)*weights(2,6) + input(j,6)*weights(2,7) ...
+ input(j,7)*weights(2,8) + input(j,8)*weights(2,9) + input(j,9)*weights(2,10)+ input(j,10)*weights(2,11) + input(j,11)*weights(2,12) + input(j,12)*weights(2,13) ...
+ input(j,13)*weights(2,14) + input(j,14)*weights(2,15) + input(j,15)*weights(2,16)+ input(j,16)*weights(2,17) + input(j,17)*weights(2,18) + input(j,18)*weights(2,19) ...
+ input(j,19)*weights(2,20) + input(j,20)*weights(2,21) + input(j,21)*weights(2,22)+ input(j,22)*weights(2,23) + input(j,23)*weights(2,24) + input(j,24)*weights(2,25) ...
+ input(j,25)*weights(2,26) + input(j,26)*weights(2,27) + input(j,27)*weights(2,28)+ input(j,28)*weights(2,29) + input(j,29)*weights(2,30) + input(j,30)*weights(2,31) ...
+ input(j,31)*weights(2,32) + input(j,32)*weights(2,33) + input(j,33)*weights(2,34)+ input(j,34)*weights(2,35) + input(j,35)*weights(2,36);

x2(2) = sigmoid(H2);

% 3rd neuron in the hidden layer

H3 = bias(1,3)*weights(3,1) + input(j,1)*weights(3,2) + input(j,2)*weights(3,3) + input(j,3)*weights(3,4)+ input(j,4)*weights(3,5) + input(j,5)*weights(3,6) + input(j,6)*weights(3,7) ...
+ input(j,7)*weights(3,8) + input(j,8)*weights(3,9) + input(j,9)*weights(3,10)+ input(j,10)*weights(3,11) + input(j,11)*weights(3,12) + input(j,12)*weights(3,13) ...
+ input(j,13)*weights(3,14) + input(j,14)*weights(3,15) + input(j,15)*weights(3,16)+ input(j,16)*weights(3,17) + input(j,17)*weights(3,18) + input(j,18)*weights(3,19) ...
+ input(j,19)*weights(3,20) + input(j,20)*weights(3,21) + input(j,21)*weights(3,22)+ input(j,22)*weights(3,23) + input(j,23)*weights(3,24) + input(j,24)*weights(3,25) ...
+ input(j,25)*weights(3,26) + input(j,26)*weights(3,27) + input(j,27)*weights(3,28)+ input(j,28)*weights(3,29) + input(j,29)*weights(3,30) + input(j,30)*weights(3,31) ...
+ input(j,31)*weights(3,32) + input(j,32)*weights(3,33) + input(j,33)*weights(3,34)+ input(j,34)*weights(3,35) + input(j,35)*weights(3,36);

x2(3) = sigmoid(H3);

% Output layer

x3_1 = bias(1,4)*weights(4,1) + x2(1)*weights(4,2) + x2(2)*weights(4,3) + x2(3)*weights(4,4);

out(j) = sigmoid(x3_1);

% Adjust delta values of weights

% For output layer: delta(wi) = xi*delta,

% delta = (actual output)*(1-actual output)*(desired output - actual output)

delta3_1 = out(j)*(1-out(j))*(output(j)-out(j));

% Propagate the delta backwards into hidden layers

delta2_1 = x2(1)*(1-x2(1))*weights(3,2)*delta3_1;

delta2_2 = x2(2)*(1-x2(2))*weights(3,3)*delta3_1;

delta2_3 = x2(3)*(1-x2(3))*weights(3,4)*delta3_1;

% Add weight changes to original weights and then use the new weights.

% delta weight = coeff*x*delta

for k = 1:4

if k == 1 % Bias cases

weights(1,k) = weights(1,k) + coeff*bias(1,1)*delta2_1;

weights(2,k) = weights(2,k) + coeff*bias(1,2)*delta2_2;

weights(3,k) = weights(3,k) + coeff*bias(1,3)*delta2_3;

weights(4,k) = weights(4,k) + coeff*bias(1,4)*delta3_1;

else % When k=2 or 3 input cases to neurons

weights(1,k) = weights(1,k) + coeff*input(j,1)*delta2_1;

weights(2,k) = weights(2,k) + coeff*input(j,2)*delta2_2;

weights(3,k) = weights(3,k) + coeff*input(j,3)*delta2_3;

weights(4,k) = weights(4,k) + coeff*x2(k-1)*delta3_1;

end

end

end

end

disp('For the Input');

disp(input);

disp('Output Is');

disp(out);

disp('Test Case: For the Input');

input = [1 1 0 1 1 1 0 1 0 1 0 1 1 1 0 0 0 0 0 0 0 1 1 1 0 0 1 1 1 0 0 1 1 1 0];

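【Comments on the question】:

What is wrong with your code?

@Daniel It is not correct; I cannot get the backpropagation right.

【Answer 1】: To me, the problem is the labels; I cannot see where you have the outputs.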

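Output (1,1,1,1)? What do you mean by that? Maybe I am missing something, but to me there are two ways to label a multi-class classification problem: either use the class labels directly (0 for A, 1 for B, 3 for C ...) and expand them afterwards, or write them already expanded, with A = 1,0,0,0, which for four classes gives [1,0,0,0;0,1,0,0;0,0,1,0;0,0,0,1]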
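As a minimal sketch of the "label first, expand afterwards" option, the snippet below turns integer class labels into one-hot target rows. The names `labels`, `numClasses`, `identity` and `targets` are hypothetical, and the labels are 1-based here (1 for A, 2 for B, ...) only because Matlab/Octave indices start at 1.

```matlab
% Hypothetical integer class labels for four samples (1 = A, 2 = B, 3 = C, 4 = D).
labels = [2; 1; 4; 3];
numClasses = 4;

% Row k of the identity matrix is the one-hot code for class k,
% so indexing its rows by the label vector expands all labels at once.
identity = eye(numClasses);
targets = identity(labels, :);

disp(targets);
```

Each row of `targets` then holds a single 1 in the column of its class, which would call for one output neuron per class rather than the single output used in the question.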

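The way you write out the operations is very error-prone; take a look at Matlab/Octave matrix operations, which are very powerful and can simplify the code a lot.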
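To illustrate the matrix-operation suggestion, here is a rough sketch of the same layout (35 inputs plus a bias, 3 hidden neurons, 1 output) with one weight matrix per layer. It is not the asker's code: the names `X`, `T`, `W1`, `W2`, the placeholder patterns, the targets and the deterministic initial weights are all assumptions made for the example. Two things in the posted code look worth checking against it: the hidden deltas there read `weights(3,...)` although the output layer uses row 4, and the update loop only touches the first four columns of each row.

```matlab
% Sketch only: layer sizes follow the question; data and initial weights are made up.
sigmoid = @(z) 1.0 ./ (1.0 + exp(-z));

X = double(mod((1:4)' * (1:35), 5) < 2);  % 4 placeholder patterns, one per row (4x35)
T = [1; 0; 1; 0];                         % hypothetical desired outputs

numIn = size(X, 2);
numHidden = 3;
% Column 1 of each weight matrix multiplies the bias input (-1, as in the question).
% Distinct small values are used so the hidden neurons do not start out identical.
W1 = 0.1 * sin(reshape(1:numHidden*(numIn+1), numHidden, numIn+1));  % 3x36
W2 = 0.1 * cos(1:numHidden+1);                                       % 1x4

coeff = 1.0;
iterations = 100;
for it = 1:iterations
  for j = 1:size(X, 1)
    % Forward pass: one matrix product per layer.
    a1 = [-1; X(j, :)'];            % 36x1 input with bias
    a2 = [-1; sigmoid(W1 * a1)];    % 4x1 hidden activations with bias
    out = sigmoid(W2 * a2);         % scalar network output

    % Backward pass: the output delta, then the hidden deltas computed
    % from the output-layer weights (bias weight excluded).
    delta3 = out * (1 - out) * (T(j) - out);
    delta2 = a2(2:end) .* (1 - a2(2:end)) .* (W2(2:end)' * delta3);  % 3x1

    % Weight updates: delta weight = coeff * delta * input, for every weight.
    W2 = W2 + coeff * delta3 * a2';
    W1 = W1 + coeff * delta2 * a1';
  end
end

% Outputs for all four patterns at once.
pred = sigmoid(W2 * [-ones(1, 4); sigmoid(W1 * [-ones(1, 4); X'])]);
disp(pred);
```

Keeping the bias as an extra input of -1 means a single outer product per layer updates the bias weight and all input weights together, so no per-column special case is needed.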
