Face Recognition (1): Preprocessing for Face Recognition with OpenCV

Posted xiaobai_xiaokele


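Face recognition is extremely sensitive to changes in lighting, face orientation, facial expression and so on, so these differences need to be reduced as much as possible. Otherwise the recognition algorithm will often find more similarity between the faces of two different people under the same conditions than between two images of the same person.

The simplest form of face preprocessing is histogram equalization with equalizeHist(), as already used in the face detection step. That is enough when lighting and position barely change, but for reliability under real-world conditions we need more sophisticated techniques, including facial feature detection (eyes, nose, mouth, eyebrows and so on). To keep things simple we use eye detection only and ignore other features such as the mouth and nose, which are less useful than the eyes.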

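Eye detection

Eye detection is very useful for face preprocessing. For a frontal face we can always assume that the eyes are horizontal and on opposite sides of the face, and that they have a fairly standard position and size within the face regardless of facial expression, lighting, camera properties, distance to the camera and so on. It is also useful for discarding false positives, where the face detector reports a face that is actually something else. The face detector and both eye detectors are rarely fooled at the same time, so if you only process images in which a face and two eyes were detected, you will get few false positives (but also fewer faces to process, because the eye detectors are less reliable than the face detector).

Some of the pretrained eye detectors shipped with OpenCV 2.4 can detect eyes whether they are open or closed, while others detect open eyes only.

Detectors that find open or closed eyes: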

• haarcascade_mcs_lefteye.xml(and haarcascade_mcs_righteye.xml)

• haarcascade_lefteye_2splits.xml(and haarcascade_righteye_2splits.xml)

Detectors that find open eyes only:

• haarcascade_eye.xml

• haarcascade_eye_tree_eyeglasses.xml

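Note: the open-or-closed eye detectors are each trained for one specific eye, so you need different detectors for the left and right eyes, whereas the open-only detectors can use the same detector for either eye. haarcascade_eye_tree_eyeglasses.xml can detect the eyes of a person wearing glasses, but it is less reliable when the person is not wearing glasses.

An XML file with "left eye" in its name refers to the person's actual left eye, which appears on the right side of the camera image.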

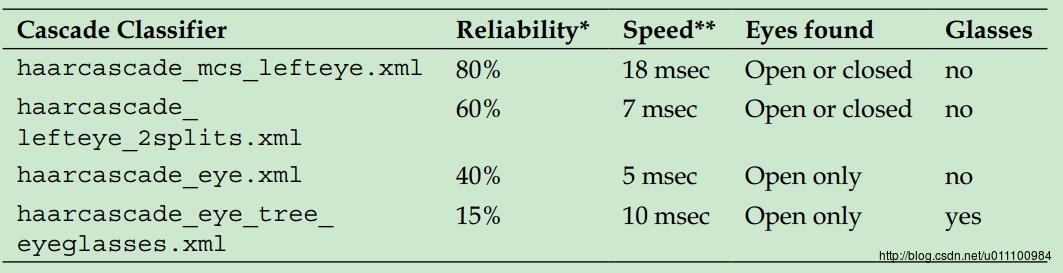

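The four eye detectors above are listed roughly from most reliable to least reliable, so if you do not need to handle people wearing glasses, the first detector is probably the best choice.

Eye search regions

For eye detection it is important to crop the input image so that it shows only the approximate eye region, just as was done for face detection, and then to crop a smaller rectangle where the left eye should be (if you are using a left eye detector), and likewise for the right eye. Searching the whole face or the whole image is much slower and less reliable. Different eye detectors suit different regions of the face: haarcascade_eye.xml works best when it searches a tight region around the actual eye, whereas haarcascade_mcs_lefteye.xml and haarcascade_lefteye_2splits.xml work best with a larger region around the eye.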

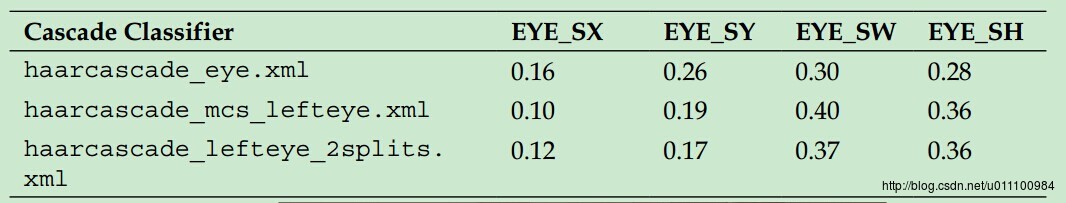

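The recommended search regions for the different eye detectors (when the face was found with the LBP face detector) are given as fractions of the detected face rectangle. They match the EYE_SX, EYE_SY, EYE_SW and EYE_SH constants in preprocFace.cpp:

• haarcascade_eye.xml and haarcascade_eye_tree_eyeglasses.xml: EYE_SX 0.16, EYE_SY 0.26, EYE_SW 0.30, EYE_SH 0.28

• haarcascade_mcs_lefteye.xml: EYE_SX 0.10, EYE_SY 0.19, EYE_SW 0.40, EYE_SH 0.36

• haarcascade_lefteye_2splits.xml: EYE_SX 0.12, EYE_SY 0.17, EYE_SW 0.37, EYE_SH 0.36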
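As a worked example of how those fractions turn into pixel rectangles, here is a minimal sketch in plain C++ with no OpenCV dependency. The `Region`, `EyeSearchFractions` and `eyeSearchRegions` names are hypothetical and only for illustration. The arithmetic mirrors the one in preprocFace.cpp, except that `std::lround` stands in for `cvRound`, which may differ on exact .5 ties.

```cpp
#include <cmath>

// Hypothetical helper types, not part of OpenCV or the original project.
struct Region { int x, y, width, height; };
struct EyeSearchFractions { double sx, sy, sw, sh; };  // EYE_SX, EYE_SY, EYE_SW, EYE_SH
struct EyeSearchRegions { Region left, right; };

// Convert relative search fractions into pixel rectangles inside a face image.
EyeSearchRegions eyeSearchRegions(int faceCols, int faceRows, const EyeSearchFractions &f)
{
    const int leftX   = static_cast<int>(std::lround(faceCols * f.sx));
    const int topY    = static_cast<int>(std::lround(faceRows * f.sy));
    const int widthX  = static_cast<int>(std::lround(faceCols * f.sw));
    const int heightY = static_cast<int>(std::lround(faceRows * f.sh));
    // The right-eye region is the mirror image of the left-eye region.
    const int rightX  = static_cast<int>(std::lround(faceCols * (1.0 - f.sx - f.sw)));

    EyeSearchRegions regions;
    regions.left  = Region{leftX,  topY, widthX, heightY};
    regions.right = Region{rightX, topY, widthX, heightY};
    return regions;
}
```

For a 200 x 200 face and the haarcascade_eye.xml fractions, the left region starts at (32, 52), the right region starts at (108, 52), and both are 60 x 56 pixels.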

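The detection properties of the different eye detectors over the regions above are as follows (the approximate rates are the ones quoted in the comments of preprocFace.cpp):

• haarcascade_mcs_*eye.xml: finds both eyes in roughly 80% of detected faces, open or closed eyes

• haarcascade_*eye_2splits.xml: finds both eyes in roughly 60% of detected faces, open or closed eyes

• haarcascade_eye.xml or haarcascade_eye_tree_eyeglasses.xml: finds both eyes in roughly 40% of detected faces, open eyes only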

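Note: as the properties above indicate, you can pick an eye detector that finds both open and closed eyes, or one that finds open eyes only. Remember that you can also chain detectors: if one fails to find an eye, try another. For many tasks it is useful to detect eyes whether they are open or closed, so if speed is not critical it is best to search with the mcs_*eye detectors first and fall back to the eye_2splits detectors if they fail. For face recognition, however, a person looks quite different with eyes closed than with eyes open, so it is best to search with haarcascade_eye first and fall back to haarcascade_eye_tree_eyeglasses if that fails.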
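The fallback idea in the note can be sketched independently of OpenCV. In the snippet below, `EyePoint`, `EyeDetector` and `detectWithFallback` are hypothetical names. Each detector is modelled as a callable that returns true and fills in the eye position on success. This is only an illustration of the chaining logic, not the project's own API.

```cpp
#include <functional>
#include <vector>

// Hypothetical stand-ins: an eye position and a detector that may or may not find it.
struct EyePoint { int x; int y; };
using EyeDetector = std::function<bool(EyePoint &)>;

// Try each detector in order and stop at the first one that succeeds.
// On failure the eye is set to (-1, -1), the same "invalid point" convention
// that detectBothEyes() uses.
bool detectWithFallback(const std::vector<EyeDetector> &detectors, EyePoint &eye)
{
    for (const auto &detect : detectors) {
        if (detect && detect(eye))
            return true;
    }
    eye = EyePoint{-1, -1};
    return false;
}
```

With real cascades, the first entry would wrap a search with haarcascade_eye.xml and the second a search with haarcascade_eye_tree_eyeglasses.xml, so the slower second search only runs when the first one finds nothing.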

preprocFace.cpp

#include <iostream>

#include "preprocFace.h"
#include "detectFace.h"

using namespace cv;
using namespace std;

// Search for both eyes within the given face image. Eye centers are returned in 'leftEye' and
// 'rightEye', or set to (-1,-1) if an eye was not found.
void detectBothEyes(const Mat &face, CascadeClassifier &eyeCascade1, CascadeClassifier &eyeCascade2, Point &leftEye, Point &rightEye, Rect *searchedLeftEye, Rect *searchedRightEye)
{
    // Skip the borders of the face, since it is usually just hair and ears, that we don't care about.
/*
    // For "2splits.xml": Finds both eyes in roughly 60% of detected faces, also detects closed eyes.
    const float EYE_SX = 0.12f;
    const float EYE_SY = 0.17f;
    const float EYE_SW = 0.37f;
    const float EYE_SH = 0.36f;
*/
/*
    // For mcs.xml: Finds both eyes in roughly 80% of detected faces, also detects closed eyes.
    const float EYE_SX = 0.10f;
    const float EYE_SY = 0.19f;
    const float EYE_SW = 0.40f;
    const float EYE_SH = 0.36f;
*/
    // For default eye.xml or eyeglasses.xml: Finds both eyes in roughly 40% of detected faces, but does not detect closed eyes.
    const float EYE_SX = 0.16f;
    const float EYE_SY = 0.26f;
    const float EYE_SW = 0.30f;
    const float EYE_SH = 0.28f;

    int leftX = cvRound(face.cols * EYE_SX);
    int topY = cvRound(face.rows * EYE_SY);
    int widthX = cvRound(face.cols * EYE_SW);
    int heightY = cvRound(face.rows * EYE_SH);
    int rightX = cvRound(face.cols * (1.0-EYE_SX-EYE_SW) );  // Start of right-eye corner

    Mat topLeftOfFace = face(Rect(leftX, topY, widthX, heightY));
    Mat topRightOfFace = face(Rect(rightX, topY, widthX, heightY));
    Rect leftEyeRect, rightEyeRect;

    // Return the search windows to the caller, if desired.
    if (searchedLeftEye)
        *searchedLeftEye = Rect(leftX, topY, widthX, heightY);
    if (searchedRightEye)
        *searchedRightEye = Rect(rightX, topY, widthX, heightY);

    // Search the left region, then the right region using the 1st eye detector.
    detectLargestObject(topLeftOfFace, eyeCascade1, leftEyeRect, topLeftOfFace.cols);
    detectLargestObject(topRightOfFace, eyeCascade1, rightEyeRect, topRightOfFace.cols);

    // If the eye was not detected, try a different cascade classifier.
    if (leftEyeRect.width <= 0 && !eyeCascade2.empty()) {
        detectLargestObject(topLeftOfFace, eyeCascade2, leftEyeRect, topLeftOfFace.cols);
        //if (leftEyeRect.width > 0)
        //    cout << "2nd eye detector LEFT SUCCESS" << endl;
        //else
        //    cout << "2nd eye detector LEFT failed" << endl;
    }
    //else
    //    cout << "1st eye detector LEFT SUCCESS" << endl;

    // If the eye was not detected, try a different cascade classifier.
    if (rightEyeRect.width <= 0 && !eyeCascade2.empty()) {
        detectLargestObject(topRightOfFace, eyeCascade2, rightEyeRect, topRightOfFace.cols);
        //if (rightEyeRect.width > 0)
        //    cout << "2nd eye detector RIGHT SUCCESS" << endl;
        //else
        //    cout << "2nd eye detector RIGHT failed" << endl;
    }
    //else
    //    cout << "1st eye detector RIGHT SUCCESS" << endl;

    if (leftEyeRect.width > 0) {   // Check if the eye was detected.
        leftEyeRect.x += leftX;    // Adjust the left-eye rectangle because the face border was removed.
        leftEyeRect.y += topY;
        leftEye = Point(leftEyeRect.x + leftEyeRect.width/2, leftEyeRect.y + leftEyeRect.height/2);
    }
    else {
        leftEye = Point(-1, -1);    // Return an invalid point
    }

    if (rightEyeRect.width > 0) { // Check if the eye was detected.
        rightEyeRect.x += rightX; // Adjust the right-eye rectangle, since it starts on the right side of the image.
        rightEyeRect.y += topY;  // Adjust the right-eye rectangle because the face border was removed.
        rightEye = Point(rightEyeRect.x + rightEyeRect.width/2, rightEyeRect.y + rightEyeRect.height/2);
    }
    else {
        rightEye = Point(-1, -1);    // Return an invalid point
    }
}

// Detect the largest face and its eyes. In this listing only detection is performed, so the
// function always returns an empty Mat; the results come back through the 'store*' pointers.
Mat getPreprocessedFace(Mat &srcImg, int desiredFaceWidth, CascadeClassifier &faceCascade, CascadeClassifier &eyeCascade1, CascadeClassifier &eyeCascade2, bool doLeftAndRightSeparately, Rect *storeFaceRect, Point *storeLeftEye, Point *storeRightEye, Rect *searchedLeftEye, Rect *searchedRightEye)
{
    // Use square faces.
    int desiredFaceHeight = desiredFaceWidth;

    // Mark the detected face region and eye search regions as invalid, in case they aren't detected.
    if (storeFaceRect)
        storeFaceRect->width = -1;
    if (storeLeftEye)
        storeLeftEye->x = -1;
    if (storeRightEye)
        storeRightEye->x = -1;
    if (searchedLeftEye)
        searchedLeftEye->width = -1;
    if (searchedRightEye)
        searchedRightEye->width = -1;

    // Find the largest face.
    Rect faceRect;
    detectLargestObject(srcImg, faceCascade, faceRect, desiredFaceWidth);

    // Check if a face was detected.
    if (faceRect.width > 0) {
        cout << "inner have a face" << endl;

        // Give the face rect to the caller if desired.
        if (storeFaceRect)
            *storeFaceRect = faceRect;

        Mat faceImg = srcImg(faceRect);    // Get the detected face image.

        // If the input image is not grayscale, then convert the BGR or BGRA color image to grayscale.
        Mat gray;
        if (faceImg.channels() == 3) {
            cvtColor(faceImg, gray, CV_BGR2GRAY);
        }
        else if (faceImg.channels() == 4) {
            cvtColor(faceImg, gray, CV_BGRA2GRAY);
        }
        else {
            // Access the input image directly, since it is already grayscale.
            gray = faceImg;
        }

        // Search for the 2 eyes at the full resolution, since eye detection needs max resolution possible!
        Point leftEye, rightEye;
        detectBothEyes(gray, eyeCascade1, eyeCascade2, leftEye, rightEye, searchedLeftEye, searchedRightEye);

        // Give the eye results to the caller if desired.
        if (storeLeftEye)
            *storeLeftEye = leftEye;
        if (storeRightEye)
            *storeRightEye = rightEye;

        // The later preprocessing steps (alignment, equalization, smoothing, masking) are not
        // part of this listing, so no processed face is returned yet.
        return Mat();
        /*
        else {
            // Since no eyes were found, just do a generic image resize.
            resize(gray, tmpImg, Size(w,h));
        }
        */
    }
    return Mat();
}

main.cpp

#include <cstdlib>      // exit()
#include <iostream>

#include "detectFace.h"
#include "preprocFace.h"

using namespace cv;
using namespace std;

// Try to set the camera resolution. Note that this only works for some cameras on
// some computers and only for some drivers, so don't rely on it to work!
const int DESIRED_CAMERA_WIDTH = 640;
const int DESIRED_CAMERA_HEIGHT = 480;

// Set the desired face dimensions. Note that "getPreprocessedFace()" will return a square face.
const int faceWidth = 70;
const int faceHeight = faceWidth;

const bool preprocessLeftAndRightSeparately = true;   // Preprocess left & right sides of the face separately, in case there is stronger light on one side.

// Cascade Classifier file, used for Face Detection.
const char *faceCascadeFilename = "lbpcascade_frontalface.xml";     // LBP face detector.
//const char *faceCascadeFilename = "haarcascade_frontalface_alt_tree.xml";  // Haar face detector.
//const char *eyeCascadeFilename1 = "haarcascade_lefteye_2splits.xml";   // Best eye detector for open-or-closed eyes.
//const char *eyeCascadeFilename2 = "haarcascade_righteye_2splits.xml";   // Best eye detector for open-or-closed eyes.
//const char *eyeCascadeFilename1 = "haarcascade_mcs_lefteye.xml";       // Good eye detector for open-or-closed eyes.
//const char *eyeCascadeFilename2 = "haarcascade_mcs_righteye.xml";       // Good eye detector for open-or-closed eyes.
const char *eyeCascadeFilename1 = "haarcascade_eye.xml";               // Basic eye detector for open eyes only.
const char *eyeCascadeFilename2 = "haarcascade_eye_tree_eyeglasses.xml"; // Basic eye detector for open eyes if they might wear glasses.

// Load the face and 1 or 2 eye detection XML classifiers.
void initDetectors(CascadeClassifier &faceCascade, CascadeClassifier &eyeCascade1, CascadeClassifier &eyeCascade2)
{
    // Load the Face Detection cascade classifier xml file.
    try {   // Surround the OpenCV call by a try/catch block so we can give a useful error message!
        faceCascade.load(faceCascadeFilename);
    } catch (cv::Exception &e) {}
    if ( faceCascade.empty() ) {
        cerr << "ERROR: Could not load Face Detection cascade classifier [" << faceCascadeFilename << "]!" << endl;
        cerr << "Copy the file from your OpenCV data folder (eg: 'C:\\OpenCV\\data\\lbpcascades') into this WebcamFaceRec folder." << endl;
        exit(1);
    }
    cout << "Loaded the Face Detection cascade classifier [" << faceCascadeFilename << "]." << endl;

    // Load the 1st Eye Detection cascade classifier xml file.
    try {   // Surround the OpenCV call by a try/catch block so we can give a useful error message!
        eyeCascade1.load(eyeCascadeFilename1);
    } catch (cv::Exception &e) {}
    if ( eyeCascade1.empty() ) {
        cerr << "ERROR: Could not load 1st Eye Detection cascade classifier [" << eyeCascadeFilename1 << "]!" << endl;
        cerr << "Copy the file from your OpenCV data folder (eg: 'C:\\OpenCV\\data\\haarcascades') into this WebcamFaceRec folder." << endl;
        exit(1);
    }
    cout << "Loaded the 1st Eye Detection cascade classifier [" << eyeCascadeFilename1 << "]." << endl;

    // Load the 2nd Eye Detection cascade classifier xml file.
    try {   // Surround the OpenCV call by a try/catch block so we can give a useful error message!
        eyeCascade2.load(eyeCascadeFilename2);
    } catch (cv::Exception &e) {}
    if ( eyeCascade2.empty() ) {
        cerr << "Could not load 2nd Eye Detection cascade classifier [" << eyeCascadeFilename2 << "]." << endl;
        // Dont exit if the 2nd eye detector did not load, because we have the 1st eye detector at least.
        //exit(1);
    }
    else {
        cout << "Loaded the 2nd Eye Detection cascade classifier [" << eyeCascadeFilename2 << "]." << endl;
    }
}

// Get access to the webcam.
void initWebcam(VideoCapture &videoCapture, int cameraNumber)
{
    // Get access to the default camera.
    try {   // Surround the OpenCV call by a try/catch block so we can give a useful error message!
        videoCapture.open(cameraNumber);
    } catch (cv::Exception &e) {}
    if ( !videoCapture.isOpened() ) {
        cerr << "ERROR: Could not access the camera!" << endl;
        exit(1);
    }
    cout << "Loaded camera " << cameraNumber << "." << endl;
}

// Main loop: grab camera frames, detect the face and eyes, and draw the results.
void recognizeAndTrainUsingWebcam(VideoCapture &videoCapture, CascadeClassifier &faceCascade, CascadeClassifier &eyeCascade1, CascadeClassifier &eyeCascade2)
{
    // Run forever, until the user hits Escape to "break" out of this loop.
    while (true) {

        // Grab the next camera frame. Note that you can't modify camera frames.
        Mat cameraFrame;
        videoCapture >> cameraFrame;
        if( cameraFrame.empty() ) {
            cerr << "ERROR: Couldn't grab the next camera frame." << endl;
            exit(1);
        }

        // Get a copy of the camera frame that we can draw onto.
        Mat displayedFrame;
        cameraFrame.copyTo(displayedFrame);

        // Run the face recognition system on the camera image. It will draw some things onto the given image, so make sure it is not read-only memory!
        int identity = -1;

        // Find a face and preprocess it to have a standard size and contrast & brightness.
        Rect faceRect;  // Position of detected face.
        Rect searchedLeftEye, searchedRightEye; // top-left and top-right regions of the face, where eyes were searched.
        Point leftEye, rightEye;    // Position of the detected eyes.
        Mat preprocessedFace = getPreprocessedFace(displayedFrame, faceWidth, faceCascade, eyeCascade1, eyeCascade2, preprocessLeftAndRightSeparately, &faceRect, &leftEye, &rightEye, &searchedLeftEye, &searchedRightEye);

        bool gotFaceAndEyes = false;
        if (preprocessedFace.data)
            gotFaceAndEyes = true;

        // Draw an anti-aliased rectangle around the detected face.
        if (faceRect.width > 0) {
            cout << "outer face" << endl;
            rectangle(displayedFrame, faceRect, CV_RGB(255, 255, 0), 2, CV_AA);

            // Draw light-blue anti-aliased circles for the 2 eyes.
            // The eye positions are relative to the face rectangle, so offset them by its origin.
            Scalar eyeColor = CV_RGB(0,255,255);
            if (leftEye.x >= 0) {   // Check if the eye was detected
                circle(displayedFrame, Point(faceRect.x + leftEye.x, faceRect.y + leftEye.y), 6, eyeColor, 1, CV_AA);
            }
            if (rightEye.x >= 0) {   // Check if the eye was detected
                circle(displayedFrame, Point(faceRect.x + rightEye.x, faceRect.y + rightEye.y), 6, eyeColor, 1, CV_AA);
            }
        }

        imshow("detect", displayedFrame);

        char keypress = waitKey(20);  // This is needed if you want to see anything!
        if (keypress == 27) {   // Escape key
            break;
        }
    }
}

int main()
{
    CascadeClassifier faceCascade;
    CascadeClassifier eyeCascade1;
    CascadeClassifier eyeCascade2;
    VideoCapture videoCapture;

    cout << "WebcamFaceRec, by Shervin Emami (www.shervinemami.info), June 2012." << endl;
    cout << "Realtime face detection + face recognition from a webcam using LBP and Eigenfaces or Fisherfaces." << endl;

    // Load the face and 1 or 2 eye detection XML classifiers.
    initDetectors(faceCascade, eyeCascade1, eyeCascade2);

    cout << endl;
    cout << "Hit 'Escape' in the GUI window to quit." << endl;

    // Allow the user to specify a camera number, since not all computers will be the same camera number.
    int cameraNumber = 0;   // Change this if you want to use a different camera device.

    // Get access to the webcam.
    initWebcam(videoCapture, cameraNumber);

    // Try to set the camera resolution. Note that this only works for some cameras on
    // some computers and only for some drivers, so don't rely on it to work!
    videoCapture.set(CV_CAP_PROP_FRAME_WIDTH, DESIRED_CAMERA_WIDTH);
    videoCapture.set(CV_CAP_PROP_FRAME_HEIGHT, DESIRED_CAMERA_HEIGHT);

    recognizeAndTrainUsingWebcam(videoCapture, faceCascade, eyeCascade1, eyeCascade2);

    return 0;
}

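Detection result:

Once the face and eyes have been detected, the face is preprocessed in the following steps:

1. Geometric transformation and cropping: scale, rotate and translate the image so that the eyes are aligned, then remove the forehead, chin, ears and background from the face image.

2. Separate histogram equalization for the left and right sides: normalize brightness and contrast on each half of the face independently.

3. Smoothing: reduce noise with a bilateral filter.

4. Elliptical mask: remove the remaining hair and background from the face image.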
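Step 3 is not shown elsewhere in this post, so here is a minimal, dependency-free sketch of what a bilateral filter does. It is a simplified illustration rather than OpenCV's bilateralFilter() implementation: the `Image` alias, the `bilateralSmooth` name, the square window and the way border pixels are skipped are all assumptions made for this example. Each output pixel is a weighted average of its neighbours, where the weight drops with spatial distance and with difference in intensity, so noise is smoothed while strong edges are kept.

```cpp
#include <cmath>
#include <cstdint>
#include <vector>

using Image = std::vector<std::uint8_t>;  // row-major 8-bit grayscale (assumed layout)

// Simplified bilateral filter over a (2*radius+1)^2 window.
Image bilateralSmooth(const Image &src, int w, int h, int radius,
                      double sigmaColor, double sigmaSpace)
{
    Image dst(src.size());
    for (int y = 0; y < h; ++y) {
        for (int x = 0; x < w; ++x) {
            const double center = src[y * w + x];
            double sum = 0.0, weightSum = 0.0;
            for (int dy = -radius; dy <= radius; ++dy) {
                for (int dx = -radius; dx <= radius; ++dx) {
                    const int yy = y + dy, xx = x + dx;
                    if (yy < 0 || yy >= h || xx < 0 || xx >= w)
                        continue;  // ignore neighbours outside the image
                    const double v = src[yy * w + xx];
                    // Weight falls off with distance in space...
                    const double spatial = std::exp(-(dx * dx + dy * dy) / (2.0 * sigmaSpace * sigmaSpace));
                    // ...and with difference in intensity, which preserves edges.
                    const double range = std::exp(-((v - center) * (v - center)) / (2.0 * sigmaColor * sigmaColor));
                    sum += spatial * range * v;
                    weightSum += spatial * range;
                }
            }
            // weightSum >= 1 because the centre pixel always has weight 1.
            dst[y * w + x] = static_cast<std::uint8_t>(std::lround(sum / weightSum));
        }
    }
    return dst;
}
```

In an OpenCV program the same role would normally be played by a single call to bilateralFilter() on the aligned, equalized face; the parameter values to use there are a tuning choice that this post does not specify.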

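Geometric transformation

Aligning the faces is very important; otherwise the recognition algorithm may end up comparing part of a nose with part of an eye, for example. The output of face detection is aligned to some extent, but not accurately.

For better alignment we use the detected eyes, so that the two eyes end up exactly on a horizontal line at the desired positions. The warpAffine() function does four things in a single operation: it rotates the face so that the two eyes are level, scales the face so that the distance between the eyes is always the same, translates the face so that the eyes are always centered horizontally at a chosen height, and crops away the rest of the image, such as the background, hair, forehead, ears and chin.

The affine warp uses an affine matrix that maps the two detected eye positions to the desired eye positions, and then crops to the desired size and position. To build the matrix, we compute the midpoint between the eyes, the angle of the line through the two eyes, and the distance between them.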
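The matrix construction described above can be worked through in plain C++. This is a sketch under stated assumptions rather than the project's code: `Pt`, `Affine`, `eyeAlignmentMatrix` and `transformPoint` are hypothetical names, and the default target eye position (left eye at 16% of the width and 14% of the height) is an assumed value chosen for illustration. The rotation-and-scale part uses the same formula that OpenCV documents for getRotationMatrix2D(), and the translation is then adjusted so the midpoint between the eyes lands at the desired position.

```cpp
#include <cmath>

struct Pt { double x, y; };
struct Affine { double m[2][3]; };  // 2x3 matrix, same layout warpAffine() expects

// Build a matrix that maps the detected eyes onto a horizontal line at fixed positions.
Affine eyeAlignmentMatrix(Pt leftEye, Pt rightEye, int faceWidth, int faceHeight,
                          double desiredLeftEyeX = 0.16,   // assumed target, fraction of width
                          double desiredLeftEyeY = 0.14)   // assumed target, fraction of height
{
    Affine t = {{{1.0, 0.0, 0.0}, {0.0, 1.0, 0.0}}};
    const double dx = rightEye.x - leftEye.x;
    const double dy = rightEye.y - leftEye.y;
    const double len = std::sqrt(dx * dx + dy * dy);
    if (len < 1e-9)
        return t;  // degenerate input: eyes coincide, return identity

    // Midpoint between the eyes, angle of the eye line, and required scale.
    const Pt center = {(leftEye.x + rightEye.x) * 0.5, (leftEye.y + rightEye.y) * 0.5};
    const double angle = std::atan2(dy, dx);
    const double desiredLen = (1.0 - 2.0 * desiredLeftEyeX) * faceWidth;
    const double scale = desiredLen / len;

    // Rotation + scale about the eye midpoint.
    const double a = scale * std::cos(angle);
    const double b = scale * std::sin(angle);
    t.m[0][0] = a;  t.m[0][1] = b;  t.m[0][2] = (1.0 - a) * center.x - b * center.y;
    t.m[1][0] = -b; t.m[1][1] = a;  t.m[1][2] = b * center.x + (1.0 - a) * center.y;

    // Shift the eye midpoint to the desired location in the output face.
    t.m[0][2] += faceWidth * 0.5 - center.x;
    t.m[1][2] += faceHeight * desiredLeftEyeY - center.y;
    return t;
}

// Apply the matrix to one point (source image -> aligned face coordinates).
Pt transformPoint(const Affine &t, Pt p)
{
    return Pt{t.m[0][0] * p.x + t.m[0][1] * p.y + t.m[0][2],
              t.m[1][0] * p.x + t.m[1][1] * p.y + t.m[1][2]};
}
```

For eyes at (30, 30) and (70, 50) and a 70 x 70 output face, both eyes map to y = 9.8, with x = 11.2 and x = 58.8. In an OpenCV program the six matrix entries would be copied into a 2x3 CV_64F Mat and passed to warpAffine() with the output size set to the desired face size.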

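In real-world conditions it is very common for one half of the face to be strongly lit and the other half weakly lit. This has a large effect on face recognition, so brightness and contrast need to be normalized separately for the left and right halves of the face. If we simply applied histogram equalization to each half independently, there would be a sharp visible edge down the middle. To remove that edge, the two half-face equalizations are blended gradually toward the middle with a whole-face equalization: the far left uses the left equalization, the far right uses the right equalization, and the middle is a smooth blend of the left or right value with the whole-face value.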
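The blending scheme can be sketched without OpenCV as follows. The names `Image`, `equalize`, `cropColumns`, `blendWeights` and `equalizeLeftRight` are hypothetical, and splitting the width into four equal bands is an assumption made for this illustration: the first band uses the left equalization only, the second fades from left to whole-face, the third fades from whole-face to right, and the last uses the right equalization only.

```cpp
#include <array>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

using Image = std::vector<std::uint8_t>;  // row-major 8-bit grayscale (assumed layout)

// Plain histogram equalization of one 8-bit image.
Image equalize(const Image &src)
{
    std::array<std::size_t, 256> cdf{};
    for (std::uint8_t v : src) ++cdf[v];          // histogram
    std::size_t cdfMin = 0;
    for (int i = 0; i < 256; ++i) {
        if (i > 0) cdf[i] += cdf[i - 1];          // running sum -> cumulative histogram
        if (cdfMin == 0) cdfMin = cdf[i];         // first non-zero cumulative count
    }
    if (src.empty() || cdfMin == src.size()) return src;  // flat image: nothing to stretch
    const double scale = 255.0 / static_cast<double>(src.size() - cdfMin);
    Image dst(src.size());
    for (std::size_t i = 0; i < src.size(); ++i)
        dst[i] = static_cast<std::uint8_t>(std::lround((cdf[src[i]] - cdfMin) * scale));
    return dst;
}

// Copy columns [x0, x0 + cw) of a w-wide image.
Image cropColumns(const Image &src, int w, int h, int x0, int cw)
{
    Image out;
    out.reserve(static_cast<std::size_t>(cw) * h);
    for (int y = 0; y < h; ++y)
        for (int x = x0; x < x0 + cw; ++x) out.push_back(src[y * w + x]);
    return out;
}

struct Weights { double left, whole, right; };

// Blend weights for column x of a w-wide face, using four equal bands.
Weights blendWeights(int x, int w)
{
    const int q = w / 4;
    if (x < q)     return {1.0, 0.0, 0.0};
    if (x < 2 * q) { const double f = double(x - q) / q;     return {1.0 - f, f, 0.0}; }
    if (x < 3 * q) { const double f = double(x - 2 * q) / q; return {0.0, 1.0 - f, f}; }
    return {0.0, 0.0, 1.0};
}

Image equalizeLeftRight(const Image &face, int w, int h)
{
    const int mid = 2 * (w / 4);  // split point, aligned with the band boundaries
    const Image whole = equalize(face);
    const Image left  = equalize(cropColumns(face, w, h, 0, mid));
    const Image right = equalize(cropColumns(face, w, h, mid, w - mid));
    Image out(face.size());
    for (int y = 0; y < h; ++y) {
        for (int x = 0; x < w; ++x) {
            const Weights k = blendWeights(x, w);
            double v = k.whole * whole[y * w + x];
            if (k.left > 0.0)  v += k.left  * left[y * mid + x];
            if (k.right > 0.0) v += k.right * right[y * (w - mid) + (x - mid)];
            out[y * w + x] = static_cast<std::uint8_t>(std::lround(v));
        }
    }
    return out;
}
```

Because the weights always sum to 1 and change linearly across the two middle bands, the output has no visible seam at the centre line, while each outer quarter of the face keeps the contrast stretch computed from its own half.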

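Although the geometric transformation has already removed most of the background, forehead and hair, some corner regions still remain, so an elliptical mask is applied to remove them.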
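A minimal sketch of the masking step in plain C++ follows. The ellipse geometry (centre at 50% of the width and 40% of the height, semi-axes of 50% of the width and 80% of the height) and the neutral gray fill value of 128 are assumptions chosen for illustration, and `applyEllipticalMask` is a hypothetical name. Every pixel outside the ellipse is overwritten, so the corners that still contain hair or background no longer influence recognition.

```cpp
#include <cstdint>
#include <vector>

using Image = std::vector<std::uint8_t>;  // row-major 8-bit grayscale (assumed layout)

// Overwrite every pixel outside an ellipse with a constant fill value.
void applyEllipticalMask(Image &face, int w, int h, std::uint8_t fill = 128)
{
    if (w <= 0 || h <= 0) return;
    const double cx = w * 0.5, cy = h * 0.4;   // assumed ellipse centre
    const double ax = w * 0.5, ay = h * 0.8;   // assumed semi-axes
    for (int y = 0; y < h; ++y) {
        for (int x = 0; x < w; ++x) {
            const double nx = (x - cx) / ax;
            const double ny = (y - cy) / ay;
            if (nx * nx + ny * ny > 1.0)       // outside the ellipse
                face[y * w + x] = fill;
        }
    }
}
```

With OpenCV itself, the same effect is usually obtained by drawing a filled ellipse into a mask image with ellipse() and then calling setTo() on the face with that mask.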
