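14. State (understanding the principles is enough): automatic state management in Flink, stateless vs. stateful computation, state classification, Managed State & Raw State, Keyed State & Operator State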

Posted by 涂作权的博客 (Tu Zuoquan's blog)


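14. State: understanding the principles is enough

14.1. Automatic state management in Flink

14.2. Stateless vs. stateful computation

14.2.2. Stateful computation: historical values must be considered, e.g. sum

14.2.3. State classification

14.2.4. Managed State & Raw State

14.2.5. Keyed State & Operator State

14.2.5.1. Keyed State & Operator State

14.2.6. Code demo: Managed State - Keyed State

14.2.7. Code demo: Managed State - Operator State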


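14.1. Automatic state management in Flink

The Flink code we wrote earlier already manages state automatically. For example:

send hello and you get (hello,1);

send hello again and you get (hello,2).

This shows that Flink automatically aggregates the current record with the historical state (the previous result), i.e. it manages the state for us.

In real development, automatic state management is all you need in the vast majority of cases.

Manual state management is only needed in a few special cases (we will use it in the later project!).

So first we need to learn how to manage state manually. For reference, here is the earlier socket word count, whose `keyBy(...).sum(1)` relies on automatic state management: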

```java
import org.apache.flink.api.common.RuntimeExecutionMode;
import org.apache.flink.api.common.functions.FlatMapFunction;
import org.apache.flink.api.java.tuple.Tuple2;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.util.Collector;

/**
 * @author tuzuoquan
 * @date 2022/5/9 9:37
 */
public class SourceDemo03_Socket {
    public static void main(String[] args) throws Exception {
        //TODO 0.env
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setRuntimeMode(RuntimeExecutionMode.AUTOMATIC);

        //TODO 1.source
        DataStream<String> lines = env.socketTextStream("localhost", 9999);

        //TODO 2.transformation
        // Alternative two-step version (flatMap to words, then map to (word, 1)), kept commented out:
        /*SingleOutputStreamOperator<String> words = lines.flatMap(new FlatMapFunction<String, String>() {
            @Override
            public void flatMap(String value, Collector<String> out) throws Exception {
                String[] arr = value.split(" ");
                for (String word : arr) {
                    out.collect(word);
                }
            }
        });
        words.map(new MapFunction<String, Tuple2<String, Integer>>() {
            @Override
            public Tuple2<String, Integer> map(String value) throws Exception {
                return Tuple2.of(value, 1);
            }
        });*/

        // Split each line into words and emit (word, 1) in a single step
        SingleOutputStreamOperator<Tuple2<String, Integer>> wordAndOne = lines.flatMap(new FlatMapFunction<String, Tuple2<String, Integer>>() {
            @Override
            public void flatMap(String value, Collector<Tuple2<String, Integer>> out) throws Exception {
                String[] arr = value.split(" ");
                for (String word : arr) {
                    out.collect(Tuple2.of(word, 1));
                }
            }
        });

        // keyBy + sum: Flink keeps the running count of each word as managed keyed state
        SingleOutputStreamOperator<Tuple2<String, Integer>> result =
                wordAndOne.keyBy(t -> t.f0).sum(1);

        //TODO 3.sink
        result.print();

        //TODO 4.execute
        env.execute();
    }
}
```

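Output:

```text
5> (world,1)
3> (hello,1)
5> (aaa,1)
4> (bbb,1)
7> (ccc,1)
5> (aaa,2)
```

The `N>` prefix identifies the parallel sink subtask that printed the line. The second `aaa` produces `(aaa,2)`: Flink combined the new record with the count it had already stored for `aaa`.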

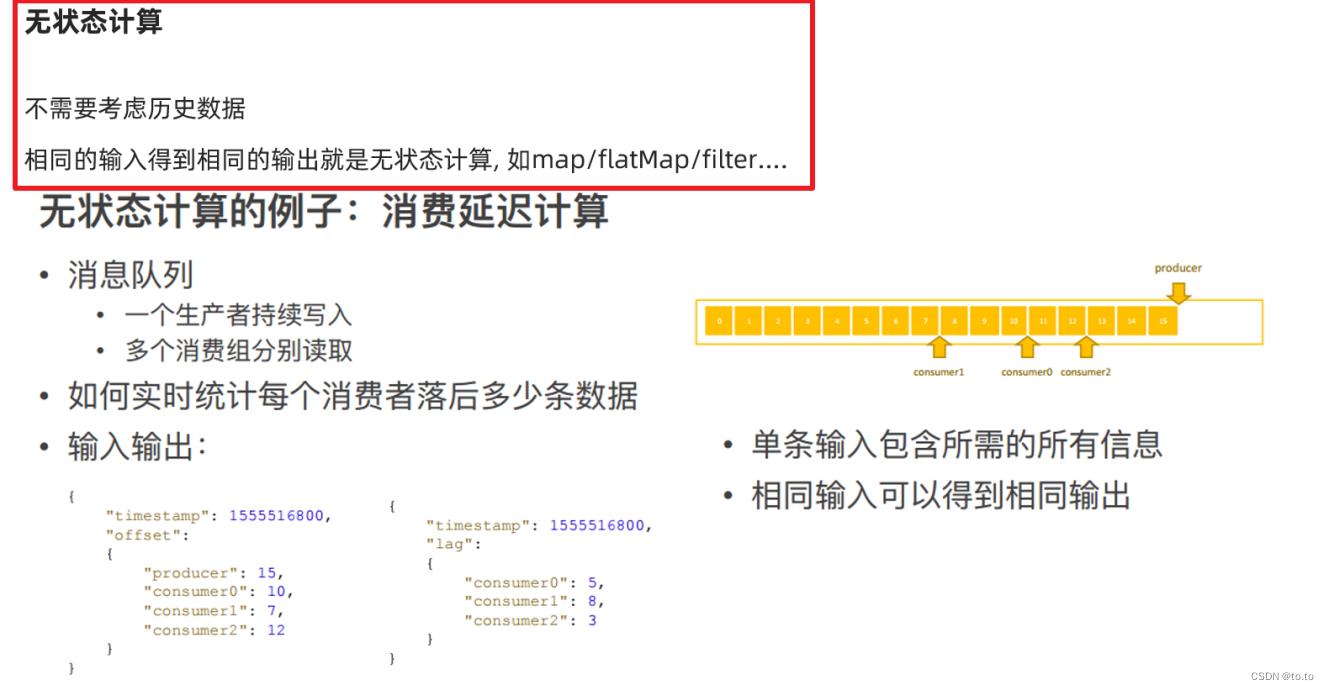

14.2. Stateless vs. stateful computation

Stateless computation does not need to consider historical values, e.g. map:

hello --> (hello,1)

hello --> (hello,1)

14.2.1. Stateless computation

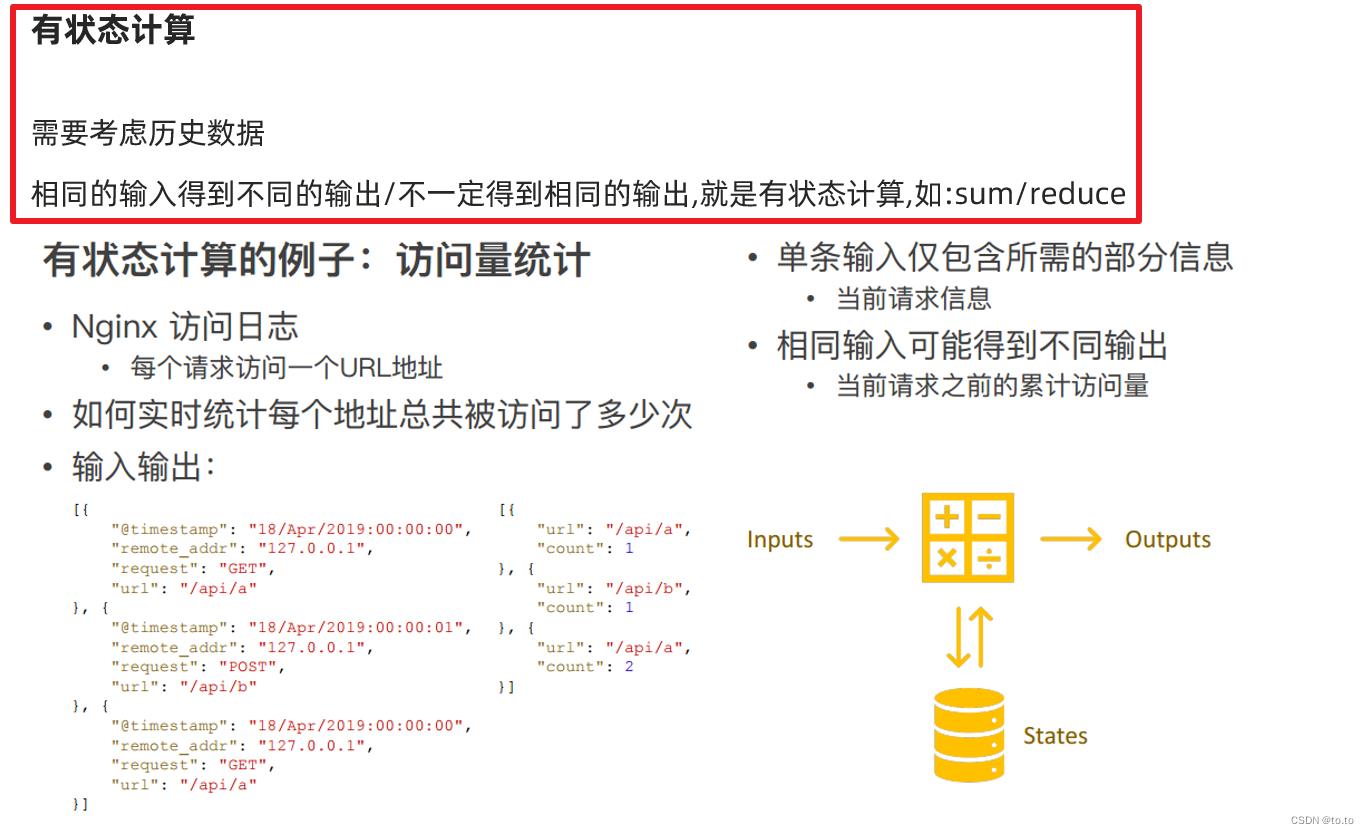

14.2.2. Stateful computation: historical values must be considered, e.g. sum

hello --> (hello,1)

hello --> (hello,2)

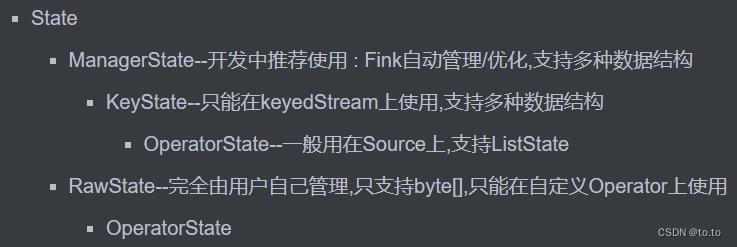
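To make the difference concrete without a Flink cluster, here is a minimal plain-Java sketch. It is not Flink API, and the class and method names are hypothetical. `statelessMap` only looks at the current word. `statefulSum` keeps a per-word count in a `HashMap`, which plays the role of the state that Flink manages for `keyBy(...).sum(...)`.

```java
import java.util.HashMap;
import java.util.Map;

// Conceptual sketch in plain Java (no Flink): stateless map vs. stateful running sum.
final class StatelessVsStateful {

    // Stateless: the output depends only on the current input record.
    static String statelessMap(String word) {
        return "(" + word + ",1)";
    }

    // Stateful: the count per word is the "state" that must survive between records.
    // Flink keeps the equivalent of this map for you in keyBy(...).sum(...).
    private final Map<String, Integer> countPerWord = new HashMap<>();

    String statefulSum(String word) {
        int updated = countPerWord.merge(word, 1, Integer::sum); // history + current
        return "(" + word + "," + updated + ")";
    }

    public static void main(String[] args) {
        System.out.println(statelessMap("hello")); // (hello,1)
        System.out.println(statelessMap("hello")); // (hello,1)

        StatelessVsStateful op = new StatelessVsStateful();
        System.out.println(op.statefulSum("hello")); // (hello,1)
        System.out.println(op.statefulSum("hello")); // (hello,2)
    }
}
```

The stateless function can be restarted or scaled at any time because it remembers nothing. The stateful one is only correct if `countPerWord` survives failures, which is exactly what Flink's managed state and checkpoints take care of.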

14.2.3. State classification

Detailed classification diagram:

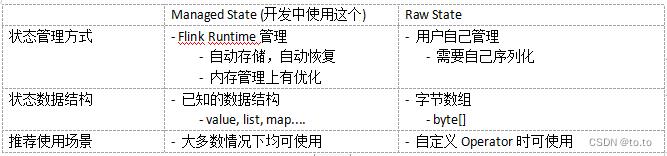

14.2.4. Managed State & Raw State

14.2.5. Keyed State & Operator State

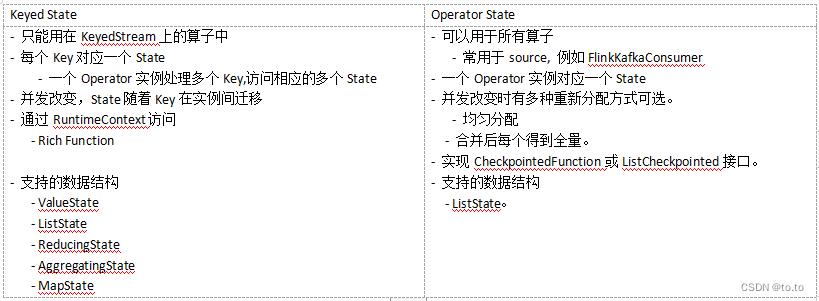

Managed State comes in two kinds: Keyed State and Operator State (Raw State is always Operator State).
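A rough plain-Java model of the two scopes follows. It assumes a parallelism of 2 and a simplified routing rule, `Math.floorMod(key.hashCode(), 2)`. Flink really maps keys to key groups and key groups to subtasks. Keyed state holds one value per key. Operator state holds one value per parallel subtask, shared by every record that subtask processes. All names here are hypothetical.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.Map;

// Conceptual model only (no Flink): compares the scope of keyed state and operator state.
final class StateScopeSketch {
    static final int PARALLELISM = 2;

    // Hypothetical routing rule. The property it shares with Flink is that
    // the same key always goes to the same subtask.
    static int route(String key) {
        return Math.floorMod(key.hashCode(), PARALLELISM);
    }

    // Keyed State: one value per key, no matter which subtask processes it.
    final Map<String, Long> keyedCount = new HashMap<>();

    // Operator State: one value per parallel subtask, shared by all keys it receives.
    final long[] operatorCount = new long[PARALLELISM];

    void process(String key) {
        keyedCount.merge(key, 1L, Long::sum);
        operatorCount[route(key)]++;
    }

    public static void main(String[] args) {
        StateScopeSketch sketch = new StateScopeSketch();
        for (String city : new String[] {"Beijing", "Shanghai", "Beijing", "Shanghai", "Beijing", "Shanghai"}) {
            sketch.process(city);
        }
        System.out.println("keyed state per key:        " + sketch.keyedCount);
        System.out.println("operator state per subtask: " + Arrays.toString(sketch.operatorCount));
    }
}
```

The keyed counts are always 3 and 3. How the 6 records split across the two operator-state slots depends only on the routing, which is why operator state suits per-instance data such as a source's offsets.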

14.2.5.1. Keyed State & Operator State

14.2.6. Code demo: Managed State - Keyed State

https://ci.apache.org/projects/flink/flink-docs-release-1.12/dev/stream/state/state.html

```java
import org.apache.flink.api.common.RuntimeExecutionMode;
import org.apache.flink.api.common.functions.RichMapFunction;
import org.apache.flink.api.common.state.ValueState;
import org.apache.flink.api.common.state.ValueStateDescriptor;
import org.apache.flink.api.java.tuple.Tuple2;
import org.apache.flink.api.java.tuple.Tuple3;
import org.apache.flink.configuration.Configuration;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

/**
 * Desc: use a ValueState (a kind of Keyed State) to get the maximum value per key in the stream.
 * In practice, maxBy is enough.
 *
 * @author tuzuoquan
 * @date 2022/5/10 0:37
 */
public class StateDemo01_KeyState {
    public static void main(String[] args) throws Exception {
        //TODO 0.env
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setRuntimeMode(RuntimeExecutionMode.AUTOMATIC);

        //TODO 1.source (北京 = Beijing, 上海 = Shanghai)
        DataStream<Tuple2<String, Long>> tupleDS = env.fromElements(
                Tuple2.of("北京", 1L),
                Tuple2.of("上海", 2L),
                Tuple2.of("北京", 6L),
                Tuple2.of("上海", 8L),
                Tuple2.of("北京", 3L),
                Tuple2.of("上海", 4L)
        );

        //TODO 2.transformation
        // Requirement: find the maximum value for each city
        // In practice, just use maxBy
        DataStream<Tuple2<String, Long>> result1 = tupleDS.keyBy(t -> t.f0).maxBy(1);

        // For learning: implement what maxBy does underneath with a ValueState from Keyed State
        DataStream<Tuple3<String, Long, Long>> result2 = tupleDS.keyBy(t -> t.f0)
                .map(new RichMapFunction<Tuple2<String, Long>, Tuple3<String, Long, Long>>() {
                    // -1. Declare a state that holds the maximum so far (one value per key)
                    private ValueState<Long> maxValueState;

                    // -2. Initialize the state
                    @Override
                    public void open(Configuration parameters) throws Exception {
                        // Create the state descriptor (state name + value type)
                        ValueStateDescriptor<Long> stateDescriptor =
                                new ValueStateDescriptor<>("maxValueState", Long.class);
                        // Obtain/initialize the state from the descriptor
                        maxValueState = getRuntimeContext().getState(stateDescriptor);
                    }

                    // -3. Use the state
                    @Override
                    public Tuple3<String, Long, Long> map(Tuple2<String, Long> value) throws Exception {
                        Long currentValue = value.f1;
                        // Read the state of the current key (null for the first record of a key)
                        Long historyValue = maxValueState.value();
                        // Compare the current value with the state
                        if (historyValue == null || currentValue > historyValue) {
                            historyValue = currentValue;
                            // Update the state
                            maxValueState.update(historyValue);
                        }
                        return Tuple3.of(value.f0, currentValue, historyValue);
                    }
                });

        //TODO 3.sink
        //result1.print();
        //4> (北京,6)
        //1> (上海,8)
        result2.print();
        //1> (上海,xxx,8)
        //4> (北京,xxx,6)

        //TODO 4.execute
        env.execute();
    }
}
```

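Output:

```text
4> (北京,1,1)
4> (北京,6,6)
4> (北京,3,6)
1> (上海,2,2)
1> (上海,8,8)
1> (上海,4,8)
```

Each record is (city, current value, maximum so far). For example, `(北京,3,6)` keeps 6 because 3 is smaller than the maximum already stored in the state for 北京 (Beijing).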
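The state logic of the `RichMapFunction` above can be replayed in plain Java. In this conceptual sketch, a `HashMap<String, Long>` stands in for the per-key `ValueState<Long>`. It is not how Flink stores state, and the city names are romanized to keep the example ASCII-only.

```java
import java.util.HashMap;
import java.util.Map;

// Plain-Java sketch (no Flink) of the keyed ValueState logic in StateDemo01_KeyState.
final class KeyedMaxSketch {
    // Stand-in for ValueState<Long>: one slot per key; a missing entry means "no state yet".
    private final Map<String, Long> maxPerKey = new HashMap<>();

    // Returns "(key,current,max)", like the Tuple3 produced by the Flink demo.
    String map(String key, long current) {
        Long history = maxPerKey.get(key);          // like maxValueState.value()
        if (history == null || current > history) {
            history = current;
            maxPerKey.put(key, history);            // like maxValueState.update(history)
        }
        return "(" + key + "," + current + "," + history + ")";
    }

    public static void main(String[] args) {
        String[] keys = {"Beijing", "Shanghai", "Beijing", "Shanghai", "Beijing", "Shanghai"};
        long[] values = {1L, 2L, 6L, 8L, 3L, 4L};
        KeyedMaxSketch op = new KeyedMaxSketch();
        for (int i = 0; i < keys.length; i++) {
            System.out.println(op.map(keys[i], values[i]));
        }
    }
}
```

This prints the records in input order. Apart from how Flink interleaves the two subtasks, the per-city sequence matches the output above.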

14.2.7. Code demo: Managed State - Operator State

```java
import org.apache.flink.api.common.RuntimeExecutionMode;
import org.apache.flink.api.common.restartstrategy.RestartStrategies;
import org.apache.flink.api.common.state.ListState;
import org.apache.flink.api.common.state.ListStateDescriptor;
import org.apache.flink.runtime.state.FunctionInitializationContext;
import org.apache.flink.runtime.state.FunctionSnapshotContext;
import org.apache.flink.runtime.state.filesystem.FsStateBackend;
import org.apache.flink.streaming.api.CheckpointingMode;
import org.apache.flink.streaming.api.checkpoint.CheckpointedFunction;
import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.environment.CheckpointConfig;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.functions.source.RichParallelSourceFunction;

/**
 * Desc: use a ListState from Operator State to simulate how a Kafka source maintains its offset
 *
 * @author tuzuoquan
 * @date 2022/5/16 12:12
 */
public class StateDemo02_OperatorState {
    public static void main(String[] args) throws Exception {
        //TODO 0.env
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setRuntimeMode(RuntimeExecutionMode.AUTOMATIC);
        // Parallelism 1 makes the output easy to follow
        env.setParallelism(1);
        // Take a checkpoint every 1 s
        env.enableCheckpointing(1000);
        env.setStateBackend(new FsStateBackend("file:///D:/ckp"));
        env.getCheckpointConfig().enableExternalizedCheckpoints(CheckpointConfig.ExternalizedCheckpointCleanup.RETAIN_ON_CANCELLATION);
        env.getCheckpointConfig().setCheckpointingMode(CheckpointingMode.EXACTLY_ONCE);
        // Fixed-delay restart strategy: on failure, restart up to 2 times with a 3 s delay each;
        // a failure after the 2nd restart makes the job exit
        env.setRestartStrategy(RestartStrategies.fixedDelayRestart(2, 3000));

        //TODO 1.source
        DataStreamSource<String> ds = env.addSource(new MyKafkaSource()).setParallelism(1);
        //TODO 2.transformation
        //TODO 3.sink
        ds.print();
        //TODO 4.execute
        env.execute();
    }

    public static class MyKafkaSource extends RichParallelSourceFunction<String> implements CheckpointedFunction {
        // volatile: cancel() is called from a different thread than run()
        private volatile boolean flag = true;
        // -1. Declare a ListState to hold the offset
        private ListState<Long> offsetState = null;
        // Current offset value
        private Long offset = 0L;

        // -2. Initialize/create the ListState
        @Override
        public void initializeState(FunctionInitializationContext context) throws Exception {
            ListStateDescriptor<Long> stateDescriptor = new ListStateDescriptor<>("offsetState", Long.class);
            offsetState = context.getOperatorStateStore().getListState(stateDescriptor);
            // After a restart, restore the offset once from the last completed checkpoint
            // (re-reading the state inside run() could roll the offset back to a stale value)
            if (context.isRestored()) {
                for (Long restored : offsetState.get()) {
                    offset = restored;
                }
            }
        }

        // -3. Use the state
        @Override
        public void run(SourceContext<String> ctx) throws Exception {
            while (flag) {
                // Emit and advance the offset under the checkpoint lock so a snapshot
                // never sees one without the other
                synchronized (ctx.getCheckpointLock()) {
                    offset += 1;
                    int subTaskId = getRuntimeContext().getIndexOfThisSubtask();
                    // "当前的offset值为" means "the current offset is"
                    ctx.collect("subTaskId:" + subTaskId + ",当前的offset值为:" + offset);
                }
                Thread.sleep(1000);
                // Simulate a failure
                if (offset % 5 == 0) {
                    throw new Exception("A bug occurred.....");
                }
            }
        }

        // -4. Persist the state
        // Called on every checkpoint: the state is snapshotted from memory into the checkpoint directory
        @Override
        public void snapshotState(FunctionSnapshotContext context) throws Exception {
            // Clear the old content, then store the current offset
            offsetState.clear();
            offsetState.add(offset);
        }

        @Override
        public void cancel() {
            flag = false;
        }
    }
}
```

Output:

```text
subTaskId:0,当前的offset值为:1
subTaskId:0,当前的offset值为:2
subTaskId:0,当前的offset值为:3
subTaskId:0,当前的offset值为:4
subTaskId:0,当前的offset值为:5
subTaskId:0,当前的offset值为:6
subTaskId:0,当前的offset值为:7
subTaskId:0,当前的offset值为:8
subTaskId:0,当前的offset值为:9
subTaskId:0,当前的offset值为:10
subTaskId:0,当前的offset值为:11
subTaskId:0,当前的offset值为:12
subTaskId:0,当前的offset值为:13
subTaskId:0,当前的offset值为:14
subTaskId:0,当前的offset值为:15
Exception in thread "main" org.apache.flink.runtime.client.JobExecutionException: Job execution failed.
    at org.apache.flink.runtime.jobmaster.JobResult.toJobExecutionResult(JobResult.java:147)
    at org.apache.flink.runtime.minicluster.MiniClusterJobClient.lambda$getJobExecutionResult$2(MiniClusterJobClient.java:119)
    ...
Caused by: org.apache.flink.runtime.JobException: Recovery is suppressed by FixedDelayRestartBackoffTimeStrategy(maxNumberRestartAttempts=2, backoffTimeMS=3000)
    at org.apache.flink.runtime.executiongraph.failover.flip1.ExecutionFailureHandler.handleFailure(ExecutionFailureHandler.java:116)
    at org.apache.flink.runtime.executiongraph.failover.flip1.ExecutionFailureHandler.getFailureHandlingResult(ExecutionFailureHandler.java:78)
    ...
```

(当前的offset值为 means "current offset value".) After the failures at offsets 5 and 10, the source continues at 6 and 11 instead of starting again from 1, because each restart restores the offset saved in the last checkpoint. The third failure, at 15, exceeds the 2 allowed restarts, so the job fails with "Recovery is suppressed".
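The recovery behavior can be simulated in plain Java under two simplifying assumptions. First, a checkpoint completes after every emitted record, which matches the 1 s checkpoint interval and 1 s sleep in the demo. Second, the restart delay is ignored. The `checkpointedOffset` variable stands in for the `ListState` contents in the checkpoint. All names are hypothetical, and this is not Flink's restart machinery.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java simulation (no Flink) of the Operator State demo: checkpointed offset,
// injected failures every `failEvery` records, and a fixed number of allowed restarts.
final class OffsetRecoverySketch {

    // Runs the simulated source until the restart budget is exhausted and returns
    // everything it emitted, followed by a final failure marker.
    static List<String> run(int maxRestarts, long failEvery) {
        List<String> emitted = new ArrayList<>();
        long checkpointedOffset = 0L; // stand-in for the ListState contents in the last checkpoint
        int restarts = 0;
        while (true) {
            // Like initializeState() on (re)start: restore the offset from the checkpoint.
            long offset = checkpointedOffset;
            try {
                while (true) {
                    offset += 1;
                    emitted.add("subTaskId:0,offset=" + offset);
                    // Assumption: a checkpoint (snapshotState) completes after every record.
                    checkpointedOffset = offset;
                    if (offset % failEvery == 0) {
                        throw new IllegalStateException("simulated bug at offset " + offset);
                    }
                }
            } catch (IllegalStateException e) {
                if (restarts == maxRestarts) {
                    // Comparable to "Recovery is suppressed by FixedDelayRestartBackoffTimeStrategy".
                    emitted.add("job failed after " + restarts + " restarts");
                    return emitted;
                }
                restarts++; // the real strategy would also wait 3 s here
            }
        }
    }

    public static void main(String[] args) {
        run(2, 5).forEach(System.out::println);
    }
}
```

With `run(2, 5)` this emits offsets 1 to 15 and then the failure marker, like the demo. If a failure happened before the latest offset was checkpointed, the restored offset would be older and those records would be emitted again.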
