Keras CIFAR-10 Image Classification: ResNet

Posted by 风信子的猫Redamancy


Preface: this article was compiled by the editors of 小常识网 (cha138.com). It covers Keras CIFAR-10 image classification with ResNet, and we hope it is of some reference value to you.


Besides PyTorch, we can also use TensorFlow for image classification, and Keras, TensorFlow's high-level API, makes it especially simple. Below we use Keras to classify the CIFAR-10 dataset.

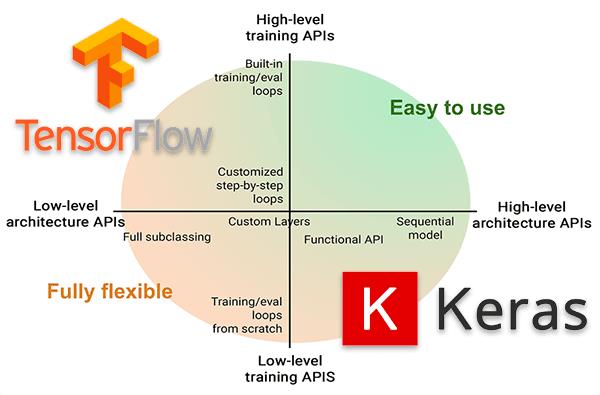

Introduction to Keras

Keras is a widely used deep learning framework for Python. It is powerful and can express almost every common type of model.

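Features of Keras

1. The same code runs on both CPU and GPU;

2. Models can be defined with the functional API or with the Sequential class;

3. It supports arbitrary network architectures, such as multi-input and multi-output models;

4. It supports convolutional networks, recurrent networks, and combinations of the two.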

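Keras and backend engines

Keras is a model-level library: it provides only high-level building blocks and does not itself handle low-level operations such as tensor manipulation and differentiation. Yet all the data Keras processes is represented as tensors, so how does Keras perform tensor operations without implementing them?

Keras relies on a specialized tensor library, the backend engine, and delegates all tensor operations to it. That is why installing Keras also requires TensorFlow or Theano. TensorFlow, Theano, and CNTK are all backend engines for numerical tensor computation. (Note that since Keras 2.4 only TensorFlow is supported as a backend, and Keras 3 supports TensorFlow, JAX, and PyTorch.)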

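Keras design principles

- User friendliness: Keras is an API designed for human beings, not machines, and user experience is always front and center. Keras follows best practices for reducing cognitive load: it offers consistent, simple APIs, greatly reduces the work required for common use cases, and gives clear, actionable feedback on errors.

- Modularity: a model is understood as a sequence of layers or a graph of operations, built from fully configurable modules that can be plugged together with as few restrictions as possible. In particular, layers, loss functions, optimizers, initialization schemes, activation functions, and regularization schemes are all standalone modules you can combine to build your own models.

- Easy extensibility: adding a new module is very easy; just write a new class or function modeled on the existing ones. This makes Keras well suited to advanced research.

- Work with Python: Keras has no separate model configuration file format (unlike Caffe). Models are described in Python code, which is more compact, easier to debug, and easy to extend.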

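Installing Keras

Installation is simple: just install keras with pip. Keras needs a backend engine to run, so also install TensorFlow (pip install tensorflow) if it is not already present.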

pip install keras

Importing libraries

import keras
from keras.models import Sequential
from keras.datasets import cifar10
from keras.layers import Conv2D, MaxPooling2D, Dropout, Flatten, Dense, Activation
# adam_v2 only exists in older Keras 2.x releases; newer versions use: from keras.optimizers import Adam
from keras.optimizers import adam_v2
# plot_model and to_categorical are exported directly from keras.utils
# (older releases also had them under keras.utils.vis_utils / keras.utils.np_utils)
from keras.utils import plot_model, to_categorical
from keras.callbacks import ModelCheckpoint
import matplotlib.pyplot as plt
import numpy as np
import os
import shutil
import matplotlib
matplotlib.style.use('ggplot')
# Jupyter-only magic: render plots inline in the notebook
%matplotlib inline
plt.rcParams['figure.figsize'] = (10.0, 8.0)  # set default size of plots
plt.rcParams['image.interpolation'] = 'nearest'
plt.rcParams['image.cmap'] = 'gray'

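Controlling GPU memory (optional)

This snippet uses TensorFlow to choose which GPU to use (useful on machines with several GPUs) and to control how much GPU memory is used.

The following setting enables dynamic allocation, so GPU memory is allocated as needed instead of all at once: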

config.gpu_options.allow_growth = True

The following setting caps the memory used by this process at 50% of the GPU's memory:

config.gpu_options.per_process_gpu_memory_fraction = 0.5

import tensorflow as tf
import os
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'  # hide TensorFlow INFO and WARNING log messages
os.environ["CUDA_DEVICE_ORDER"] = "PCI_BUS_ID"  # number GPUs in PCI bus order, as nvidia-smi does
# The GPU id to use, usually either "0" or "1"
os.environ["CUDA_VISIBLE_DEVICES"] = "0"
config = tf.compat.v1.ConfigProto()  # on TensorFlow 1.x: config = tf.ConfigProto()
# config.gpu_options.per_process_gpu_memory_fraction = 0.5  # cap memory usage at 50%
config.gpu_options.allow_growth = True  # allocate GPU memory on demand
session = tf.compat.v1.Session(config=config)

Loading the CIFAR-10 dataset

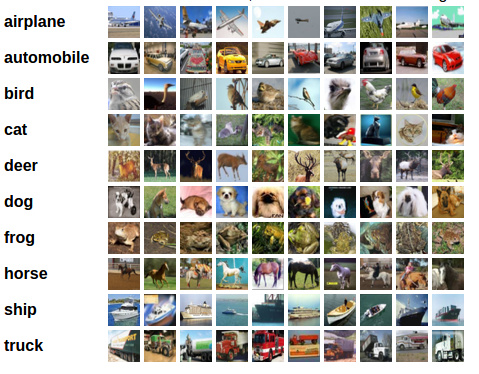

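CIFAR-10 is a small dataset for recognizing everyday objects, compiled by Hinton's students Alex Krizhevsky and Ilya Sutskever. It contains RGB color images in 10 classes: airplane, automobile, bird, cat, deer, dog, frog, horse, ship, and truck. The images are 32×32, and the dataset has 50000 training images and 10000 test images in total.

Compared with the MNIST dataset, CIFAR-10 differs in the following ways:

- CIFAR-10 images are 3-channel RGB color images, while MNIST images are grayscale.

- CIFAR-10 images are 32×32, slightly larger than MNIST's 28×28.

- Unlike handwritten digits, CIFAR-10 contains real-world objects. The images are noisy, and the objects vary widely in scale and appearance, which makes recognition much harder.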

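num_classes = 10  # number of classes
(x_train, y_train), (x_val, y_val) = cifar10.load_data()  # the official test split serves as the validation set
print("Training set shape:", x_train.shape)
print("Validation set shape:", x_val.shape)

Training set shape: (50000, 32, 32, 3)

Validation set shape: (10000, 32, 32, 3)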

Visualizing the data

class_names = ['airplane', 'automobile', 'bird', 'cat', 'deer',
               'dog', 'frog', 'horse', 'ship', 'truck']
fig = plt.figure(figsize=(20, 5))
for i in range(num_classes):
    ax = fig.add_subplot(2, 5, 1 + i, xticks=[], yticks=[])
    idx = np.where(y_train[:] == i)[0]  # indices of all samples of class i
    features_idx = x_train[idx, ::]  # images of class i
    img_num = np.random.randint(features_idx.shape[0])  # pick a random image
    im = features_idx[img_num, ::]
    ax.set_title(class_names[i])
    ax.imshow(im)
plt.show()

Data preprocessing

x_train = x_train.astype('float32')/255

x_val = x_val.astype('float32')/255

# Convert the class vectors to binary class matrices, i.e. one-hot encoding

y_train = to_categorical(y_train, num_classes)

y_val = to_categorical(y_val, num_classes)
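For reference, the one-hot encoding that to_categorical performs here can be reproduced with plain NumPy. This is a minimal sketch for illustration; the helper name one_hot is hypothetical and not part of Keras.

```python
import numpy as np

def one_hot(labels, num_classes):
    """Hypothetical helper: map integer labels of shape (N,) or (N, 1) to an (N, num_classes) float32 matrix."""
    labels = np.asarray(labels).reshape(-1)  # CIFAR-10 labels come with shape (N, 1)
    # Row k of the identity matrix is exactly the one-hot vector for class k
    return np.eye(num_classes, dtype="float32")[labels]

y = np.array([[3], [0], [9]])
print(one_hot(y, 10))
```

Indexing the identity matrix with the label array picks one row per sample, which is why no explicit loop is needed.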

output_dir = './output'  # output directory
if not os.path.exists(output_dir):
    os.mkdir(output_dir)
    print('%s created' % output_dir)
else:
    # shutil.rmtree(output_dir)
    # print('%s already existed, but never mind, we deleted it' % output_dir)
    # os.mkdir(output_dir)
    print('%s folder already exists' % output_dir)
model_name = 'resnet'

On the first run this prints "./output created"; on later runs it prints "./output folder already exists".

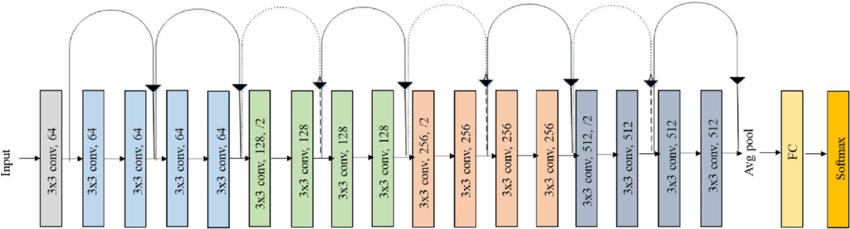

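The ResNet network

While everyone was still marveling at GoogLeNet's Inception structure, researchers at Microsoft Research Asia were designing ResNet, a deeper yet structurally simpler network. With it they won first place in both the classification and the object detection tasks of that year's ImageNet competition (ILSVRC 2015), as well as first place in object detection and image segmentation on the COCO dataset.

For a detailed walkthrough of the paper, see my other post, [Paper skim] ResNet: Deep Residual Networks.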

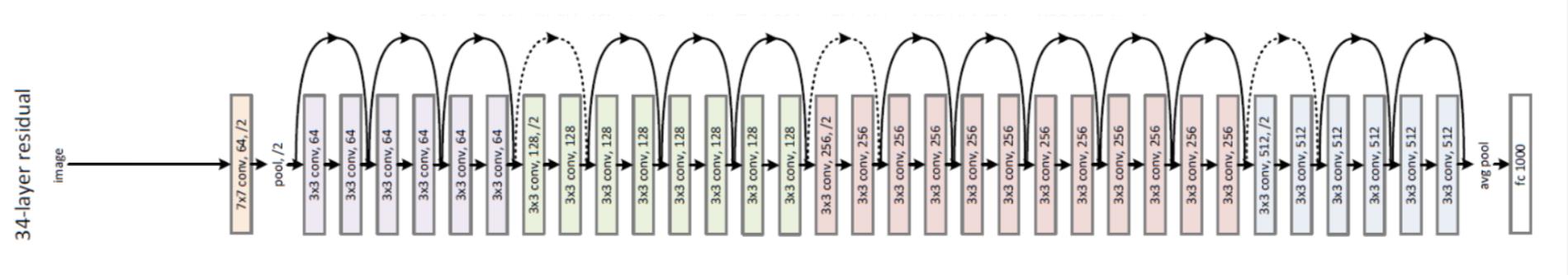

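The figure below is a simplified diagram of the 18-layer ResNet model, along with the ResNet-34 model.

ResNet has the following highlights:

(1) It introduces the residual structure and builds extremely deep networks (over 1000 layers).

(2) It uses Batch Normalization to speed up training (and drops dropout).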
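To make point (2) concrete, here is a minimal NumPy sketch of the training-time Batch Normalization transform: each channel is normalized with the batch mean and variance, then scaled by a learnable γ and shifted by a learnable β. It is an illustration only; it omits the moving mean and variance that Keras's BatchNormalization layer also tracks for inference, and the function name is our own. The eps default of 1e-3 matches Keras's default epsilon.

```python
import numpy as np

def batch_norm_train(x, gamma, beta, eps=1e-3):
    """Normalize an NHWC batch per channel using batch statistics (training mode only)."""
    mean = x.mean(axis=(0, 1, 2), keepdims=True)  # per-channel mean over batch, height and width
    var = x.var(axis=(0, 1, 2), keepdims=True)    # per-channel (biased) variance
    x_hat = (x - mean) / np.sqrt(var + eps)       # roughly zero mean, unit variance
    return gamma * x_hat + beta                   # learnable scale and shift

rng = np.random.default_rng(0)
x = rng.normal(loc=5.0, scale=3.0, size=(8, 4, 4, 16))  # 8 feature maps of 4x4 with 16 channels
y = batch_norm_train(x, gamma=np.ones(16), beta=np.zeros(16))
print(y.mean(axis=(0, 1, 2))[:3], y.std(axis=(0, 1, 2))[:3])
```

Whatever the scale of the incoming activations, each channel leaves the layer with a controlled distribution, which is what lets deep stacks train faster.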

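Before ResNet, conventional convolutional neural networks were built by stacking a series of convolutional and downsampling layers. But once the stack reaches a certain depth, two problems appear:

(1) Vanishing or exploding gradients.

(2) The degradation problem: beyond a certain depth, adding more layers makes even the training error go up.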
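A back-of-the-envelope illustration of problem (1), under the simplifying assumption of a plain chain of sigmoid layers with unit weights: by the chain rule, the gradient reaching the first layer is a product of the per-layer derivatives, and the sigmoid's derivative never exceeds 0.25, so the product shrinks geometrically with depth.

```python
import math

def sigmoid_grad(z):
    """Derivative of the sigmoid; its maximum value 0.25 is reached at z = 0."""
    s = 1.0 / (1.0 + math.exp(-z))
    return s * (1.0 - s)

max_local_grad = sigmoid_grad(0.0)
for depth in (5, 10, 20):
    # Upper bound on the gradient factor after `depth` sigmoid layers (unit weights assumed)
    print(depth, max_local_grad ** depth)
```

Exploding gradients are the mirror case, where the per-layer factors are consistently greater than 1 and the product grows geometrically instead.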

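Residual structure

According to the ResNet paper, vanishing and exploding gradients are largely addressed by normalized initialization and by Batch Normalization (BN) layers inside the network, while the residual structure alleviates the degradation problem. Instead of fitting the desired mapping H(x) directly, the stacked layers fit the residual F(x) = H(x) - x, and the block outputs F(x) + x.

One detail is important here: the second layer of the block has no ReLU of its own. Its output is first added to x, and the ReLU is applied after the addition.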
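The computation out = ReLU(F(x) + x) can be sketched in NumPy, with dense matrices standing in for the two 3x3 convolutions and BN left out (both simplifications for illustration). It also shows why the identity mapping is easy to represent: if the residual branch's weights are all zero, the block passes non-negative inputs through unchanged.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def residual_block(x, w1, w2):
    """out = relu(F(x) + x) with F(x) = w2 @ relu(w1 @ x).

    Dense matrices stand in for the two 3x3 conv layers (a simplification).
    The second layer has no ReLU before the addition.
    """
    f = w2 @ relu(w1 @ x)  # residual branch F(x)
    return relu(f + x)     # add the shortcut, then apply ReLU

rng = np.random.default_rng(0)
x = relu(rng.normal(size=8))  # non-negative, like the output of a previous ReLU
zeros = np.zeros((8, 8))
print(np.allclose(residual_block(x, zeros, zeros), x))  # zero weights -> identity mapping
```

A plain (non-residual) stack would instead have to learn weights that reproduce x exactly, which is harder for the optimizer than driving F(x) toward zero.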

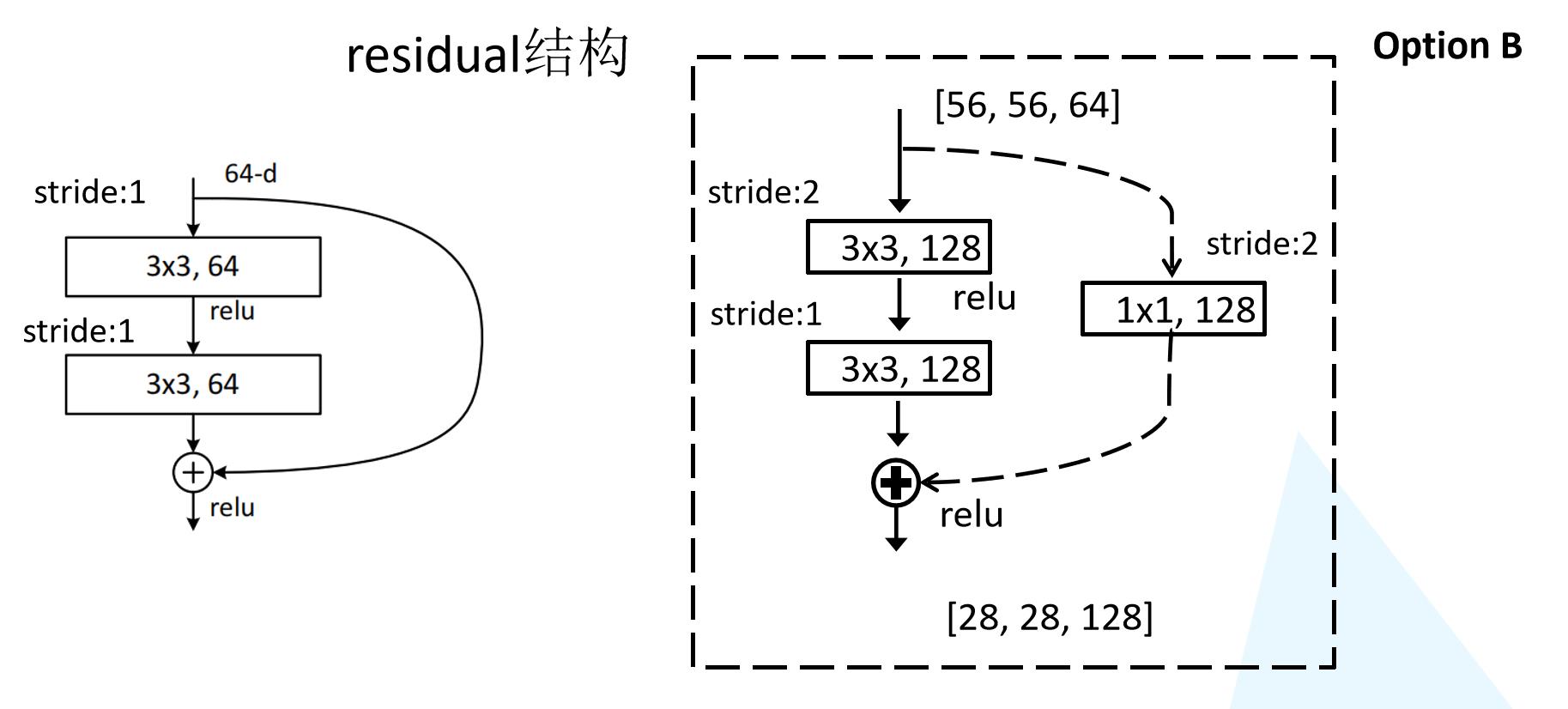

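Residual structure of ResNet18/34

Let's first analyze the residual structure of ResNet18/34. As shown below, its main branch consists of two 3x3 convolutional layers, and the connecting line on the right is the shortcut branch (for the main branch's output to be added to the shortcut's output, the two feature maps must have exactly the same shape). Some shortcuts are drawn as dashed lines. They appear where the shape changes, and the paper handles them with a 1x1 convolution of stride 2 that halves the height and width and increases the number of channels to match the main branch. The figure below shows the residual structure in detail.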
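The need for the 1x1, stride-2 convolution on the dashed shortcut can be checked with shape arithmetic. The sketch below uses the output-size rule for padding='same' (spatial size = ceil(input / stride)), which is the padding used by the Keras code later in this post; the helper names are our own.

```python
import math

def conv_same_output(h, w, stride, filters):
    """Output (H, W, C) of a conv layer with padding='same': spatial size = ceil(size / stride)."""
    return (math.ceil(h / stride), math.ceil(w / stride), filters)

def block_shapes(h, w, c_in, filters, downsample):
    """Return (main-branch shape, shortcut shape) for a basic residual block."""
    stride = 2 if downsample else 1
    # Main branch: 3x3 conv (stride 1 or 2), then 3x3 conv (stride 1)
    main = conv_same_output(h, w, stride, filters)
    main = conv_same_output(main[0], main[1], 1, filters)
    # Shortcut: 1x1 conv with the same stride (dashed line) or identity (solid line)
    shortcut = conv_same_output(h, w, stride, filters) if downsample else (h, w, c_in)
    return main, shortcut

print(block_shapes(32, 32, 64, 128, downsample=True))   # shapes match, so they can be added
print(block_shapes(32, 32, 64, 128, downsample=False))  # identity keeps 64 channels: mismatch
```

With an identity shortcut the channel counts differ (64 vs 128), so the addition would fail; the projection shortcut fixes both the spatial size and the depth.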

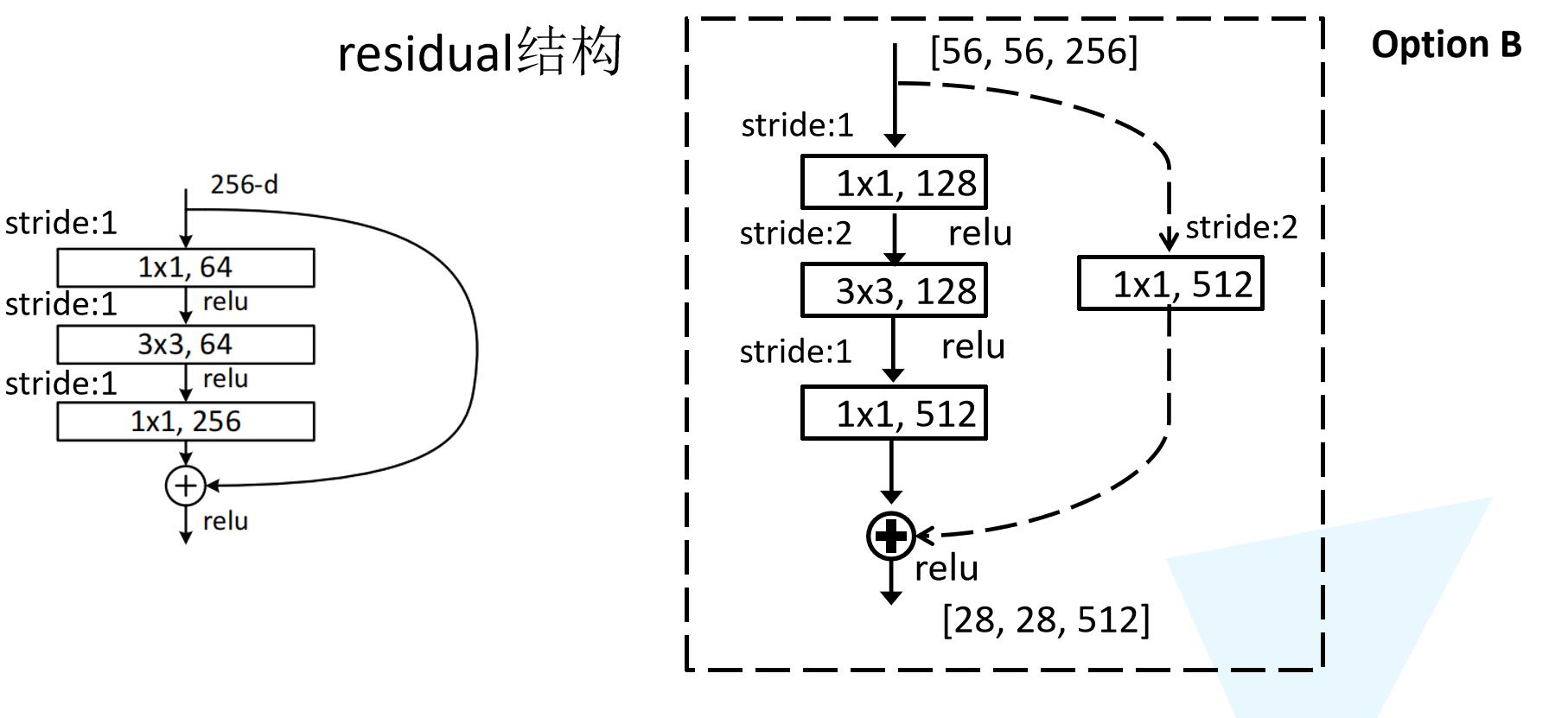

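Bottleneck structure of ResNet50/101/152

Next, let's look at the residual structure of ResNet50/101/152, shown below. Its main branch has three convolutional layers: a 1x1 conv that compresses the channel dimension, a 3x3 conv, and a 1x1 conv that restores the channel dimension (the first and second layers use the same number of kernels, and the third uses 4 times as many as the first). This design is called the bottleneck block.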
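The benefit of the bottleneck design shows up when counting convolution weights (biases and BN parameters ignored). This is a hypothetical illustration; the 64/256 channel widths are those of the conv2_x stage in the paper.

```python
def conv_weights(k, c_in, c_out):
    """Number of weights in a k x k convolution without bias."""
    return k * k * c_in * c_out

# Basic block (ResNet-18/34) on 64 channels: two 3x3 convs
basic_64 = conv_weights(3, 64, 64) + conv_weights(3, 64, 64)

# Bottleneck block (ResNet-50/101/152) on 256 channels:
# 1x1 reduces 256 -> 64, 3x3 keeps 64, 1x1 restores 64 -> 256 (4x the first layer's kernels)
bottleneck_256 = conv_weights(1, 256, 64) + conv_weights(3, 64, 64) + conv_weights(1, 64, 256)

# For comparison: a basic block applied directly to 256 channels
basic_256 = 2 * conv_weights(3, 256, 256)

print(basic_64, bottleneck_256, basic_256)  # 73728 69632 1179648
```

The bottleneck block works on 4x wider feature maps with slightly fewer weights than a 64-channel basic block, and about 17x fewer than a basic block at full 256-channel width.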

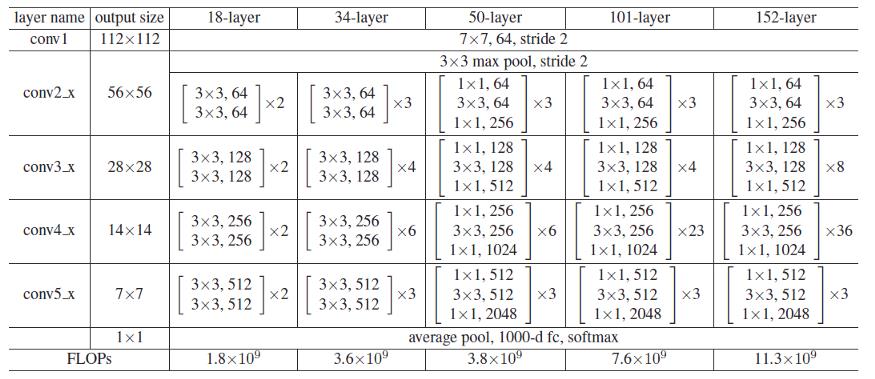

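ResNet network configurations

This table, from the paper, lists the deeper residual networks used on ImageNet. For each residual structure it gives the kernel size and the number of kernels on the main branch, and "xN" means the block is repeated N times.

In ResNet18/34/50/101/152, the first residual block of each of conv3_x, conv4_x and conv5_x in the table is a dashed-line block, because it has to change the shape of the input feature map: it halves the height and width and adjusts the channel depth to what the following blocks expect. (In ResNet50/101/152, the first block of conv2_x also needs a projection shortcut, because it changes the channels from 64 to 256 without downsampling.)

- ResNet-50: each 2-layer block of the 34-layer network is replaced with a 3-layer bottleneck block, giving a 50-layer ResNet. 1x1 convolutions (projection shortcuts) are used to increase dimensions. This model has 3.8 billion FLOPs.

- ResNet-101/152: the 101-layer and 152-layer ResNets are built by using more 3-layer bottleneck blocks. Notably, despite the much greater depth, the 152-layer ResNet (11.3 billion FLOPs) still has lower complexity than VGG-16/19 (15.3/19.6 billion FLOPs).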
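The layer counts in the configuration table can be reproduced from the number of blocks per stage (conv2_x to conv5_x): each basic block contributes 2 weighted layers, each bottleneck block 3, plus the initial 7x7 conv and the final fully connected layer. A small sketch (the dictionary and function names are our own; shortcut 1x1 convs are not counted, following the usual convention):

```python
# Blocks per stage (conv2_x, conv3_x, conv4_x, conv5_x) from the ResNet paper's table
RESNET_CONFIGS = {
    18: ("basic", [2, 2, 2, 2]),
    34: ("basic", [3, 4, 6, 3]),
    50: ("bottleneck", [3, 4, 6, 3]),
    101: ("bottleneck", [3, 4, 23, 3]),
    152: ("bottleneck", [3, 8, 36, 3]),
}

def weighted_depth(block_type, blocks_per_stage):
    """Weighted layers = layers per block * number of blocks + stem conv + final FC layer."""
    layers_per_block = 2 if block_type == "basic" else 3
    return layers_per_block * sum(blocks_per_stage) + 2

for name, (block_type, blocks) in RESNET_CONFIGS.items():
    print(name, weighted_depth(block_type, blocks))
```

ResNet-34 and ResNet-50 share the same block counts; the extra 16 layers come entirely from swapping 2-layer blocks for 3-layer bottlenecks.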

input_shape = (32, 32, 3)
from keras.layers import BatchNormalization, AveragePooling2D, Input
from keras.models import Model
from keras.regularizers import l2
from keras import layers

def conv2d_bn(x, filters, kernel_size, weight_decay=.0, strides=(1, 1)):
    """Conv2D ('same' padding, no bias, L2 weight decay) followed by BatchNormalization."""
    layer = Conv2D(filters=filters,
                   kernel_size=kernel_size,
                   strides=strides,
                   padding='same',
                   use_bias=False,  # the bias is redundant right before BN
                   kernel_regularizer=l2(weight_decay)
                   )(x)
    layer = BatchNormalization()(layer)
    return layer

def conv2d_bn_relu(x, filters, kernel_size, weight_decay=.0, strides=(1, 1)):
    """Conv2D + BatchNormalization + ReLU."""
    layer = conv2d_bn(x, filters, kernel_size, weight_decay, strides)
    layer = Activation('relu')(layer)
    return layer

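def ResidualBlock(x, filters, kernel_size, weight_decay, downsample=True):
    """Basic two-layer residual block; downsample=True uses the dashed-line (projection) shortcut."""
    if downsample:
        # residual_x = conv2d_bn_relu(x, filters, kernel_size=1, strides=2)
        # Projection shortcut: 1x1 conv with stride 2 so its output matches the main branch's shape
        residual_x = conv2d_bn(x, filters, kernel_size=1, strides=2)
        stride = 2
    else:
        residual_x = x  # identity shortcut
        stride = 1
    # First layer of the main branch: conv + BN + ReLU (strided when downsampling)
    residual = conv2d_bn_relu(x,
                              filters=filters,
                              kernel_size=kernel_size,
                              weight_decay=weight_decay,
                              strides=stride,
                              )
    # Second layer: conv + BN, with no ReLU before the addition
    residual = conv2d_bn(residual,
                         filters=filters,
                         kernel_size=kernel_size,
                         weight_decay=weight_decay,
                         strides=1,
                         )
    out = layers.add([residual_x, residual])
    out = Activation('relu')(out)  # ReLU is applied after adding the shortcut
    return out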

def ResNet18(classes, input_shape, weight_decay=1e-4):
    inputs = Input(shape=input_shape)
    x = inputs
    # ImageNet stem (7x7 stride-2 conv + max pooling), not used for 32x32 CIFAR images:
    # x = conv2d_bn_relu(x, filters=64, kernel_size=(7, 7), weight_decay=weight_decay, strides=(2, 2))
    # x = MaxPool2D(pool_size=(3, 3), strides=(2, 2), padding='same')(x)
    x = conv2d_bn_relu(x, filters=64, kernel_size=(3, 3), weight_decay=weight_decay, strides=(1, 1))
    # conv 2
    x = ResidualBlock(x, filters=64, kernel_size=(3, 3), weight_decay=weight_decay, downsample=False)
    x = ResidualBlock(x, filters=64, kernel_size=(3, 3), weight_decay=weight_decay, downsample=False)
    # conv 3
    x = ResidualBlock(x, filters=128, kernel_size=(3, 3), weight_decay=weight_decay, downsample=True)
    x = ResidualBlock(x, filters=128, kernel_size=(3, 3), weight_decay=weight_decay, downsample=False)
    # conv 4
    x = ResidualBlock(x, filters=256, kernel_size=(3, 3), weight_decay=weight_decay, downsample=True)
    x = ResidualBlock(x, filters=256, kernel_size=(3, 3), weight_decay=weight_decay, downsample=False)
    # conv 5
    x = ResidualBlock(x, filters=512, kernel_size=(3, 3), weight_decay=weight_decay, downsample=True)
    x = ResidualBlock(x, filters=512, kernel_size=(3, 3), weight_decay=weight_decay, downsample=False)
    x = AveragePooling2D(pool_size=(4, 4), padding='valid')(x)
    x = Flatten()(x)
    x = Dense(classes, activation='softmax')(x)
    model = Model(inputs, x, name='ResNet18')
    return model
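Why ResNet18 above ends with AveragePooling2D(pool_size=(4, 4)): the CIFAR version drops the 7x7 stride-2 stem and the max pooling (the commented-out lines) in favor of a stride-1 3x3 conv, so a 32x32 input is halved only by the three downsample=True blocks. A quick trace of the feature-map shape, as a standalone sketch:

```python
import math

# Spatial size and channels after the stride-1 3x3 stem conv on a 32x32 CIFAR image
size, channels = 32, 64

# (filters, downsample) of the first block in each stage, as in ResNet18() above;
# the second block of each stage keeps the shape unchanged
stages = [(64, False), (128, True), (256, True), (512, True)]

for filters, downsample in stages:
    if downsample:
        size = math.ceil(size / 2)  # stride-2 conv with padding='same'
    channels = filters
    print(f"{filters} filters -> {size}x{size}x{channels}")

# AveragePooling2D(pool_size=(4, 4)) then turns the final 4x4x512 map into 1x1x512
```

The same reasoning explains pool_size=(8, 8) in the three-stage CIFAR builder, whose last stage ends at 8x8.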

def ResNetForCIFAR10(classes, name, input_shape, block_layers_num, weight_decay):
    """Follows the layout of the paper's CIFAR-10 ResNets: 3 stages of 16/32/64 filters."""
    inputs = Input(shape=input_shape)
    x = inputs
    x = conv2d_bn_relu(x, filters=16, kernel_size=(3, 3), weight_decay=weight_decay, strides=(1, 1))
    # conv 2: 32x32x16
    for i in range(block_layers_num):
        x = ResidualBlock(x, filters=16, kernel_size=(3, 3), weight_decay=weight_decay, downsample=False)
    # conv 3: 16x16x32 (the first block downsamples)
    x = ResidualBlock(x, filters=32, kernel_size=(3, 3), weight_decay=weight_decay, downsample=True)
    for i in range(block_layers_num - 1):
        x = ResidualBlock(x, filters=32, kernel_size=(3, 3), weight_decay=weight_decay, downsample=False)
    # conv 4: 8x8x64 (the first block downsamples)
    x = ResidualBlock(x, filters=64, kernel_size=(3, 3), weight_decay=weight_decay, downsample=True)
    for i in range(block_layers_num - 1):
        x = ResidualBlock(x, filters=64, kernel_size=(3, 3), weight_decay=weight_decay, downsample=False)
    x = AveragePooling2D(pool_size=(8, 8), padding='valid')(x)  # average over the whole 8x8 map
    x = Flatten()(x)
    x = Dense(classes, activation='softmax')(x)
    model = Model(inputs, x, name=name)
    return model

def ResNet20ForCIFAR10(classes, input_shape, weight_decay):
    return ResNetForCIFAR10(classes, 'resnet20', input_shape, 3, weight_decay)

def ResNet32ForCIFAR10(classes, input_shape, weight_decay):
    return ResNetForCIFAR10(classes, 'resnet32', input_shape, 5, weight_decay)

def ResNet56ForCIFAR10(classes, input_shape, weight_decay):
    return ResNetForCIFAR10(classes, 'resnet56', input_shape, 9, weight_decay)
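The names ResNet20/32/56 follow the depth rule for the CIFAR-10 networks in the ResNet paper: 3 stages of n blocks with 2 conv layers each, plus the first conv layer and the final Dense layer, giving 6n + 2 layers. That is why block_layers_num is 3, 5 and 9 above. A tiny sketch of the rule (the function name is our own):

```python
def cifar_resnet_depth(n):
    """Depth of a CIFAR ResNet with n basic blocks in each of its 3 stages."""
    return 6 * n + 2

for name, n in (("resnet20", 3), ("resnet32", 5), ("resnet56", 9)):
    print(name, cifar_resnet_depth(n))

# Inverse direction: blocks per stage needed for the paper's 110-layer model
print((110 - 2) // 6)  # 18
```

The 1x1 projection convs on the two downsampling shortcuts are not counted in this depth, matching the paper's convention.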

input_shape = (32,32,3)

weight_decay = 1e-4

model = ResNet32ForCIFAR10(input_shape=(32, 32, 3), classes=num_classes, weight_decay=weight_decay)

model.summary()

Model: "resnet32"
__________________________________________________________________________________________________
Layer (type) Output Shape Param # Connected to
==================================================================================================
input_1 (InputLayer) [(None, 32, 32, 3)] 0 []
conv2d (Conv2D) (None, 32, 32, 16) 432 ['input_1[0][0]']
batch_normalization (BatchNormalization) (None, 32, 32, 16) 64 ['conv2d[0][0]']
activation (Activation) (None, 32, 32, 16) 0 ['batch_normalization[0][0]']
conv2d_1 (Conv2D) (None, 32, 32, 16) 2304 ['activation[0][0]']
batch_normalization_1 (BatchNormalization) (None, 32, 32, 16) 64 ['conv2d_1[0][0]']
activation_1 (Activation) (None, 32, 32, 16) 0 ['batch_normalization_1[0][0]']
conv2d_2 (Conv2D) (None, 32, 32, 16) 2304 ['activation_1[0][0]']
batch_normalization_2 (BatchNormalization) (None, 32, 32, 16) 64 ['conv2d_2[0][0]']
add (Add) (None, 32, 32, 16) 0 ['activation[0][0]', 'batch_normalization_2[0][0]']
activation_2 (Activation) (None, 32, 32, 16) 0 ['add[0][0]']
conv2d_3 (Conv2D) (None, 32, 32, 16) 2304 ['activation_2[0][0]']
batch_normalization_3 (BatchNormalization) (None, 32, 32, 16) 64 ['conv2d_3[0][0]']
activation_3 (Activation) (None, 32, 32, 16) 0 ['batch_normalization_3[0][0]']
conv2d_4 (Conv2D) (None, 32, 32, 16) 2304 ['activation_3[0][0]']
batch_normalization_4 (BatchNormalization) (None, 32, 32, 16) 64 ['conv2d_4[0][0]']
add_1 (Add) (None, 32, 32, 16) 0 ['activation_2[0][0]', 'batch_normalization_4[0][0]']
activation_4 (Activation) (None, 32, 32, 16) 0 ['add_1[0][0]']
conv2d_5 (Conv2D) (None, 32, 32, 16) 2304 ['activation_4[0][0]']
batch_normalization_5 (BatchNormalization) (None, 32, 32, 16) 64 ['conv2d_5[0][0]']
activation_5 (Activation) (None, 32, 32, 16) 0 ['batch_normalization_5[0][0]']
conv2d_6 (Conv2D) (None, 32, 32, 16) 2304 ['activation_5[0][0]']
batch_normalization_6 (BatchNormalization) (None, 32, 32, 16) 64 ['conv2d_6[0][0]']
add_2 (Add) (None, 32, 32, 16) 0 ['activation_4[0][0]', 'batch_normalization_6[0][0]']
activation_6 (Activation) (None, 32, 32, 16) 0 ['add_2[0][0]']
conv2d_7 (Conv2D) (None, 32, 32, 16) 2304 ['activation_6[0][0]']
batch_normalization_7 (BatchNormalization) (None, 32, 32, 16) 64 ['conv2d_7[0][0]']
activation_7 (Activation) (None, 32, 32, 16) 0 ['batch_normalization_7[0][0]']
conv2d_8 (Conv2D) (None, 32, 32, 16) 2304 ['activation_7[0][0]']
batch_normalization_8 (BatchNormalization) (None, 32, 32, 16) 64 ['conv2d_8[0][0]']
add_3 (Add) (None, 32, 32, 16) 0 ['activation_6[0][0]', 'batch_normalization_8[0][0]']
activation_8 (Activation) (None, 32, 32, 16) 0 ['add_3[0][0]']
conv2d_9 (Conv2D) (None, 32, 32, 16) 2304 ['activation_8[0][0]']
batch_normalization_9 (BatchNormalization) (None, 32, 32, 16) 64 ['conv2d_9[0][0]']
activation_9 (Activation) (None, 32, 32, 16) 0 ['batch_normalization_9[0][0]']
conv2d_10 (Conv2D) (None, 32, 32, 16) 2304 ['activation_9[0][0]']
batch_normalization_10 (BatchNormalization) (None, 32, 32, 16) 64 ['conv2d_10[0][0]']
add_4 (Add) (None, 32, 32, 16) 0 ['activation_8[0][0]', 'batch_normalization_10[0][0]']

activation_10 (Activatio
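The Param # column above can be checked by hand. The convolutions use use_bias=False, so a k x k conv has k·k·C_in·C_out weights, and each BatchNormalization layer holds 4 values per channel (γ, β, moving mean and moving variance, of which only γ and β are trainable). The sketch below recomputes the per-layer counts for the ResNetForCIFAR10 builder. It mirrors the layer order of that code but is our own bookkeeping, not a Keras API; its total should agree with the Total params line that model.summary() prints at the end of the table.

```python
def cifar_resnet_params(n, num_classes=10, in_channels=3):
    """Per-layer parameter counts for ResNetForCIFAR10 with n blocks per stage."""
    counts = []

    def conv_bn(k, c_in, c_out):
        counts.append(("conv", k * k * c_in * c_out))  # use_bias=False: weights only
        counts.append(("bn", 4 * c_out))               # gamma, beta, moving mean, moving variance

    conv_bn(3, in_channels, 16)  # stem conv
    c = 16
    for filters in (16, 32, 64):
        for i in range(n):
            if filters != 16 and i == 0:
                conv_bn(1, c, filters)  # 1x1 stride-2 projection shortcut (created first)
            conv_bn(3, c, filters)
            conv_bn(3, filters, filters)
            c = filters
    counts.append(("dense", c * num_classes + num_classes))  # Dense weights + bias
    return counts

param_table = cifar_resnet_params(5)  # resnet32
print(param_table[:4])                # matches conv2d, batch_normalization, conv2d_1, batch_normalization_1
print(sum(p for _, p in param_table))
```

The variable is named param_table rather than layers so that it does not shadow the keras layers module imported earlier.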