Cuda 学习笔记

Posted xbw12138

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了Cuda 学习笔记相关的知识,希望对你有一定的参考价值。

Cuda 学习笔记 (一)

1.Hello World

#include<stdio.h>

__global__ void helloFromGPU(void)

printf("Hello World from GPU!\\n");

int main(void)

printf("Hello World from CPU!\\n");

helloFromGPU<<<1,10>>>();

cudaDeviceReset();

return 0;

其中__global__表示后面的函数交由GPU处理,通常GPU编程包含以下五步

- (1)分配GPU内存

- (2)将CPU的内容拷贝给GPU内存

- (3)调用CUDA的内核函数进行处理

- (4)将GPU处理完的数据拷贝给CPU

- (5)释放GPU的内存

上述代码中__global__之后的内容为内核函数<<<>>>代表从主线程到设备端代码的调用,里面的参数10代表调用10个线程。

打开终端,进入cu文件所在的文件夹输入

编译

nvcc -o helloworld helloworld.cu

执行

./helloworld

结果

Hello World from CPU!

Hello World from GPU!

Hello World from GPU!

Hello World from GPU!

Hello World from GPU!

Hello World from GPU!

Hello World from GPU!

Hello World from GPU!

Hello World from GPU!

Hello World from GPU!

Hello World from GPU!

2.Python 中安装 pycuda

下载代码

git clone https://github.com/inducer/pycuda.git

编译

cd pycuda

python setup.py install

测试

python

import pycuda

无报错则安装成功

3.GPU信息的输出

#include<iostream>

using namespace std;

int main(void)

int dev = 0;

cudaDeviceProp devProp;

cudaGetDeviceProperties(&devProp, dev);

cout << "使用GPU device " << dev << ": " << devProp.name << endl;

cout << "SM的数量:" << devProp.multiProcessorCount << endl;

cout << "每个线程块的共享内存大小:" << devProp.sharedMemPerBlock / 1024.0 << " KB" << endl;

cout << "每个线程块的最大线程数:" << devProp.maxThreadsPerBlock << endl;

cout << "每个EM的最大线程数:" << devProp.maxThreadsPerMultiProcessor << endl;

cout << "每个EM的最大线程束数:" << devProp.maxThreadsPerMultiProcessor / 32 << endl;

return 0;

执行结果

使用GPU device 0: GeForce GTX 1080

SM的数量:20

每个线程块的共享内存大小:48 KB

每个线程块的最大线程数:1024

每个EM的最大线程数:2048

每个EM的最大线程束数:64

4.遍历多个GPU并输出信息

#include "common/book.h"

int main(void)

cudaDeviceProp prop;

int count;

HANDLE_ERROR( cudaGetDeviceCount( &count ) );

for (int i=0; i< count; i++)

HANDLE_ERROR( cudaGetDeviceProperties( &prop, i ) );

printf( " --- General Information for device %d ---\\n", i );

printf( "Name: %s\\n", prop.name );

printf( "Compute capability: %d.%d\\n", prop.major, prop.minor );

printf( "Clock rate: %d\\n", prop.clockRate );

printf( "Device copy overlap: " );

if (prop.deviceOverlap)

printf( "Enabled\\n" );

else

printf( "Disabled\\n");

printf( "Kernel execution timeout : " );

if (prop.kernelExecTimeoutEnabled)

printf( "Enabled\\n" );

else

printf( "Disabled\\n" );

printf( " --- Memory Information for device %d ---\\n", i );

printf( "Total global mem: %ld\\n", prop.totalGlobalMem );

printf( "Total constant Mem: %ld\\n", prop.totalConstMem );

printf( "Max mem pitch: %ld\\n", prop.memPitch );

printf( "Texture Alignment: %ld\\n", prop.textureAlignment );

printf( " --- MP Information for device %d ---\\n", i );

printf( "Multiprocessor count: %d\\n",

prop.multiProcessorCount );

printf( "Shared mem per mp: %ld\\n", prop.sharedMemPerBlock );

printf( "Registers per mp: %d\\n", prop.regsPerBlock );

printf( "Threads in warp: %d\\n", prop.warpSize );

printf( "Max threads per block: %d\\n",

prop.maxThreadsPerBlock );

printf( "Max thread dimensions: (%d, %d, %d)\\n",

prop.maxThreadsDim[0], prop.maxThreadsDim[1],

prop.maxThreadsDim[2] );

printf( "Max grid dimensions: (%d, %d, %d)\\n",

prop.maxGridSize[0], prop.maxGridSize[1],

prop.maxGridSize[2] );

printf( "\\n" );

return 0;

执行结果

--- General Information for device 0 ---

Name: GeForce GTX 1080

Compute capability: 6.1

Clock rate: 1733500

Device copy overlap: Enabled

Kernel execution timeout : Disabled

--- Memory Information for device 0 ---

Total global mem: 8513978368

Total constant Mem: 65536

Max mem pitch: 2147483647

Texture Alignment: 512

--- MP Information for device 0 ---

Multiprocessor count: 20

Shared mem per mp: 49152

Registers per mp: 65536

Threads in warp: 32

Max threads per block: 1024

Max thread dimensions: (1024, 1024, 64)

Max grid dimensions: (2147483647, 65535, 65535)

--- General Information for device 1 ---

Name: GeForce GTX 1080

Compute capability: 6.1

Clock rate: 1733500

Device copy overlap: Enabled

Kernel execution timeout : Disabled

--- Memory Information for device 1 ---

Total global mem: 8513978368

Total constant Mem: 65536

Max mem pitch: 2147483647

Texture Alignment: 512

--- MP Information for device 1 ---

Multiprocessor count: 20

Shared mem per mp: 49152

Registers per mp: 65536

Threads in warp: 32

Max threads per block: 1024

Max thread dimensions: (1024, 1024, 64)

Max grid dimensions: (2147483647, 65535, 65535)

5.GPU与CPU性能对比

#include "common/book.h"

#include <time.h>

#define N 600000

__global__ void addGPU( int *a, int *b, int *c )

int tid = blockIdx.x; // this thread handles the data at its thread id

if (tid < N)

c[tid] = a[tid] + b[tid];

void addCPU( int *a, int *b, int *c )

for (int i=0; i<N; i++)

c[i] = a[i] + b[i];

int main( void )

int a[N], b[N], c[N];

int *dev_a, *dev_b, *dev_c;

// allocate the memory on the GPU

HANDLE_ERROR( cudaMalloc( (void**)&dev_a, N * sizeof(int) ) );

HANDLE_ERROR( cudaMalloc( (void**)&dev_b, N * sizeof(int) ) );

HANDLE_ERROR( cudaMalloc( (void**)&dev_c, N * sizeof(int) ) );

// fill the arrays 'a' and 'b' on the CPU

for (int i=0; i<N; i++)

a[i] = -i;

b[i] = i * i;

// copy the arrays 'a' and 'b' to the GPU

HANDLE_ERROR( cudaMemcpy( dev_a, a, N * sizeof(int),

cudaMemcpyHostToDevice ) );

HANDLE_ERROR( cudaMemcpy( dev_b, b, N * sizeof(int),

cudaMemcpyHostToDevice ) );

clock_t beginGPU = clock();

addGPU<<<N,1>>>( dev_a, dev_b, dev_c );

printf("GPU time -- %f sec\\n", (clock() - beginGPU)*1.0/CLOCKS_PER_SEC);

clock_t beginCPU = clock();

addCPU(a, b, c );

printf("CPU time -- %f sec\\n", (clock() - beginCPU)*1.0/CLOCKS_PER_SEC);

// copy the array 'c' back from the GPU to the CPU

HANDLE_ERROR( cudaMemcpy( c, dev_c, N * sizeof(int),

cudaMemcpyDeviceToHost ) );

// display the results

//for (int i=0; i<N; i++)

// printf( "%d + %d = %d\\n", a[i], b[i], c[i] );

//

// free the memory allocated on the GPU

HANDLE_ERROR( cudaFree( dev_a ) );

HANDLE_ERROR( cudaFree( dev_b ) );

HANDLE_ERROR( cudaFree( dev_c ) );

return 0;

执行结果

N分别为 10000,100000,600000

bowen@user-Super-Server:~/cuda/p1$ ./l3

GPU time -- 0.000019 sec

CPU time -- 0.000040 sec

bowen@user-Super-Server:~/cuda/p1$ nvcc -o l3 l3.cu

bowen@user-Super-Server:~/cuda/p1$ ./l3

GPU time -- 0.000022 sec

CPU time -- 0.000406 sec

bowen@user-Super-Server:~/cuda/p1$ ./l3

GPU time -- 0.000020 sec

CPU time -- 0.002561 sec

6.常用API小结

- kernal

__global__ void kernal(void)

kernal<<<1,1>>>();

- cudaMalloc

分配内存

int *dev_c;

cudaMalloc((void**)&dev_c,sizeof(int));

- cudaMemcpy

host指针与device指针的互相访问

此段代码含义,将device的数据给host,相当于 c = dev_c;

最后一个参数 cudaMemcpyDeviceToHost,cudaMemcpyDeviceToDevice,

cudaMemcpyHostToDevice

int c;

int *dev_c;

cudaMalloc((void**)&dev_c,sizeof(int));

cudaMemcpy(&c,dev_c,sizeof(int),cudaMemcpyDeviceToHost)

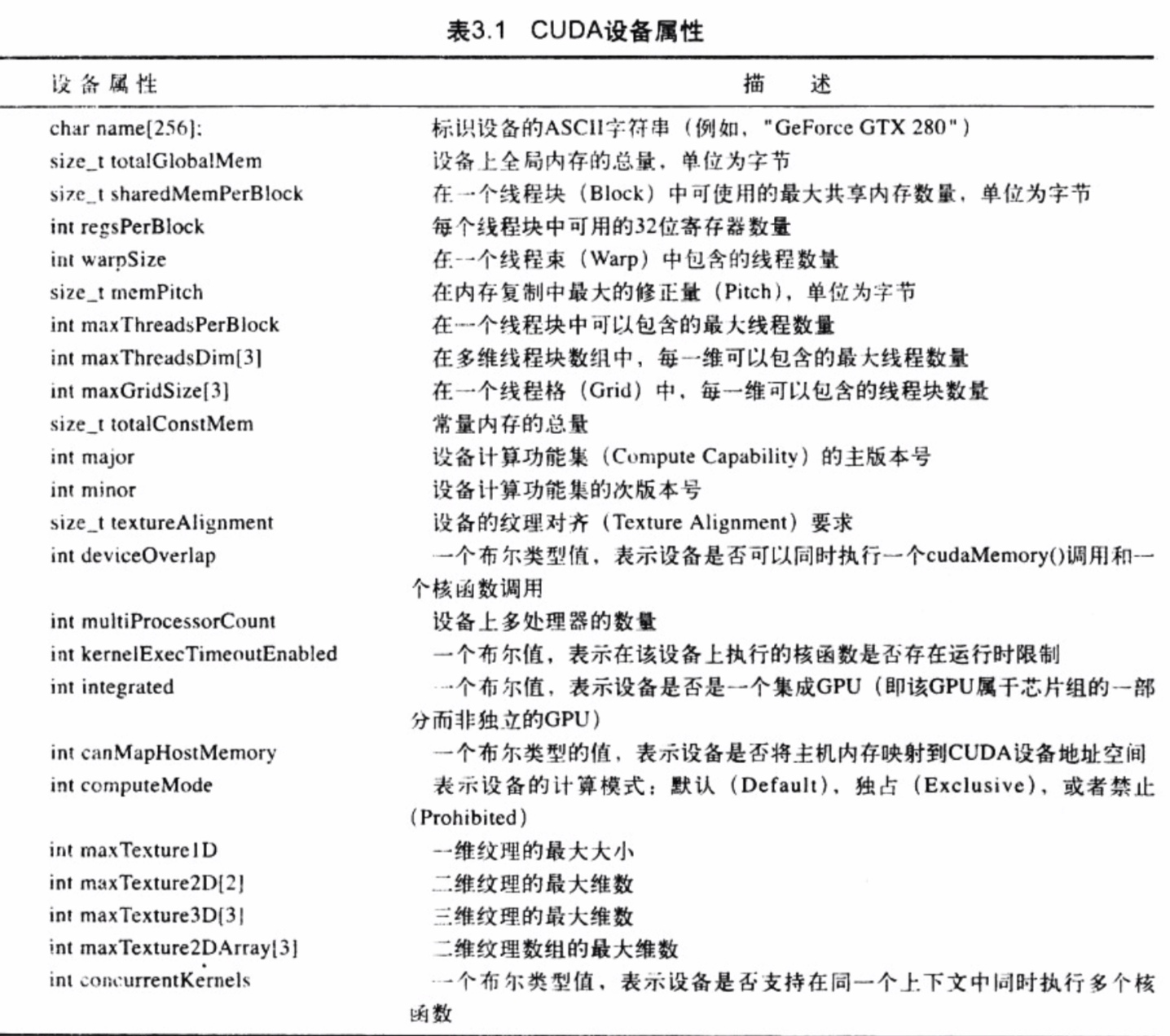

- cudaGetDeviceCount & cudaDeviceProp & cudaGetDeviceProperties & cudaGetDevice

可以获取GPU的个数并查询GPU相关信息,返回一个cudaDeviceProp类型的结构

int count;

cudaGetDeviceCount(&count);

cudaDeviceProp prop;

int count;

cudaGetDeviceCount(&count);

for(int i=0; i < count; i++)

cudaGetDeviceProperties(&prop,i);

//然后使用 prop.name 获取GPU名字属性

...

使用for循环进行迭代查找GPU有点麻烦,cuda提供了一个迭代方法。

例如查找一个主版本号为6,次版本号为大于等于1的GPU,

int dev;

cudaDeviceProp prop;

cudaGetDevice(&dev);//获取当前GPU的id

memset(&prop,0,sizeof(cudaDeviceProp));

prop.major = 6;

prop.minor = 1;

cudaChooseDevice(&dev,&prop);//将符合条件的设备号给dev

printf("%d\\n",dev);

cudaSetDevice(dev);//将所有操作都在这个GPU上执行

- grid、block、thread的关系及thread索引的计算(原文CUDA】grid、block、thread的关系及thread索引的计算)

例子1

dim3 grid(2, 2, 1), block(2, 2, 1);

// 二:线程执行代码

__global__ void vector_add(float* vec1, float* vec2, float* vecres, int length)

// 在第几个块中 * 块的大小 + 块中的x, y维度(几行几列)

int tid = (blockIdx.y * gridDim.x + blockIdx.x) * (blockDim.x * blockDim.y) + threadIdx.y * blockDim.y + threadIdx.x;

if (tid < length)

vecres[tid] = vec1[tid] + vec2[tid];

例子2

dim3 grid(8, 4, 1), block(8, 2, 1);

__global__ void vector_add(float* vec1, float* vec2, float* vecres, int length)

// 在第几个块中 * 块的大小 + 块中的x, y维度(几行几列)

int tid = (blockIdx.y * gridDim.x + blockIdx.x) * (blockDim.x * blockDim.y) + threadIdx.y * blockDim.y + threadIdx.x;

if (tid < length)

vecres[tid] = vec1[tid] + vec2[tid];

二维

__global__ void vector_add(float** mat1, float** mat2, float** matres, int width)

int x = blockIdx.x * blockDim.x + threadIdx.x;

int y = blockIdx.y * blockDim.y + threadIdx.y;

if (x < width && y < width)

matres[x][y] = mat1[x][y] + mat2[x][y];

【参考内容】[GPU高性能编程CUDA实战].(桑德斯).聂雪军等

代码中头文件是本书的源码,该书扫描版与源代码可以在CSDN下载中搜索下载

以上是关于Cuda 学习笔记的主要内容,如果未能解决你的问题,请参考以下文章