Kubernetes: etcd disaster recovery, regenerating certificates, regenerating config files

Posted shark_西瓜甜


Basic etcd operations

## Back up data

ETCDCTL_API=3 etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key snapshot save /var/lib/etcd/snapshot-20220810.db
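The command above hard-codes the date in the snapshot file name. The following is a minimal sketch of a wrapper that derives the name from the current date and then inspects the result with `etcdctl snapshot status`. The function names `snapshot_path` and `backup_etcd` are hypothetical; the endpoint and certificate paths are copied from the command above and may differ on other clusters.

```shell
#!/bin/sh
# Build a dated snapshot path such as /var/lib/etcd/snapshot-20220810.db.
# $1: target directory (default /var/lib/etcd)
# $2: date stamp (default: today, YYYYMMDD)
snapshot_path() {
    dir="${1:-/var/lib/etcd}"
    stamp="${2:-$(date +%Y%m%d)}"
    printf '%s/snapshot-%s.db\n' "${dir%/}" "$stamp"
}

# Save a snapshot to the dated path, then print its status.
# Hypothetical helper: endpoint and cert paths are assumptions taken
# from the kubeadm defaults used in this article.
backup_etcd() {
    target="$(snapshot_path "$@")"
    ETCDCTL_API=3 etcdctl \
        --endpoints=https://127.0.0.1:2379 \
        --cacert=/etc/kubernetes/pki/etcd/ca.crt \
        --cert=/etc/kubernetes/pki/etcd/server.crt \
        --key=/etc/kubernetes/pki/etcd/server.key \
        snapshot save "$target" || return 1
    ETCDCTL_API=3 etcdctl snapshot status "$target" --write-out=table
}
```

Calling `backup_etcd` with no arguments writes to /var/lib/etcd; `backup_etcd /backup` writes to a different directory.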

Member operations

1 List members

etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key member list

2 Remove a member

etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key member remove <member ID>

Example:

sh-5.1# etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key member list

73478d5cdc15edb2, started, k8s-master2.prod, https://192.168.0.225:2380, https://192.168.0.225:2379, false

eb5c182940e3a951, started, k8s-master1.prod, https://192.168.0.185:2380, https://192.168.0.185:2379, false

sh-5.1# etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key member remove 73478d5cdc15edb2

Member 73478d5cdc15edb2 removed from cluster c51f1c2e3181e84f

sh-5.1# etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key member list

eb5c182940e3a951, started, k8s-master1.prod, https://192.168.0.185:2380, https://192.168.0.185:2379, false

sh-5.1#
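In the example the member ID is copied by hand from the `member list` output. A small sketch of looking it up by member name instead, assuming the default comma-separated output format shown above (ID, status, name, peer URLs, client URLs, learner flag). The function name `member_id_by_name` is hypothetical.

```shell
#!/bin/sh
# Print the ID of the etcd member whose name equals $1.
# Reads `etcdctl member list` output (default format) on stdin:
#   ID, status, name, peer URLs, client URLs, is-learner
member_id_by_name() {
    awk -F', ' -v name="$1" '$3 == name { print $1 }'
}
```

For example, piping the `member list` command above into `member_id_by_name k8s-master2.prod` should print the ID that is then passed to `member remove`.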

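How to restore data from a backup file

1 Strip the original metadata from the backup file

The backup was taken from the original cluster, so it carries that cluster's metadata, including its member list. If this metadata is not cleared, the restore will fail because the other members are offline (it would only succeed if they were online too).

The example below assumes that, however many members the cluster used to have, only one is needed now.

Delete the /var/lib/etcd/member directory on the host, then copy the backup file into /var/lib/etcd/ (the restore command below refers to it as snapshot.db), because etcd in a k8s cluster mounts this directory by default: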

/var/lib/etcd:/var/lib/etcd

rm -rf /var/lib/etcd/member

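Next, start a container from the same image the original etcd used, mounting the host's /var/lib/etcd at /var/lib/etcd inside the container, so that it produces data files with the old metadata removed.

The exact commands are as follows:

# Run the container and enter it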

docker run -it --rm --network host -v /var/lib/etcd:/var/lib/etcd k8s.gcr.io/etcd:3.5.1-0 sh

# Run the following commands inside the container:

cd /var/lib/etcd

ETCDCTL_API=3 etcdctl snapshot restore snapshot.db \
  --name <hostname> \
  --initial-cluster <hostname>=http://<host-ip>:2380 \
  --initial-cluster-token etcd-cluster-1 \
  --initial-advertise-peer-urls http://<host-ip>:2380

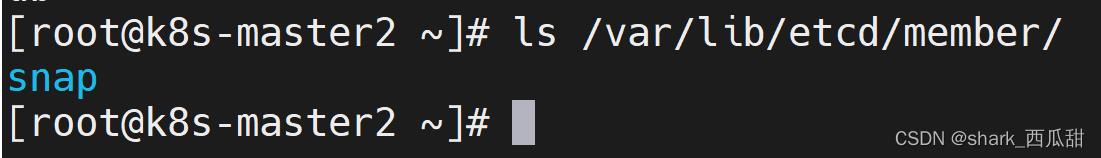

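Exit the container and check whether the host's /var/lib/etcd directory now contains a member directory. When no --data-dir is given, etcdctl snapshot restore writes to a <hostname>.etcd directory under the current directory, so the restored member directory may be found there instead.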
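The check can be scripted. A minimal sketch, assuming a restored etcd data directory contains `member/snap/db` and a `member/wal` directory; the function name `has_restored_data` is hypothetical.

```shell
#!/bin/sh
# Return 0 if $1 looks like a restored etcd data directory,
# i.e. it contains the file member/snap/db and the directory member/wal.
# $1: data directory (default /var/lib/etcd)
has_restored_data() {
    data_dir="${1:-/var/lib/etcd}"
    [ -f "$data_dir/member/snap/db" ] && [ -d "$data_dir/member/wal" ]
}
```

For example, `has_restored_data /var/lib/etcd && echo "restore looks complete"` prints the message only when both paths exist.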

3 Start the new etcd

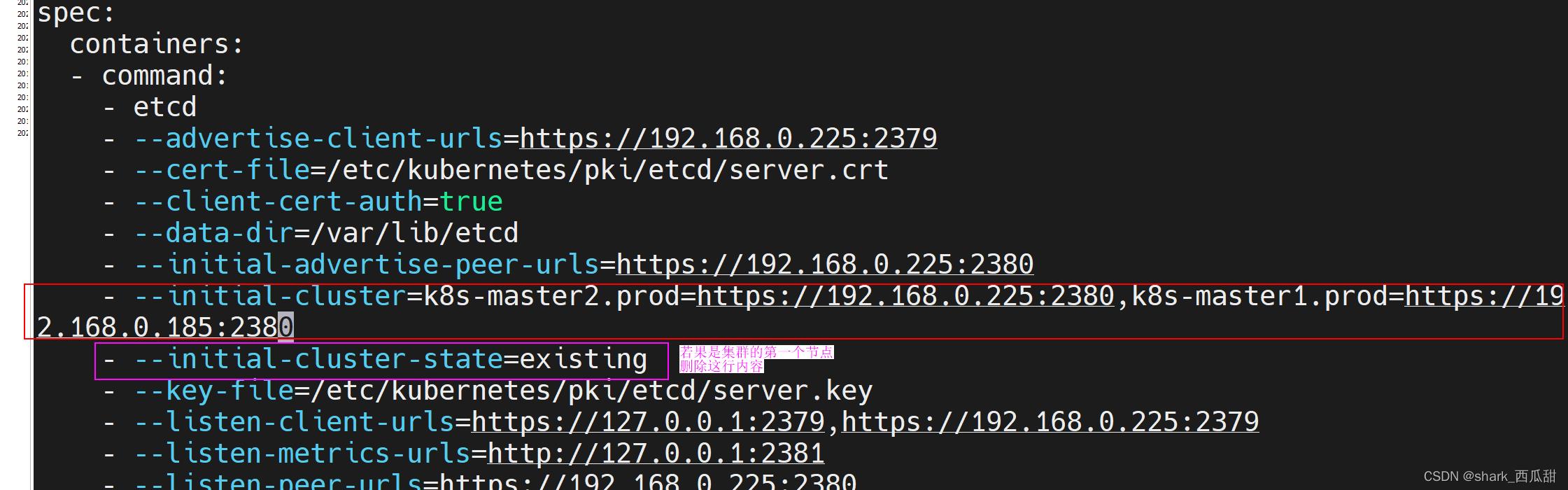

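On k8s, edit the etcd manifest /etc/kubernetes/manifests/etcd.yaml

Before editing, the file may contain other hosts' IP addresses, or cluster-state parameters, as in the figure below:

Remove the IP addresses that do not belong to this host and delete the cluster-state settings (the content in the purple box). etcd can then run as a single node while keeping the original data; afterwards, add other nodes as needed to complete the recovery.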
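Since the figures are not reproduced here, the lines to review can be listed from the manifest itself. This sketch assumes the settings in question are etcd's `--initial-cluster` and `--initial-cluster-state` flags, which is a guess at what the screenshots show; the function name `show_cluster_flags` is hypothetical.

```shell
#!/bin/sh
# List, with line numbers, the lines of an etcd manifest that carry
# cluster membership settings (--initial-cluster, --initial-cluster-state, ...),
# so they can be reviewed before editing.
# $1: manifest path (default: the kubeadm static pod manifest)
show_cluster_flags() {
    manifest="${1:-/etc/kubernetes/manifests/etcd.yaml}"
    grep -n -e '--initial-cluster' "$manifest"
}
```

The function only prints matching lines and changes nothing; the edit itself is still done by hand as described above.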

After the changes it looks like the figure below:

Restart the kubelet service

systemctl restart kubelet

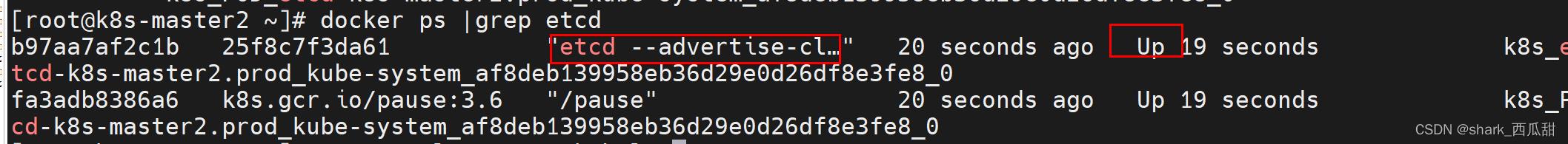

Check that the container has started

docker ps |grep etcd
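The container may not appear immediately after kubelet restarts, so a single `docker ps` can give a false negative. A minimal polling sketch; the helper names `wait_for` and `etcd_container_running` are hypothetical, and the attempt count and delay are arbitrary.

```shell
#!/bin/sh
# Run a command repeatedly until it succeeds.
# $1: maximum attempts, $2: seconds to sleep between attempts, rest: command.
# Returns 0 on the first success, 1 if every attempt fails.
wait_for() {
    attempts="$1"
    delay="$2"
    shift 2
    i=1
    while [ "$i" -le "$attempts" ]; do
        "$@" && return 0
        # Sleep only if another attempt will follow.
        [ "$i" -lt "$attempts" ] && sleep "$delay"
        i=$((i + 1))
    done
    return 1
}

# True when `docker ps` lists a line that mentions etcd.
etcd_container_running() {
    docker ps | grep -q etcd
}
```

For example, `wait_for 30 2 etcd_container_running` polls every 2 seconds for up to 30 attempts.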

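Generate certificates (this has pitfalls that are still unresolved; do not try it in production)

Back up the original certificates, then delete them

cp -ra /etc/kubernetes/pki{,.bak}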

ls /etc/kubernetes/

find /etc/kubernetes/pki -type f |xargs rm -rf
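Because the certificates are deleted right after the copy, it is worth confirming the backup before removing anything. A sketch of a backup helper that copies a directory to a sibling with a `.bak` suffix, refuses to overwrite an existing backup, and compares the two trees; the function name `backup_dir` is hypothetical.

```shell
#!/bin/sh
# Copy directory $1 to $1.bak, preserving attributes.
# Refuses to run if the backup already exists, then verifies the copy
# by comparing both trees with diff -r.
backup_dir() {
    src="${1%/}"
    dst="$src.bak"
    if [ -e "$dst" ]; then
        echo "backup already exists: $dst" >&2
        return 1
    fi
    cp -a "$src" "$dst" && diff -r "$src" "$dst" >/dev/null
}
```

For example, `backup_dir /etc/kubernetes/pki && find /etc/kubernetes/pki -type f | xargs rm -rf` deletes the files only when the backup succeeded.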

Help output

kubeadm init phase certs --help

This command is not meant to be run on its own. See list of available subcommands.

Usage:

kubeadm init phase certs [flags]

kubeadm init phase certs [command]

Available Commands:

all Generate all certificates

apiserver Generate the certificate for serving the Kubernetes API

apiserver-etcd-client Generate the certificate the apiserver uses to access etcd

apiserver-kubelet-client Generate the certificate for the API server to connect to kubelet

ca Generate the self-signed Kubernetes CA to provision identities for other Kubernetes components

etcd-ca Generate the self-signed CA to provision identities for etcd

etcd-healthcheck-client Generate the certificate for liveness probes to healthcheck etcd

etcd-peer Generate the certificate for etcd nodes to communicate with each other

etcd-server Generate the certificate for serving etcd

front-proxy-ca Generate the self-signed CA to provision identities for front proxy

front-proxy-client Generate the certificate for the front proxy client

sa Generate a private key for signing service account tokens along with its public key

Generate new certificates

kubeadm init phase certs all --apiserver-cert-extra-sans kube-apiserver,192.168.0.225,192.168.0.128,192.168.0.100 --control-plane-endpoint kube-apiserver

Configuration files

Back up

cp -ra /etc/kubernetes{,.bak}

Delete the existing config files

rm -rf /etc/kubernetes/*.conf

Regenerate

kubeadm init phase kubeconfig all

Copy the admin config file

\cp /etc/kubernetes/admin.conf ~/.kube/config

Restart the kubelet service

systemctl restart kubelet

Delete the existing containers

docker rm -f `docker ps |awk '$NF ~ /^k8s/{print $1}'`
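The selection step can be previewed before anything is removed. This sketch isolates the filter: it prints the IDs of containers whose name, the last column of `docker ps`, starts with `k8s`. The function name `k8s_container_ids` is hypothetical.

```shell
#!/bin/sh
# Read `docker ps` output on stdin and print the IDs (first column) of
# containers whose name (last column) starts with "k8s".
# The header line is skipped naturally because its last field is "NAMES".
k8s_container_ids() {
    awk '$NF ~ /^k8s/ { print $1 }'
}
```

Running `docker ps | k8s_container_ids` lists the IDs for review; the list can then be passed to `docker rm -f` once it looks right.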
