Hive提交到yarn的任务一直在running问题排查-V2

Posted 疯狂哈丘

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了Hive提交到yarn的任务一直在running问题排查-V2相关的知识,希望对你有一定的参考价值。

文章目录

一、问题描述

具体表现和 Hive提交到yarn的任务一直在running问题排查 一样,但排查之后又不是一样的问题。

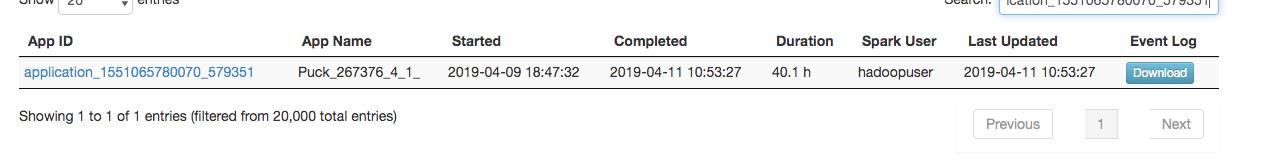

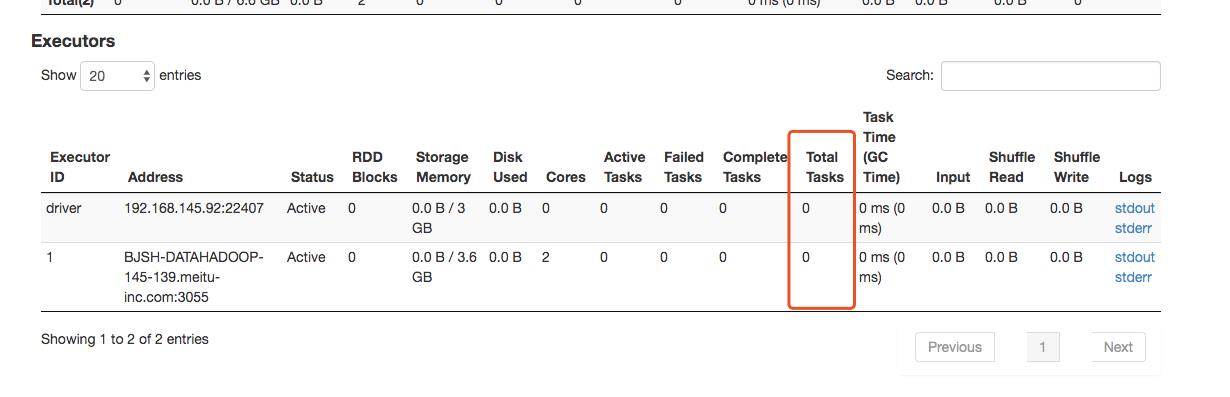

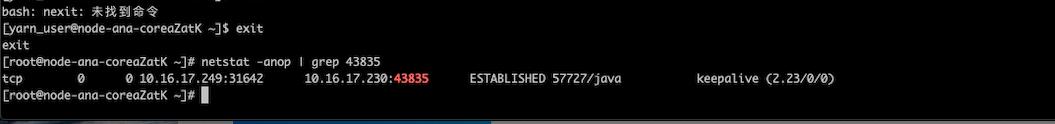

通过日志和运行记录,发现RemoteDriver一直在运行,而HiveServer这边的客户端连接已经关闭。

二、HiveServer中的SparkSession启动和关闭时机

和 Hive提交到yarn的任务一直在running问题排查 一样,还是回到那个问题,为什么HiveSession已经关闭了(HiveSession等同于客户端连接),而RemoteDriver没关闭呢?

在排查这个问题之前,我们先来了解一下*RemoteDriver的启动和关闭时机。

SparkSession的启动时机

在HiveServer中,一般SparkSession就对应了一个yarn的application。也就是说,HiveServer中对SparkSession的相关操作最终都会映射到RemoteDriver中。因此,SparkSession的启动和关闭就会导致RemoteDriver的启动和关闭

先理清一下HiveServer中的三个关键线程:

- HiveServer2-Handler-Pool:HiveServer的rpc线程池,有新的连接进来都会分配一个线程处理rpc请求。比如 HiveServer2-Handler-Pool: Thread-3449151 线程。因为hiveClient的连接都是长链接,因此在有一个新连接进来时,会分配一个Hive SessionId,这时候这个线程会重命名成 d33b7450-6fce-49ce-bfef-b1029bb2376e HiveServer2-Handler-Pool: Thread-3449151 (前面带上了这个SessionId)

- HiveServer2-Background-Pool:HiveServer执行sql的线程池,如果客户端在执行的时候指定runAsync=true(基本都是true),则会将sql的执行放到这个线程池去处理(也就是Driver.run的相关逻辑)。在线程池的线程名字,比如:HiveServer2-Background-Pool: Thread-3456359

- TaskRunner线程:Hive在执行sql时,会先生成执行计划,这里Hive的执行计划是以Task的DAG图(这里的Task不是spark的task,Hive的Task有很多种,比如MapredTask、SparkTask),之后会从这个DAG图的根解决开始一个个执行这些Task。在执行task时,就会创建一个新的线程TaskRunner,专门来执行这个Task。这个线程一般没有前缀,比如 Thread-1697091

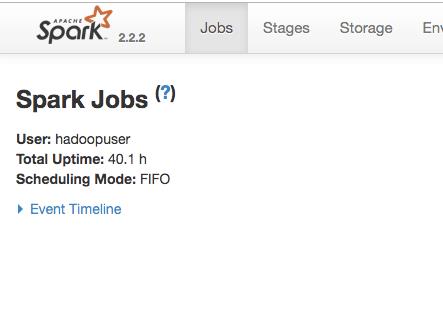

在上图中,SparkSession的启动时机是在SparkSession#open()中,这个方法被调用时,最终底层会调用spark-submit提交一个Spark任务,也就启动了RemoteDriver,之后RemoteDriver反向连接会HiveServer,与HiveServer进行交互(具体流程参考Hive提交到yarn的任务一直在running问题排查 )。

在HiveServer中,HiveSession对应了一个rpc连接,而一般一个HiveSession只会对应一个SparkSession。因此如果一个HiveSession要执行要多个Hive Task,是不会创建多个SparkSession的。在SparkSessionManagerImple#getSession()中,会判断当前HiveSession是否已经开启过SparkSession了,如果已经有SparkSession启动了,那直接返回这个SparkSession就好了

SparkSession的关闭时机

SparkSession类中,有个close()方法,调用时会关闭SparkSession,同时通知远程的RemoteDriver关闭进程。而SparkSession#close()的调用时机主要有3个:

1、在同一个HiveSession中,用户将引擎从spark切到了mr,这时HiveServer会主动关闭SparkSession

2、在同一个HiveSession中,用户对一些Spark参数做了调整,那么这时候也会关闭旧的SparkSession,同时会再启动一个新的SparkSession,保证修改后的Spark参数能生效

3、HiveSession关闭时,也就是用户关闭了rpc连接时,会调用SparkSession#close()关闭SparkSession

三、问题分析

问题的关键在于SparkSession为什么被关闭,前两种情况我们都可以排除。因此最开始怀疑是HiveSession没有正常关闭,但是后面又找到HiveSession的关闭日志:

2021-11-25 17:48:06,704 | INFO | HiveServer2-Handler-Pool: Thread-3449151 | Session opened, SessionHandle [d33b7450-6fce-49ce-bfef-b1029bb2376e],

2021-11-25 17:48:39,495 | INFO | HiveServer2-Handler-Pool: Thread-3449151 | Session closed, SessionHandle [d33b7450-6fce-49ce-bfef-b1029bb2376e]

这里的日志显示对应的HiveSession确实关闭了,正常来说,SparkSession应该也关闭了才对。后面接着排查,发现了以下日志:

#该HiveSession对应的Background线程启动

2021-11-25 17:48:39,495 | INFO | HiveServer2-Background-Pool: Thread-3457536 | Concurrency mode is disabled, not creating a lock manager | org.apache.hadoop.hive.ql.Driver.checkConcurrency(Driver.java:210)

#TaskRunner线程,提交spark任务,意味着此时又开启了一个SparkSession

2021-11-25 17:48:39,630 | INFO | Thread-1697091 | Running client driver with argv: /opt/client/Spark/spark/bin/spark-submit ... ---------SparkClientImpl.java:473

#TaskRunner要提交task到RemoteDriver执行时,发现HiveSession已经关闭了,就会抛出异常,不会真正执行task(但是不会关闭SparkSession)

2021-11-25 17:49:11,554 | ERROR | Thread-1697091 | Failed to execute spark task, with exception 'java.lang.Exception(Failed to submit Spark work, please retry later)' | org.apache.hadoop.hive.ql.exec.spark.SparkTask.execute(SparkTask.java:142)

java.lang.Exception: Failed to submit Spark work, please retry later

通过上面的日志,可以分析出:

用户17:48:06创建了连接,17:48:39,495 关闭了连接,而在同一时间,这个sql的Background线程才启动执行(中间的时间应该在预编译和其他的消耗)。之后,当Background线程要执行Hive Task时(此时HiveSession可能已经关闭完了),TaskRunner又启动了一个新的SparkSession。而这个SparkSession就处于游离状态,没有对应的HiveSession来维护(HiveSession已经关闭)。

对应的流程大概如下:

四、解决方案

第一套方案(不推荐)

根本原因在于多线程导致的并发问题。正常来说,SparkSession是和HiveSession绑定在一起的,而这个问题在于HiveSession关闭后SparkSession又启动了。因此我们可以在SparkSession#open()中判断HiveSession是否已经关闭,确保HiveSession没关闭再去启动RemoteDriver。

那么如何在SparkSession#open()中判断HiveSession是否已经关闭呢。在原本的代码中,SparkSession仅有一个isOpen()状态,通过这个方法时无法知道HiveSession是否已经关闭的(isOpen初始化的时候就是false)。因此我们需要改造源码,在SparkSession中加一个参数isClose,当HiveSession关闭时,也会调用SparkSession#close方法,这是将isClose设置成true。我们就可以通过判断isClose是否为true来判断HiveSession是否关闭了

同时因为涉及到多线程,还需要考虑并发问题,保证SparkSession#close()方法和SparkSession#open()方法的同步。但是这时候就有一个问题,如果集群繁忙无法及时分配资源,SparkSession#open()方法可能就要持续几分钟甚至几十分钟,这时候客户端就会面临无法关闭连接的尴尬情况。另外,考虑到可能还要一些潜在的因素也会导致SparkSession泄露,因此这套解决方案并不成熟。

第二套方案(推荐)

既然无法很好的避免SparkSession的泄露,那么我们可以换一种思路。能不能让HiveServer自动检测哪些SparkSession泄露了,然后自动关闭呢。这样也可以一劳永逸的解决所有SparkSession的泄露问题。

以下是修改的Patch(思路参考https://issues.apache.org/jira/browse/HIVE-14162,Hive在4.0.0版本才实现了这个功能,同时因为4.0.0和我们的2.3.3版本代码差异较大,下面的代码一些地方和14162patch还是不一样的):

以下是文字版:

From 7ff8ea636eadfefce920591e03645ecad651ae20 Mon Sep 17 00:00:00 2001

From: "yjb1@meitu.com" <lyt12#@!>

Date: Wed, 1 Dec 2021 19:45:17 +0800

Subject: [PATCH] =?UTF-8?q?=E6=94=AF=E6=8C=81=E6=A3=80=E6=B5=8B=E9=95=BF?=

=?UTF-8?q?=E6=97=B6=E9=97=B4=E6=B2=A1=E6=9C=89=E5=9C=A8=E8=BF=90=E8=A1=8C?=

=?UTF-8?q?Job=E7=9A=84SparkSession=EF=BC=8C=E5=90=8C=E6=97=B6=E5=85=B3?=

=?UTF-8?q?=E9=97=AD?=

MIME-Version: 1.0

Content-Type: text/plain; charset=UTF-8

Content-Transfer-Encoding: 8bit

---

.../org/apache/hadoop/hive/conf/HiveConf.java | 4 +++

conf/hive-default.xml.template | 25 ++++++++++++++

.../hadoop/hive/ql/exec/spark/SparkTask.java | 3 ++

.../ql/exec/spark/session/SparkSession.java | 17 ++++++++++

.../exec/spark/session/SparkSessionImpl.java | 32 +++++++++++++++++-

.../session/SparkSessionManagerImpl.java | 33 +++++++++++++++++++

6 files changed, 113 insertions(+), 1 deletion(-)

diff --git a/common/src/java/org/apache/hadoop/hive/conf/HiveConf.java b/common/src/java/org/apache/hadoop/hive/conf/HiveConf.java

index 7e91f5c0..2bb76b0f 100644

--- a/common/src/java/org/apache/hadoop/hive/conf/HiveConf.java

+++ b/common/src/java/org/apache/hadoop/hive/conf/HiveConf.java

@@ -429,6 +429,10 @@ public class HiveConf extends Configuration

FLINK_LOG_COLLECT("hive.flink.log.collect",true,"flink sink to hive,false is default, means:uncollect sql logs," +

"true is collect"),

MAX_ERROR_COUNT("hive.max.error.count",10000L,"the max error amount of result can be allowed, 10000 is defalut"),

+ SPARK_SESSION_TIMEOUT("hive.spark.session.timeout", 7200000L, "Amount of time the Spark Remote Driver should wait for " +

+ " a Spark job to be submitted before shutting down. Minimum value is 30 minutes"),

+ SPARK_SESSION_TIMEOUT_PERIOD("hive.spark.session.timeout.period", 300L,

+ "How frequently to check for idle Spark sessions. Minimum value is 60 seconds."),

// QL execution stuff

SCRIPTWRAPPER("hive.exec.script.wrapper", null, ""),

PLAN("hive.exec.plan", "", ""),

diff --git a/conf/hive-default.xml.template b/conf/hive-default.xml.template

index 7e976c0d..f22ff81a 100644

--- a/conf/hive-default.xml.template

+++ b/conf/hive-default.xml.template

@@ -24,6 +24,21 @@

<value>true</value>

<description>flink sink to hive,false is default, means:uncollect sql logs,true is collect</description>

</property>

+ <property>

+ <name>hive.max.error.count</name>

+ <value>10000</value>

+ <description>the max error amount of result can be allowed, 10000 is defalut</description>

+ </property>

+ <property>

+ <name>hive.spark.session.timeout</name>

+ <value>7200000</value>

+ <description>Amount of time the Spark Remote Driver should wait for a Spark job to be submitted before shutting down. Minimum value is 30 minutes</description>

+ </property>

+ <property>

+ <name>hive.spark.session.timeout.period</name>

+ <value>300</value>

+ <description>How frequently to check for idle Spark sessions. Minimum value is 60 seconds.</description>

+ </property>

<property>

<name>hive.exec.script.wrapper</name>

<value/>

@@ -4801,6 +4816,11 @@

using "-hiveconf hive.hadoop.classpath=%HIVE_LIB%".

</description>

</property>

+ <property>

+ <name>hive.serialize.plan.retries</name>

+ <value>3</value>

+ <description>Retry times of the hive serialize plan</description>

+ </property>

<property>

<name>hive.rpc.query.plan</name>

<value>false</value>

@@ -5889,6 +5909,11 @@

<value>0</value>

<description>Batch size for the msck repair command. If the value is greater than zero, it will execute batch wise with the configured batch size. The default value is zero. Zero means it will execute directly (Not batch wise)</description>

</property>

+ <property>

+ <name>hive.msck.partition.name.default</name>

+ <value/>

+ <description>If the value is empty, dir must contain '/partitionName=partitionValue/'. If the value is date_p, then the dir can only contain '/partitionValue/'</description>

+ </property>

<property>

<name>hive.server2.llap.concurrent.queries</name>

<value>-1</value>

diff --git a/ql/src/java/org/apache/hadoop/hive/ql/exec/spark/SparkTask.java b/ql/src/java/org/apache/hadoop/hive/ql/exec/spark/SparkTask.java

index 4c013299..d7c048f7 100644

--- a/ql/src/java/org/apache/hadoop/hive/ql/exec/spark/SparkTask.java

+++ b/ql/src/java/org/apache/hadoop/hive/ql/exec/spark/SparkTask.java

@@ -108,12 +108,15 @@ public class SparkTask extends Task<SparkWork>

perfLogger.PerfLogBegin(CLASS_NAME, PerfLogger.SPARK_SUBMIT_JOB);

submitTime = perfLogger.getStartTime(PerfLogger.SPARK_SUBMIT_JOB);

SparkJobRef jobRef = sparkSession.submit(driverContext, sparkWork);

+ sparkSession.onQuerySubmission();

perfLogger.PerfLogEnd(CLASS_NAME, PerfLogger.SPARK_SUBMIT_JOB);

addToHistory(jobRef);

sparkJobID = jobRef.getJobId();

this.jobID = jobRef.getSparkJobStatus().getAppID();

rc = jobRef.monitorJob();

+

+ sparkSession.onQueryCompletion();

SparkJobStatus sparkJobStatus = jobRef.getSparkJobStatus();

getSparkJobInfo(sparkJobStatus, rc);

if (rc == 0)

diff --git a/ql/src/java/org/apache/hadoop/hive/ql/exec/spark/session/SparkSession.java b/ql/src/java/org/apache/hadoop/hive/ql/exec/spark/session/SparkSession.java

index e1f8c1dc..a432fde1 100644

--- a/ql/src/java/org/apache/hadoop/hive/ql/exec/spark/session/SparkSession.java

+++ b/ql/src/java/org/apache/hadoop/hive/ql/exec/spark/session/SparkSession.java

@@ -75,4 +75,21 @@ public interface SparkSession

* Get an HDFS dir specific to the SparkSession

* */

Path getHDFSSessionDir() throws IOException;

+

+

+

+ /**

+ * 当SparkTask执行完一个Task时,会调用该方法

+ */

+ void onQueryCompletion();

+

+ /**

+ * 当SparkTask提交了一个新的task时,会调用该方法

+ */

+ void onQuerySubmission();

+

+ /**

+ * 检查当前SparkSession是否超时了,超时了则关闭这个SparkSession

+ */

+ boolean triggerTimeout(long sessionTimeout);

diff --git a/ql/src/java/org/apache/hadoop/hive/ql/exec/spark/session/SparkSessionImpl.java b/ql/src/java/org/apache/hadoop/hive/ql/exec/spark/session/SparkSessionImpl.java

index 51c67152..60aa89a0 100644

--- a/ql/src/java/org/apache/hadoop/hive/ql/exec/spark/session/SparkSessionImpl.java

+++ b/ql/src/java/org/apache/hadoop/hive/ql/exec/spark/session/SparkSessionImpl.java

@@ -18,7 +18,9 @@

package org.apache.hadoop.hive.ql.exec.spark.session;

import java.io.IOException;

+import java.util.Set;

import java.util.UUID;

+import java.util.concurrent.atomic.AtomicInteger;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

@@ -49,7 +51,8 @@ public class SparkSessionImpl implements SparkSession

private HiveSparkClient hiveSparkClient;

private Path scratchDir;

private final Object dirLock = new Object();

-

+ private final AtomicInteger activeJobs = new AtomicInteger(0);

+ private long lastCompleteJobTime = System.currentTimeMillis();

public SparkSessionImpl()

sessionId = makeSessionId();

@@ -164,6 +167,33 @@ public class SparkSessionImpl implements SparkSession

return scratchDir;

+ @Override

+ public void onQueryCompletion()

+ activeJobs.decrementAndGet();

+

+

+ @Override

+ public void onQuerySubmission()

+ activeJobs.incrementAndGet();

+ lastCompleteJobTime = System.currentTimeMillis();

+

+

+ @Override

+ public boolean triggerTimeout(long sessionTimeout)

+ if (hasTimedOut(sessionTimeout))

+ LOG.warn("Closing Spark session " + getSessionId() + " because a Spark job has not " +

+ "been run in the past " + sessionTimeout / 1000 + " seconds");

+ close();

+ return true;

+

+ return false;

+

+

+ private boolean hasTimedOut(long sessionTimeout)

+ return activeJobs.get()==0 &&

+ (System.currentTimeMillis() - lastCompleteJobTime) > sessionTimeout;

+

+

public static String makeSessionId()

return UUID.randomUUID().toString();

diff --git a/ql/src/java/org/apache/hadoop/hive/ql/exec/spark/session/SparkSessionManagerImpl.java b/ql/src/java/org/apache/hadoop/hive/ql/exec/spark/session/SparkSessionManagerImpl.java

index 3c2f0e6e..1db57ea7 100644

--- a/ql/src/java/org/apache/hadoop/hive/ql/exec/spark/session/SparkSessionManagerImpl.java

+++ b/ql/src/java/org/apache/hadoop/hive/ql/exec/spark/session/SparkSessionManagerImpl.java

@@ -23,6 +23,10 @@ import java.util.HashSet;

import java.util.Iterator;

import java.util.Map;

import java.util.Set;

+import java.util.concurrent.Executors;

+import java.util.concurrent.Future;

+import java.util.concurrent.ScheduledExecutorService;

+import java.util.concurrent.TimeUnit;

import org.apache.hive.common.util.ShutdownHookManager;

import org.slf4j.Logger;

@@ -45,6 +49,7 @@ public class SparkSessionManagerImpl implements SparkSessionManager

private volatile boolean inited = false;

private static SparkSessionManagerImpl instance;

+ private volatile Future<?> timeoutFuture;

static

ShutdownHookManager.addShutdownHook(new Runnable()

@@ -82,6 +87,7 @@ public class SparkSessionManagerImpl implements SparkSessionManager

Map<String, String> conf = HiveSparkClientFactory.initiateSparkConf(hiveConf);

try

SparkClientFactory.initialize(conf);

+ startTimeoutThread(hiveConf);

inited = true;

catch (IOException e)

throw new HiveException("Error initializing SparkClientFactory", e);

@@ -145,6 +151,9 @@ public class SparkSessionManagerImpl implements SparkSessionManager

public void shutdown()

LOG.info("Closing the session manager.");

synchronized (createdSessions)

+ if (timeoutFuture != null)

+ timeoutFuture.cancel(false);

+

Iterator<SparkSession> it = createdSessions.iterator();

while (it.hasNext())

SparkSession session = it.next();

@@ -155,4 +164,28 @@ public class SparkSessionManagerImpl implements SparkSessionManager

inited = false;

SparkClientFactory.stop();

+

+ /**

+ * Starts a scheduled thread that periodically calls @link SparkSession#triggerTimeout(long)

+ * on each @link SparkSession managed by this class.

+ */

+ private void startTimeoutThread(HiveConf conf)

+ final long sessionTimeout = conf.getLongVar(HiveConf.ConfVars.SPARK_SESSION_TIMEOUT);

+ long sessionTimeoutPeriod = conf.getLongVar(HiveConf.ConfVars.SPARK_SESSION_TIMEOUT_PERIOD);

+ ScheduledExecutorService es = Executors.newSingleThreadScheduledExecutor();

+

+ timeoutFuture = es.scheduleAtFixedRate(new Runnable()

+ @Override

+ public void run()

+ LOG.info("check spark session timeout.now sparkSession size:"+createdSessions.size());

+ final Iterator<SparkSession> iterator = createdSessions.iterator();

+ while (iterator.hasNext())

+ final SparkSession sparkSession = iterator.next();

+ if(sparkSession.triggerTimeout(sessionTimeout))

+ iterator.remove();

+

+

+

+ ,0, sessionTimeoutPeriod, TimeUnit.MILLISECONDS);

+

--

2.18.1

以上是关于Hive提交到yarn的任务一直在running问题排查-V2的主要内容,如果未能解决你的问题,请参考以下文章