EM+GMM基于EM和GMM算法的目标轨迹跟踪和异常行为识别matlab仿真

Posted fpga和matlab

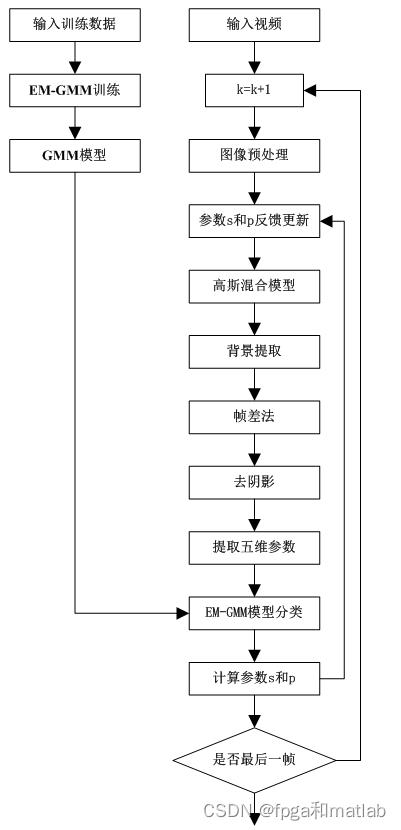

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了EM+GMM基于EM和GMM算法的目标轨迹跟踪和异常行为识别matlab仿真相关的知识,希望对你有一定的参考价值。

1.软件版本

matlab2013b

2.系统概述

3.部分源码

clc;

clear;

close all;

warning off;

addpath 'func\\'

%训练数据

Is = imread('Track_file\\tower1.bmp');

load Track_file\\track1.mat

load Track_file\\track2.mat

figure(1);

subplot(121);

imshow(Is);

hold on;

X1=[];

Y1=[];

T1=[];

W1=[];

H1=[];

for i = 1:length(track1)

i

if isempty(track1(1,i).x) == 1

X = 0;

Y = 0;

else

X = cell2mat(track1(1,i).x(1,1));

Y = cell2mat(track1(1,i).y(1,1));

X1=[X1;cell2mat(track1(1,i).x(1,1))];

Y1=[Y1;cell2mat(track1(1,i).y(1,1))];

T1=[T1;cell2mat(track1(1,i).time(1,1))];

W1=[W1;cell2mat(track1(1,i).w(1,1))];

H1=[H1;cell2mat(track1(1,i).h(1,1))];

end

plot(X,Y,'c.');

hold on;

end

title('Track1');

subplot(122);

imshow(Is);

hold on;

X2=[];

Y2=[];

T2=[];

W2=[];

H2=[];

for i = 1:length(track2)

i

if isempty(track2(1,i).x) == 1

X = 0;

Y = 0;

else

X = cell2mat(track2(1,i).x(1,1));

Y = cell2mat(track2(1,i).y(1,1));

X2=[X2;cell2mat(track2(1,i).x(1,1))];

Y2=[Y2;cell2mat(track2(1,i).y(1,1))];

T2=[T2;cell2mat(track2(1,i).time(1,1))];

W2=[W2;cell2mat(track2(1,i).w(1,1))];

H2=[H2;cell2mat(track2(1,i).h(1,1))];

end

plot(X,Y,'c.');

hold on;

end

title('Track2');

tao=20;

X1s=[];

Y1s=[];

T1s=[];

W1s=[];

H1s=[];

X_samp = [];

Y_samp = [];

T_samp = [];

W_samp = [];

H_samp = [];

X_samps1 = [];

Y_samps1 = [];

T_samps1 = [];

W_samps1 = [];

H_samps1 = [];

for i = 1:length(track1)

i

if isempty(track1(1,i).x) == 1

X1si=0;

Y1si=0;

T1si=0;

W1si=0;

H1si=0;

else

X1si=cell2mat(track1(1,i).x(1,1));

Y1si=cell2mat(track1(1,i).y(1,1));

T1si=cell2mat(track1(1,i).time(1,1));

W1si=cell2mat(track1(1,i).w(1,1));

H1si=cell2mat(track1(1,i).h(1,1));

end

if i <= tao;

X_samp = (X1si);

Y_samp = (Y1si);

T_samp = 0.00001*ones(length(X_samp),1);

W_samp = (W1si);

H_samp = (H1si);

else

X_samp = (X1si);

Y_samp = (Y1si);

T_samp = 0.00001*ones(length(X_samp),1);

Ls = min(length(X1si),length(X1si-tao));

for j = 1:Ls

T_samp(j,1) = sqrt((X1si(j) - X1si-tao(j))^2 + (Y1si(j) - Y1si-tao(j))^2)/tao;

end

W_samp = (W1si);

H_samp = (H1si);

end

X_samps1 = [X_samps1;X_samp];

Y_samps1 = [Y_samps1;Y_samp];

T_samps1 = [T_samps1;T_samp];

W_samps1 = [W_samps1;W_samp];

H_samps1 = [H_samps1;H_samp];

end

X2s=[];

Y2s=[];

T2s=[];

W2s=[];

H2s=[];

X_samp = [];

Y_samp = [];

T_samp = [];

W_samp = [];

H_samp = [];

X_samps2 = [];

Y_samps2 = [];

T_samps2 = [];

W_samps2 = [];

H_samps2 = [];

for i = 1:length(track2)

i

if isempty(track2(1,i).x) == 1

X2si=0;

Y2si=0;

T2si=0;

W2si=0;

H2si=0;

else

X2si=cell2mat(track2(1,i).x(1,1));

Y2si=cell2mat(track2(1,i).y(1,1));

T2si=cell2mat(track2(1,i).time(1,1));

W2si=cell2mat(track2(1,i).w(1,1));

H2si=cell2mat(track2(1,i).h(1,1));

end

if i <= tao;

X_samp = (X2si);

Y_samp = (Y2si);

T_samp = 0.00001*ones(length(X_samp),1);

W_samp = (W2si);

H_samp = (H2si);

else

X_samp = (X2si);

Y_samp = (Y2si);

T_samp = 0.00001*ones(length(X_samp),1);

Ls = min(length(X2si),length(X2si-tao));

for j = 1:Ls

T_samp(j,1) = sqrt((X2si(j) - X2si-tao(j))^2 + (Y2si(j) - Y2si-tao(j))^2)/tao;

end

W_samp = (W2si);

H_samp = (H2si);

end

X_samps2 = [X_samps2;X_samp];

Y_samps2 = [Y_samps2;Y_samp];

T_samps2 = [T_samps2;T_samp];

W_samps2 = [W_samps2;W_samp];

H_samps2 = [H_samps2;H_samp];

end

%原始数据的训练,用set2的数据来训练

feature1 = [X_samps1,Y_samps1,T_samps1,W_samps1,H_samps1];

feature2 = [X_samps2,Y_samps2,T_samps2,W_samps2,H_samps2];

feature = [feature2(5000:7000,:)];%

tmps = feature;

[Px, model] = gmm(tmps,2);

% save EmGmm_model.mat model

% figure;

% imshow(Is);

% hold on;

% [~, cls_ind] = max(Px',[],1);

% for tt = 1:1

% tt

% for jj = 1:length(cls_ind);

% if cls_ind(jj)==1

% plot(feature(jj,1),feature(jj,2),'b.');

% end

% if cls_ind(jj)==2

% plot(feature(jj,1),feature(jj,2),'r.');

% end

% hold on;

% end

% title('异常行为识别');

% end

clc;

clear;

close all;

warning off;

addpath 'func\\'

%初始背景的提取

%注意,原始的视频非常大,这里需要截取其中需要处理的帧数

Bg_Length = 20;

Start_Frame = Bg_Length+1;

End_Frame = 999;

Simulation_frame = min(800,End_Frame);

avi1 = aviread('vedio\\4.AVI',[Start_Frame:End_Frame]);

vedio1 = avi1.cdata;

avi2 = aviread('vedio\\4.AVI',[1:Bg_Length]);

vedio2 = avi2.cdata;

vedio = [vedio2,vedio1];

%步骤一:结合高斯混合模型的帧差法进行目标的提取

%这个步骤主要是目标提取,并获得特征序列两个部分

RR = 240;%处理视频大小

CC = 320;

K = 4; %组件

Alpha = 0.002; %适应权重速度

Rho = 0.01; %适应权重速度协方差

Deviation_sq = 49; %阈值用于查找匹配

Variance = 2; %初始方差为新放置组件

Props = 0.00001; %最初为新放置

Back_Thresh = 0.6; %体重的比例必须占背景模型

Comp_Thresh = 10; %滤掉连接组件的较小的尺寸

SHADOWS = [0.7,0.25,0.85,0.95];

frameNum_Original = length(vedio);

CRGB = 3;

D = RR * CC;

Temp = zeros(RR,CC,CRGB,frameNum_Original,'uint8');

for tt = 1:frameNum_Original

im = vediott;

Temp(:,:,:,tt) = im;

end

Temp = reshape(Temp,size(Temp,1)*size(Temp,2),size(Temp,3),size(Temp,4));

Mus = zeros(D,K,CRGB);

Mus(:,1,:) = double(Temp(:,:,1));

Mus(:,2:K,:) = 255*rand([D,K-1,CRGB]);

Sigmas = Variance*ones(D,K,CRGB);

Weights = [ones(D,1),zeros(D,K-1)];

Squared = zeros(D,K);

Gaussian = zeros(D,K);

Weight = zeros(D,K);

background = zeros(RR,CC);

Shadows = zeros(RR,CC);

Images0 = zeros(RR,CC);

Images1 = zeros(RR,CC);

Images2 = zeros(RR,CC);

background_Update = zeros(RR,CC,CRGB);

Xft = [];

Yft = [];

Timeft = [];

Wft = [];

Hft = [];

Smin = 50;

Smax = 150;

PAlpha_min = 0.001;

PAlpha_max = 0.004;

Pdf1 = 0;

Pdf2 = 0;

tao = 20;

Xx = [];

flag = 0;

Xf_save = cell(End_Frame,1);

Yf_save = cell(End_Frame,1);

Timef_save = cell(End_Frame,1);

Wf_save = cell(End_Frame,1);

Hf_save = cell(End_Frame,1);

S = 0;

load EmGmm_model.mat;

for tt = 1:Simulation_frame

disp('当前帧数');

tt

%由分析得到的反馈信号

%由分析得到的反馈信号

%Minimum Object Size 反馈,目标大小检测

if tt > 1

Ss = Smin*Pdf1 + Smax*(1-Pdf1);

else

Ss = Smin;

end

%Background Learning Rate 反馈,背景更新学习率

if tt > 1

Alpha = PAlpha_min*Pdf2 + PAlpha_max*(1-Pdf2);

else

Alpha = PAlpha_min;

end

%基于高斯混合模型的帧差分背景提取算法

%基于高斯混合模型的帧差分背景提取算法

image = Temp(:,:,tt);

for kk = 1:K

Datac = double(Temp(:,:,tt))-reshape(Mus(:,kk,:),D,CRGB);

Squared(:,kk) = sum((Datac.^ 2)./reshape(Sigmas(:,kk,:),D,CRGB),2);

end

[junk,index] = min(Squared,[],2);

Gaussian = zeros(size(Squared));

Gaussian(sub2ind(size(Squared),1:length(index),index')) = ones(D,1);

Gaussian = Gaussian&(Squared<Deviation_sq);

%参数更新

Weights = (1-Alpha).*Weights+Alpha.*Gaussian;

for kk = 1:K

pixel_matched = repmat(Gaussian(:,kk),1,CRGB);

pixel_unmatched = abs(pixel_matched-1);

Mu_kk = reshape(Mus(:,kk,:),D,CRGB);

Sigma_kk = reshape(Sigmas(:,kk,:),D,CRGB);

Mus(:,kk,:) = pixel_unmatched.*Mu_kk+pixel_matched.*(((1-Rho).*Mu_kk)+(Rho.*double(image)));

Mu_kk = reshape(Mus(:,kk,:),D,CRGB);

Sigmas(:,kk,:) = pixel_unmatched.*Sigma_kk+pixel_matched.*(((1-Rho).*Sigma_kk)+repmat((Rho.* sum((double(image)-Mu_kk).^2,2)),1,CRGB));

end

replaced_gaussian = zeros(D,K);

mismatched = find(sum(Gaussian,2)==0);

for ii = 1:length(mismatched)

[junk,index] = min(Weights(mismatched(ii),:)./sqrt(Sigmas(mismatched(ii),:,1)));

replaced_gaussian(mismatched(ii),index) = 1;

Mus(mismatched(ii),index,:) = image(mismatched(ii),:);

Sigmas(mismatched(ii),index,:) = ones(1,CRGB)*Variance;

Weights(mismatched(ii),index) = Props;

end

Weights = Weights./repmat(sum(Weights,2),1,K);

active_gaussian = Gaussian+replaced_gaussian;

%背景分割

[junk,index] = sort(Weights./sqrt(Sigmas(:,:,1)),2,'descend');

bg_gauss_good = index(:,1);

linear_index = (index-1)*D+repmat([1:D]',1,K);

weights_ordered = Weights(linear_index);

for kk = 1:K

Weight(:,kk)= sum(weights_ordered(:,1:kk),2);

end

bg_gauss(:,2:K) = Weight(:,1:(K-1)) < Back_Thresh;

bg_gauss(:,1) = 1;

bg_gauss(linear_index) = bg_gauss;

active_background_gaussian = active_gaussian & bg_gauss;

foreground_pixels = abs(sum(active_background_gaussian,2)-1);

foreground_map = reshape(sum(foreground_pixels,2),RR,CC);

Images1(:,:,tt) = foreground_map;

objects_map = zeros(size(foreground_map),'int32');

object_sizes = [];

Obj_pos = [];

new_label = 1;

%计算连通区域

[label_map,num_labels] = bwlabel(foreground_map,8);

for label = 1:num_labels

object = (label_map == label);

object_size = sum(sum(object));

if(object_size >= Comp_Thresh)

objects_map = objects_map + int32(object * new_label);

object_sizes(new_label) = object_size;

[X,Y] = meshgrid(1:CC,1:RR);

object_x = X.*object;

object_y = Y.*object;

Obj_pos(:,new_label) = [sum(sum(object_x)) / object_size;

sum(sum(object_y)) / object_size];

new_label = new_label + 1;

end

end

num_objects = new_label - 1;

%去除阴影

index = sub2ind(size(Mus),reshape(repmat([1:D],CRGB,1),D*CRGB,1),reshape(repmat(bg_gauss_good',CRGB,1),D*CRGB,1),repmat([1:CRGB]',D,1));

background = reshape(Mus(index),CRGB,D);

background = reshape(background',RR,CC,CRGB);

background = uint8(background);

background_Update = background;

background_hsv = rgb2hsv(background);

image_hsv = rgb2hsv(vediott);

for i = 1:RR

for j = 1:CC

if (objects_map(i,j))&&...

(abs(image_hsv(i,j,1)-background_hsv(i,j,1))<SHADOWS(1))&&...

(image_hsv(i,j,2)-background_hsv(i,j,2)<SHADOWS(2))&&...

(SHADOWS(3)<=image_hsv(i,j,3)/background_hsv(i,j,3)<=SHADOWS(4))

Shadows(i,j) = 1;

else

Shadows(i,j) = 0;

end

end

end

%运动目标检测,二值图

Images0 = objects_map;

objecs_adjust_map = Shadows;

Images3 = objecs_adjust_map;

%运动目标检测-最后处理结果

[res3,res4,Xf,Yf,Timef,Wf,Hf] = func_fangk(Images0,vediott,tt,Bg_Length,Ss);

%根据上面的结果,提取特征向量

%根据上面的结果,提取特征向量

Lens = 800;

if length(Xft) < Lens

Xft = [Xft;Xf];

Yft = [Yft;Yf];

Timeft= [Timeft;Timef];

Wft = [Wft;Wf];

Hft = [Hft;Hf];

else

Xft = [Xft;Xf];

Yft = [Yft;Yf];

Timeft= [Timeft;Timef];

Wft = [Wft;Wf];

Hft = [Hft;Hf];

Xft(1:end-800) = [];

Yft(1:end-800) = [];

Timeft(1:end-800)= [];

Wft(1:end-800) = [];

Hft(1:end-800) = [];

end

figure(2);

subplot(121)

imshow(vediott);

title('原始图像');

subplot(122)

imshow(uint8(res3),[]);

hold on;

if tt > Bg_Length

if length(Xft) > Lens

VX = Xft(length(Xft)-Lens:length(Xft));

VY = Yft(length(Xft)-Lens:length(Xft));

plot(Xft(length(Xft)-Lens:length(Xft)),Yft(length(Xft)-Lens:length(Xft)),'c.');

else

VX = Xft;

VY = Yft;

plot(Xft,Yft,'c.');

end

end

title('运动目标检测结果');

%计算Pdf1

tmps = [Wf,Hf];

[Ns,Ds] = size(tmps);

Pdf1s = min(calc_prob(tmps,Ns,2,model.Miu(:,4:5),model.Sigma(4:5,4:5,:),Ds));

Pdf1 = mean(Pdf1s);

%计算Pdf2

if mod(tt,Lens) <= 20

tmps = 0;

else

tmps = sqrt((Xft(mod(tt,Lens))-Xft(mod(tt,Lens)-20))^2+(Yft(mod(tt,Lens))-Yft(mod(tt,Lens)-20))^2)/20;

end

[Ns,Ds] = size(tmps);

Pdf2s = min(calc_prob(tmps,Ns,2,model.Miu(:,5),model.Sigma(5,5,:),Ds));

Pdf2 = mean(Pdf2s);

pause(0.01);

%将数据保存,用于后期处理

Xf_savett= Xf;

Yf_savett= Yf;

Timef_savett= Timef;

Wf_savett= Wf;

Hf_savett= Hf;

Pimagestt= Images0;

end

save TEMP\\R4.mat Xf_save Yf_save Timef_save Wf_save Hf_save Pimages

clc;

clear;

close all;

warning off;

addpath 'func\\'

%通过前面的目标跟踪,这里我们主要对最后的结果进行显示

%本质上C这个程序是B里面的,但是由于涉及到的变量较多,如果再增加一些显示的变量,仿真及其缓慢,而且会出现OUT OF Memory

%所以这里单独对结论进行显示

%初始背景的提取

%注意,原始的视频非常大,这里需要截取其中需要处理的帧数

Bg_Length = 20;

Start_Frame = Bg_Length+1;

End_Frame = 999;

Simulation_frame = min(800,End_Frame);

load EmGmm_model.mat;

load TEMP\\R4.mat

avi1 = aviread('vedio\\4.AVI',[Start_Frame:End_Frame]);

vedio1 = avi1.cdata;

avi2 = aviread('vedio\\4.AVI',[1:Bg_Length]);

vedio2 = avi2.cdata;

vedio = [vedio2,vedio1];

tao = 20;

X_samp = [];

Y_samp = [];

T_samp = [];

W_samp = [];

H_samp = [];

X_samps = [];

Y_samps = [];

T_samps = [];

W_samps = [];

H_samps = [];

X_samps2 = [];

Y_samps2 = [];

T_samps2 = [];

W_samps2 = [];

H_samps2 = [];

for tt = 1:Simulation_frame;

tt

Images0 = Pimagestt;

[res3,res4,Xf,Yf,Timef,Wf,Hf] = func_fangk2(Images0,vediott,tt,Bg_Length);

%下面开始进行异常类型识别

%重新采样

X_sampstt = Xf;

Y_sampstt = Yf;

T_sampstt = Timef;

W_sampstt = Wf;

H_sampstt = Hf;

if tt <= tao;

X_samp = Xf;

Y_samp = Yf;

T_samp = Timef;

W_samp = Wf;

H_samp = Hf;

else

X_samp = Xf;

Y_samp = Yf;

T_samp = 0.001*ones(length(X_samp),1);

Ls = min(length(X_sampstt),length(X_sampstt-tao));

for j = 1:Ls

T_samp(j,1) = sqrt((X_sampstt(j) - X_sampstt-tao(j))^2 + (Y_sampstt(j) - Y_sampstt-tao(j))^2)/tao;

end

W_samp = Wf;

H_samp = Hf;

end

X_samps2 = [X_samps2;X_samp];

Y_samps2 = [Y_samps2;Y_samp];

T_samps2 = [T_samps2;T_samp];

W_samps2 = [W_samps2;W_samp];

H_samps2 = [H_samps2;H_samp];

end

feature = [X_samps2,Y_samps2,T_samps2,W_samps2,H_samps2];

[Ns,Ds] = size(feature);

Px = calc_prob(feature,Ns,2,model.Miu,model.Sigma,Ds);

[~, cls_ind] = max(Px',[],1);

%最后完异常检测效果

XV = [];

YV = [];

for tt = 1:1

tt

Images0 = Pimagestt;

[res3,res4,Xf,Yf,Timef,Wf,Hf] = func_fangk2(Images0,vediott,tt,Bg_Length);

figure(1);

subplot(121)

imshow(vediott);

title('原始图像');

subplot(122)

imshow(uint8(res3),[]);

hold on;

for jj = 1:length(cls_ind);

if length(find(cls_ind==1)) > 1.2*length(find(cls_ind==2))

if cls_ind(jj)==1

plot(X_samps2(jj),Y_samps2(jj),'b.');

XV(jj,1) = [X_samps2(jj)];

YV(jj,1) = [Y_samps2(jj)];

XV(jj,2) = [1];

YV(jj,2) = [1];

else

plot(X_samps2(jj),Y_samps2(jj),'r.');

XV(jj,1) = [X_samps2(jj)];

YV(jj,1) = [Y_samps2(jj)];

XV(jj,2) = [2];

YV(jj,2) = [2];

end

end

if length(find(cls_ind==1)) <= 1.2*length(find(cls_ind==2))

if cls_ind(jj)==1

plot(X_samps2(jj),Y_samps2(jj),'r.');

XV(jj,1) = [X_samps2(jj)];

YV(jj,1) = [Y_samps2(jj)];

XV(jj,2) = [2];

YV(jj,2) = [2];

else

plot(X_samps2(jj),Y_samps2(jj),'b.');

XV(jj,1) = [X_samps2(jj)];

YV(jj,1) = [Y_samps2(jj)];

XV(jj,2) = [1];

YV(jj,2) = [1];

end

end

hold on;

end

title('异常行为识别');

pause(0.0025);

end

%异常检测动画效果,将系统最后处理的结果进行显示

S1 = 0;

E1 = 0;

for tt = 1:Simulation_frame;

tt

Images0 = Pimagestt;

[res3,res4,Xf,Yf,Timef,Wf,Hf] = func_fangk2(Images0,vediott,tt,Bg_Length);

figure(2);

subplot(121)

imshow(vediott);

title('原始图像');

subplot(122)

imshow(uint8(res3),[]);

hold on;

S1 = E1 + 1;

E1 = E1 + length(Xf);

Xtmp = XV(1:E1,1);

Ytmp = YV(1:E1,1);

Flag = XV(1:E1,2);

for j = 1:length(Flag)

if Flag(j) == 1

plot(Xtmp(j),Ytmp(j),'b.');

hold on;

end

if Flag(j) == 2

plot(Xtmp(j),Ytmp(j),'r.');

hold on;

end

hold on;

end

hold on;

title('异常行为检测');

pause(0.01);

end

3.仿真结果

A10-19

以上是关于EM+GMM基于EM和GMM算法的目标轨迹跟踪和异常行为识别matlab仿真的主要内容,如果未能解决你的问题,请参考以下文章