Kubernetes: About CSRs

Posted saynaihe

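Introduction:

Today a colleague asked me why kubectl get csr prints nothing on his Kubernetes cluster. I tried it on my own cluster, and indeed there were no CSRs. So what is a CSR? Must kubectl get csr always return something? And when do CSRs appear (meaning the ones the system creates by default, not ones you create yourself)?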

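1. Kubernetes and CSRs

1. What is a CSR?

**CSR** stands for CertificateSigningRequest. For the precise definition, see the official Kubernetes documentation: https://kubernetes.io/zh/docs/reference/access-authn-authz/certificate-signing-requests/

2. Must a Kubernetes cluster have CSRs? When do they appear?

Note: the CSRs discussed here are the ones created by default, not ones created manually.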

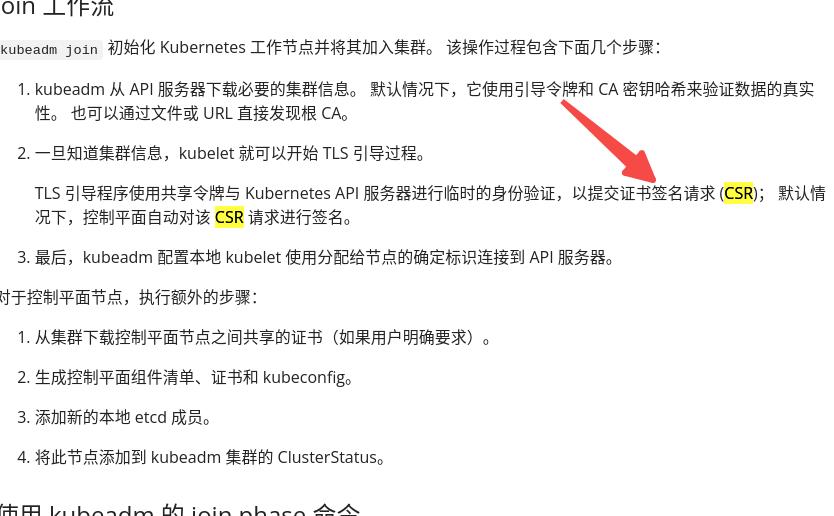

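See the official documentation: https://kubernetes.io/zh/docs/reference/setup-tools/kubeadm/kubeadm-join/

The conclusion from the documentation: a CSR is generated when a node joins a Kubernetes cluster.

First, to be clear about what join means here (a worker node joining the Kubernetes cluster), there are two common methods:

1. Use a shared token and the API server's IP address, in this form: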

kubeadm join --discovery-token abcdef.1234567890abcdef 1.2.3.4:6443

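2. Note, however, that this is not the only way to join a cluster:

You can provide a file containing a subset of a standard kubeconfig file. The file can be local or downloaded via an HTTPS URL:

kubeadm join --discovery-file path/to/file.conf or kubeadm join --discovery-file https://url/file.conf

Most users join with the first method. I only noticed the second one while reading the documentation today, so I am noting it here.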
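For reference, a discovery file can be quite small. The sketch below writes one from a server URL and a base64-encoded CA certificate. The helper name write_discovery_file and the cluster name are my own placeholders, and the layout is an assumption modeled on the kubeconfig that kubeadm publishes in the cluster-info ConfigMap, so check it against your kubeadm version before relying on it.

```shell
# write_discovery_file SERVER_URL CA_DATA_BASE64 OUT_FILE
# Hypothetical helper: writes a kubeconfig subset with only the cluster entry.
write_discovery_file() {
  server="$1"
  ca_data="$2"
  out_file="$3"
  cat > "$out_file" <<EOF
apiVersion: v1
kind: Config
clusters:
- cluster:
    certificate-authority-data: ${ca_data}
    server: ${server}
  name: kubernetes
EOF
}
```

On the master this could be called as write_discovery_file https://1.2.3.4:6443 "$(base64 -w0 < /etc/kubernetes/pki/ca.crt)" file.conf. A file like this carries no credentials, so the joining node still needs a bootstrap token (kubeadm join has a --tls-bootstrap-token flag for that).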

3. Summary

A CSR is generated when a node joins a Kubernetes cluster. Let's see it in practice.

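2. Demonstrating CSR generation in a real environment

Two Rocky Linux 8.5 machines serve as the example. This is only a simple demonstration and not meant for production (the systems are not tuned; the goal is just to run a join).

Much of what follows exists only to get the test running!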

| Hostname | IP | Role |
|---|---|---|
| k8s-master-01 | 10.0.4.2 | master node |
| k8s-work-01 | 10.0.4.36 | worker node |

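1. Building a simple Kubernetes cluster on Rocky with kubeadm

1. Basic system settings

I skip the kernel upgrade and other tuning, since the goal is only to demonstrate CSRs. Just run an update: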

[root@k8s-master-01 ~]# yum update -y

and

[root@k8s-work-01 ~]# yum update -y

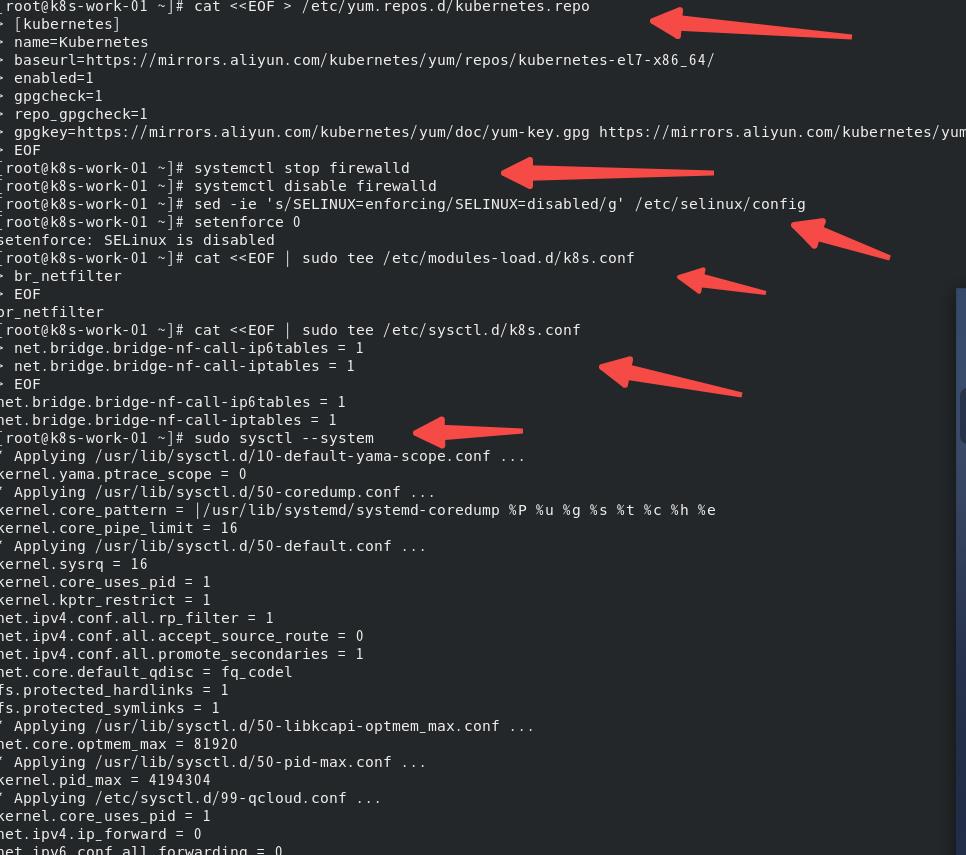

Add the yum repository, disable the firewall and SELinux, set up br_netfilter, and so on.

### Add the Kubernetes Aliyun repository

[root@k8s-master-01 ~]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

# Disable the firewall

[root@k8s-master-01 ~]# systemctl stop firewalld

[root@k8s-master-01 ~]# systemctl disable firewalld

# Disable SELinux

[root@k8s-master-01 ~]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

[root@k8s-master-01 ~]# setenforce 0

# Let iptables see bridged traffic

[root@k8s-master-01 ~]# cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf

br_netfilter

EOF

[root@k8s-master-01 ~]# cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

[root@k8s-master-01 ~]# sudo sysctl --system

The screenshot shows these steps on k8s-work-01. Both servers need these system settings.

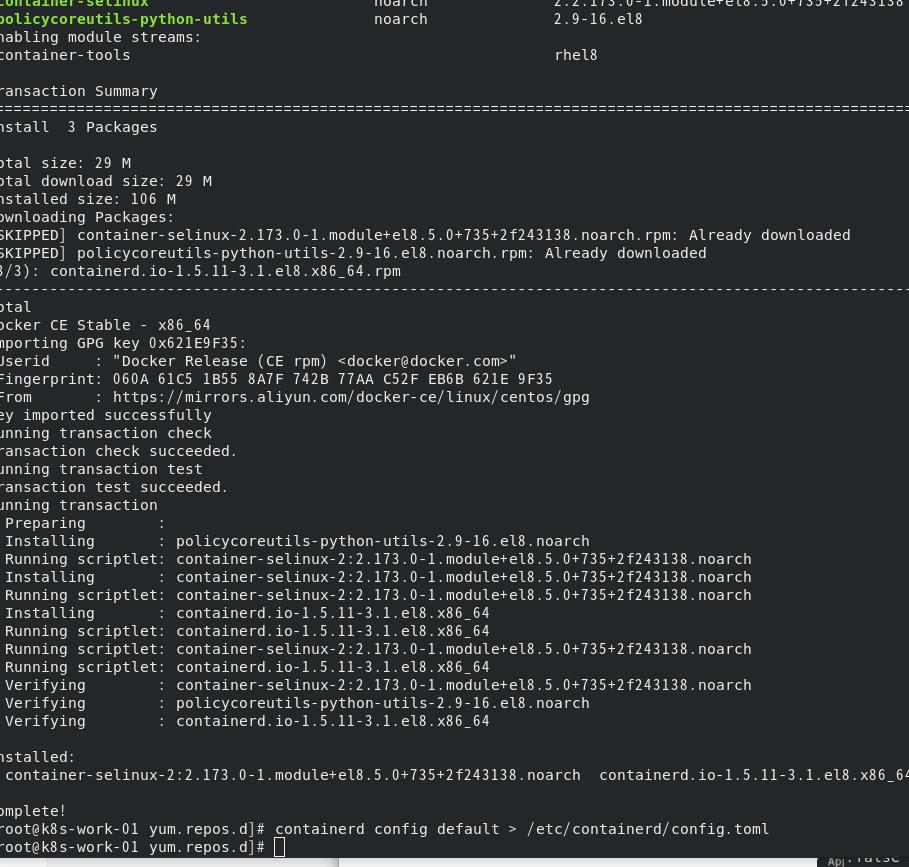

2. Install and configure containerd

1. Install containerd

[root@k8s-work-01 ~]# dnf install dnf-utils device-mapper-persistent-data lvm2

[root@k8s-work-01 ~]# yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Failed to set locale, defaulting to C.UTF-8

Adding repo from: http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@k8s-work-01 ~]# sudo yum update -y && sudo yum install -y containerd.io

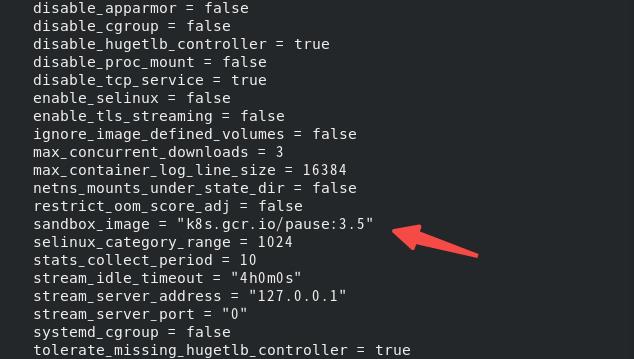

2. Generate the config file and change the sandbox_image registry

Generate the config file and point the pause image at the Aliyun registry

[root@k8s-work-01 yum.repos.d]# containerd config default > /etc/containerd/config.toml

Change the registry of the pause container image to registry.aliyuncs.com/google_containers
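This edit can also be scripted. A minimal sketch, assuming the generated config.toml contains a line of the form sandbox_image = "<registry>/pause:<tag>" (the default registry and tag differ between containerd versions) and that GNU sed is available; set_sandbox_registry is a helper name I made up:

```shell
# set_sandbox_registry CONFIG_FILE REGISTRY
# Hypothetical helper: swaps only the registry prefix of sandbox_image
# and keeps whatever pause tag the generated config already uses.
set_sandbox_registry() {
  config_file="$1"
  registry="$2"
  sed -i "s#^\([[:space:]]*sandbox_image = \"\)[^\"]*/\(pause:[^\"]*\"\)#\1${registry}/\2#" "$config_file"
}
```

For the setup here the call would be set_sandbox_registry /etc/containerd/config.toml registry.aliyuncs.com/google_containers, followed by the service restart.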

3. Reload the service

[root@k8s-work-01 yum.repos.d]# systemctl daemon-reload

[root@k8s-work-01 yum.repos.d]# systemctl restart containerd

[root@k8s-work-01 yum.repos.d]# systemctl status containerd

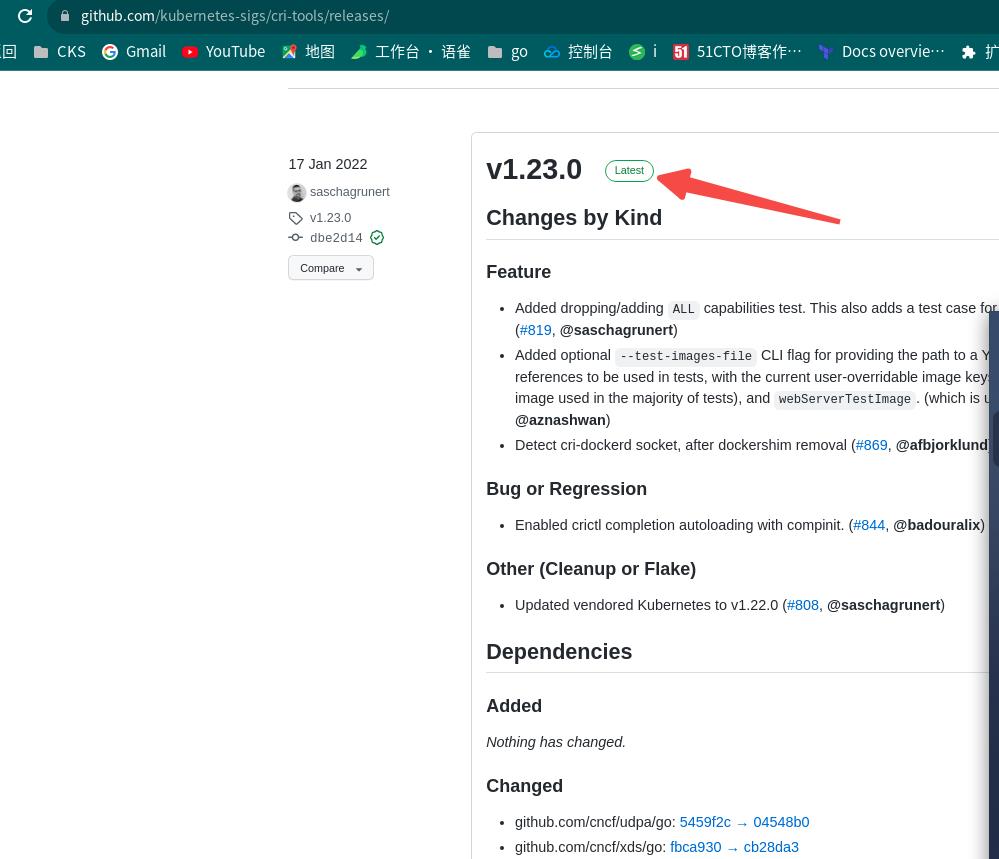

4. Configure the CRI client crictl

https://github.com/kubernetes-sigs/cri-tools/releases/

[root@k8s-master-01 ~]# VERSION="v1.23.0"

[root@k8s-master-01 ~]# wget https://github.com/kubernetes-sigs/cri-tools/releases/download/$VERSION/crictl-$VERSION-linux-amd64.tar.gz

[root@k8s-master-01 ~]# sudo tar zxvf crictl-$VERSION-linux-amd64.tar.gz -C /usr/local/bin

crictl

[root@k8s-master-01 ~]# rm -f crictl-$VERSION-linux-amd64.tar.gz

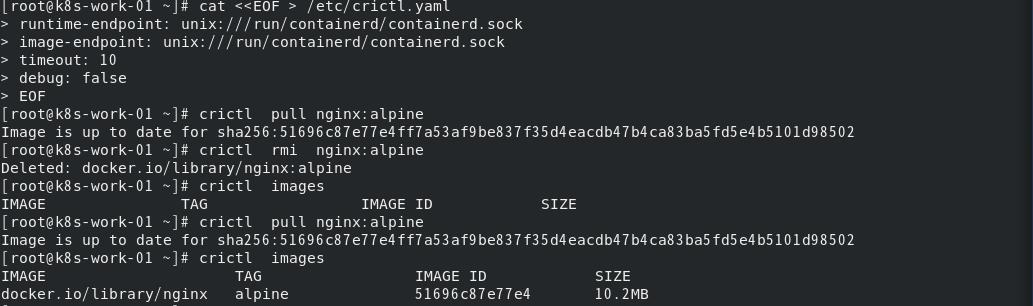

[root@k8s-work-01 ~]# cat <<EOF > /etc/crictl.yaml

> runtime-endpoint: unix:///run/containerd/containerd.sock

> image-endpoint: unix:///run/containerd/containerd.sock

> timeout: 10

> debug: false

> EOF

[root@k8s-work-01 ~]# crictl pull nginx:alpine

Image is up to date for sha256:51696c87e77e4ff7a53af9be837f35d4eacdb47b4ca83ba5fd5e4b5101d98502

[root@k8s-work-01 ~]# crictl images

IMAGE TAG IMAGE ID SIZE

docker.io/library/nginx alpine 51696c87e77e4 10.2MB

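Note: again, install it on both servers. The crictl download may be slow if GitHub is blocked or throttled; find your own workaround, I won't go into it here!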

3. Install kubeadm

# List all installable versions

# yum list --showduplicates kubeadm --disableexcludes=kubernetes

# Install with the command below (this installed the latest version at the time, 1.23.5); a specific version can also be pinned

# yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

Edit the kubelet configuration

vi /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS="--cgroup-driver=systemd --container-runtime=remote --container-runtime-endpoint=/run/containerd/containerd.sock"

[root@k8s-master-01 ~]# systemctl enable kubelet.service

Created symlink /etc/systemd/system/multi-user.target.wants/kubelet.service → /usr/lib/systemd/system/kubelet.service.

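4. Initialize k8s-master-01

1. Generate the configuration file

[root@k8s-master-01 ~]# kubeadm config print init-defaults > config.yaml

2. Edit the configuration file

advertiseAddress: change to the IP of k8s-master-01

criSocket: /run/containerd/containerd.sock (note: the default is still the Docker socket)

imageRepository: registry.aliyuncs.com/google_containers (note: the default is the gcr registry)

kubernetesVersion: 1.23.5 (note: the default was 1.23.0; I changed it directly)

podSubnet: 10.244.0.0/16 (note: absent by default; added to match flannel so that flannel itself needs no changes)

Note: name: node should be changed to the hostname; otherwise the master node will later show up as node!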
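The same edits can be applied with sed instead of an editor. This is only a sketch: it assumes the key layout and two-space indentation that kubeadm 1.23 prints for init-defaults, relies on GNU sed for the \n in the last expression, and patch_kubeadm_config is a name I invented. Run it once on a freshly generated file, since a second run would add podSubnet again.

```shell
# patch_kubeadm_config CONFIG_FILE ADVERTISE_IP NODE_NAME
# Hypothetical helper: applies the edits listed above to a fresh init-defaults file.
patch_kubeadm_config() {
  config_file="$1"
  advertise_ip="$2"
  node_name="$3"
  sed -i \
    -e "s#^\(  advertiseAddress:\).*#\1 ${advertise_ip}#" \
    -e 's#^\(  criSocket:\).*#\1 /run/containerd/containerd.sock#' \
    -e "s#^\(  name:\).*#\1 ${node_name}#" \
    -e 's#^\(imageRepository:\).*#\1 registry.aliyuncs.com/google_containers#' \
    -e 's#^\(kubernetesVersion:\).*#\1 1.23.5#' \
    -e 's#^  serviceSubnet:.*#&\n  podSubnet: 10.244.0.0/16#' \
    "$config_file"
}
```

For this environment: patch_kubeadm_config config.yaml 10.0.4.2 k8s-master-01. It is worth diffing the result against the original before running kubeadm init.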

3. kubeadm init

[root@k8s-master-01 ~]# kubeadm init --config=config.yaml

This fails with the following error:

[ERROR FileContent--proc-sys-net-bridge-bridge-nf-call-iptables]: /proc/sys/net/bridge/bridge-nf-call-iptables does not exist

The entry in /etc/modules-load.d is only read at boot, so br_netfilter was most likely not loaded yet. Run the following on both servers:

[root@k8s-master-01 ~]# modprobe br_netfilter

[root@k8s-master-01 ~]# echo 1 > /proc/sys/net/bridge/bridge-nf-call-iptables

[root@k8s-master-01 ~]# echo 1 > /proc/sys/net/ipv4/ip_forward
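Before retrying, it can help to confirm that both kernel settings are really in place. A small sketch of such a check; check_bridge_sysctls is a hypothetical helper, and the optional argument exists only so the function can be pointed at a directory other than /proc when trying it out:

```shell
# check_bridge_sysctls [PROC_ROOT]
# Hypothetical helper: reports whether the two settings kubeadm complains
# about exist and are set to 1. Returns non-zero if either one is not.
check_bridge_sysctls() {
  proc_root="${1:-/proc}"
  rc=0
  for key in net/bridge/bridge-nf-call-iptables net/ipv4/ip_forward; do
    file="$proc_root/sys/$key"
    if [ ! -r "$file" ]; then
      echo "missing: $key (is br_netfilter loaded?)"
      rc=1
    elif [ "$(cat "$file")" != "1" ]; then
      echo "disabled: $key"
      rc=1
    else
      echo "ok: $key"
    fi
  done
  return "$rc"
}
```

Calling check_bridge_sysctls with no argument reads the live values under /proc/sys.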

Run init again

[root@k8s-master-01 ~]# kubeadm init --config=config.yaml

[root@k8s-master-01 ~]# mkdir -p $HOME/.kube

[root@k8s-master-01 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-master-01 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@k8s-master-01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-work-01 NotReady <none> 46s v1.23.5

5. Join the worker node to the cluster

[root@k8s-work-01 ~]# kubeadm join 10.0.4.2:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:abdffa455bed6eeda802563b826d042e6e855b30d2f2dbc9b6e0cd4515dfe1e2

[root@k8s-master-01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-work-01 NotReady <none> 96m v1.23.5

node NotReady control-plane,master 96m v1.23.5

Note: why the master node shows up as node was explained earlier.

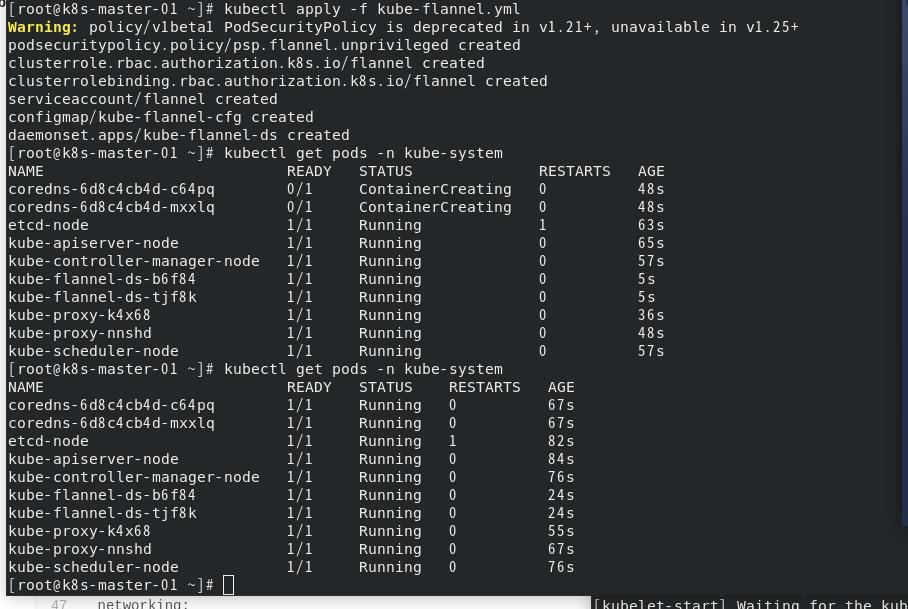

6. Install the flannel network plugin

[root@k8s-master-01 ~]# wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

[root@k8s-master-01 ~]# kubectl apply -f kube-flannel.yml

The default Network in the flannel manifest is 10.244.0.0/16. It must match podSubnet in the kubeadm init configuration file!
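A quick way to compare the two values before applying the manifest. This is a sketch with helper names of my own; it assumes the manifest contains a "Network": "..." line in its net-conf.json section and that config.yaml has a podSubnet: key, and it only looks at the first match in each file:

```shell
# Hypothetical helpers for comparing the flannel network with podSubnet.

# Prints the first "Network" value found in the flannel manifest.
flannel_network() {
  sed -n 's/.*"Network": *"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# Prints the first podSubnet value found in the kubeadm config.
kubeadm_pod_subnet() {
  sed -n 's/^ *podSubnet: *//p' "$1" | head -n 1
}

# subnets_match FLANNEL_MANIFEST KUBEADM_CONFIG
# Succeeds only if both values were found and are identical.
subnets_match() {
  flannel_net="$(flannel_network "$1")"
  pod_net="$(kubeadm_pod_subnet "$2")"
  [ -n "$flannel_net" ] && [ "$flannel_net" = "$pod_net" ]
}
```

Usage: subnets_match kube-flannel.yml config.yaml && echo "subnets match".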

[root@k8s-master-01 ~]# kubectl get pods -n kube-system

7. Check whether any CSRs were generated:

[root@k8s-master-01 ~]# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR REQUESTEDDURATION CONDITION

csr-8jq54 8m30s kubernetes.io/kube-apiserver-client-kubelet system:bootstrap:abcdef <none> Approved,Issued

csr-qpsst 8m54s kubernetes.io/kube-apiserver-client-kubelet system:node:node <none> Approved,Issued

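Note the AGE column: CSR objects have a limited lifetime. According to the official documentation, approved, denied, and failed requests are garbage-collected after about an hour and pending ones after about 24 hours, so an empty kubectl get csr on a long-running cluster is normal.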

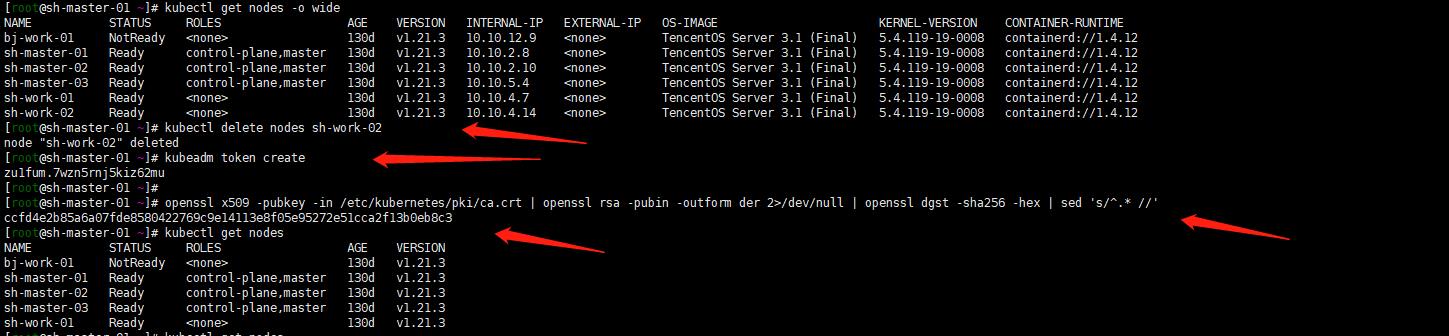
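To pick out just the CSRs that kubelets create when joining, the table output can be filtered by signer. A small sketch; the function name is mine, and it assumes the default column order shown in the output above (NAME, AGE, SIGNERNAME, REQUESTOR, ...):

```shell
# kubelet_client_csrs
# Hypothetical helper: reads `kubectl get csr` table output on stdin and
# prints NAME and REQUESTOR for CSRs from the kubelet client signer.
kubelet_client_csrs() {
  awk 'NR > 1 && $3 == "kubernetes.io/kube-apiserver-client-kubelet" { print $1, $4 }'
}
```

Usage: kubectl get csr | kubelet_client_csrs. A requestor of the form system:bootstrap:<token-id> points to a node that joined with that bootstrap token.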

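2. CSR generation when scaling out an existing kubeadm cluster

I used an existing cluster, the one from my earlier post on building a Kubernetes cluster over Tencent Cloud Connect Network: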

[root@sh-master-01 ~]# kubectl get csr

No resources found

[root@sh-master-01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

bj-work-01 NotReady <none> 130d v1.21.3

sh-master-01 Ready control-plane,master 130d v1.21.3

sh-master-02 Ready control-plane,master 130d v1.21.3

sh-master-03 Ready control-plane,master 130d v1.21.3

sh-work-01 Ready <none> 130d v1.21.3

sh-work-02 Ready <none> 130d v1.21.3

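The plan: remove sh-work-02 from the cluster, generate a bootstrap token and the SHA-256 hash of the CA certificate on the master node, then have sh-work-02 rejoin the cluster.

Reference: my post on scaling out a Kubernetes cluster, written years ago. I simply followed it as is.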

[root@sh-master-01 ~]# kubectl delete nodes sh-work-02

node "sh-work-02" deleted

[root@sh-master-01 ~]# kubeadm token create

zu1fum.7wzn5rnj5kiz62mu

[root@sh-master-01 ~]#

[root@sh-master-01 ~]# openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

ccfd4e2b85a6a07fde8580422769c9e14113e8f05e95272e51cca2f13b0eb8c3

[root@sh-master-01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

bj-work-01 NotReady <none> 130d v1.21.3

sh-master-01 Ready control-plane,master 130d v1.21.3

sh-master-02 Ready control-plane,master 130d v1.21.3

sh-master-03 Ready control-plane,master 130d v1.21.3

sh-work-01 Ready <none> 130d v1.21.3

On sh-work-02:

[root@sh-work-02 ~]# kubeadm reset

[root@sh-work-02 ~]# reboot

[root@sh-work-02 ~]# kubeadm join 10.10.2.4:6443 --token zu1fum.7wzn5rnj5kiz62mu --discovery-token-ca-cert-hash sha256:ccfd4e2b85a6a07fde8580422769c9e14113e8f05e95272e51cca2f13b0eb8c3
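The join command is just three pieces put together: the API server endpoint, the token from kubeadm token create, and the CA hash from the openssl pipeline. A trivial sketch that assembles it (build_join_command is a made-up helper; kubeadm can also print the whole line itself with kubeadm token create --print-join-command):

```shell
# build_join_command ENDPOINT TOKEN CA_HASH
# Hypothetical helper: prints the kubeadm join line for a worker node.
build_join_command() {
  endpoint="$1"
  token="$2"
  ca_hash="$3"
  printf 'kubeadm join %s --token %s --discovery-token-ca-cert-hash sha256:%s\n' \
    "$endpoint" "$token" "$ca_hash"
}
```

The hash is passed without the sha256: prefix, exactly as the openssl pipeline prints it.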

On sh-master-01:

[root@sh-master-01 ~]# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

csr-lz6wl 97s kubernetes.io/kube-apiserver-client-kubelet system:bootstrap:zu1fum Approved,Issued

3. Create a TLS certificate and approve the certificate signing request

See the official documentation: Manage TLS Certificates in a Cluster

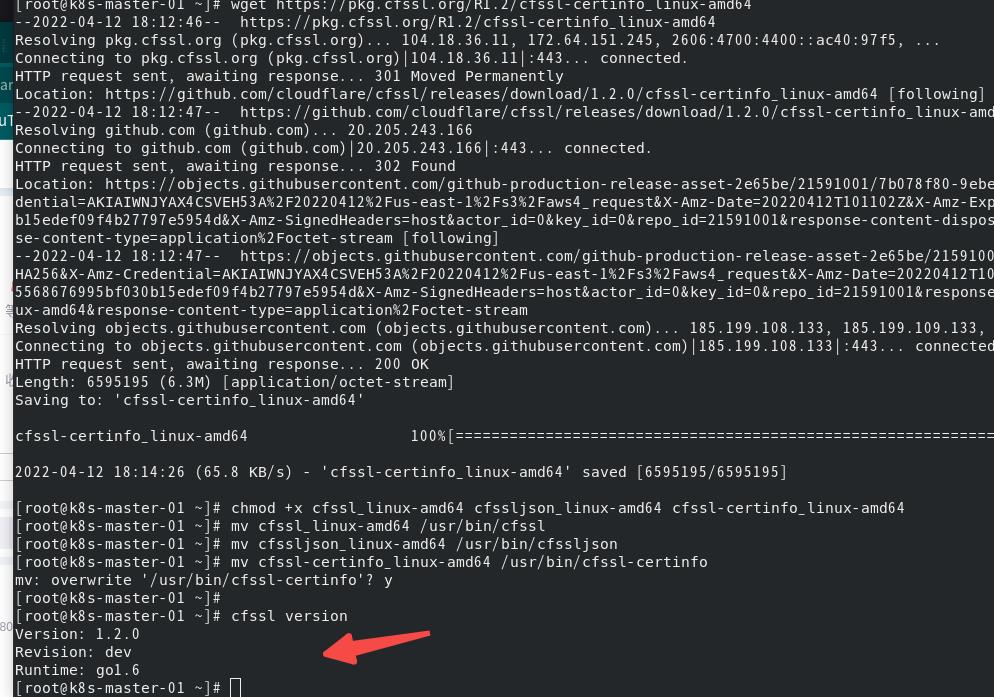

[root@k8s-master-01 ~]# wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

[root@k8s-master-01 ~]# wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

[root@k8s-master-01 ~]# wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

[root@k8s-master-01 ~]# chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64

[root@k8s-master-01 ~]# mv cfssl_linux-amd64 /usr/bin/cfssl

[root@k8s-master-01 ~]# mv cfssljson_linux-amd64 /usr/bin/cfssljson

[root@k8s-master-01 ~]# mv cfssl-certinfo_linux-amd64 /usr/bin/cfssl-certinfo

[root@k8s-master-01 ~]# cfssl version

Create the required pod, service, and namespace

[root@k8s-master-01 ~]# kubectl create ns my-namespace

namespace/my-namespace created

[root@k8s-master-01 ~]# kubectl run my-pod --image=nginx -n my-namespace

pod/my-pod created

kubectl apply -f service.yaml

[root@k8s-master-01 ~]# cat service.yaml

apiVersion: v1
kind: Service
metadata:
  name: my-svc
  namespace: my-namespace
  labels:
    run: my-pod
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    run: my-pod

[root@k8s-master-01 ~]# kubectl get all -n my-namespace -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/my-pod 1/1 Running 0 51m 10.244.1.2 k8s-work-01 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/my-svc ClusterIP 10.109.248.68 <none> 80/TCP 7m6s run=my-pod

Create the certificate signing request

Replace the domain names and IPs with those of your own service and pod

cat <<EOF | cfssl genkey - | cfssljson -bare server
{
  "hosts": [
    "my-svc.my-namespace.svc.cluster.local",
    "my-pod.my-namespace.pod.cluster.local",
    "10.244.1.2",
    "10.109.248.68"
  ],
  "CN": "system:node:my-pod.my-namespace.pod.cluster.local",
  "key": {
    "algo": "ecdsa",
    "size": 256
  },
  "names": [
    {
      "O": "system:nodes"
    }
  ]
}
EOF

Create a CertificateSigningRequest object and send it to the Kubernetes API

cat <<EOF | kubectl apply -f -
apiVersion: certificates.k8s.io/v1
kind: CertificateSigningRequest
metadata:
  name: my-svc.my-namespace
spec:
  request: $(cat server.csr | base64 | tr -d '\n')
  signerName: kubernetes.io/kubelet-serving
  usages:
  - digital signature
  - key encipherment
  - server auth
EOF

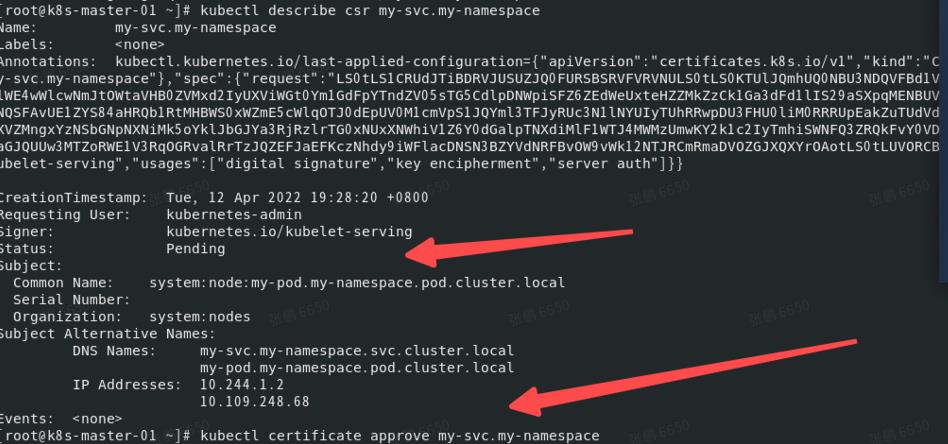

[root@k8s-master-01 ~]# kubectl describe csr my-svc.my-namespace

Approve the certificate signing request

[root@k8s-master-01 ~]# kubectl certificate approve my-svc.my-namespace

certificatesigningrequest.certificates.k8s.io/my-svc.my-namespace approved

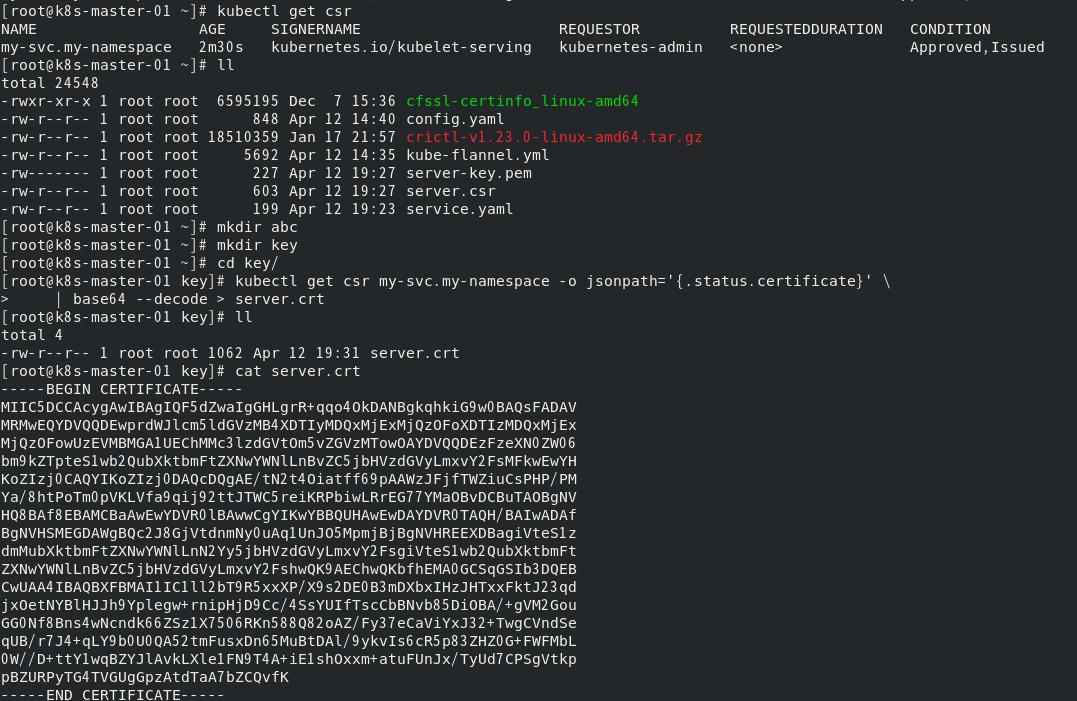

[root@k8s-master-01 ~]# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR REQUESTEDDURATION CONDITION

my-svc.my-namespace 87s kubernetes.io/kubelet-serving kubernetes-admin <none> Approved,Issued

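Download the certificate and use it

[root@k8s-master-01 key]# kubectl get csr

[root@k8s-master-01 key]# kubectl get csr my-svc.my-namespace -o jsonpath='{.status.certificate}' \
| base64 --decode > server.crt

Use server.crt and server-key.pem as the key pair to start an HTTPS server? I have no such use case... These days certificates are terminated outside the cluster: in my post on installing Traefik with Kubernetes 1.20.5 on Tencent Cloud they are mounted on the SLB, so this scenario is of no use to me!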

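Summary:

- Built a kubeadm 1.23 cluster on Rocky

- Watched CSRs being generated. An empty kubectl get csr does not mean something is wrong

- Tried signing and approving an in-cluster TLS certificate (though I don't use it in practice)

- Learned about another way to run kubeadm join: kubeadm join --discovery-file. Honestly, I had never read that part carefully before.

- CSRs can of course also be used when creating and authorizing users: https://kubernetes.io/zh/docs/reference/access-authn-authz/certificate-signing-requests/#authorization. Previously I did it the way described in my post on kubeconfig and authorizing ordinary users on a Kubernetes cluster.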
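For the user case in the last bullet, the CSR object looks much like the one used above, except for the signer and the usage. A sketch that renders such a manifest from an existing PKCS#10 file; render_client_csr is a hypothetical helper, and the signer name and the client auth usage follow the official CSR documentation for client certificates:

```shell
# render_client_csr CSR_NAME CSR_FILE
# Hypothetical helper: prints a CertificateSigningRequest manifest that asks
# the kube-apiserver-client signer for a client certificate.
render_client_csr() {
  csr_name="$1"
  csr_file="$2"
  # The request field is the base64 of the PEM file, on a single line.
  request="$(base64 < "$csr_file" | tr -d '\n')"
  cat <<EOF
apiVersion: certificates.k8s.io/v1
kind: CertificateSigningRequest
metadata:
  name: ${csr_name}
spec:
  request: ${request}
  signerName: kubernetes.io/kube-apiserver-client
  usages:
  - client auth
EOF
}
```

Usage would be along the lines of render_client_csr myuser myuser.csr | kubectl apply -f -, followed by kubectl certificate approve myuser; the user still needs RBAC bindings before the certificate grants any access.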
