Installing a Highly Available k8s Cluster with kubespray

Posted 琦彦


Quickly Installing a Highly Available k8s Cluster with kubespray

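Introduction to Kubespray

Kubespray started as a Kubernetes incubator project (it now lives under kubernetes-sigs). Its goal is to provide a production-ready Kubernetes deployment. The project uses Ansible playbooks to define the tasks for preparing the system and deploying the Kubernetes cluster, and has the following characteristics:

- Can be deployed on AWS, GCE, Azure, OpenStack, and bare metal.

- Deploys highly available Kubernetes clusters.

- Composable: you choose the network plugin to deploy (flannel, calico, canal, weave).

- Supports multiple Linux distributions (CoreOS, Debian Jessie, Ubuntu 16.04, CentOS/RHEL 7).

Kubespray consists of a set of Ansible playbooks, a command-line tool for generating the inventory, and the domain knowledge needed for OS/Kubernetes cluster configuration-management tasks.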

Environment

| OS | Hostname / IP address | Role | Kernel version |
|---|---|---|---|
| CentOS 7.6.1810 | master1 / 10.0.41.95 | master && node | 5.4 |
| CentOS 7.6.1810 | master2 / 10.0.41.96 | master && node | 5.4 |

Tools

| Tool | Version | Source | Installed on |
|---|---|---|---|
| ansible | 2.9.16 | Aliyun epel.repo | master1 |
| kubespray | 2.15.0 | https://github.com/kubernetes-sigs/kubespray | master1 |
| chronyd | 3.2 | shipped with the OS | master1 && master2 |
| Aliyun yum mirror | - | https://developer.aliyun.com/mirror/ | master1 && master2 |

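Environment preparation (required on all machines)

1. Disable the firewall and SELinux

## Firewall

systemctl stop firewalld.service

systemctl disable firewalld.service

# Disable SELinux

# Disable SELinux temporarily (until the next reboot)

setenforce 0

# Disable it permanently by editing /etc/selinux/config (/etc/sysconfig/selinux is a symlink to it)

sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

2. Edit the /etc/hosts file

## Add name resolution for every host to /etc/hosts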

10.0.41.95 master1

10.0.41.96 master2
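Appending these entries by hand works, but rerunning a setup script can duplicate them. The following is a minimal sketch of an idempotent variant; `add_host_entry` is a hypothetical helper name, and the demo writes to a temporary file rather than /etc/hosts.

```shell
#!/usr/bin/env bash
# Append "IP NAME" to a hosts-style file only if NAME is not already mapped.
# add_host_entry is a hypothetical helper, not part of kubespray.
add_host_entry() {
  local hosts_file="$1" ip="$2" name="$3"
  # Look for NAME as a whole whitespace-delimited field on a non-comment line.
  if ! grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$hosts_file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
  fi
}

# Demo on a temporary file; on a real node the target would be /etc/hosts.
demo_hosts="$(mktemp)"
add_host_entry "$demo_hosts" 10.0.41.95 master1
add_host_entry "$demo_hosts" 10.0.41.96 master2
add_host_entry "$demo_hosts" 10.0.41.96 master2   # second call changes nothing
cat "$demo_hosts"
```

On a real machine the same calls would be run as root with /etc/hosts as the first argument.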

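3. Passwordless SSH

Set up SSH key authentication. Make sure the local machine can also SSH to itself, otherwise the deployment below will fail.

## Generate a key pair

# Press Enter at every prompt; no other input is needed

ssh-keygen -t rsa

## Copy the public key to every host (including this one)

ssh-copy-id master1

ssh-copy-id master2

## Test that login works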

ssh master2

4. Upgrade the kernel to 5.4

Check the current kernel version

[root@master1 data]# uname -r

3.10.0-957.el7.x86_64

Set up the ELRepo repository

## Import the public key

rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

## Install the yum repository

yum install https://www.elrepo.org/elrepo-release-7.el7.elrepo.noarch.rpm

List the available kernels

[root@master1 data]# yum --disablerepo \* --enablerepo elrepo-kernel list available

Loaded plugins: fastestmirror, langpacks

Loading mirror speeds from cached hostfile

* elrepo-kernel: mirrors.tuna.tsinghua.edu.cn

Available Packages

kernel-lt.x86_64 5.4.95-1.el7.elrepo elrepo-kernel

kernel-lt-devel.x86_64 5.4.95-1.el7.elrepo elrepo-kernel

kernel-lt-doc.noarch 5.4.95-1.el7.elrepo elrepo-kernel

kernel-lt-headers.x86_64 5.4.95-1.el7.elrepo elrepo-kernel

kernel-lt-tools.x86_64 5.4.95-1.el7.elrepo elrepo-kernel

kernel-lt-tools-libs.x86_64 5.4.95-1.el7.elrepo elrepo-kernel

kernel-lt-tools-libs-devel.x86_64 5.4.95-1.el7.elrepo elrepo-kernel

kernel-ml.x86_64 5.10.13-1.el7.elrepo elrepo-kernel

kernel-ml-devel.x86_64 5.10.13-1.el7.elrepo elrepo-kernel

kernel-ml-doc.noarch 5.10.13-1.el7.elrepo elrepo-kernel

kernel-ml-headers.x86_64 5.10.13-1.el7.elrepo elrepo-kernel

kernel-ml-tools.x86_64 5.10.13-1.el7.elrepo elrepo-kernel

kernel-ml-tools-libs.x86_64 5.10.13-1.el7.elrepo elrepo-kernel

kernel-ml-tools-libs-devel.x86_64 5.10.13-1.el7.elrepo elrepo-kernel

perf.x86_64 5.10.13-1.el7.elrepo elrepo-kernel

python-perf.x86_64 5.10.13-1.el7.elrepo elrepo-kernel

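Install the lt (long-term support) kernel

## Install

yum --enablerepo elrepo-kernel -y install kernel-lt

## List all installed kernels

grubby --info=ALL

## Make the 5.4 kernel the default boot entry (the newly installed kernel is normally entry 0)

grub2-set-default 0

grub2-reboot 0

or

## On BIOS (non-UEFI) machines the file is /boot/grub2/grub.cfg

grep menuentry /boot/efi/EFI/centos/grub.cfg

## Use the exact entry title printed by the grep above

grub2-set-default 'CentOS Linux (5.4.95-1.el7.elrepo.x86_64) 7 (Core)'

## Check the result

grub2-editenv list

## Reboot the server

systemctl reboot

Verify the kernel version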

[root@master1 ~]# uname -r

5.4.191-1.el7.elrepo.x86_64

[root@master2 ~]# uname -r

5.4.95-1.el7.elrepo.x86_64
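With more than a couple of nodes, reading each `uname -r` by eye gets tedious. As a rough sketch, the comparison can be scripted; `kernel_at_least` is a hypothetical helper that only inspects the major and minor numbers of a release string.

```shell
#!/usr/bin/env bash
# Succeed if a `uname -r` style string is at least MAJOR.MINOR.
# kernel_at_least is a hypothetical helper written for this example.
kernel_at_least() {
  local release="$1" want_major="$2" want_minor="$3"
  local major minor
  major="${release%%.*}"   # text before the first dot
  minor="${release#*.}"    # drop "MAJOR."
  minor="${minor%%.*}"     # keep text up to the next dot
  if [ "$major" -gt "$want_major" ]; then
    return 0
  fi
  [ "$major" -eq "$want_major" ] && [ "$minor" -ge "$want_minor" ]
}

if kernel_at_least "$(uname -r)" 5 4; then
  echo "kernel is 5.4 or newer"
else
  echo "kernel is older than 5.4"
fi
```

Since passwordless SSH is already set up, the same check could be run on each host with something like `ssh master2 uname -r` as the first argument.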

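Extra: how to download every package yum would install, dependencies included, for installation on machines without internet access:

## Install the yumdownloader tool

yum -y install yum-utils

## Download the kernel package and its dependencies

yumdownloader --resolve --destdir /data/kernel/ --enablerepo elrepo-kernel kernel-lt

--resolve also download the dependencies

--destdir directory to download the packages into

--enablerepo repository to use

5. Enable kernel IP forwarding

## Enable temporarily (in memory only, lost on reboot)

echo 1 > /proc/sys/net/ipv4/ip_forward

## Enable permanently by writing the kernel parameter

echo 'net.ipv4.ip_forward = 1' >> /etc/sysctl.conf

## Load the configuration

sysctl -p

## Verify that it took effect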

[root@master2 ~]# sysctl -a | grep 'ip_forward'

net.ipv4.ip_forward = 1

net.ipv4.ip_forward_update_priority = 1

net.ipv4.ip_forward_use_pmtu = 0
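Appending to /etc/sysctl.conf with `>>` adds a new line on every run. A minimal sketch of an idempotent alternative follows; `ensure_sysctl` is a hypothetical helper that replaces an existing line for the key or appends one if none exists.

```shell
#!/usr/bin/env bash
# Set "KEY = VALUE" in a sysctl-style file, replacing an existing line for KEY
# or appending one, so repeated runs do not pile up duplicates.
# ensure_sysctl is a hypothetical helper written for this example.
ensure_sysctl() {
  local file="$1" key="$2" value="$3"
  local escaped_key="${key//./\\.}"   # escape dots for use in a regex
  touch "$file"
  if grep -Eq "^[[:space:]]*${escaped_key}[[:space:]]*=" "$file"; then
    sed -i.bak -E "s|^[[:space:]]*${escaped_key}[[:space:]]*=.*|${key} = ${value}|" "$file"
  else
    printf '%s = %s\n' "$key" "$value" >> "$file"
  fi
}

# Intended use on a real node (as root):
#   ensure_sysctl /etc/sysctl.conf net.ipv4.ip_forward 1 && sysctl -p
```

The regex requires `=` right after the key, so a longer key such as net.ipv4.ip_forward_use_pmtu is left alone.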

6. Disable swap

Since Kubernetes 1.8, the kubelet requires swap to be turned off.

## Disable temporarily

swapoff -a

## Disable permanently

sed -i "s/.*swap.*//" /etc/fstab
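As a sketch of a more conservative way to do the permanent step, the function below comments out swap entries instead of deleting their text, and keeps a backup copy. `disable_swap_entries` is a hypothetical helper; it assumes the usual whitespace-separated fstab layout with `swap` as a field of its own.

```shell
#!/usr/bin/env bash
# Comment out active swap entries in an fstab-style file, keeping FILE.bak.
# disable_swap_entries is a hypothetical helper written for this example.
disable_swap_entries() {
  local fstab="$1"
  # Skip lines that are already comments; prefix "#" to lines that have
  # "swap" as a whitespace-delimited field.
  sed -i.bak -E '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab"
}

# Intended use on a real node (as root):
#   swapoff -a && disable_swap_entries /etc/fstab
```

Commented-out entries are easy to restore later, and running the function twice leaves the file unchanged the second time.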

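7. Open the FORWARD chain in the iptables filter table (to be confirmed)

Starting with version 1.13, Docker changed its default firewall rules and sets the policy of the FORWARD chain in the iptables filter table to DROP. This breaks communication between Pods on different nodes of a Kubernetes cluster. Run the following command on every Docker node: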

iptables -P FORWARD ACCEPT

Tool preparation

1. Install the Aliyun yum repositories (on both machines)

CentOS-Base.repo

wget -O /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

## Run this on machines that are not Aliyun ECS instances

sed -i -e '/mirrors.cloud.aliyuncs.com/d' -e '/mirrors.aliyuncs.com/d' /etc/yum.repos.d/CentOS-Base.repo

epel.repo

wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

docker-ce.repo

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

sed -i 's+download.docker.com+mirrors.aliyun.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo

kubernetes.repo

# Configure the Kubernetes yum repository (Aliyun mirror)

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

Once the yum repositories are in place, rebuild the metadata cache

[root@master1 data]# yum clean all

[root@master1 data]# yum makecache

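2. Update Python's pip, jinja2, etc. (open question)

## Install gcc (needed for compiling) and zlib* (needed for compression) first.

## libffi-devel is needed by Python; without it, Ansible fails while installing k8s with an error like: ModuleNotFoundError: No module named '_ctypes'

## python2-pip provides pip

yum -y install gcc zlib* libffi-devel python2-pip-8.1.2-14.el7.noarch

# Install the related packages (Ansible must be version 2.7 or later):

yum install -y python-pip python3 python-netaddr python3-pip ansible git

## Configure the pip index; the Aliyun mirror is used here

# On Linux, edit ~/.pip/pip.conf (create the directory and file if they do not exist; the leading "." makes the directory hidden)

# On Windows, create a pip directory under your user directory, e.g. C:\Users\xx\pip, and create pip.ini in it with the same content as below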

[global]

index-url=http://mirrors.aliyun.com/pypi/simple

[install]

trusted-host=mirrors.aliyun.com

## Update pip and jinja2. If jinja2 is not updated, installing k8s fails with: AnsibleError: template error while templating string: expected token '=', got 'end of statement block'.

# Jinja2 is a full-featured template engine for Python.

pip install --upgrade pip

pip install --upgrade jinja2

## Command "python setup.py egg_info" failed with error code 1 in /tmp/pip-build-5bCglP/MarkupSafe/

## You are using pip version 8.1.2, however version 22.0.4 is available.

## You should consider upgrading via the 'pip install --upgrade pip' command.

Python problem: You are using pip version 8.1.2, however version 22.0.4 is available.

I ran pip install --upgrade pip as suggested, yet the same notice kept appearing. The fix I found online is as follows:

# First run:

curl https://bootstrap.pypa.io/get-pip.py -o get-pip.py

# This downloads get-pip.py into the current directory. Then run:

sudo python get-pip.py

# pip should now be at the latest version, 22.0.4:

pip -V

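Python problem: This script does not work on Python 2.7 The minimum supported Python version is 3.7.

# Cause

# A conflict between the old Python version and the new get-pip.py. Other Python versions hit the same error and the fix is the same; adjust the version number in the URL as needed.

# Fix 1:

# Copy the URL suggested in the error message you received and run

curl https://bootstrap.pypa.io/pip/2.7/get-pip.py -o get-pip.py

# Then run

python get-pip.py

# Fix 2:

# Upgrade CentOS 7 from Python 2.7 to Python 3.6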

sudo yum groupinstall 'Development Tools'

sudo yum install centos-release-scl

sudo yum install rh-python36

python --version

Python 2.7.5

# Software Collections (SCL)

scl enable rh-python36 bash

python --version

Python 3.6.3

yum -y install python-pip

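Notes:

Python 2.x and Python 3.x can coexist on the same system. On this Linux machine, the default python is Python 2.x.

On Linux you can always run python3 --version to check whether Python 3 is installed and whether it is the version you want.

There are several ways to make python invoke python3. For example, you can create an alias. Run whereis python3 to find where python3 is installed (usually /usr/bin/python3), then add the following line to ~/.bashrc: alias python='/usr/bin/python3'. After that, source the file or start a new session. This assumes python3 lives at /usr/bin/python3.

Note that other commands that depend on the Python installation (such as pip or coverage) still point to the Python 2 versions, so you may need to do the same for them, or make sure you call pip3 rather than pip when installing extra packages.

Changing the system default python and pip on Linux to point to Python 3.6

# Back up the system's python2 symlink (optional):

mv /usr/bin/python /usr/bin/python.2.7.bak

# Create the python3 symlink:

# Installing Python 3 normally creates /usr/bin/python3 automatically; if not, replace "/usr/bin/python3" with the location of your python3

# Note: on CentOS 7, yum is written for Python 2 and stops working after this change unless /usr/bin/yum is edited to call python2 explicitly

sudo ln -s /usr/bin/python3 /usr/bin/python

# The same goes for pip

mv /usr/bin/pip /usr/bin/pip.2.7.bak

sudo ln -s /usr/bin/pip3 /usr/bin/pip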

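3. Install Ansible

## The Aliyun epel.repo provides ansible, so yum can install it directly

yum -y install ansible

## Then update jinja2. A domestic pip index is required; the Aliyun index configured in ~/.pip/pip.conf is used here

pip install jinja2 --upgrade

4. Configure the time service

master1 acts as the time server and master2 as the client.

[On the server (master1)]

vim /etc/chrony.conf

...

# The key settings are:

server 10.0.41.95 # the time server

allow 10.0.41.0/24 # serve time to clients in this subnet

...

[On the client (master2)]

vim /etc/chrony.conf

...

server 10.0.41.95 # the time server

...

## Then restart the service and check its status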

systemctl enable chronyd

systemctl restart chronyd

timedatectl

chronyc sources -v

Configuring kubespray

1. Install requirements.txt

## Configure the pip index first; skip this if you already did it above

mkdir -p ~/.pip/

cat > ~/.pip/pip.conf << EOF

[global]

index-url = http://mirrors.aliyun.com/pypi/simple

trusted-host = mirrors.aliyun.com

EOF

## Update pip

python3 -m pip install --upgrade pip

## Install requirements.txt (run from the kubespray directory)

pip install -r requirements.txt

2. Modify the inventory

## Copy inventory/sample to inventory/mycluster

cd /data/kubespray-master

cp -rfp inventory/sample inventory/mycluster

## Update the Ansible inventory file with the inventory builder

declare -a IPS=(10.0.41.95 10.0.41.96)

CONFIG_FILE=inventory/mycluster/hosts.yaml python3 contrib/inventory_builder/inventory.py ${IPS[@]}

## The generated hosts.yaml is shown below. The names node1 and node2 become the hosts' hostnames; change them to suit your environment

[root@master1 kubespray]# cat inventory/mycluster/hosts.yaml

all:
  hosts:
    node1:
      ansible_host: 10.0.41.95
      ip: 10.0.41.95
      access_ip: 10.0.41.95
    node2:
      ansible_host: 10.0.41.96
      ip: 10.0.41.96
      access_ip: 10.0.41.96
  children:
    kube-master:
      hosts:
        node1:
        node2:
    kube-node:
      hosts:
        node1:
        node2:
    etcd:
      hosts:
        node1:
    k8s-cluster:
      children:
        kube-master:
        kube-node:
    calico-rr:
      hosts: {}
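To make the structure of hosts.yaml easier to see, here is a rough sketch that prints the same layout for a list of IPs. It is not inventory.py: `generate_inventory` is a hypothetical function, and it hard-codes the assumptions of the two-node example (every host is both master and worker, only the first host runs etcd).

```shell
#!/usr/bin/env bash
# Print a hosts.yaml in the layout of the two-node example for the given IPs.
# Assumption: all hosts are master and worker, and only the first runs etcd.
# generate_inventory is a hypothetical stand-in, not kubespray's inventory.py.
generate_inventory() {
  local i=0 ip group name
  local names=()
  echo "all:"
  echo "  hosts:"
  for ip in "$@"; do
    i=$((i + 1))
    names+=("node${i}")
    printf '    node%d:\n      ansible_host: %s\n      ip: %s\n      access_ip: %s\n' \
      "$i" "$ip" "$ip" "$ip"
  done
  echo "  children:"
  for group in kube-master kube-node; do
    printf '    %s:\n      hosts:\n' "$group"
    for name in "${names[@]}"; do
      printf '        %s:\n' "$name"
    done
  done
  printf '    etcd:\n      hosts:\n        %s:\n' "${names[0]}"
  printf '    k8s-cluster:\n      children:\n        kube-master:\n        kube-node:\n'
  printf '    calico-rr:\n      hosts: {}\n'
}

declare -a IPS=(10.0.41.95 10.0.41.96)
generate_inventory "${IPS[@]}"
```

For a real cluster the inventory builder shipped with kubespray remains the tool to use; the sketch only shows how host entries and groups relate.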

3. Adjust the default configuration as needed

## Some add-ons, such as helm, registry, local_path_provisioner, and ingress, are disabled by default. Enable and configure them if you need them

vim inventory/mycluster/group_vars/k8s-cluster/addons.yml

...

# Helm deployment

helm_enabled: true

# Registry deployment

registry_enabled: true

# Rancher Local Path Provisioner

local_path_provisioner_enabled: false

...

## The network plugin (calico by default), the address pools, the kube-proxy mode, and other settings can also be changed

vim inventory/mycluster/group_vars/k8s-cluster/k8s-cluster.yml

...

kube_network_plugin: flannel

kube_network_plugin_multus: false

kube_service_addresses: 10.233.0.0/18

kube_pods_subnet: 10.233.64.0/18

kube_proxy_mode: ipvs

...

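## Docker settings such as the storage location and ports can also be changed in the configuration files; they are not listed one by one here

4. Switch the image sources to domestic mirrors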

cd /data/kubespray

find ./ -type f |xargs sed -i 's/k8s.gcr.io/registry.cn-hangzhou.aliyuncs.com/g'

find ./ -type f |xargs sed -i 's/gcr.io/registry.cn-hangzhou.aliyuncs.com/g'

find ./ -type f |xargs sed -i 's/google-containers/google_containers/g'

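The deployment scripts reference many overseas sites that are blocked in mainland China. To download smoothly from within China, replace them with domestic mirrors.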
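The find/sed commands above touch every file under the directory, .git included, and their unescaped dots match any character. A narrower variant might look like the sketch below; `rewrite_registry` and `rewrite_tree` are hypothetical helpers, and restricting the rewrite to YAML files is an assumption about where the registry names live.

```shell
#!/usr/bin/env bash
# Rewrite Google registry hosts to a mirror in one file.
# The mirror defaults to the address used in the commands above.
# Both functions are hypothetical helpers written for this example.
rewrite_registry() {
  local file="$1" mirror="${2:-registry.cn-hangzhou.aliyuncs.com}"
  # Dots are escaped so "gcr.io" only matches the literal host name.
  sed -i.bak -E \
    -e "s#(k8s\.)?gcr\.io#${mirror}#g" \
    -e 's#google-containers#google_containers#g' "$file" &&
    rm -f "${file}.bak"
}

# Apply rewrite_registry to every YAML file under ROOT, skipping .git.
rewrite_tree() {
  local root="$1" f
  find "$root" -path '*/.git' -prune -o -type f \
    \( -name '*.yml' -o -name '*.yaml' \) -print |
    while IFS= read -r f; do
      rewrite_registry "$f"
    done
}

# Intended use from the kubespray checkout:
#   rewrite_tree .
```

Running `git diff` afterwards shows exactly which lines were rewritten, which is harder to review when the .git directory itself has been modified.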

4.1 Edit inventory/mycluster/group_vars/all/all.yml

vim inventory/mycluster/group_vars/all/all.yml

# Add the following:

kubelet_load_modules: true

gcr_image_repo: "registry.aliyuncs.com"

kube_image_repo: "{{ gcr_image_repo }}/google_containers"

quay_image_repo: "quay.mirrors.ustc.edu.cn"

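4.2 Edit inventory/mycluster/group_vars/k8s-cluster/k8s-cluster.yml

vim inventory/mycluster/group_vars/k8s-cluster/k8s-cluster.yml

# Add the following

kube_image_repo: "registry.aliyuncs.com/google_containers"

# A namespace I created myself; it holds some of the images needed for this deployment and is used in main.yml below

my_k8s_repo: "registry.cn-hangzhou.aliyuncs.com/easy-k8s"

4.3 Edit roles/download/defaults/main.yml

With these settings, most downloads and image pulls work. A few images, however, may be slow to appear on domestic mirrors and are missing from Aliyun's google_containers, so their addresses have to be set individually.

# Edit roles/download/defaults/main.yml

# I downloaded the following two images separately and stored them in the Aliyun repository I created

# Modified content

nodelocaldns_image_repo: "{{ my_k8s_repo }}/k8s-dns-node-cache"

dnsautoscaler_image_repo: "{{ my_k8s_repo }}/cluster-proportional-autoscaler-{{ image_arch }}"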

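Besides the images above, some binaries are downloaded from addresses that are so slow they are practically unusable.

In the same file, roles/download/defaults/main.yml, the following addresses are all reachable, though possibly slow. After a few retries the downloads should complete.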

# Download URLs

kubelet_download_url: "https://storage.googleapis.com/kubernetes-release/release/{{ kube_version }}/bin/linux/{{ image_arch }}/kubelet"

kubectl_download_url: "https://storage.googleapis.com/kubernetes-release/release/{{ kube_version }}/bin/linux/{{ image_arch }}/kubectl"

kubeadm_download_url: "https://storage.googleapis.com/kubernetes-release/release/{{ kubeadm_version }}/bin/linux/{{ image_arch }}/kubeadm"

etcd_download_url: "https://github.com/coreos/etcd/releases/download/{{ etcd_version }}/etcd-{{ etcd_version }}-linux-{{ image_arch }}.tar.gz"

cni_download_url: "https://github.com/containernetworking/plugins/releases/download/{{ cni_version }}/cni-plugins-linux-{{ image_arch }}-{{ cni_version }}.tgz"

calicoctl_download_url: "https://github.com/projectcalico/calicoctl/releases/download/{{ calico_ctl_version }}/calicoctl-linux-{{ image_arch }}"

crictl_download_url: "https://github.com/kubernetes-sigs/cri-tools/releases/download/{{ crictl_version }}/crictl-{{ crictl_version }}-{{ ansible_system | lower }}-{{ image_arch }}.tar.gz"

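If this is still too slow, there are two options:

1. Download the files manually and put them into kubespray_cache.

Add download_force_cache: true to inventory/mycluster/group_vars/all/all.yml.

After downloading the files manually, place them in /tmp/kubespray_cache/ on every node; create the directory if it does not exist.

2. Set up your own nginx download server and change the addresses to point to the files it serves.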

### change gcr.io to mirror

cat > inventory/mycluster/group_vars/k8s-cluster/vars.yml << EOF

gcr_image_repo: "registry.aliyuncs.com/google_containers"

kube_image_repo: "registry.aliyuncs.com/google_containers"

etcd_download_url: "https://ghproxy.com/https://github.com/coreos/etcd/releases/download/{{ etcd_version }}/etcd-{{ etcd_version }}-linux-{{ image_arch }}.tar.gz"

cni_download_url: "https://ghproxy.com/https://github.com/containernetworking/plugins/releases/download/{{ cni_version }}/cni-plugins-linux-{{ image_arch }}-{{ cni_version }}.tgz"

calicoctl_download_url: "https://ghproxy.com/https://github.com/projectcalico/calicoctl/releases/download/{{ calico_ctl_version }}/calicoctl-linux-{{ image_arch }}"

calico_crds_download_url: "https://ghproxy.com/https://github.com/projectcalico/calico/archive/{{ calico_version }}.tar.gz"

crictl_download_url: "https://ghproxy.com/https://github.com/kubernetes-sigs/cri-tools/releases/download/{{ crictl_version }}/crictl-{{ crictl_version }}-{{ ansible_system | lower }}-{{ image_arch }}.tar.gz"

nodelocaldns_image_repo: "cncamp/k8s-dns-node-cache"

dnsautoscaler_image_repo: "cncamp/cluster-proportional-autoscaler-amd64"

EOF

5. Start the deployment

## Install the netaddr package before deploying, otherwise the run fails with:

"failed": true, "msg": "The ipaddr filter requires python-netaddr be installed on the ansible controller"

yum -y install python-netaddr

## If ansible-playbook still reports the error above after the yum install, install it with pip (the PyPI package is named netaddr)

pip install netaddr --upgrade

## Deploy

ansible-playbook -i inventory/mycluster/hosts.yaml --become --become-user=root cluster.yml

5.1 Common errors

Error 1: kubeadm-v1.20.2-amd64 and kubectl-v1.20.2-amd64 fail to download because of network problems. Download them manually and upload them to /tmp/releases/ on every machine.

https://storage.googleapis.com/kubernetes-release/release/v1.20.2/bin/linux/amd64/kubeadm

https://storage.googleapis.com/kubernetes-release/release/v1.20.2/bin/linux/amd64/kubectl
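The two URLs follow one pattern (version, architecture, binary name), so the manual download can be scripted. The sketch below only prints the curl commands rather than running them; both function names are hypothetical, and the target file names are taken from the names in the error message.

```shell
#!/usr/bin/env bash
# Build the download URL for a Kubernetes binary, following the pattern of
# the two URLs above. Both functions are hypothetical helpers.
kube_binary_url() {
  local version="$1" arch="$2" name="$3"
  printf 'https://storage.googleapis.com/kubernetes-release/release/%s/bin/linux/%s/%s\n' \
    "$version" "$arch" "$name"
}

# Print the curl commands that would fetch kubeadm and kubectl into DEST_DIR
# under the file names from the error (e.g. kubeadm-v1.20.2-amd64).
print_prefetch_commands() {
  local version="$1" arch="$2" dest_dir="$3" name
  for name in kubeadm kubectl; do
    printf 'curl -fL -o %s/%s-%s-%s %s\n' \
      "$dest_dir" "$name" "$version" "$arch" \
      "$(kube_binary_url "$version" "$arch" "$name")"
  done
}

print_prefetch_commands v1.20.2 amd64 /tmp/releases
```

The printed commands can be run on a machine with working access and the resulting files copied to /tmp/releases/ on every node.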

Error 2: two base images could not be pulled, so I pulled them manually

cluster-proportional-autoscaler-amd64:1.8.3

k8s-dns-node-cache:1.16.0

Error 3: AnsibleError: template error while templating string: expected token '=', got 'end of statement block'.

This error is caused by an outdated jinja2, as mentioned in step 2 of "Tool preparation". Running pip install --upgrade jinja2 fixes it; I upgraded to 2.11.

Note that you must use pip2 here, because Ansible runs on Python 2.7 by default on this system.

Error 4: error running kubectl (/usr/local/bin/kubectl apply --force --filename=/etc/kubernetes/k8s-cluster-critical-pc.yml) command (rc=1), out='', err='Unable to connect to the server: net/http: TLS handshake timeout

This happens when there is not enough memory. I had given the machine only 2 GB; adding more memory fixed it.

Error 5: fatal: [master]: UNREACHABLE! => {"changed": false, "msg": "Failed to connect to the host via ssh: Warning: Permanently added '10.0.41.95' (ECDSA) to the list of known hosts.\r\nPermission denied (publickey,gssapi-keyex,gssapi-with-mic,password).", "unreachable": true}

Passwordless SSH login needs to be configured.

Error 6: fatal: [master]: FAILED! => {"changed": false, "msg": "The Python 2 bindings for rpm are needed for this module. If you require Python 3 support use the dnf Ansible module instead… The Python 2 yum module is needed for this module. If you require Python 3 support use the dnf Ansible module instead."}

Fix:

# My guess is that yum is being run with a newer Python.

# Fix: locate the yum and yum-updatest scripts and edit them

[root@develop local]# which yum

/usr/bin/yum

[root@develop local]# vi /usr/bin/yum

[root@develop local]# vi /usr/bin/yum-updatest

# Change #!/usr/bin/python to #!/usr/bin/python2.7 (the Python 2 interpreter on CentOS 7)

Error 7: TASK [download_container | Download image if required] ****************************************************************

fatal: [master -> master]: FAILED! => {"attempts": 4, "changed": true, "cmd": ["/usr/bin/docker", "pull", "registry.cn-hangzhou.aliyuncs.com/cluster-proportional-autoscaler-amd64:1.8.1"], "delta": "0:00:00.399391", "end": "2022-05-01 22:18:09.778167", "msg": "non-zero return code", "rc": 1, "start": "2022-05-01 22:18:09.378776", "stderr": "Error response from daemon: pull access denied for registry.cn-hangzhou.aliyuncs.com/cluster-proportional-autoscaler-amd64, repository does not exist or may require 'docker login': denied: requested access to the resource is denied", "stderr_lines": ["Error response from daemon: pull access denied for registry.cn-hangzhou.aliyuncs.com/cluster-proportional-autoscaler-amd64, repository does not exist or may require 'docker login': denied: requested access to the resource is denied"], "stdout": "", "stdout_lines": []}

6. Deployment complete

[root@node1 kubespray]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

node1 Ready control-plane,master 24m v1.20.2

node2 Ready control-plane,master 23m v1.20.2

[root@node1 kubespray]# kubectl version

Client Version: version.Info{Major:"1", Minor:"20", GitVersion:"v1.20.2", GitCommit:"faecb196815e248d3ecfb03c680a4507229c2a56", GitTreeState:"clean", BuildDate:"2021-01-13T13:28:09Z", GoVersion:"go1.15.5", Compiler:"gc", Platform:"linux/amd64"}

[root@node1 ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-5bfb6bc97d-g7j4v 1/1 Running 0 18m

coredns-5bfb6bc97d-vqz2m 1/1 Running 0 17m

dns-autoscaler-74877b64cd-gjnpb 1/1 Running 0 17m

kube-apiserver-node1 1/1 Running 0 40m

kube-apiserver-node2 1/1 Running 0 39m

kube-controller-manager-node1 1/1 Running 0 40m

kube-controller-manager-node2 1/1 Running 0 39m

kube-flannel-82x65 1/1 Running 0 19m

kube-flannel-cps8x 1/1 Running 0 19m

kube-proxy-4bzmh 1/1 Running 0 19m

kube-proxy-h8xqx 1/1 Running 0 19m

kube-scheduler-node1 1/1 Running 0 40m

kube-scheduler-node2 1/1 Running 0 39m

nodelocaldns-nfmjz 1/1 Running 0 17m

nodelocaldns-ngn6z 1/1 Running 0 17m

registry-proxy-qk24l 1/1 Running 0 17m

registry-rwb9k 1/1 Running 0 17m

One more note: each machine needs at least 2 CPU cores, otherwise some of the base pods fail to start.
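A quick check before deploying can catch undersized machines early. The following is a minimal sketch: `meets_minimum` is a hypothetical helper, the 2-CPU threshold comes from the note above, and the 3072 MiB memory threshold is only an assumption based on 2 GB having been too little in error 4.

```shell
#!/usr/bin/env bash
# Report whether a machine meets a minimum CPU count and memory size.
# Arguments: CPU count, MemTotal in kB (as in /proc/meminfo), min CPUs, min MiB.
# meets_minimum is a hypothetical helper written for this example.
meets_minimum() {
  local cpus="$1" mem_kb="$2" min_cpus="$3" min_mib="$4"
  [ "$cpus" -ge "$min_cpus" ] && [ $((mem_kb / 1024)) -ge "$min_mib" ]
}

# Thresholds are assumptions: 2 CPUs per the note above, 3072 MiB as a guess.
if [ -r /proc/meminfo ]; then
  cpus="$(nproc)"
  mem_kb="$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)"
  if meets_minimum "$cpus" "$mem_kb" 2 3072; then
    echo "OK: ${cpus} CPUs, $((mem_kb / 1024)) MiB"
  else
    echo "Too small: ${cpus} CPUs, $((mem_kb / 1024)) MiB"
  fi
fi
```

Keeping the comparison in a function that takes plain numbers makes it easy to feed in values collected from other nodes over SSH.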

# 6. Reset

ansible-playbook -i inventory/mycluster/hosts.yaml --become --become-user=root reset.yml

# 7. Change the basic cluster configuration

vim inventory/mycluster/group_vars/k8s-cluster/k8s-cluster.yml

kube_proxy_mode: ipvs # options: ipvs, iptables

kube_network_plugin: flannel # options: cilium, calico, contiv, weave or flannel

dns_mode: coredns # options: dnsmasq_kubedns, kubedns, coredns, coredns_dual, manual or none

# 8. Enable the ingress-nginx add-on

vim inventory/mycluster/group_vars/k8s-cluster/addons.yml

ingress_nginx_enabled: true # set to true to enable the nginx-ingress-controller add-on

Extras

Adding node

1. Add the new worker node to your inventory in the appropriate group (or utilize a dynamic inventory).

2. Run the ansible-playbook command, using scale.yml in place of cluster.yml:

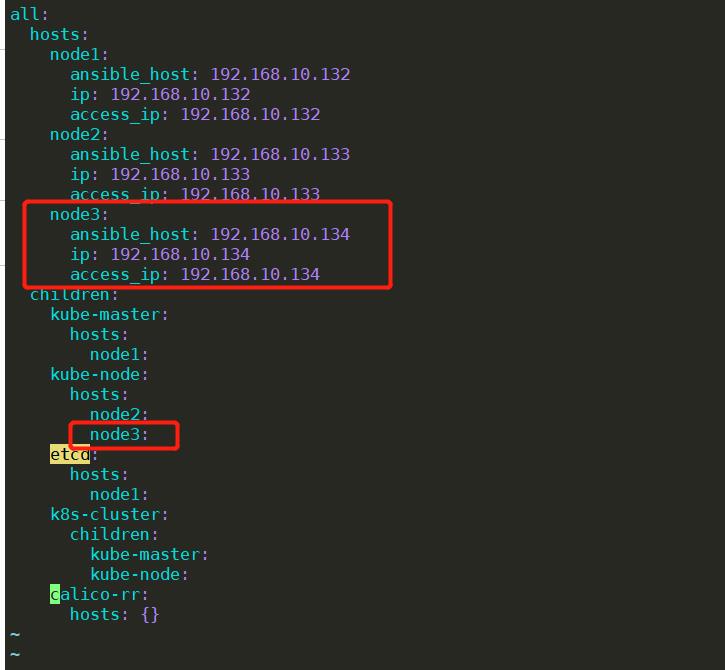

As shown in the figure, node3 is the newly added node

ansible-playbook -i inventory/mycluster/hosts.yaml scale.yml -b -v

Remove nodes

1. hosts.yaml does not need to be changed; run the command with --extra-vars to specify the node.

ansible-playbook -i inventory/mycluster/hosts.yaml remove-node.yml -b -v --extra-vars "node=node3"

Reference: https://github.com/kubernetes-sigs/kubespray/blob/master/docs/getting-started.md

Upgrading

ansible-playbook upgrade-cluster.yml -b -i inventory/sample/hosts.ini -e kube_version=v1.15.0

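Reference: https://github.com/kubernetes-sigs/kubespray/blob/master/docs/upgrades.md

Uninstalling

ansible-playbook -i inventory/mycluster/hosts.yaml reset.yml

# Run the following on every node

rm -rf /etc/kubernetes/

rm -rf /var/lib/kubelet

rm -rf /var/lib/etcd

rm -rf /usr/local/bin/kubectl

rm -rf /etc/systemd/system/calico-node.service

rm -rf /etc/systemd/system/kubelet.service

reboot

Postscript:

1. Images are downloaded from overseas registries by default, so the nodes need access to the external internet.

2. The installation takes a while, so be patient; when an error occurs, fix it and continue the installation.

3. Adding a worker node or upgrading the cluster version reverts cluster parameters that were changed by hand. Pay particular attention to this.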

Reference links

https://github.com/kubernetes-sigs/kubespray

https://github.com/kubernetes-sigs/kubespray/blob/master/docs/setting-up-your-first-cluster.md

https://github.com/kubernetes-sigs/kubespray/blob/master/docs/offline-environment.md

https://github.com/kubernetes-sigs/kubespray/tree/v2.14.2

https://github.com/kubernetes-sigs/kubespray/releases/tag/v2.14.2

https://kubernetes.io/docs/setup/production-environment/tools/kubespray/

Deploying a Kubernetes cluster offline with kubeplay

https://github.com/sealerio/sealer

https://rclone.org/docs/

https://www.modb.pro/db/58785
