Concurrent HTTP Downloads with Accept-Ranges

Posted by 刘贤松handler


Preface: this article was compiled by the editors of 小常识网 (cha138.com) and covers concurrent HTTP downloading with Accept-Ranges. We hope you find it a useful reference.

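Most of us have used download tools such as Xunlei (Thunder). Their defining features are concurrent downloading and resumable downloads (picking up where an interrupted download stopped).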

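This post covers three topics: how concurrent downloading works over HTTP, how to implement it with multiple goroutines in Go, and how to resume an interrupted download.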

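Concurrent downloading means splitting the content into blocks and fetching those blocks in parallel, which requires the server to support returning only part of a resource. Xunlei, eMule (电驴) and similar tools use their own protocols, such as thunder:// links; here we only study the principle, namely how HTTP itself supports this.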
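As a rough illustration of the splitting step, the Go sketch below uses a hypothetical helper `splitRanges` (not from any library) to turn a file size and a block size into the inclusive byte ranges that would go into `Range: bytes=start-end` headers. The number of blocks is ⌈length / block⌉ and only the last block can be shorter. The values 14247 and 4096 are simply the file size and default block size that appear elsewhere in this article.

```go
package main

import "fmt"

// splitRanges cuts length bytes into consecutive blocks of at most block bytes
// and returns the inclusive [start, end] pair of each block, i.e. the values
// for a "Range: bytes=start-end" header. Only the last block may be shorter.
func splitRanges(length, block int64) [][2]int64 {
	var out [][2]int64
	for start := int64(0); start < length; start += block {
		end := start + block - 1
		if end > length-1 {
			end = length - 1 // clamp the last block to the final byte
		}
		out = append(out, [2]int64{start, end})
	}
	return out
}

func main() {
	// A 14247-byte file in 4096-byte blocks: ceil(14247/4096) = 4 blocks.
	fmt.Println(splitRanges(14247, 4096))
	// [[0 4095] [4096 8191] [8192 12287] [12288 14246]]
}
```

Looping until `start < length` avoids floating-point rounding entirely; if you compute the block count up front instead, make sure the division is not done in integer arithmetic before rounding up.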

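| HTTP header | Example value | Meaning |
|---|---|---|
| Content-Length | 14247 | Size of the HTTP response body in bytes. When downloading, the body is the file, so this is also the file size. |
| Content-Disposition | inline; filename="bryce.jpg" | An extension taken from MIME (defined for HTTP in RFC 6266) that tells the user agent how to present the payload: `inline` displays it in the browser when possible, `attachment` makes the browser download it as a file. The `filename` parameter carries the file name. |
| Accept-Ranges | bytes | Response header: the server accepts range requests for this resource in units of bytes (`none` means it does not). |
| Range | bytes=0-511 | Request header: fetch only part of the resource, here bytes 0 through 511 inclusive, 512 bytes in total. A server that honours it replies `206 Partial Content` with a `Content-Range` header. |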

Official nginx documentation: Module ngx_http_slice_module

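nginx's ngx_http_slice_module lets a proxy fetch ranges from the upstream (origin) server piece by piece: it is a filter that splits a request into subrequests, each returning one fixed-size range of the response, which makes caching large responses more effective. The module has been available since nginx 1.9.8 and is not built by default; enable it by passing `--with-http_slice_module` when compiling nginx.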

```nginx
location / {
    slice             1m;
    proxy_cache       cache;
    proxy_cache_key   $uri$is_args$args$slice_range;
    proxy_set_header  Range $slice_range;
    proxy_cache_valid 200 206 1h;
    proxy_pass        http://localhost:8000;
}
```

A complete caching configuration using ngx_http_slice_module:

```nginx
#user  nobody;
worker_processes  1;

events {
    worker_connections  1024;
}

http {
    log_format  main  '$remote_addr - $remote_user [$time_local] "$request" '
                      '$status $body_bytes_sent "$http_referer" '
                      '"$http_user_agent" "$http_x_forwarded_for"';
    access_log  logs/access.log  main;

    sendfile           on;
    keepalive_timeout  65;

    #cache: 100 MB shared-memory zone for keys, at most 200 MB on disk,
    #entries unused for 12 days are evicted
    proxy_cache_path /data/cache
                     keys_zone=cache_my:100m
                     levels=1:1
                     inactive=12d
                     max_size=200m;

    server {
        listen       80;
        server_name  localhost;

        location / {
            #slice: fetch and cache the upstream response in 1 KB pieces
            slice              1k;
            proxy_cache        cache_my;
            proxy_cache_key    $uri$is_args$args$slice_range;
            add_header         X-Cache-Status $upstream_cache_status;
            proxy_set_header   Range $slice_range;
            proxy_cache_valid  200 206 3h;
            proxy_pass         http://192.168.1.10:80;

            #requires the third-party ngx_cache_purge module
            proxy_cache_purge  PURGE from 127.0.0.1;
        }
    }
}
```

3. Results

We check the access log on the origin server. index.html is 5196 bytes; request bytes 0-1024 of it:

```sh
curl www.*****.com/index.html -r 0-1024
```
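With `slice 1k;`, nginx does not pass the client's range to the origin as-is. It rounds the range out to whole slices and fetches each slice with its own `Range: $slice_range` subrequest. The sketch below is a simplified model of that alignment written for this article (a hypothetical `sliceRanges` helper, not nginx source code), assuming 1024-byte slices. For `-r 0-1024` it predicts that the origin log should show two range requests, one per slice.

```go
package main

import "fmt"

// sliceRanges models (in simplified form) how a slice-aligned proxy maps a
// client range [start, end] onto fixed-size slices: the first slice starts at
// the slice boundary at or below start, and slices continue until end is covered.
// Each returned string is what would be sent upstream as the Range header.
func sliceRanges(start, end, size int64) []string {
	var out []string
	for s := start / size * size; s <= end; s += size {
		out = append(out, fmt.Sprintf("bytes=%d-%d", s, s+size-1))
	}
	return out
}

func main() {
	// "slice 1k;" means 1024-byte slices; the client sent curl -r 0-1024.
	fmt.Println(sliceRanges(0, 1024, 1024))
	// [bytes=0-1023 bytes=1024-2047]
}
```

Each slice is cached under its own key because `$slice_range` is part of `proxy_cache_key`, so a later request for bytes 0-1023 can be served from the cache without contacting the origin.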

4. Go Example Code

```go
package main

import (
	"flag"
	"fmt"
	"io"
	"log"
	"math"
	"mime"
	"net/http"
	"net/http/httputil"
	"os"
	"path"
	"strings"
	"sync"
	"time"
)

const (
	DEFAULT_DOWNLOAD_BLOCK int64 = 4096
)

type GoGet struct {
	Url           string
	Cnt           int
	DownloadBlock int64
	CostomCnt     int
	Latch         int
	Header        http.Header
	MediaType     string
	MediaParams   map[string]string
	FilePath      string // directory plus file name
	GetClient     *http.Client
	ContentLength int64
	DownloadRange [][]int64 // inclusive [start, end] of each block
	File          *os.File
	TempFiles     []*os.File // one temp file per block: <FilePath>.<start>-<end>
	WG            sync.WaitGroup
}

func NewGoGet() *GoGet {
	get := new(GoGet)
	get.FilePath = "./"
	get.GetClient = new(http.Client)
	flag.Parse()
	get.Url = *urlFlag
	get.DownloadBlock = DEFAULT_DOWNLOAD_BLOCK
	return get
}

var urlFlag = flag.String("u", "http://7b1h1l.com1.z0.glb.clouddn.com/bryce.jpg", "Fetch file url")

// var cntFlag = flag.Int("c", 1, "Fetch concurrently counts")

func main() {
	get := NewGoGet()
	download_start := time.Now()

	// HEAD request: file size, file name and Range support, without the body.
	req, err := http.NewRequest("HEAD", get.Url, nil)
	if err != nil {
		log.Panicf("Build request for %s error %v.\n", get.Url, err)
	}
	resp, err := get.GetClient.Do(req)
	if err != nil { // resp is nil on error, so check before using it
		log.Panicf("Get %s error %v.\n", get.Url, err)
	}
	resp.Body.Close()
	get.Header = resp.Header
	get.MediaType, get.MediaParams, _ = mime.ParseMediaType(get.Header.Get("Content-Disposition"))
	get.ContentLength = resp.ContentLength
	if get.ContentLength <= 0 {
		log.Panicf("Unknown size for %s.\n", get.Url)
	}

	filename := get.MediaParams["filename"]
	if filename == "" { // no Content-Disposition: use the last segment of the URL path
		filename = path.Base(req.URL.Path)
	}
	if strings.HasSuffix(get.FilePath, "/") {
		get.FilePath += filename
	}
	get.File, err = os.Create(get.FilePath)
	if err != nil {
		log.Panicf("Create file %s error %v.\n", get.FilePath, err)
	}
	log.Printf("Get %s MediaType:%s, Filename:%s, Size %d.\n", get.Url, get.MediaType, filename, get.ContentLength)

	if get.Header.Get("Accept-Ranges") == "bytes" {
		log.Printf("Server %s support Range by %s.\n", get.Header.Get("Server"), get.Header.Get("Accept-Ranges"))
	} else {
		log.Printf("Server %s doesn't support Range.\n", get.Header.Get("Server"))
		get.DownloadBlock = get.ContentLength // fall back to a single block (the whole file)
	}

	// Number of blocks = ceil(size / block). Convert before dividing,
	// otherwise integer division truncates and Ceil has no effect.
	get.Cnt = int(math.Ceil(float64(get.ContentLength) / float64(get.DownloadBlock)))
	log.Printf("Start to download %s with %d thread.\n", filename, get.Cnt)

	var range_start int64 = 0
	for i := 0; i < get.Cnt; i++ {
		if i != get.Cnt-1 {
			get.DownloadRange = append(get.DownloadRange, []int64{range_start, range_start + get.DownloadBlock - 1})
		} else {
			// Last block: ends at the last byte of the file.
			get.DownloadRange = append(get.DownloadRange, []int64{range_start, get.ContentLength - 1})
		}
		range_start += get.DownloadBlock
	}

	// Check if the download has paused: reopen each block's temp file for
	// appending (creating it if missing) and skip the bytes already saved.
	for i := 0; i < len(get.DownloadRange); i++ {
		range_i := fmt.Sprintf("%d-%d", get.DownloadRange[i][0], get.DownloadRange[i][1])
		temp_file, err := os.OpenFile(get.FilePath+"."+range_i, os.O_CREATE|os.O_WRONLY|os.O_APPEND, 0644)
		if err != nil {
			log.Panicf("Open temp file %s error %v.\n", get.FilePath+"."+range_i, err)
		}
		if fi, err := temp_file.Stat(); err == nil {
			get.DownloadRange[i][0] += fi.Size()
		}
		get.TempFiles = append(get.TempFiles, temp_file)
	}

	go get.Watch()

	// One goroutine per block; wait for all of them to finish.
	get.Latch = get.Cnt
	for i := range get.DownloadRange {
		get.WG.Add(1)
		go get.Download(i)
	}
	get.WG.Wait()

	// Concatenate the temp files, in block order, into the target file.
	for i := 0; i < len(get.TempFiles); i++ {
		temp_file, err := os.Open(get.TempFiles[i].Name())
		if err != nil {
			log.Panicf("Open temp file %s error %v.\n", get.TempFiles[i].Name(), err)
		}
		cnt, err := io.Copy(get.File, temp_file)
		if cnt <= 0 || err != nil {
			log.Printf("Download #%d error %v.\n", i, err)
		}
		temp_file.Close()
	}
	get.File.Close()
	log.Printf("Download complete and store file %s with %v.\n", get.FilePath, time.Since(download_start))

	// Remove the temp files when main returns.
	defer func() {
		for i := 0; i < len(get.TempFiles); i++ {
			err := os.Remove(get.TempFiles[i].Name())
			if err != nil {
				log.Printf("Remove temp file %s error %v.\n", get.TempFiles[i].Name(), err)
			} else {
				log.Printf("Remove temp file %s.\n", get.TempFiles[i].Name())
			}
		}
	}()
}

// Download fetches block i with a Range request and appends it to its temp file.
func (get *GoGet) Download(i int) {
	defer get.WG.Done()
	defer get.TempFiles[i].Close()
	if get.DownloadRange[i][0] > get.DownloadRange[i][1] {
		return // block already completed by an earlier run
	}

	range_i := fmt.Sprintf("%d-%d", get.DownloadRange[i][0], get.DownloadRange[i][1])
	log.Printf("Download #%d bytes %s.\n", i, range_i)

	req, err := http.NewRequest("GET", get.Url, nil)
	if err != nil {
		log.Printf("Download #%d error %v.\n", i, err)
		return
	}
	req.Header.Set("Range", "bytes="+range_i)
	resp, err := get.GetClient.Do(req)
	if err != nil { // check before deferring Close: resp is nil on error
		log.Printf("Download #%d error %v.\n", i, err)
		return
	}
	defer resp.Body.Close()

	cnt, err := io.Copy(get.TempFiles[i], resp.Body)
	if cnt == get.DownloadRange[i][1]-get.DownloadRange[i][0]+1 {
		log.Printf("Download #%d complete.\n", i)
	} else {
		req_dump, _ := httputil.DumpRequest(req, false)
		resp_dump, _ := httputil.DumpResponse(resp, true)
		log.Panicf("Download error %d %v, expect %d-%d, but got %d.\nRequest: %s\nResponse: %s\n", resp.StatusCode, err, get.DownloadRange[i][0], get.DownloadRange[i][1], cnt, string(req_dump), string(resp_dump))
	}
}

// Watch is a placeholder progress bar; for redrawing it in place see
// http://stackoverflow.com/questions/15714126/how-to-update-command-line-output
func (get *GoGet) Watch() {
	fmt.Printf("[=================>]\n")
}
```

Unit test

```go
package tests

import (
	"testing"

	"github.com/mnhkahn/go_code/goget"
)

// Exercises the job scheduler in the author's goget package
// (NewGoGetSchedules, NextJob and FinishJob are not part of the listing above).
func TestProcess(t *testing.T) {
	schedule := goget.NewGoGetSchedules(2)
	schedule.SetDownloadBlock(1)

	job := schedule.NextJob()
	t.Log(job)
	schedule.FinishJob(job)

	job = schedule.NextJob()
	t.Log(job)
	schedule.FinishJob(job)
}
```

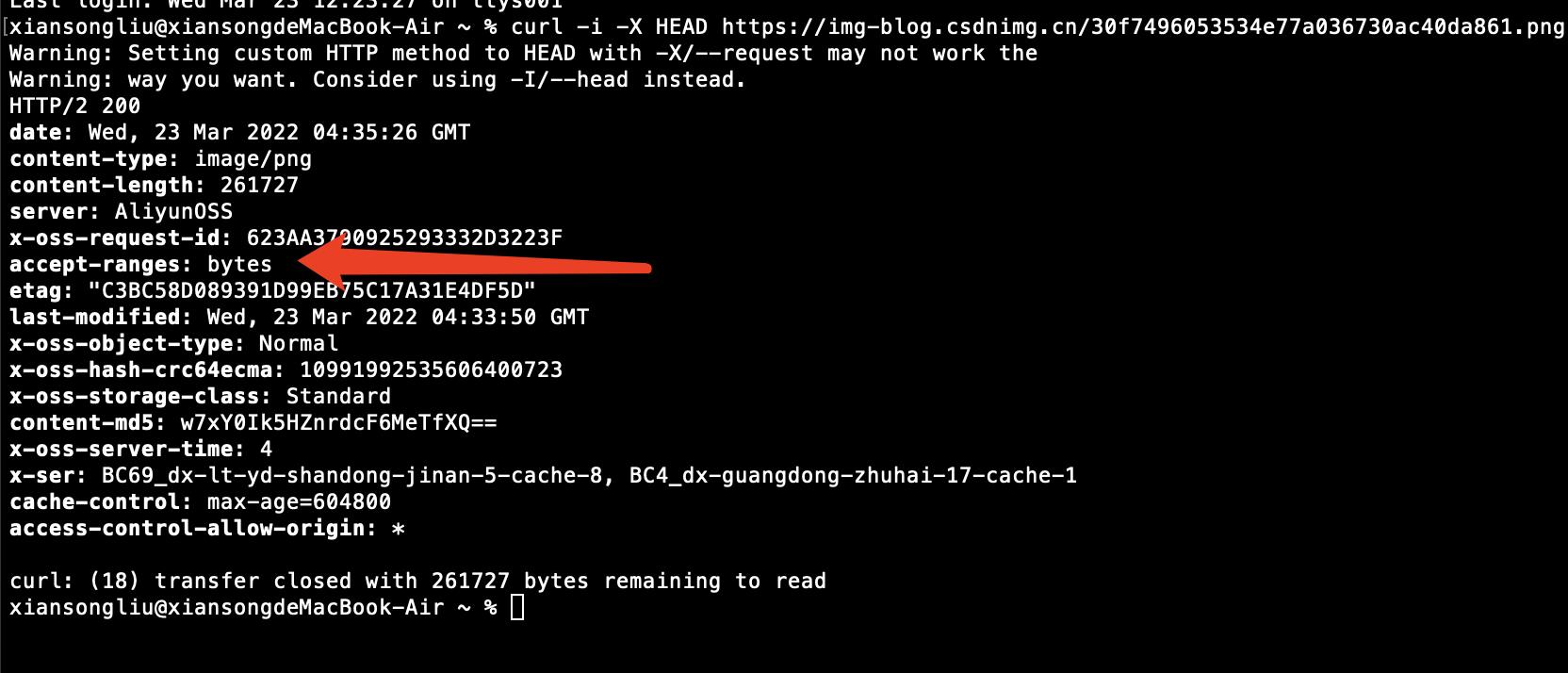

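Checking whether a server supports `Accept-Ranges: bytes`. Use `curl -I`, which sends a real HEAD request; `curl -X HEAD` only changes the method name, so curl still waits for a response body that never arrives:

```sh
curl -I https://img-blog.csdnimg.cn/30f7496053534e77a036730ac40da861.png
```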

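References:

ngx_http_slice_module, Nginx Chinese developer manual, Tencent Cloud+ Community

How a CDN uses nginx load balancing to fetch from the origin (CDN如何使用nginx负载均衡实现回源请求), BigChen_up, CSDN blog

Range caching with nginx (Nginx进行Range缓存), zzhongcy, CSDN blog

Multi-threaded concurrent downloading in Golang (Golang实现多线程并发下载), Go语言中文网 (Golang Chinese community)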
