USDP Usage Notes: Connecting the HDFS of Two Clusters for Cross-Nameservice Access

Posted by 虎鲸不是鱼


Trying cross-nameservice access

On zhiyong2:


```
Last login: Wed Mar 2 22:16:34 2022
/usr/bin/xauth: file /root/.Xauthority does not exist
[root@zhiyong2 ~]# cd /opt/usdp-srv/srv/udp/2.0.0.0/hdfs/bin
[root@zhiyong2 bin]# ll
total 804
-rwxr-xr-x. 1 hadoop hadoop 98 Mar 1 23:06 bootstrap-namenode.sh
-rwxr-xr-x. 1 hadoop hadoop 372928 Nov 15 2020 container-executor
-rwxr-xr-x. 1 hadoop hadoop 88 Mar 1 23:06 format-namenode.sh
-rwxr-xr-x. 1 hadoop hadoop 86 Mar 1 23:06 format-zkfc.sh
-rwxr-xr-x. 1 hadoop hadoop 8580 Nov 15 2020 hadoop
-rwxr-xr-x. 1 hadoop hadoop 11417 Mar 1 23:06 hdfs
-rwxr-xr-x. 1 hadoop hadoop 6237 Nov 15 2020 mapred
-rwxr-xr-x. 1 hadoop hadoop 387368 Nov 15 2020 test-container-executor
-rwxr-xr-x. 1 hadoop hadoop 11888 Nov 15 2020 yarn
[root@zhiyong2 bin]# ./hadoop fs -ls /
Found 6 items
drwxr-xr-x - hadoop supergroup 0 2022-03-02 22:27 /hbase
drwxr-xr-x - hadoop supergroup 0 2022-03-01 23:08 /tez
drwxrwxr-x - hadoop supergroup 0 2022-03-01 23:08 /tez-0.10.0
drwxrwxrwx - hadoop supergroup 0 2022-03-01 23:09 /tmp
drwxrwxrwx - hadoop supergroup 0 2022-03-01 23:09 /user
drwxrwxrwx - hadoop supergroup 0 2022-03-01 23:12 /zhiyong-1
```

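You can also address an individual NameNode directly by IP address or hostname mapping. Only the active NameNode serves reads: the standby on zhiyong2 rejects the request, the active one on zhiyong3 answers, and zhiyong4 refuses the connection because it runs no NameNode: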

```
[root@zhiyong2 bin]# ./hadoop fs -ls hdfs://zhiyong2:8020/
ls: Operation category READ is not supported in state standby. Visit https://s.apache.org/sbnn-error
[root@zhiyong2 bin]# ./hadoop fs -ls hdfs://zhiyong3:8020/
Found 6 items
drwxr-xr-x - hadoop supergroup 0 2022-03-02 22:27 hdfs://zhiyong3:8020/hbase
drwxr-xr-x - hadoop supergroup 0 2022-03-01 23:08 hdfs://zhiyong3:8020/tez
drwxrwxr-x - hadoop supergroup 0 2022-03-01 23:08 hdfs://zhiyong3:8020/tez-0.10.0
drwxrwxrwx - hadoop supergroup 0 2022-03-01 23:09 hdfs://zhiyong3:8020/tmp
drwxrwxrwx - hadoop supergroup 0 2022-03-01 23:09 hdfs://zhiyong3:8020/user
drwxrwxrwx - hadoop supergroup 0 2022-03-01 23:12 hdfs://zhiyong3:8020/zhiyong-1
[root@zhiyong2 bin]# ./hadoop fs -ls hdfs://zhiyong4:8020/
ls: Call From zhiyong2/192.168.88.101 to zhiyong4:8020 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused
```

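The same works across clusters, because a host:port URI bypasses nameservice resolution entirely (here zhiyong5 is the standby and zhiyong6 the active NameNode):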

```
[root@zhiyong2 bin]# ./hadoop fs -ls hdfs://zhiyong5:8020/
ls: Operation category READ is not supported in state standby. Visit https://s.apache.org/sbnn-error
[root@zhiyong2 bin]# ./hadoop fs -ls hdfs://zhiyong6:8020/
Found 6 items
drwxr-xr-x - hadoop supergroup 0 2022-03-02 22:39 hdfs://zhiyong6:8020/hbase
drwxr-xr-x - hadoop supergroup 0 2022-03-01 23:34 hdfs://zhiyong6:8020/tez
drwxrwxr-x - hadoop supergroup 0 2022-03-01 23:35 hdfs://zhiyong6:8020/tez-0.10.0
drwxrwxrwx - hadoop supergroup 0 2022-03-01 23:35 hdfs://zhiyong6:8020/tmp
drwxrwxrwx - hadoop supergroup 0 2022-03-01 23:35 hdfs://zhiyong6:8020/user
drwxrwxrwx - hadoop supergroup 0 2022-03-01 23:38 hdfs://zhiyong6:8020/zhiyong-2
[root@zhiyong2 bin]# ./hadoop fs -ls hdfs://zhiyong7:8020/
ls: Call From zhiyong2/192.168.88.101 to zhiyong7:8020 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused
```

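The nameservice itself can also be used directly, in which case the client locates the active NameNode on its own: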

```
[root@zhiyong2 bin]# ./hadoop fs -ls hdfs://zhiyong-1/
Found 6 items
drwxr-xr-x - hadoop supergroup 0 2022-03-02 22:27 hdfs://zhiyong-1/hbase
drwxr-xr-x - hadoop supergroup 0 2022-03-01 23:08 hdfs://zhiyong-1/tez
drwxrwxr-x - hadoop supergroup 0 2022-03-01 23:08 hdfs://zhiyong-1/tez-0.10.0
drwxrwxrwx - hadoop supergroup 0 2022-03-01 23:09 hdfs://zhiyong-1/tmp
drwxrwxrwx - hadoop supergroup 0 2022-03-01 23:09 hdfs://zhiyong-1/user
drwxrwxrwx - hadoop supergroup 0 2022-03-01 23:12 hdfs://zhiyong-1/zhiyong-1
[root@zhiyong2 bin]# ./hadoop fs -ls hdfs://zhiyong-2/
-ls: java.net.UnknownHostException: zhiyong-2
```


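However, the clusters are not connected to each other by default. The client configuration on zhiyong2 declares no nameservice called zhiyong-2, so the client treats zhiyong-2 as an ordinary hostname and fails with UnknownHostException. A newly deployed USDP cluster therefore cannot operate on another cluster through that cluster's nameservice.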
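Resolving hdfs://zhiyong-2/ requires the client configuration to describe that nameservice the same way it describes zhiyong-1. Below is a minimal sketch of the entries for the zhiyong-1 cluster's hdfs-site.xml, meant to be placed inside the configuration element. It assumes that zhiyong-2's NameNodes are zhiyong5 and zhiyong6 on port 8020 (which the host:port listings above suggest) and that they use the IDs nn1 and nn2; both should be checked against zhiyong-2's own hdfs-site.xml. If the file already sets dfs.nameservices, extend that value instead of adding a second entry. dfs.internal.nameservices keeps this cluster's DataNodes reporting only to zhiyong-1, since by default DataNodes register with every nameservice listed in dfs.nameservices.

```xml
<!-- Sketch for hdfs-site.xml on the zhiyong-1 cluster; zhiyong-2 values are assumed -->
<!-- Every nameservice a client here should be able to resolve -->
<property>
  <name>dfs.nameservices</name>
  <value>zhiyong-1,zhiyong-2</value>
</property>
<!-- Only zhiyong-1 belongs to this cluster, so local DataNodes report to it alone -->
<property>
  <name>dfs.internal.nameservices</name>
  <value>zhiyong-1</value>
</property>
<!-- Remote nameservice: the IDs nn1/nn2 are an assumption -->
<property>
  <name>dfs.ha.namenodes.zhiyong-2</name>
  <value>nn1,nn2</value>
</property>
<property>
  <name>dfs.namenode.rpc-address.zhiyong-2.nn1</name>
  <value>zhiyong5:8020</value>
</property>
<property>
  <name>dfs.namenode.rpc-address.zhiyong-2.nn2</name>
  <value>zhiyong6:8020</value>
</property>
<!-- Lets the client fail over between zhiyong5 and zhiyong6 -->
<property>
  <name>dfs.client.failover.proxy.provider.zhiyong-2</name>
  <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
</property>
```

The hadoop CLI loads its configuration on every invocation, so after the edit ./hadoop fs -ls hdfs://zhiyong-2/ on zhiyong2 should list zhiyong-2's root instead of raising UnknownHostException. Running daemons such as the DataNodes only pick the change up after a restart.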

Connecting the clusters

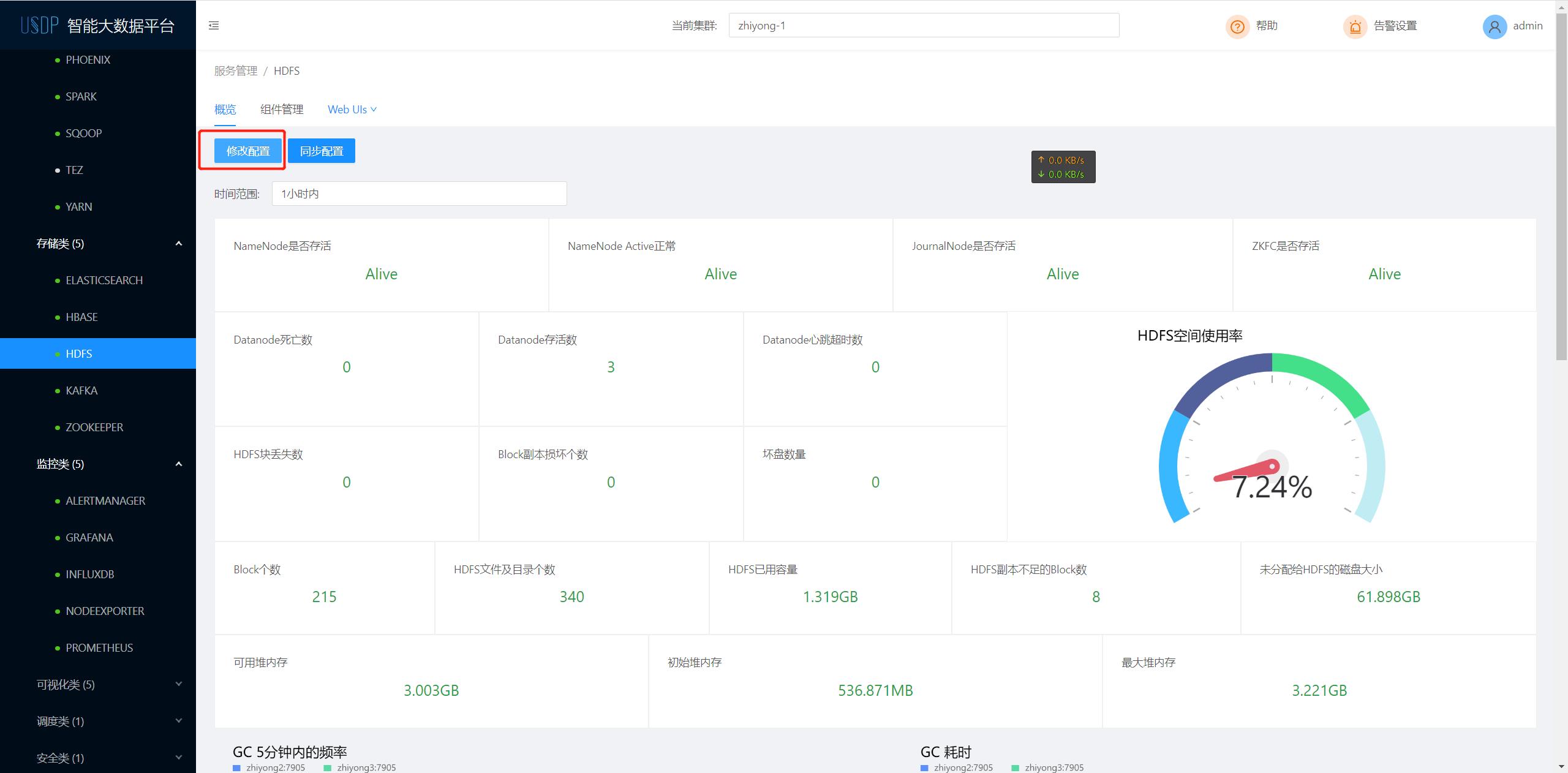

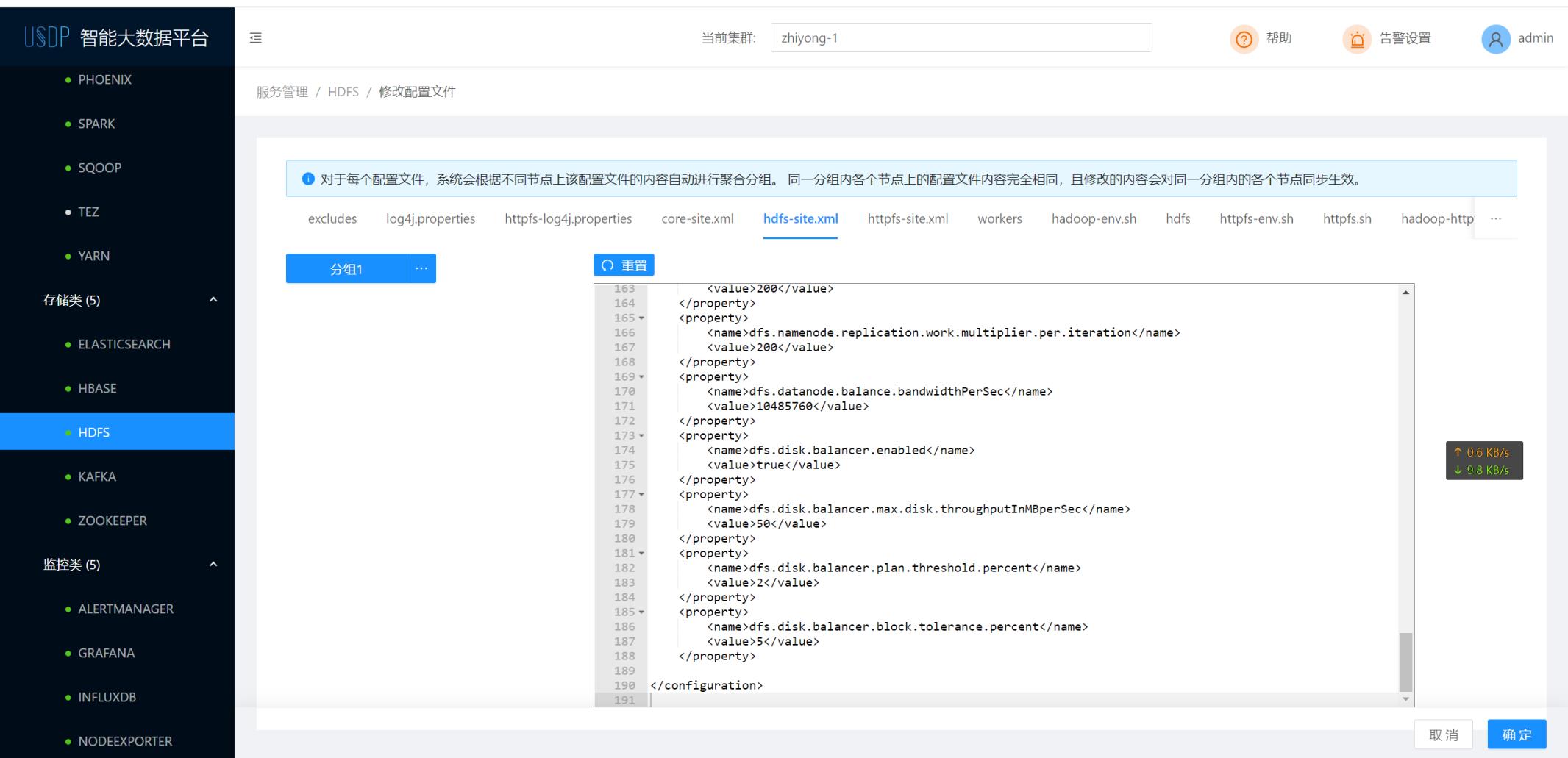

Locating the hdfs-site.xml configuration

As an aside, I quite like this monitoring UI...

The hdfs-site.xml file contains the following:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->

<!-- Put site-specific property overrides in this file. -->

<configuration>
  <property>
    <name>dfs.replication</name>
    <value>3</value>
  </property>
  <property>
    <name>dfs.name.dir</name>
    <value>/data/udp/2.0.0.0/hdfs/dfs/nn</value>
  </property>
  <property>
    <name>dfs.data.dir</name>
    <value>/data/udp/2.0.0.0/hdfs/dfs/data</value>
  </property>
  <property>
    <name>dfs.journalnode.edits.dir</name>
    <value>/data/udp/2.0.0.0/hdfs/jnData</value>
  </property>
  <property>
    <name>dfs.ha.namenodes.zhiyong-1</name>
    <value>nn1,nn2</value>
  </property>
  <property>
    <name>dfs.namenode.rpc-address.zhiyong-1.nn1</name>
    <value>zhiyong2:8020</value>
  </property>
  <property>
    <name>dfs.namenode.rpc-address.zhiyong-1.nn2</name>
    <value>zhiyong3:8020</value>
  </property>
  <property>
    <name>dfs.namenode.http-address.zhiyong-1.nn1</name>
    <value>zhiyong2:50070</value>
  </property>
  <property>
    <name>dfs.namenode.http-address.zhiyong-1.nn2</name>
    <value>zhiyong3:50070</value>
  </property>
  <property>
    <name>ha.zookeeper.quorum</name>
    <value>zhiyong2:2181,zhiyong3:2181,zhiyong4:2181</value>
  </property>
  <property>
    <name>dfs.namenode.shared.edits.dir</name>
    <value>qjournal://zhiyong2:8485;zhiyong3:8485;zhiyong4:8485/zhiyong-1</value>
  </property>
  <property>
    <name>dfs.client.failover.proxy.provider.zhiyong-1</name>
    <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
  </property>
  <property>
    <name>dfs.ha.fencing.methods</name>
    <value>sshfence(hadoop:22)</value>
  </property>
  <property>
    <name>dfs.ha.fencing.ssh.connect-timeout</name>
    <value>30000</value>
  </property>
  <property>
    <name>dfs.ha.fencing.ssh.private-key-files</name>
    <value>/home/hadoop/.ssh/id_rsa</value>
  </property>
  <property>
    <name>dfs.ha.automatic-failover.enabled</name>
    <value>true</value>
  </property>
  <property>
    <name>dfs.datanode.max.xcievers</name>
    <value>4096</value>
  </property>
  <property>
    <name>dfs.permissions.enable</name>
    <value>false</value>
  </property>
  <property>
    <name>dfs.webhdfs.enabled</name>
    <value>true</value>
  </property>
  <property>
    <name>dfs.namenode.heartbeat.recheck-interval</name>
    <value>45000</value>
  </property>
  <property>
    <name>fs.trash.interval</name>
    <value>7320</value>
  </property>
  <property>
    <name>dfs.datanode.max.transfer.threads</name>
    <value>8192</value>
  </property>
  <property>
    <name>dfs.image.compress</name>
    <value>true</value>
  </property>
  <property>
    <name>dfs.namenode.num.checkpoints.retained</name>
    <value>12</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir.perm</name>
    <value>750</value>
  </property>
  <!-- Enable short-circuit reads -->
  <!--
  <property>
    <name>dfs.client.read.shortcircuit</name>
    <value>true</value>
  </property>
  <property>
    <name>dfs.domain.socket.path</name>
    <value>/var/lib/hadoop-hdfs/dn_socket</value>
  </property>
  <property>
    <name>dfs.client.use.legacy.blockreader.local</name>
    <value>true</value>
  </property>
  <property>
    <name>dfs.block.local-path-access.user</name>
    <value>hadoop,root</value>
  </property>
  -->
  <property>
    <name>dfs.datanode.handler.count</name>
    <value>50</value>
  </property>
  <property>
    <name>dfs.namenode.handler.count</name>
    <value>50</value>
  </property>
  <property>
    <name>dfs.socket.timeout</name>
    <value>900000</value>
  </property>
  <property>
    <name>dfs.hosts.exclude</name>
    <value>/srv/udp/2.0.0.0/hdfs/etc/hadoop/excludes</value>
  </property>
  <property>
    <name>dfs.namenode.replication.max-streams</name>
    <value>32</value>
  </property>
  <property>
    <name>dfs.namenode.replication.max-streams-hard-limit</name>
    <value>200</value>
  </property>
  <property>
    <name>dfs.namenode.replication.work.multiplier.per.iteration</name>
    <value>200</value>
  </property>
  <property>
    <name>dfs.datanode.balance.bandwidthPerSec</name>
    <value>10485760</value>
  </property>
  <property>
    <name>dfs.disk.balancer.enabled</name>
    <value>true</value>
  </property>
  <!-- ... -->
</configuration>
```
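Access in the opposite direction needs the mirror-image entries in the zhiyong-2 cluster's hdfs-site.xml. Below is a sketch, written as a fragment for the configuration element. The zhiyong-1 NameNode IDs, RPC addresses and failover proxy provider are copied from the listing above; the assumption is that zhiyong-2's file currently declares only zhiyong-2 as its nameservice. dfs.internal.nameservices keeps zhiyong-2's DataNodes from also registering with zhiyong-1's NameNodes.

```xml
<!-- Sketch for hdfs-site.xml on the zhiyong-2 cluster (assumes it currently lists only zhiyong-2) -->
<property>
  <name>dfs.nameservices</name>
  <value>zhiyong-1,zhiyong-2</value>
</property>
<!-- DataNodes of this cluster report only to zhiyong-2 -->
<property>
  <name>dfs.internal.nameservices</name>
  <value>zhiyong-2</value>
</property>
<!-- Remote nameservice zhiyong-1: values taken from zhiyong-1's hdfs-site.xml -->
<property>
  <name>dfs.ha.namenodes.zhiyong-1</name>
  <value>nn1,nn2</value>
</property>
<property>
  <name>dfs.namenode.rpc-address.zhiyong-1.nn1</name>
  <value>zhiyong2:8020</value>
</property>
<property>
  <name>dfs.namenode.rpc-address.zhiyong-1.nn2</name>
  <value>zhiyong3:8020</value>
</property>
<property>
  <name>dfs.client.failover.proxy.provider.zhiyong-1</name>
  <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
</property>
```

With these entries in place, ./hadoop fs -ls hdfs://zhiyong-1/ run on one of zhiyong-2's hosts (zhiyong5, for example) should return the same listing as it does on zhiyong2.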
