Kafka cluster setup

Posted by 健康平安的活着


Foreword: This article was compiled by the editors of 小常识网 (cha138.com) and introduces how to set up a Kafka cluster. We hope you find it a useful reference.

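1 Cluster plan

| IP address | Host |
|---|---|
| 192.168.152.128 | master |
| 192.168.152.129 | slaver01 |
| 192.168.152.130 | slaver02 |

Directory for installation packages: /root/bigdata-software
Directory for installed programs: /root/export/servers

Run the following commands on all three machines to create the same directory layout:

```
mkdir -p /root/export/servers
mkdir -p /root/bigdata-software
```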

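2 Kafka cluster setup

Kafka depends on ZooKeeper. Start the ZooKeeper cluster first, then start a Kafka broker on each node. Before setting up Kafka, complete the steps in this article:

https://blog.csdn.net/u011066470/article/details/122796425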

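2.1 Prerequisites

1. A JDK is installed on every machine.

2. The ZooKeeper cluster is up. The ZooKeeper service must be started and running normally on all three machines. Check the status of each ZooKeeper server and make sure that one node is the leader and the other two are followers.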
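Prerequisite 2 can be checked from the shell. The following is a minimal sketch. It assumes that each server's status text contains a line of the form `Mode: leader` or `Mode: follower`, which is what `bin/zkServer.sh status` and the `srvr` four-letter command print in ZooKeeper 3.4. The helper names `zk_mode` and `check_zk_quorum` are hypothetical and are not part of ZooKeeper.

```sh
#!/bin/sh
# Hypothetical helpers for checking the ZooKeeper ensemble before starting Kafka.

# zk_mode: read ZooKeeper status text on stdin and print the value after
# "Mode:", e.g. "leader", "follower" or "standalone".
zk_mode() {
    sed -n 's/^Mode: *//p' | head -n 1
}

# check_zk_quorum MODE...: succeed only when exactly one node is the leader
# and every other node is a follower.
check_zk_quorum() {
    leaders=0
    followers=0
    for mode in "$@"; do
        case "$mode" in
            leader)   leaders=$((leaders + 1)) ;;
            follower) followers=$((followers + 1)) ;;
            *)        echo "unexpected ZooKeeper mode: '$mode'" >&2; return 1 ;;
        esac
    done
    [ "$leaders" -eq 1 ] && [ "$followers" -eq $(($# - 1)) ]
}
```

Here is an example that assumes `nc` is installed and that four-letter commands are enabled, which is the default in 3.4.8. First collect each mode with `m1=$(echo srvr | nc 192.168.152.128 2181 | zk_mode)`, and do the same for the other two hosts. Then run `check_zk_quorum "$m1" "$m2" "$m3"`.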

2.2 Kafka download URL

http://archive.apache.org/dist/kafka/0.10.0.0/kafka_2.11-0.10.0.0.tgz
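The archive name above encodes two versions: the Scala build (2.11) and the Kafka release (0.10.0.0). The sketch below assumes that other releases follow the same path pattern as this URL. `kafka_archive_url` is a hypothetical helper.

```sh
#!/bin/sh
# Hypothetical helper: build the Apache archive URL for a Kafka binary release,
# following the pattern of the URL above:
#   <base>/<kafka version>/kafka_<scala version>-<kafka version>.tgz
kafka_archive_url() {
    scala_version=$1
    kafka_version=$2
    echo "http://archive.apache.org/dist/kafka/${kafka_version}/kafka_${scala_version}-${kafka_version}.tgz"
}
```

To download straight into the package directory, run `wget -P /root/bigdata-software "$(kafka_archive_url 2.11 0.10.0.0)"`. This assumes `wget` is installed on master.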

2.3 Extract and install Kafka on the master node

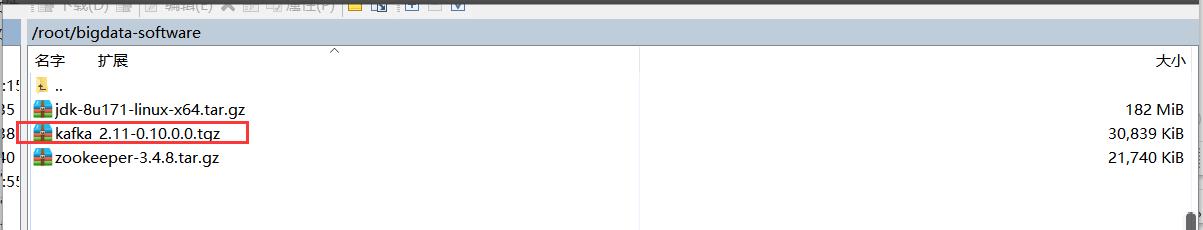

Upload the downloaded package to /root/bigdata-software on the master server. Then extract the Kafka archive with the following commands:

```
[root@localhost ~]# cd bigdata-software/
[root@localhost bigdata-software]# ls
jdk-8u171-linux-x64.tar.gz  kafka_2.11-0.10.0.0.tgz  zookeeper-3.4.8.tar.gz
[root@localhost bigdata-software]# pwd
/root/bigdata-software
[root@localhost bigdata-software]# tar -zxvf kafka_2.11-0.10.0.0.tgz -C /root/export/servers/
```

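2.3.1 Create the Kafka log directory on the master node

Create a logs directory inside the Kafka installation directory. Later it is set as log.dirs, the directory where Kafka stores its message data (the partition logs).

```
[root@localhost kafka_2.11-0.10.0.0]# mkdir -p logs
[root@localhost kafka_2.11-0.10.0.0]# ls
bin  config  libs  LICENSE  logs  NOTICE  site-docs
```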

2.3.2 Modify the Kafka configuration file on the master node

On the master node, go to the Kafka configuration directory and edit server.properties:

```
[root@localhost ~]# cd /root/export/servers/kafka_2.11-0.10.0.0/config
[root@localhost config]# vi server.properties
```

Set the file contents as follows. broker.id and host.name must be different on every node. log.dirs must point to the directory created in 2.3.1, and zookeeper.connect must list all three ZooKeeper servers.

```
broker.id=0
num.network.threads=3
num.io.threads=8
socket.send.buffer.bytes=102400
socket.receive.buffer.bytes=102400
socket.request.max.bytes=104857600
log.dirs=/root/export/servers/kafka_2.11-0.10.0.0/logs
num.partitions=2
num.recovery.threads.per.data.dir=1
offsets.topic.replication.factor=1
# transaction.state.log.* and group.initial.rebalance.delay.ms were added in Kafka 0.11; 0.10.0.0 does not use them
transaction.state.log.replication.factor=1
transaction.state.log.min.isr=1
log.flush.interval.messages=10000
log.flush.interval.ms=1000
log.retention.hours=168
log.segment.bytes=1073741824
log.retention.check.interval.ms=300000
zookeeper.connect=192.168.152.128:2181,192.168.152.129:2181,192.168.152.130:2181
zookeeper.connection.timeout.ms=6000
group.initial.rebalance.delay.ms=0
delete.topic.enable=true
host.name=192.168.152.128
```
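Checking the edited file before starting a broker can catch typos early. The following is a minimal POSIX sh sketch. It assumes simple `key=value` lines; Java properties also allow `:` separators and line continuations, which this sketch ignores. The helper names `prop_get` and `check_broker_config` are hypothetical.

```sh
#!/bin/sh
# Hypothetical helpers for sanity-checking a broker's server.properties.

# prop_get FILE KEY: print the value of KEY, skipping comment lines.
# As with java.util.Properties, the last assignment of a key wins.
prop_get() {
    awk -v key="$2" '
        /^[ \t]*#/ { next }
        {
            pos = index($0, "=")
            if (pos == 0) next
            k = substr($0, 1, pos - 1)
            gsub(/^[ \t]+|[ \t]+$/, "", k)
            if (k == key) {
                v = substr($0, pos + 1)
                gsub(/^[ \t]+|[ \t]+$/, "", v)
                val = v
                found = 1
            }
        }
        END { if (!found) exit 1; print val }
    ' "$1"
}

# check_broker_config FILE: fail unless broker.id is a non-negative integer
# and log.dirs / zookeeper.connect are present and non-empty.
check_broker_config() {
    id=$(prop_get "$1" broker.id) || { echo "broker.id is missing" >&2; return 1; }
    case "$id" in
        ''|*[!0-9]*) echo "broker.id must be a non-negative integer, got '$id'" >&2; return 1 ;;
    esac
    for key in log.dirs zookeeper.connect; do
        value=$(prop_get "$1" "$key") && [ -n "$value" ] ||
            { echo "$key is missing or empty" >&2; return 1; }
    done
    echo "$1: broker.id=$id, required keys present"
}
```

Example: `check_broker_config /root/export/servers/kafka_2.11-0.10.0.0/config/server.properties`.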

2.3.3 Copy the Kafka directory from master to slaver01

Run the following from /root/export/servers on master. scp asks for the root password of slaver01:

```
[root@localhost servers]# scp -r kafka_2.11-0.10.0.0/ root@192.168.152.129:/root/export/servers/
root@192.168.152.129's password:
LICENSE                 100%   28KB   2.8MB/s   00:00
NOTICE                  100%  336    91.8KB/s   00:00
connect-distributed.sh  100% 1052   435.7KB/s   00:00
connect-standalone.sh   100% 1051   422.7KB/s   00:00
kafka-acls.sh
```

2.3.4 Modify the configuration on slaver01

On slaver01, go to the Kafka configuration directory and edit server.properties. Compared with the master's file, only broker.id and host.name change:

```
[root@localhost ~]# cd /root/export/servers/kafka_2.11-0.10.0.0/config
[root@localhost config]# vi server.properties
```

```
broker.id=1
num.network.threads=3
num.io.threads=8
socket.send.buffer.bytes=102400
socket.receive.buffer.bytes=102400
socket.request.max.bytes=104857600
log.dirs=/root/export/servers/kafka_2.11-0.10.0.0/logs
num.partitions=2
num.recovery.threads.per.data.dir=1
offsets.topic.replication.factor=1
# transaction.state.log.* and group.initial.rebalance.delay.ms were added in Kafka 0.11; 0.10.0.0 does not use them
transaction.state.log.replication.factor=1
transaction.state.log.min.isr=1
log.flush.interval.messages=10000
log.flush.interval.ms=1000
log.retention.hours=168
log.segment.bytes=1073741824
log.retention.check.interval.ms=300000
zookeeper.connect=192.168.152.128:2181,192.168.152.129:2181,192.168.152.130:2181
zookeeper.connection.timeout.ms=6000
group.initial.rebalance.delay.ms=0
delete.topic.enable=true
host.name=192.168.152.129
```
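The copied file differs between nodes only in broker.id and host.name, so the per-node edit can be scripted instead of done in vi. The following is a minimal sketch. It assumes both keys already appear as uncommented `key=value` lines, as in the listings above. `set_broker_identity` is a hypothetical helper name.

```sh
#!/bin/sh
# Hypothetical helper: rewrite broker.id and host.name in a copied
# server.properties so the file matches the node it lives on.
# Assumes both keys are present as uncommented "key=value" lines.
set_broker_identity() {
    file=$1
    id=$2
    host=$3
    tmp="$file.tmp.$$"
    # Write to a temporary file and move it back, instead of relying on
    # the GNU-only "sed -i" syntax.
    sed -e "s/^broker\.id=.*/broker.id=${id}/" \
        -e "s/^host\.name=.*/host.name=${host}/" "$file" > "$tmp" &&
    mv "$tmp" "$file"
}
```

On slaver01 you would run `set_broker_identity /root/export/servers/kafka_2.11-0.10.0.0/config/server.properties 1 192.168.152.129`. On slaver02, use `2 192.168.152.130` instead.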

2.3.5 Copy the Kafka directory from master to slaver02

```
[root@localhost servers]# scp -r kafka_2.11-0.10.0.0/ root@192.168.152.130:/root/export/servers/
root@192.168.152.130's password:
```
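The two copies in 2.3.3 and 2.3.5 can be done in one loop. The following is a minimal sketch. It assumes the same directory layout on every node. `copy_kafka_to_slaves`, `run` and the `DRY_RUN` switch are hypothetical. scp still prompts for each host's password unless SSH keys have been set up.

```sh
#!/bin/sh
# Hypothetical helper: copy the Kafka directory from master to every slave.
# Set DRY_RUN=1 to print the commands instead of running them.
KAFKA_DIR=kafka_2.11-0.10.0.0
SERVERS_DIR=/root/export/servers

# run: execute its arguments, or just print them when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# copy_kafka_to_slaves HOST...: scp the install directory to each host,
# stopping at the first failure.
copy_kafka_to_slaves() {
    for host in "$@"; do
        run scp -r "$SERVERS_DIR/$KAFKA_DIR/" "root@$host:$SERVERS_DIR/" || return 1
    done
}
```

Example: `copy_kafka_to_slaves 192.168.152.129 192.168.152.130`, run on master.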

2.3.6 Modify the configuration file on slaver02

On slaver02, go to the Kafka configuration directory and edit server.properties. Again only broker.id and host.name change:

```
[root@localhost ~]# cd /root/export/servers/kafka_2.11-0.10.0.0/config
[root@localhost config]# vi server.properties
```

```
broker.id=2
num.network.threads=3
num.io.threads=8
socket.send.buffer.bytes=102400
socket.receive.buffer.bytes=102400
socket.request.max.bytes=104857600
log.dirs=/root/export/servers/kafka_2.11-0.10.0.0/logs
num.partitions=2
num.recovery.threads.per.data.dir=1
offsets.topic.replication.factor=1
# transaction.state.log.* and group.initial.rebalance.delay.ms were added in Kafka 0.11; 0.10.0.0 does not use them
transaction.state.log.replication.factor=1
transaction.state.log.min.isr=1
log.flush.interval.messages=10000
log.flush.interval.ms=1000
log.retention.hours=168
log.segment.bytes=1073741824
log.retention.check.interval.ms=300000
zookeeper.connect=192.168.152.128:2181,192.168.152.129:2181,192.168.152.130:2181
zookeeper.connection.timeout.ms=6000
group.initial.rebalance.delay.ms=0
delete.topic.enable=true
host.name=192.168.152.130
```

2.4 Starting Kafka

2.4.1 Prerequisite

Note: ZooKeeper must be running before Kafka is started.
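This rule can be enforced with a small guard before each start. The following is a minimal sketch. It assumes that a running ZooKeeper server shows up in `jps` under its main class `QuorumPeerMain`, as in the listings in this article. The names `jps_has`, `require_zookeeper` and the `JPS` override variable are hypothetical. The guard only checks the local node, not the ensemble's leader/follower state.

```sh
#!/bin/sh
# Hypothetical guard: refuse to start Kafka unless ZooKeeper (main class
# QuorumPeerMain) is listed by jps on this node.
# JPS may be overridden, e.g. with the full path to the JDK's jps.

# jps_has NAME: succeed if a JVM with main class NAME is listed by jps.
jps_has() {
    ${JPS:-jps} | awk -v name="$1" '$2 == name { found = 1 } END { exit !found }'
}

require_zookeeper() {
    if ! jps_has QuorumPeerMain; then
        echo "ZooKeeper (QuorumPeerMain) is not running on this node; start it first" >&2
        return 1
    fi
}
```

Usage: `require_zookeeper && nohup bin/kafka-server-start.sh config/server.properties 2>&1 &`, run from the Kafka installation directory.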

2.4.2 Start Kafka in the background on the master node

On the master node, run the following command from the Kafka installation directory to start the Kafka process in the background. Console output is appended to nohup.out in the current directory. Then use jps to check that a Kafka process exists:

```
[root@localhost kafka_2.11-0.10.0.0]# jps
8486 Jps
4216 QuorumPeerMain
[root@localhost kafka_2.11-0.10.0.0]# nohup bin/kafka-server-start.sh config/server.properties 2>&1 &
[1] 8502
nohup: ignoring input and appending output to ‘nohup.out’
[root@localhost kafka_2.11-0.10.0.0]# jps
8752 Jps
8502 Kafka
4216 QuorumPeerMain
```
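Start-up takes a few seconds, so a script may want to poll jps rather than check it once. The following is a minimal sketch built around the same nohup command. The function names, the `KAFKA_HOME` default and the `JPS` override are hypothetical. A `Kafka` entry in jps only means the JVM is running; the nohup.out check in 2.4.8 confirms that the broker finished starting. The start script in this release also accepts a `-daemon` flag, which writes console output to logs/kafkaServer.out instead.

```sh
#!/bin/sh
# Hypothetical helpers: start the broker in the background as above, then
# poll jps until a "Kafka" process appears.
# KAFKA_HOME defaults to the install directory used in this guide.
KAFKA_HOME=${KAFKA_HOME:-/root/export/servers/kafka_2.11-0.10.0.0}

# kafka_running: succeed if jps lists a JVM whose main class is "Kafka".
kafka_running() {
    ${JPS:-jps} | awk '$2 == "Kafka" { found = 1 } END { exit !found }'
}

# wait_for_kafka TRIES: poll once per second, give up after TRIES polls.
wait_for_kafka() {
    tries=$1
    while [ "$tries" -gt 0 ]; do
        if kafka_running; then
            return 0
        fi
        tries=$((tries - 1))
        sleep 1
    done
    echo "Kafka is not listed by jps; check $KAFKA_HOME/nohup.out" >&2
    return 1
}

# start_kafka: same nohup command as above, run in a subshell so the
# caller's working directory is unchanged.
start_kafka() {
    (cd "$KAFKA_HOME" || exit 1
     nohup bin/kafka-server-start.sh config/server.properties >> nohup.out 2>&1 &) || return 1
    wait_for_kafka 30
}
```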

2.4.3 Stop Kafka on the master node

```
bin/kafka-server-stop.sh
```

2.4.4 Start Kafka in the background on slaver01

On slaver01, run the following command to start the Kafka process in the background. Then use jps to check that a Kafka process exists:

```
[root@localhost kafka_2.11-0.10.0.0]# nohup bin/kafka-server-start.sh config/server.properties 2>&1 &
[1] 2904
nohup: ignoring input and appending output to ‘nohup.out’
[root@localhost kafka_2.11-0.10.0.0]# jps
2904 Kafka
2763 QuorumPeerMain
3118 Jps
[root@localhost kafka_2.11-0.10.0.0]# ls
bin  config  libs  LICENSE  logs  nohup.out  NOTICE  site-docs
```

2.4.5 Stop Kafka on slaver01

```
bin/kafka-server-stop.sh
```

2.4.6 Start Kafka in the background on slaver02

On slaver02, run the following command to start the Kafka process in the background. Then use jps to check that a Kafka process exists:

```
[root@localhost kafka_2.11-0.10.0.0]# nohup bin/kafka-server-start.sh config/server.properties 2>&1 &
[1] 2904
nohup: ignoring input and appending output to ‘nohup.out’
[root@localhost kafka_2.11-0.10.0.0]# jps
2904 Kafka
2763 QuorumPeerMain
3118 Jps
[root@localhost kafka_2.11-0.10.0.0]# ls
bin  config  libs  LICENSE  logs  nohup.out  NOTICE  site-docs
```

2.4.7 Stop Kafka on slaver02

```
bin/kafka-server-stop.sh
```
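Starting and stopping the three brokers by hand can be replaced by one loop run from master. The following is a minimal sketch. It assumes SSH access as root to every node and the same install path everywhere. `kafka_all`, `run` and `DRY_RUN` are hypothetical names. In keeping with 2.4.1, start ZooKeeper before the brokers, and stop the brokers before ZooKeeper.

```sh
#!/bin/sh
# Hypothetical helper: start or stop the broker on every node over ssh.
# DRY_RUN=1 prints the ssh commands instead of running them.
KAFKA_HOME=/root/export/servers/kafka_2.11-0.10.0.0

run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

# kafka_all start|stop HOST...
kafka_all() {
    action=$1
    shift
    case "$action" in
        # Redirect stdin and output so ssh does not wait on the background broker.
        start) remote="cd $KAFKA_HOME && nohup bin/kafka-server-start.sh config/server.properties >> nohup.out 2>&1 < /dev/null &" ;;
        stop)  remote="cd $KAFKA_HOME && bin/kafka-server-stop.sh" ;;
        *)     echo "usage: kafka_all start|stop HOST..." >&2; return 1 ;;
    esac
    for host in "$@"; do
        run ssh "root@$host" "$remote" || return 1
    done
}
```

Example: `kafka_all stop 192.168.152.128 192.168.152.129 192.168.152.130`.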

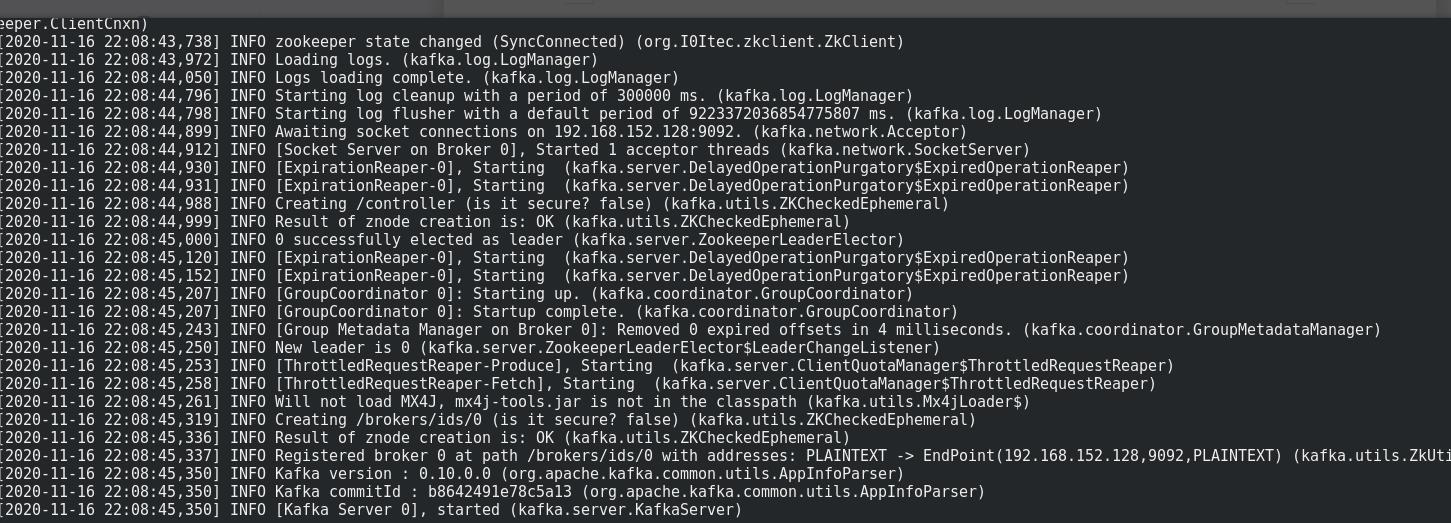

2.4.8 Check the nohup.out log on each node

master node:

```
[root@localhost kafka_2.11-0.10.0.0]# tail -f nohup.out
```

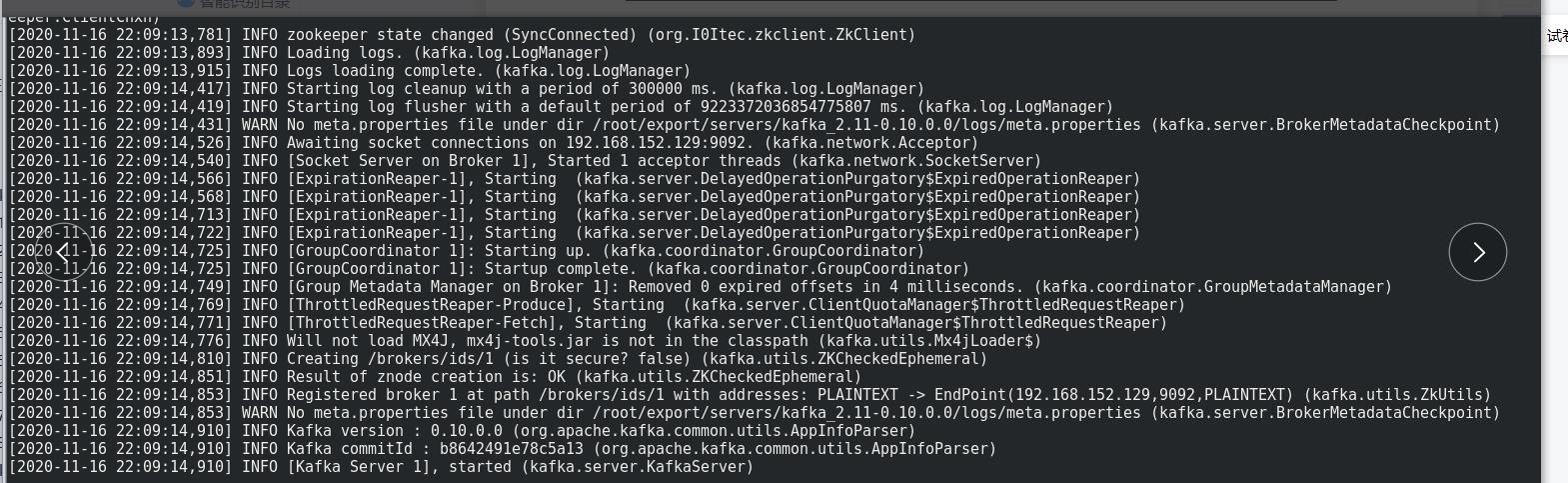

slaver01 node:

```
[root@localhost kafka_2.11-0.10.0.0]# tail -f nohup.out
```

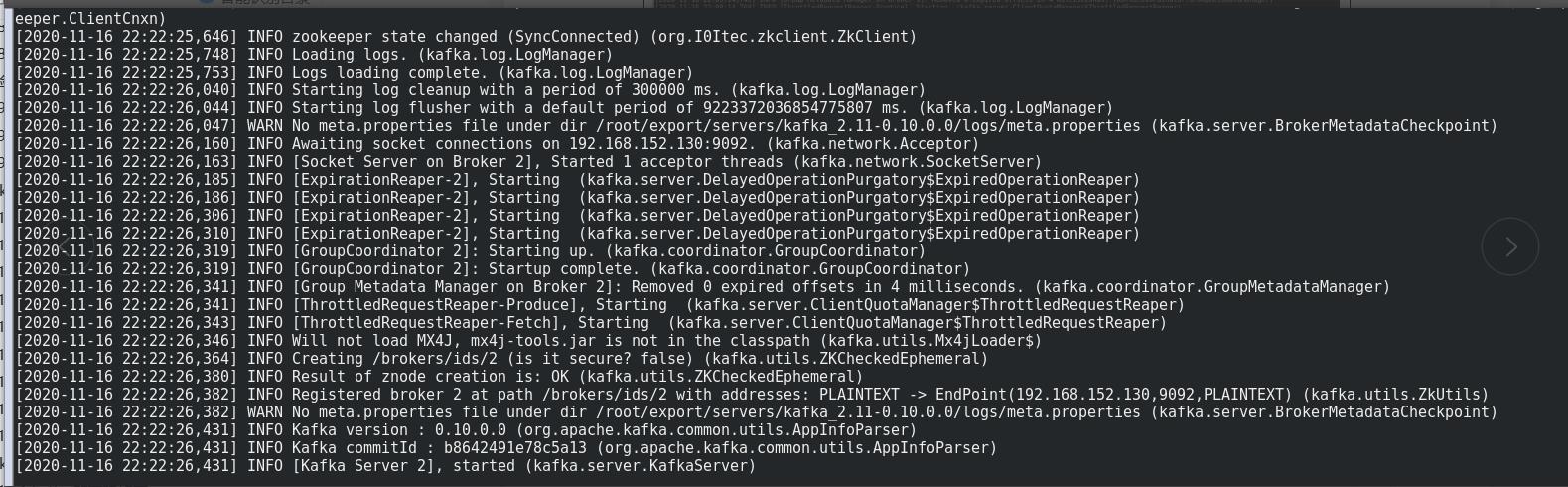

slaver02 node:

```
[root@localhost kafka_2.11-0.10.0.0]# tail -f nohup.out
```
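Reading three logs by eye can also be done with grep. The following is a minimal sketch. It assumes the default config/log4j.properties layout, where each line ends with the logger name in parentheses and a finished start-up is logged as `... started (kafka.server.KafkaServer)`. `check_kafka_log` is a hypothetical helper.

```sh
#!/bin/sh
# Hypothetical helper: report whether a broker's console log reached the
# "started (kafka.server.KafkaServer)" line and contains no ERROR lines.
check_kafka_log() {
    log=$1
    if grep -q 'ERROR' "$log"; then
        echo "errors found in $log:" >&2
        grep 'ERROR' "$log" >&2
        return 1
    fi
    if ! grep -q 'started (kafka.server.KafkaServer)' "$log"; then
        echo "no start-up line in $log yet" >&2
        return 1
    fi
    echo "$log: broker started, no ERROR lines"
}
```

Run `check_kafka_log nohup.out` in the Kafka installation directory on each node.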

As the logs show, all three nodes started successfully with no errors. The Kafka cluster setup is complete.
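Clean logs on each node do not yet show that the brokers joined the same cluster. Each live broker registers an ephemeral node under `/brokers/ids` in ZooKeeper, so listing that path is a cluster-wide check. The following is a minimal sketch. It assumes that `bin/zookeeper-shell.sh HOST:PORT ls /brokers/ids` runs the command and prints the children as a `[0, 1, 2]` style line. `parse_broker_ids`, `registered_brokers` and the `ZK`/`KAFKA_HOME` defaults are hypothetical.

```sh
#!/bin/sh
# Hypothetical helpers: list the broker ids registered in ZooKeeper.
ZK=${ZK:-192.168.152.128:2181}
KAFKA_HOME=${KAFKA_HOME:-/root/export/servers/kafka_2.11-0.10.0.0}

# parse_broker_ids: read zookeeper-shell output on stdin and print the ids
# from the last "[...]" line, one per line.
parse_broker_ids() {
    grep '^\[.*]$' | tail -n 1 |
        sed -e 's/^\[//' -e 's/]$//' -e 's/ //g' | tr ',' '\n' | sed '/^$/d'
}

registered_brokers() {
    "$KAFKA_HOME/bin/zookeeper-shell.sh" "$ZK" ls /brokers/ids 2>/dev/null | parse_broker_ids
}
```

With all three brokers up, `registered_brokers` is expected to print 0, 1 and 2. For an end-to-end check, create a replicated topic with `bin/kafka-topics.sh --create --zookeeper 192.168.152.128:2181 --replication-factor 3 --partitions 2 --topic test`, then inspect it with `bin/kafka-topics.sh --describe --zookeeper 192.168.152.128:2181 --topic test`.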
