Enabling X-Pack security in Elasticsearch 7.x, and connecting Kibana, Logstash and Filebeat to the secured ES cluster

Posted by 不知名运维


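Preface: this article was compiled by the editors of 小常识网 (cha138.com). It covers enabling X-Pack security in Elasticsearch 7.x and connecting Kibana, Logstash and Filebeat to the secured ES cluster. We hope it is useful to you.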


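I. Enabling X-Pack security in Elasticsearch 7.x

1. Generate the certificate files

This only needs to be done on one node of the ES cluster; any node will do.

1.1 Generate the CA certificate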

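```shell
[root@elk01 ~]# /hqtbj/hqtwww/elasticsearch_workspace/bin/elasticsearch-certutil ca
...
# Just press Enter to accept the default file name
Please enter the desired output file [elastic-stack-ca.p12]:
# Press Enter again to leave the CA password empty; the steps below assume it has none
Enter password for elastic-stack-ca.p12 :
```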

When this finishes, the new CA certificate elastic-stack-ca.p12 appears in the Elasticsearch home directory.

1.2 Generate the p12 keystore

Use the CA certificate "elastic-stack-ca.p12" generated above to generate the p12 keystore:

```shell
[root@elk01 ~]# /hqtbj/hqtwww/elasticsearch_workspace/bin/elasticsearch-certutil cert --ca /hqtbj/hqtwww/elasticsearch_workspace/elastic-stack-ca.p12
...
# Just press Enter at each of the next three prompts
Enter password for CA (/hqtbj/hqtwww/elasticsearch_workspace/elastic-stack-ca.p12) :
Please enter the desired output file [elastic-certificates.p12]:
# Leave this password empty; otherwise ES cannot open the file and will fail to start
# (unless the password is also stored in the ES keystore)
Enter password for elastic-certificates.p12 :
Certificates written to /hqtbj/hqtwww/elasticsearch_workspace/elastic-certificates.p12
...
```

When this finishes, the new p12 keystore elastic-certificates.p12 appears in the Elasticsearch home directory.
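If you prefer to skip the interactive prompts (for example in a provisioning script), steps 1.1 and 1.2 can be driven by command-line options instead. This is a sketch under assumptions, not the author's procedure: it relies on the `--silent`, `--out`, `--pass` and `--ca-pass` options of `elasticsearch-certutil` (confirm with `elasticsearch-certutil --help` on your version), passes empty passwords to match the choices above, and uses hypothetical `ES_HOME` and `DRY_RUN` variables; `DRY_RUN=1` only prints the commands.

```shell
#!/usr/bin/env bash
# Non-interactive sketch of steps 1.1 and 1.2 (elasticsearch-certutil).
# ES_HOME (hypothetical) defaults to this article's install path.
# DRY_RUN=1 (hypothetical) prints the commands instead of executing them.
set -euo pipefail

ES_HOME="${ES_HOME:-/hqtbj/hqtwww/elasticsearch_workspace}"

run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '%q ' "$@"   # shell-quoted, so an empty password shows up as ''
    printf '\n'
  else
    "$@"
  fi
}

gen_certs() {
  # 1.1: CA file with an empty password
  run "$ES_HOME/bin/elasticsearch-certutil" ca --silent \
    --out "$ES_HOME/elastic-stack-ca.p12" --pass ""
  # 1.2: node keystore signed by that CA, also with an empty password
  run "$ES_HOME/bin/elasticsearch-certutil" cert --silent \
    --ca "$ES_HOME/elastic-stack-ca.p12" --ca-pass "" \
    --out "$ES_HOME/elastic-certificates.p12" --pass ""
}

# Run a function by name, e.g.: DRY_RUN=1 bash gen-certs.sh gen_certs
if (( $# > 0 )); then "$@"; fi
```

Previewing with `DRY_RUN=1` first lets you check the paths before anything is written.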

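1.3 Copy the p12 keystore to the other ES nodes

First create a certs directory under config/ and move the p12 file into it, so it is easy to reference from the config file later: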

```shell
[root@elk01 ~]# cd /hqtbj/hqtwww/elasticsearch_workspace/config/
[root@elk01 config]# mkdir certs/
[root@elk01 config]# mv /hqtbj/hqtwww/elasticsearch_workspace/elastic-certificates.p12 certs/
[root@elk01 config]# chmod -R +755 certs
[root@elk01 config]# ll certs/
total 4
-rwxr-xr-x 1 root root 3443 Jan 12 10:01 elastic-certificates.p12
```

Once the p12 file is in certs, copy the whole certs directory to the other ES nodes:

```shell
[root@elk01 config]# scp -pr certs root@10.8.0.6:/hqtbj/hqtwww/elasticsearch_workspace/config/
[root@elk01 config]# scp -pr certs root@10.8.0.9:/hqtbj/hqtwww/elasticsearch_workspace/config/
```
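With more nodes, a loop saves typing. A minimal sketch, assuming root SSH access as in the `scp` commands above; `NODES`, `ES_CONF` and `DRY_RUN` are hypothetical variables whose defaults are this article's node addresses and config path.

```shell
#!/usr/bin/env bash
# Copy config/certs from this node to every other ES node (step 1.3).
# NODES and ES_CONF (hypothetical) default to this article's addresses and path.
set -euo pipefail

ES_CONF="${ES_CONF:-/hqtbj/hqtwww/elasticsearch_workspace/config}"
NODES=(10.8.0.6 10.8.0.9)

run() {
  # With DRY_RUN=1, print the command instead of executing it
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '%q ' "$@"
    printf '\n'
  else
    "$@"
  fi
}

distribute_certs() {
  local node
  for node in "${NODES[@]}"; do
    # -p keeps modes and timestamps, -r copies the directory recursively
    run scp -pr "$ES_CONF/certs" "root@${node}:${ES_CONF}/"
  done
}

# Run a function by name, e.g.: bash copy-certs.sh distribute_certs
if (( $# > 0 )); then "$@"; fi
```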

2. Edit the Elasticsearch config file to enable X-Pack

Do this on every ES node.

```shell
[root@elk01 ~]# vim /hqtbj/hqtwww/elasticsearch_workspace/config/elasticsearch.yml
```

```yaml
xpack.security.enabled: true
xpack.security.transport.ssl.enabled: true
xpack.security.transport.ssl.verification_mode: certificate
# Location of the p12 keystore. An absolute path is recommended, but a path relative
# to the config directory also works: "certs/elastic-certificates.p12"
xpack.security.transport.ssl.keystore.path: /hqtbj/hqtwww/elasticsearch_workspace/config/certs/elastic-certificates.p12
xpack.security.transport.ssl.truststore.path: /hqtbj/hqtwww/elasticsearch_workspace/config/certs/elastic-certificates.p12
```
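Because every node needs the same five lines, a small helper can append them once per node. A sketch under assumptions: it uses the article's absolute keystore path, treats any existing `xpack.security.enabled` line as "already configured" (it does not check the value), and must be run on each node against that node's own `elasticsearch.yml`. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Append the step-2 X-Pack settings to an elasticsearch.yml, at most once.
set -euo pipefail

CERT="/hqtbj/hqtwww/elasticsearch_workspace/config/certs/elastic-certificates.p12"

enable_xpack() {
  local yml="$1"
  # Any existing xpack.security.enabled line (true or false) counts as configured
  if grep -q '^xpack\.security\.enabled:' "$yml"; then
    echo "already configured: $yml"
    return 0
  fi
  # Make sure the file ends with a newline so the first new line is not glued on
  if [[ -s "$yml" && -n "$(tail -c 1 "$yml")" ]]; then
    echo >> "$yml"
  fi
  cat >> "$yml" <<EOF
xpack.security.enabled: true
xpack.security.transport.ssl.enabled: true
xpack.security.transport.ssl.verification_mode: certificate
xpack.security.transport.ssl.keystore.path: ${CERT}
xpack.security.transport.ssl.truststore.path: ${CERT}
EOF
}

# e.g.: bash enable-xpack.sh enable_xpack /hqtbj/hqtwww/elasticsearch_workspace/config/elasticsearch.yml
if (( $# > 0 )); then "$@"; fi
```

Running it twice leaves the file unchanged the second time, which makes it safe to include in a rerunnable setup script.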

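3. Restart every ES node and set the user passwords

3.1 Restart the ES nodes

```shell
[root@elk01 ~]# systemctl restart elasticsearch.service
```

3.2 Set the passwords

Run this on any one node of the cluster.

The cluster must be healthy when you run it (that is, after every node has been restarted with the new settings); otherwise the command fails.

To set each user's password by hand: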

```shell
[root@elk01 ~]# /hqtbj/hqtwww/elasticsearch_workspace/bin/elasticsearch-setup-passwords interactive
Initiating the setup of passwords for reserved users elastic,apm_system,kibana,kibana_system,logstash_system,beats_system,remote_monitoring_user.
You will be prompted to enter passwords as the process progresses.
Please confirm that you would like to continue [y/N]y
# Type each user's password; every password must be entered twice!
Enter password for [elastic]:
Reenter password for [elastic]:
Enter password for [apm_system]:
Reenter password for [apm_system]:
Enter password for [kibana_system]:
Reenter password for [kibana_system]:
Enter password for [logstash_system]:
Reenter password for [logstash_system]:
Enter password for [beats_system]:
Reenter password for [beats_system]:
Enter password for [remote_monitoring_user]:
Reenter password for [remote_monitoring_user]:
Changed password for user [apm_system]
Changed password for user [kibana_system]
Changed password for user [kibana]
Changed password for user [logstash_system]
Changed password for user [beats_system]
Changed password for user [remote_monitoring_user]
Changed password for user [elastic]
```

To have a random password generated for every user instead:

```shell
[root@elk01 ~]# /hqtbj/hqtwww/elasticsearch_workspace/bin/elasticsearch-setup-passwords auto
```

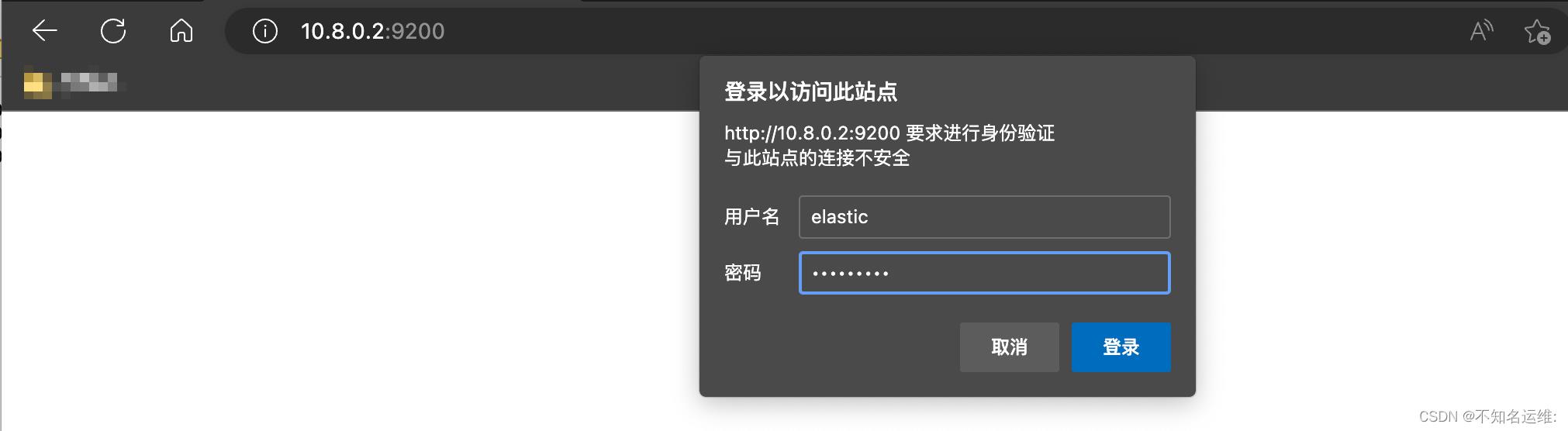

4. Access ES to verify

Security is now enabled, so any request to ES needs one of the usernames and passwords just set. Here we log in with the elastic account.
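The same check can be scripted with `curl`. A minimal sketch, assuming anonymous requests are answered with HTTP 401 and requests as `elastic` with 200 on `GET /_cluster/health`; `ES_URL` (defaulting to one of this article's nodes), `ES_PASS` and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Check that ES rejects anonymous requests (401) and accepts the elastic user (200).
# ES_URL and ES_PASS are hypothetical; ES_PASS must hold the elastic password.
set -euo pipefail

ES_URL="${ES_URL:-http://10.8.0.2:9200}"

http_code() {
  # Print only the HTTP status of GET /_cluster/health; extra args go to curl
  curl -s -o /dev/null -w '%{http_code}' "$@" "$ES_URL/_cluster/health"
}

verify_auth() {
  local anon authed
  anon="$(http_code)"
  authed="$(http_code -u "elastic:${ES_PASS:?set ES_PASS first}")"
  echo "anonymous=$anon elastic=$authed"
  [[ "$anon" == 401 && "$authed" == 200 ]]
}

# e.g.: ES_PASS='...' bash check-auth.sh verify_auth
if (( $# > 0 )); then "$@"; fi
```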

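II. Configure Kibana to use ES security

1. Give Kibana credentials for ES

Because ES now requires authentication and Kibana has to connect to the ES cluster, Kibana must be given an ES username and password before it can work normally.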

```shell
# Edit Kibana's main config file, kibana.yml
[root@localhost ~]# vim /hqtbj/hqtwww/kibana_workspace/config/kibana.yml
```

```yaml
...
# ES connection addresses
elasticsearch.hosts: ["http://10.8.0.2:9200","http://10.8.0.6:9200","http://10.8.0.9:9200"]
# Username for connecting to ES
elasticsearch.username: "kibana_system"
# Password for connecting to ES
elasticsearch.password: "123456"
...
```

For the username and password, use the "kibana_system" user whose password was set in the previous step.
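Before restarting Kibana, you can confirm that ES accepts the kibana_system credentials at all. A sketch, assuming `curl` is installed and using ES's `_security/_authenticate` endpoint, which returns the authenticated user's name and roles as JSON; `ES_URL` and the helper name are hypothetical.

```shell
#!/usr/bin/env bash
# Confirm that ES accepts a set of credentials, via GET /_security/_authenticate.
# ES_URL (hypothetical) defaults to one node from this article.
set -euo pipefail

ES_URL="${ES_URL:-http://10.8.0.2:9200}"

authenticates_as() {
  # $1 = user, $2 = password; succeeds only if ES reports that exact username back
  local reply
  reply="$(curl -s -u "$1:$2" "$ES_URL/_security/_authenticate")"
  echo "$reply"
  [[ "$reply" == *"\"username\":\"$1\""* ]]
}

# e.g.: bash whoami.sh authenticates_as kibana_system '<password>'
if (( $# > 0 )); then "$@"; fi
```

A wrong password gets a 401 error body instead, so the function fails.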

2. Restart Kibana and verify

```shell
[root@localhost ~]# systemctl restart kibana.service
```

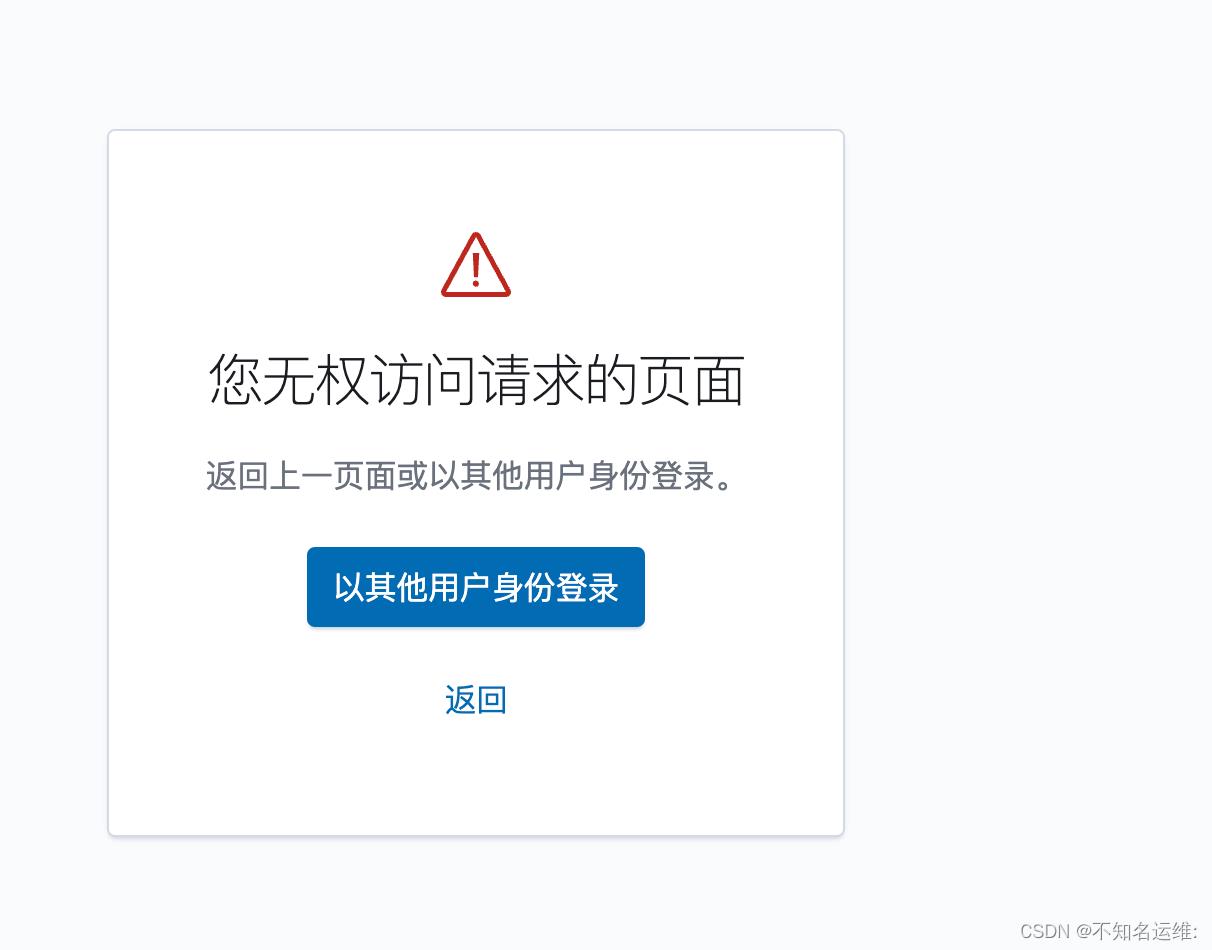

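Log in with the kibana user.

The login succeeds, but Kibana reports that the user has no permission to access it.

Log in with the "elastic" user instead, and it succeeds.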

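In the Kibana UI, click through the following in order:

(1) the menu bar;

(2) Stack Management;

(3) Security;

(4) Users;

Here you can see the users whose passwords we set above.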


These are built-in users and cannot be modified! You can create your own users and roles to meet your needs.

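3. Create a read-only role and a read-only account in Kibana

A read-only account is useful, for example, for developers.

3.1 Create the role

In the Kibana UI, click through the following in order:

(1) the menu bar;

(2) Stack Management;

(3) Security;

(4) Roles;

(5) Create role;

The read-only role also needs Kibana privileges; otherwise users with this role will have no access after logging in.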
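The same role can also be created through Kibana's role management API (`PUT /api/security/role/<name>`), which takes Elasticsearch and Kibana privileges in one request. A sketch under assumptions: the role name `developers` is the one that appears in the 403 message in 3.3, the index pattern `hqt-*` is a guess based on this article's index names, Kibana is assumed to listen on `http://localhost:5601`, `base: ["read"]` grants read-only access to all Kibana features in every space, and the hypothetical `ES_PASS` holds the elastic password.

```shell
#!/usr/bin/env bash
# Create the read-only "developers" role through Kibana's role API.
# KIBANA_URL and the hqt-* index pattern are assumptions; adjust both.
set -euo pipefail

KIBANA_URL="${KIBANA_URL:-http://localhost:5601}"

developers_role_body() {
  # ES part: read-only on the log indices; Kibana part: read-only in every space
  cat <<'EOF'
{
  "elasticsearch": {
    "cluster": [],
    "indices": [
      { "names": ["hqt-*"], "privileges": ["read", "view_index_metadata"] }
    ]
  },
  "kibana": [
    { "base": ["read"], "feature": {}, "spaces": ["*"] }
  ]
}
EOF
}

create_developers_role() {
  # Kibana rejects non-GET API requests without the kbn-xsrf header
  developers_role_body | curl -s -u "elastic:${ES_PASS:?set ES_PASS first}" \
    -X PUT "$KIBANA_URL/api/security/role/developers" \
    -H 'kbn-xsrf: true' -H 'Content-Type: application/json' \
    --data-binary @-
}

# e.g.: ES_PASS='...' bash kibana-role.sh create_developers_role
if (( $# > 0 )); then "$@"; fi
```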

3.2 Create the user

In the Kibana UI, click through the following in order:

(1) the menu bar;

(2) Stack Management;

(3) Security;

(4) Users;

(5) Create user;

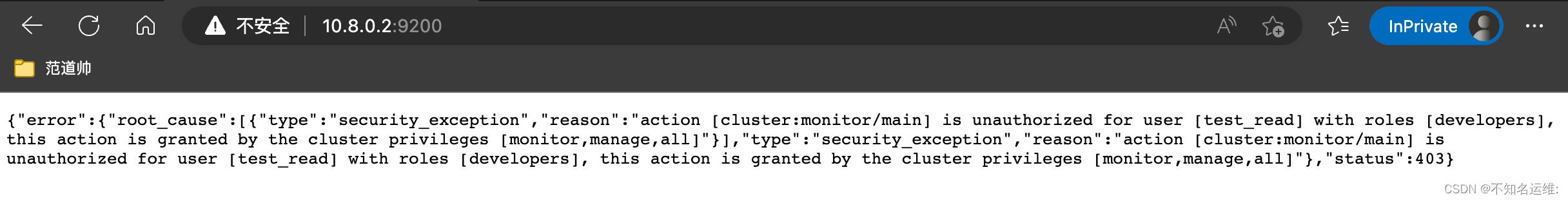
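The user can also be created with the ES user API (`POST /_security/user/<name>`). A sketch, assuming the role was saved as `developers` (the name in the 403 message in 3.3) and that the hypothetical `ES_PASS` holds the elastic password; because the JSON is built with `printf`, the password must not contain `"` or `\`.

```shell
#!/usr/bin/env bash
# Create a user with one role through the ES security API (step 3.2).
set -euo pipefail

ES_URL="${ES_URL:-http://10.8.0.2:9200}"

user_body() {
  # $1 = password, $2 = role; assumes neither contains '"' or '\'
  printf '{"password":"%s","roles":["%s"]}\n' "$1" "$2"
}

create_user() {
  # $1 = username, $2 = password, $3 = role; runs as the elastic superuser
  user_body "$2" "$3" | curl -s -u "elastic:${ES_PASS:?set ES_PASS first}" \
    -X POST "$ES_URL/_security/user/$1" \
    -H 'Content-Type: application/json' --data-binary @-
}

# e.g.: ES_PASS='...' bash es-user.sh create_user test_read '<password>' developers
if (( $# > 0 )); then "$@"; fi
```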

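3.3 What the read-only account sees

It can view the logs.

Because it only has read privileges on indices, it cannot perform any cluster operations, and that includes the RESTful API: such requests fail with a 403 error. For example: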

```shell
# List all indices
[root@kafka01 conf.d]# curl --user test_read:123456 'http://10.8.0.2:9200/_cat/indices?v' -k
{"error":{"root_cause":[{"type":"security_exception","reason":"action [indices:monitor/stats] is unauthorized for user [test_read] with roles [developers], this action is granted by the index privileges [monitor,manage,all]","suppressed":[{"type":"security_exception","reason":"action [cluster:monitor/state] is unauthorized for user [test_read] with roles [developers], this action is granted by the cluster privileges [read_ccr,transport_client,manage_ccr,monitor,manage,all]"},{"type":"security_exception","reason":"action [cluster:monitor/health] is unauthorized for user [test_read] with roles [developers], this action is granted by the cluster privileges [monitor,manage,all]"}]}],"type":"security_exception","reason":"action [indices:monitor/stats] is unauthorized for user [test_read] with roles [developers], this action is granted by the index privileges [monitor,manage,all]","suppressed":[{"type":"security_exception","reason":"action [cluster:monitor/state] is unauthorized for user [test_read] with roles [developers], this action is granted by the cluster privileges [read_ccr,transport_client,manage_ccr,monitor,manage,all]"},{"type":"security_exception","reason":"action [cluster:monitor/health] is unauthorized for user [test_read] with roles [developers], this action is granted by the cluster privileges [monitor,manage,all]"}]},"status":403}
```

Querying ES node information fails in the same way.
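To see at a glance which requests the read-only user can and cannot make, a sketch that prints the HTTP status code for each path. The paths in the usage line are illustrative; if the role behaves as described above, you would expect a search on the log indices to return 200 and `/_cat/indices` and `/_nodes` to return 403, but that is an expectation, not captured output. `ES_URL` and the function name are hypothetical.

```shell
#!/usr/bin/env bash
# Print the HTTP status each ES path returns for a given user.
set -euo pipefail

ES_URL="${ES_URL:-http://10.8.0.2:9200}"

probe() {
  # $1 = user, $2 = password, remaining args = paths to GET
  local user="$1" pass="$2" path code
  shift 2
  for path in "$@"; do
    code="$(curl -s -o /dev/null -w '%{http_code}' -u "$user:$pass" "$ES_URL$path")"
    printf '%s %s\n' "$code" "$path"
  done
}

# e.g.: bash probe.sh probe test_read '<password>' /_cat/indices /_nodes '/hqt-gotone-pro-*/_search?size=1'
if (( $# > 0 )); then "$@"; fi
```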

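III. Configure Logstash to use ES security

Although we set a password for the "logstash_system" user when enabling ES security, that user cannot be used the way Kibana uses kibana_system. If you use "logstash_system" directly, writing data to an index fails with a 403 error, as below: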

```text
[2023-01-13T13:32:59,126][ERROR][logstash.outputs.elasticsearch][main][53f2ca2de58f24f94b83b4a2ddfacfaaa953df1f59b7c9426f204cd3cda13a80] Encountered a retryable error (will retry with exponential backoff) {:code=>403, :url=>"http://10.8.0.6:9200/_bulk", :content_length=>3145}
```

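This is because the built-in "logstash_system" user has the "logstash_system" role, which is meant for sending monitoring data and has no privilege to write to indices. So we need to create a new role and user that can write.

1. Create the Logstash user

1.1 First create a role named "logstash_write" with the cluster privilege monitor;

1.2 Give this role the index privileges write, delete, create_index and monitor;

1.3 Create a new user that has this role.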
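Steps 1.1 to 1.3 can also be done with the ES role and user APIs (`PUT /_security/role/<name>`, `POST /_security/user/<name>`). A sketch under assumptions: the privileges follow the list above, the index pattern `hqt-gotone-pro-*` matches the index name used in this article's Logstash output, the user name `logstash_to_es` is the one in that output, and the hypothetical `ES_PASS` holds the elastic password. The new user's password must not contain `"` or `\`.

```shell
#!/usr/bin/env bash
# Create the logstash_write role and the logstash_to_es user via the ES security API.
# hqt-gotone-pro-* matches the index name in this article's Logstash output.
set -euo pipefail

ES_URL="${ES_URL:-http://10.8.0.2:9200}"

logstash_role_body() {
  cat <<'EOF'
{
  "cluster": ["monitor"],
  "indices": [
    {
      "names": ["hqt-gotone-pro-*"],
      "privileges": ["write", "delete", "create_index", "monitor"]
    }
  ]
}
EOF
}

es_request() {
  # $1 = HTTP method, $2 = path; JSON body is read from stdin; runs as elastic
  curl -s -u "elastic:${ES_PASS:?set ES_PASS first}" -X "$1" "$ES_URL$2" \
    -H 'Content-Type: application/json' --data-binary @-
}

setup_logstash_writer() {
  # $1 = password for logstash_to_es (assumed to contain no '"' or '\')
  logstash_role_body | es_request PUT /_security/role/logstash_write
  printf '{"password":"%s","roles":["logstash_write"]}\n' "$1" \
    | es_request POST /_security/user/logstash_to_es
}

# e.g.: ES_PASS='...' bash logstash-user.sh setup_logstash_writer '<password>'
if (( $# > 0 )); then "$@"; fi
```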

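2. Edit the Logstash output to ES

Because ES now requires authentication, the Logstash output to ES must include the user and password just created:

```shell
[root@kafka02 ~]# vim /hqtbj/hqtwww/logstash_workspace/conf.d/gotone-kafka-to-es.conf
```

```conf
...
output {
  elasticsearch {
    # ES cluster addresses
    hosts => ["10.8.0.2:9200","10.8.0.6:9200","10.8.0.9:9200"]
    # Name of the index to write to
    index => "hqt-gotone-pro-%{+YYYY.MM.dd}"
    # User with write privileges on the index
    user => "logstash_to_es"
    # The user's password
    password => "123456"
  }
}
```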

Restart Logstash after making the change.

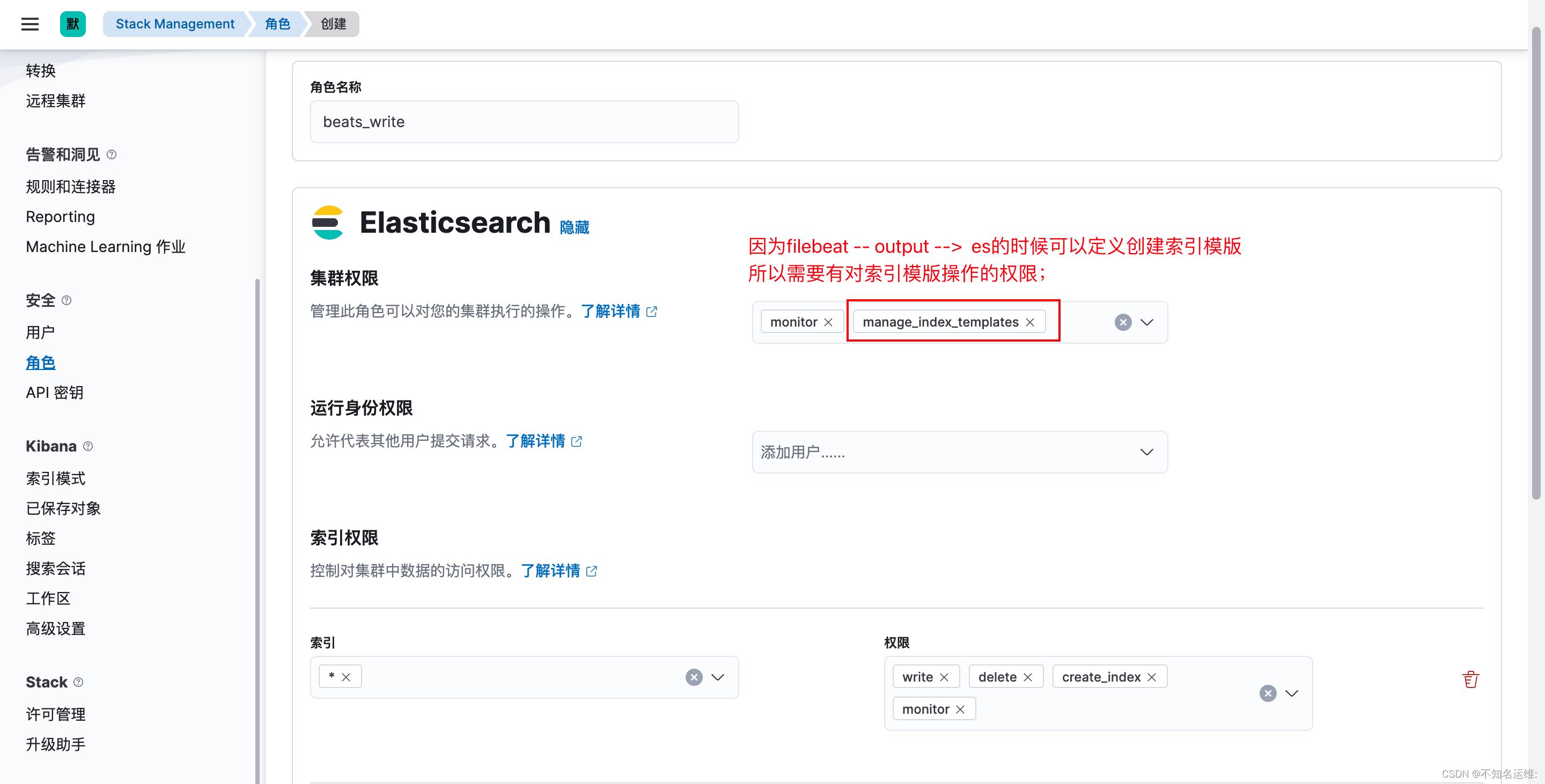
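You can also ask ES directly whether the new user holds the privileges the output needs, using the `_has_privileges` API (`POST /_security/user/_has_privileges`, whose answer includes a `has_all_requested` flag). A sketch; the privileges checked (`write` and `create_index` on `hqt-gotone-pro-*`, plus cluster `monitor`) are my selection from the role above, and the function names are hypothetical. Run as `logstash_system`, it should report `has_all_requested` as false, consistent with the 403 shown earlier.

```shell
#!/usr/bin/env bash
# Ask ES whether a user holds the privileges the Logstash output needs.
set -euo pipefail

ES_URL="${ES_URL:-http://10.8.0.2:9200}"

privileges_query() {
  # Note: this API uses "index", not "indices"
  cat <<'EOF'
{
  "cluster": ["monitor"],
  "index": [
    { "names": ["hqt-gotone-pro-*"], "privileges": ["write", "create_index"] }
  ]
}
EOF
}

can_write() {
  # $1 = user, $2 = password; prints ES's answer, succeeds only if all are granted
  local reply
  reply="$(privileges_query | curl -s -u "$1:$2" -X POST \
    "$ES_URL/_security/user/_has_privileges" \
    -H 'Content-Type: application/json' --data-binary @-)"
  echo "$reply"
  [[ "$reply" == *'"has_all_requested":true'* ]]
}

# e.g.: bash check-writer.sh can_write logstash_to_es '<password>'
if (( $# > 0 )); then "$@"; fi
```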

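IV. Configure Filebeat to use ES security

The steps for Filebeat are basically the same as for Logstash: the built-in "beats_system" user can't be used either and fails with a 403 error: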

```text
2023-01-13T13:52:19.569+0800 ERROR [publisher_pipeline_output] pipeline/output.go:154 Failed to connect to backoff(elasticsearch(http://10.8.0.9:9200)): Connection marked as failed because the onConnect callback failed: error loading template: failed to load template: couldn't load template: 403 Forbidden: {"error":{"root_cause":[{"type":"security_exception","reason":"action [indices:admin/index_template/put] is unauthorized for user [beats_system] with roles [beats_system], this action is granted by the cluster privileges [manage_index_templates,manage,all]"}],"type":"security_exception","reason":"action [indices:admin/index_template/put] is unauthorized for user [beats_system] with roles [beats_system], this action is granted by the cluster privileges [manage_index_templates,manage,all]"},"status":403}. Response body: {"error":{"root_cause":[{"type":"security_exception","reason":"action [indices:admin/index_template/put] is unauthorized for user [beats_system] with roles [beats_system], this action is granted by the cluster privileges [manage_index_templates,manage,all]"}],"type":"security_exception","reason":"action [indices:admin/index_template/put] is unauthorized for user [beats_system] with roles [beats_system], this action is granted by the cluster privileges [manage_index_templates,manage,all]"},"status":403}
```

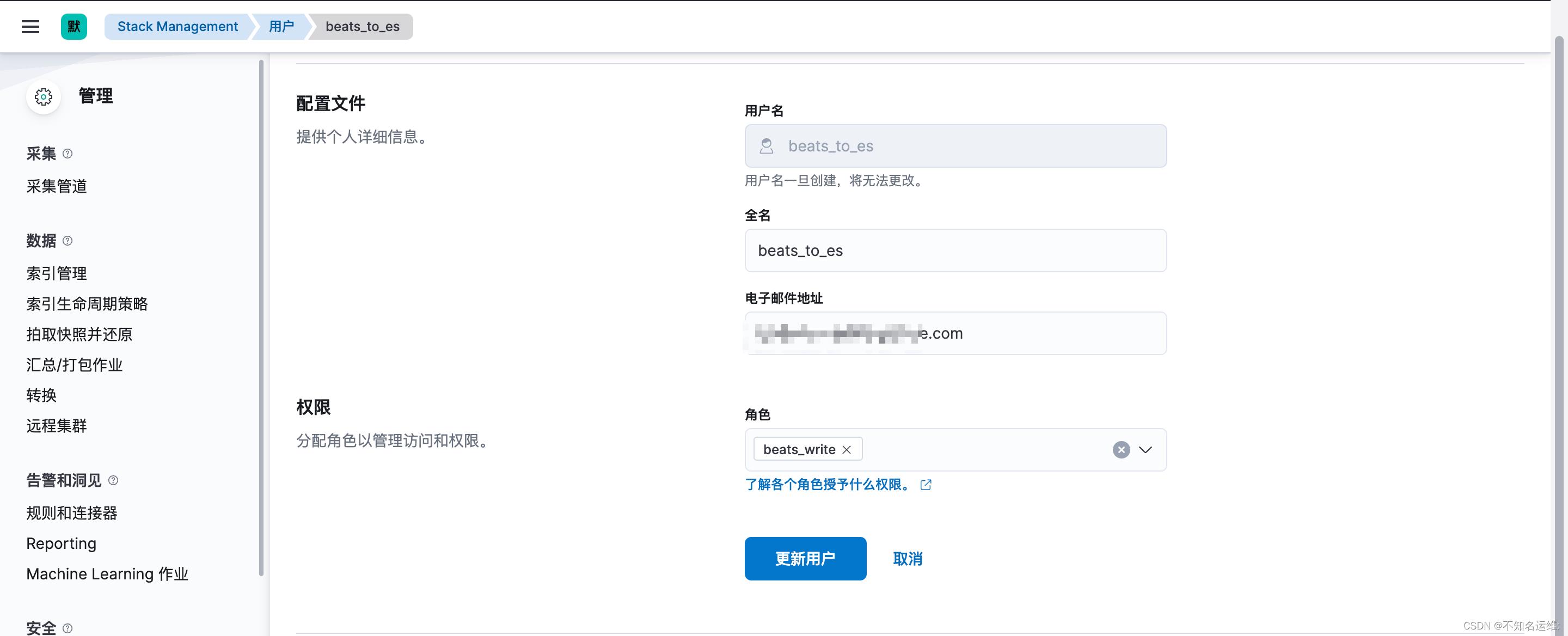

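1. Create the Filebeat user

1.1 First create a role named "beats_write" with the cluster privileges monitor and manage_index_templates;

1.2 Give this role the index privileges write, delete, create_index and monitor;

1.3 Create a new user that has this role.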
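As for Logstash, the role and user can be created through the ES security API. A sketch under assumptions: the privileges follow the list above (`manage_index_templates` is the privilege the beats_system error asks for), the index pattern `oldboyedu-linux-*` and the user name `beats_to_es` come from the Filebeat config in this article, and the hypothetical `ES_PASS` holds the elastic password; the new password must not contain `"` or `\`.

```shell
#!/usr/bin/env bash
# Create the beats_write role and the beats_to_es user via the ES security API.
set -euo pipefail

ES_URL="${ES_URL:-http://10.8.0.2:9200}"

beats_role_body() {
  # manage_index_templates lets Filebeat load its index template at startup
  cat <<'EOF'
{
  "cluster": ["monitor", "manage_index_templates"],
  "indices": [
    {
      "names": ["oldboyedu-linux-*"],
      "privileges": ["write", "delete", "create_index", "monitor"]
    }
  ]
}
EOF
}

es_request() {
  # $1 = HTTP method, $2 = path; JSON body is read from stdin; runs as elastic
  curl -s -u "elastic:${ES_PASS:?set ES_PASS first}" -X "$1" "$ES_URL$2" \
    -H 'Content-Type: application/json' --data-binary @-
}

setup_beats_writer() {
  # $1 = password for beats_to_es (assumed to contain no '"' or '\')
  beats_role_body | es_request PUT /_security/role/beats_write
  printf '{"password":"%s","roles":["beats_write"]}\n' "$1" \
    | es_request POST /_security/user/beats_to_es
}

# e.g.: ES_PASS='...' bash beats-user.sh setup_beats_writer '<password>'
if (( $# > 0 )); then "$@"; fi
```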

2. Edit the Filebeat output to ES

```yaml
output.elasticsearch:
  enabled: true
  hosts: ["http://10.8.0.2:9200","http://10.8.0.6:9200","http://10.8.0.9:9200"]
  index: "oldboyedu-linux-elk-%{+yyyy.MM.dd}"
  # Username
  username: "beats_to_es"
  # Password
  password: "123456"

## Index template settings
# Disable index lifecycle management; if it is enabled, the custom index name above is ignored
setup.ilm.enabled: false
# Name of the index template
setup.template.name: "oldboyedu-linux"
# Pattern the index template matches
setup.template.pattern: "oldboyedu-linux-*"
```

Restart Filebeat after making the change.
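Before restarting, Filebeat's built-in `test config` and `test output` subcommands can catch YAML mistakes and connection problems. A sketch under assumptions: `filebeat` is on the `PATH`, and `FILEBEAT_CFG` (hypothetical) defaults to `/etc/filebeat/filebeat.yml` because the article does not show where its filebeat.yml lives. Note that `test output` checks connectivity to ES, not the `manage_index_templates` privilege, so the template error above would only show up in the Filebeat log after a restart.

```shell
#!/usr/bin/env bash
# Check the edited filebeat.yml and the connection to ES before restarting Filebeat.
# FILEBEAT_CFG is an assumption: the article does not show its filebeat.yml path.
set -euo pipefail

FILEBEAT_CFG="${FILEBEAT_CFG:-/etc/filebeat/filebeat.yml}"

run() {
  # With DRY_RUN=1, print the command instead of executing it
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '%q ' "$@"
    printf '\n'
  else
    "$@"
  fi
}

check_filebeat() {
  # "test config" validates the file; "test output" tries to connect to each ES host
  run filebeat test config -c "$FILEBEAT_CFG"
  run filebeat test output -c "$FILEBEAT_CFG"
}

# e.g.: bash check-filebeat.sh check_filebeat
if (( $# > 0 )); then "$@"; fi
```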
