Getting Started with the Elastic Stack

Posted by biehongli

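Beats and Logstash collect and process data, playing the role of ETL (Extract, Transform, Load).

Elasticsearch stores, searches, and analyzes the data.

Kibana handles data exploration and visualization.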

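1. Installing Elasticsearch 6.x. I use Elasticsearch 6.7.0 here.

Note: I previously installed version 5.4.3, see https://www.cnblogs.com/biehongli/p/11643482.html

2. Upload the downloaded archive to the server, or download it directly on the server.

Note: install JDK 1.8 or later first. Because my earlier installation ran into errors (Elasticsearch refuses to start as root), this time I create a dedicated user and group before anything else.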

    [root@slaver4 package]# groupadd elsearch
    [root@slaver4 package]# useradd elsearch -g elsearch
    [root@slaver4 package]# passwd elsearch
    Changing password for user elsearch.
    New password:
    BAD PASSWORD: The password is shorter than 8 characters
    Retype new password:
    passwd: all authentication tokens updated successfully.
    [root@slaver4 package]# tar -zxvf elasticsearch-6.7.0.tar.gz -C /home/hadoop/soft/
    [root@slaver4 soft]# chown -R elsearch:elsearch elasticsearch-6.7.0/
    [root@slaver4 soft]# ls
    elasticsearch-6.7.0
    [root@slaver4 soft]# ll
    total 8
    drwxr-xr-x. 8 elsearch elsearch 143 Mar 21 2019 elasticsearch-6.7.0
    [root@slaver4 soft]#
    [root@slaver4 soft]# su elsearch
    [elsearch@slaver4 soft]$ cd elasticsearch-6.7.0/
    [elsearch@slaver4 elasticsearch-6.7.0]$ ls
    bin config lib LICENSE.txt logs modules NOTICE.txt plugins README.textile
    [elsearch@slaver4 elasticsearch-6.7.0]$ cd bin/
    [elsearch@slaver4 bin]$ ls
    elasticsearch elasticsearch-cli.bat elasticsearch-migrate elasticsearch-service-mgr.exe elasticsearch-sql-cli-6.7.0.jar elasticsearch-users.bat x-pack-watcher-env.bat
    elasticsearch.bat elasticsearch-croneval elasticsearch-migrate.bat elasticsearch-service-x64.exe elasticsearch-sql-cli.bat x-pack
    elasticsearch-certgen elasticsearch-croneval.bat elasticsearch-plugin elasticsearch-setup-passwords elasticsearch-syskeygen x-pack-env
    elasticsearch-certgen.bat elasticsearch-env elasticsearch-plugin.bat elasticsearch-setup-passwords.bat elasticsearch-syskeygen.bat x-pack-env.bat
    elasticsearch-certutil elasticsearch-env.bat elasticsearch-saml-metadata elasticsearch-shard elasticsearch-translog x-pack-security-env
    elasticsearch-certutil.bat elasticsearch-keystore elasticsearch-saml-metadata.bat elasticsearch-shard.bat elasticsearch-translog.bat x-pack-security-env.bat
    elasticsearch-cli elasticsearch-keystore.bat elasticsearch-service.bat elasticsearch-sql-cli elasticsearch-users x-pack-watcher-env
    [elsearch@slaver4 bin]$ ./elasticsearch

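This time startup went surprisingly smoothly, but http://192.168.110.133:9200/ cannot be reached from a browser: by default the node binds only to the loopback address (see publish_address {127.0.0.1:9200} in the log below). The configuration file has to be changed before the browser can reach it.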

    [elsearch@slaver4 bin]$ ./elasticsearch
    OpenJDK 64-Bit Server VM warning: If the number of processors is expected to increase from one, then you should configure the number of parallel GC threads appropriately using -XX:ParallelGCThreads=N
    [2019-10-25T15:09:46,963][INFO ][o.e.e.NodeEnvironment ] [99_nTdv] using [1] data paths, mounts [[/ (rootfs)]], net usable_space [10.5gb], net total_space [17.7gb], types [rootfs]
    [2019-10-25T15:09:46,968][INFO ][o.e.e.NodeEnvironment ] [99_nTdv] heap size [1015.6mb], compressed ordinary object pointers [true]
    [2019-10-25T15:09:46,978][INFO ][o.e.n.Node ] [99_nTdv] node name derived from node ID [99_nTdvNRUS0U0dJBpu7kA]; set [node.name] to override
    [2019-10-25T15:09:46,978][INFO ][o.e.n.Node ] [99_nTdv] version[6.7.0], pid[8690], build[default/tar/8453f77/2019-03-21T15:32:29.844721Z], OS[Linux/3.10.0-957.el7.x86_64/amd64], JVM[Oracle Corporation/OpenJDK 64-Bit Server VM/1.8.0_181/25.181-b13]
    [2019-10-25T15:09:46,978][INFO ][o.e.n.Node ] [99_nTdv] JVM arguments [-Xms1g, -Xmx1g, -XX:+UseConcMarkSweepGC, -XX:CMSInitiatingOccupancyFraction=75, -XX:+UseCMSInitiatingOccupancyOnly, -Des.networkaddress.cache.ttl=60, -Des.networkaddress.cache.negative.ttl=10, -XX:+AlwaysPreTouch, -Xss1m, -Djava.awt.headless=true, -Dfile.encoding=UTF-8, -Djna.nosys=true, -XX:-OmitStackTraceInFastThrow, -Dio.netty.noUnsafe=true, -Dio.netty.noKeySetOptimization=true, -Dio.netty.recycler.maxCapacityPerThread=0, -Dlog4j.shutdownHookEnabled=false, -Dlog4j2.disable.jmx=true, -Djava.io.tmpdir=/tmp/elasticsearch-8871744481955517150, -XX:+HeapDumpOnOutOfMemoryError, -XX:HeapDumpPath=data, -XX:ErrorFile=logs/hs_err_pid%p.log, -XX:+PrintGCDetails, -XX:+PrintGCDateStamps, -XX:+PrintTenuringDistribution, -XX:+PrintGCApplicationStoppedTime, -Xloggc:logs/gc.log, -XX:+UseGCLogFileRotation, -XX:NumberOfGCLogFiles=32, -XX:GCLogFileSize=64m, -Des.path.home=/home/hadoop/soft/elasticsearch-6.7.0, -Des.path.conf=/home/hadoop/soft/elasticsearch-6.7.0/config, -Des.distribution.flavor=default, -Des.distribution.type=tar]
    [2019-10-25T15:09:58,240][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [aggs-matrix-stats]
    [2019-10-25T15:09:58,241][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [analysis-common]
    [2019-10-25T15:09:58,241][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [ingest-common]
    [2019-10-25T15:09:58,241][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [ingest-geoip]
    [2019-10-25T15:09:58,241][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [ingest-user-agent]
    [2019-10-25T15:09:58,241][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [lang-expression]
    [2019-10-25T15:09:58,242][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [lang-mustache]
    [2019-10-25T15:09:58,242][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [lang-painless]
    [2019-10-25T15:09:58,242][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [mapper-extras]
    [2019-10-25T15:09:58,242][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [parent-join]
    [2019-10-25T15:09:58,243][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [percolator]
    [2019-10-25T15:09:58,243][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [rank-eval]
    [2019-10-25T15:09:58,243][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [reindex]
    [2019-10-25T15:09:58,243][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [repository-url]
    [2019-10-25T15:09:58,243][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [transport-netty4]
    [2019-10-25T15:09:58,243][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [tribe]
    [2019-10-25T15:09:58,243][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [x-pack-ccr]
    [2019-10-25T15:09:58,244][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [x-pack-core]
    [2019-10-25T15:09:58,244][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [x-pack-deprecation]
    [2019-10-25T15:09:58,244][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [x-pack-graph]
    [2019-10-25T15:09:58,245][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [x-pack-ilm]
    [2019-10-25T15:09:58,245][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [x-pack-logstash]
    [2019-10-25T15:09:58,245][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [x-pack-ml]
    [2019-10-25T15:09:58,245][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [x-pack-monitoring]
    [2019-10-25T15:09:58,245][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [x-pack-rollup]
    [2019-10-25T15:09:58,245][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [x-pack-security]
    [2019-10-25T15:09:58,245][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [x-pack-sql]
    [2019-10-25T15:09:58,246][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [x-pack-upgrade]
    [2019-10-25T15:09:58,246][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [x-pack-watcher]
    [2019-10-25T15:09:58,246][INFO ][o.e.p.PluginsService ] [99_nTdv] no plugins loaded
    [2019-10-25T15:10:17,907][INFO ][o.e.x.s.a.s.FileRolesStore] [99_nTdv] parsed [0] roles from file [/home/hadoop/soft/elasticsearch-6.7.0/config/roles.yml]
    [2019-10-25T15:10:20,420][INFO ][o.e.x.m.p.l.CppLogMessageHandler] [99_nTdv] [controller/8755] [Main.cc@109] controller (64 bit): Version 6.7.0 (Build d74ae2ac01b10d) Copyright (c) 2019 Elasticsearch BV
    [2019-10-25T15:10:23,540][DEBUG][o.e.a.ActionModule ] [99_nTdv] Using REST wrapper from plugin org.elasticsearch.xpack.security.Security
    [2019-10-25T15:10:24,562][INFO ][o.e.d.DiscoveryModule ] [99_nTdv] using discovery type [zen] and host providers [settings]
    [2019-10-25T15:10:28,665][INFO ][o.e.n.Node ] [99_nTdv] initialized
    [2019-10-25T15:10:28,666][INFO ][o.e.n.Node ] [99_nTdv] starting ...
    [2019-10-25T15:10:29,316][INFO ][o.e.t.TransportService ] [99_nTdv] publish_address {127.0.0.1:9300}, bound_addresses {[::1]:9300}, {127.0.0.1:9300}
    [2019-10-25T15:10:29,379][WARN ][o.e.b.BootstrapChecks ] [99_nTdv] max file descriptors [4096] for elasticsearch process is too low, increase to at least [65535]
    [2019-10-25T15:10:29,380][WARN ][o.e.b.BootstrapChecks ] [99_nTdv] max number of threads [3756] for user [elsearch] is too low, increase to at least [4096]
    [2019-10-25T15:10:29,380][WARN ][o.e.b.BootstrapChecks ] [99_nTdv] max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]
    [2019-10-25T15:10:32,536][INFO ][o.e.c.s.MasterService ] [99_nTdv] zen-disco-elected-as-master ([0] nodes joined), reason: new_master {99_nTdv}{99_nTdvNRUS0U0dJBpu7kA}{MMkMOY4eSzmE1qOyNEXang}{127.0.0.1}{127.0.0.1:9300}{ml.machine_memory=1019797504, xpack.installed=true, ml.max_open_jobs=20, ml.enabled=true}
    [2019-10-25T15:10:32,545][INFO ][o.e.c.s.ClusterApplierService] [99_nTdv] new_master {99_nTdv}{99_nTdvNRUS0U0dJBpu7kA}{MMkMOY4eSzmE1qOyNEXang}{127.0.0.1}{127.0.0.1:9300}{ml.machine_memory=1019797504, xpack.installed=true, ml.max_open_jobs=20, ml.enabled=true}, reason: apply cluster state (from master [master {99_nTdv}{99_nTdvNRUS0U0dJBpu7kA}{MMkMOY4eSzmE1qOyNEXang}{127.0.0.1}{127.0.0.1:9300}{ml.machine_memory=1019797504, xpack.installed=true, ml.max_open_jobs=20, ml.enabled=true} committed version [1] source [zen-disco-elected-as-master ([0] nodes joined)]])
    [2019-10-25T15:10:32,902][INFO ][o.e.h.n.Netty4HttpServerTransport] [99_nTdv] publish_address {127.0.0.1:9200}, bound_addresses {[::1]:9200}, {127.0.0.1:9200}
    [2019-10-25T15:10:32,903][INFO ][o.e.n.Node ] [99_nTdv] started
    [2019-10-25T15:10:32,945][WARN ][o.e.x.s.a.s.m.NativeRoleMappingStore] [99_nTdv] Failed to clear cache for realms [[]]
    [2019-10-25T15:10:33,180][INFO ][o.e.g.GatewayService ] [99_nTdv] recovered [0] indices into cluster_state
    [2019-10-25T15:10:34,414][INFO ][o.e.c.m.MetaDataIndexTemplateService] [99_nTdv] adding template [.triggered_watches] for index patterns [.triggered_watches*]
    [2019-10-25T15:10:34,832][INFO ][o.e.c.m.MetaDataIndexTemplateService] [99_nTdv] adding template [.watch-history-9] for index patterns [.watcher-history-9*]
    [2019-10-25T15:10:34,904][INFO ][o.e.c.m.MetaDataIndexTemplateService] [99_nTdv] adding template [.watches] for index patterns [.watches*]
    [2019-10-25T15:10:35,020][INFO ][o.e.c.m.MetaDataIndexTemplateService] [99_nTdv] adding template [.monitoring-logstash] for index patterns [.monitoring-logstash-6-*]
    [2019-10-25T15:10:35,158][INFO ][o.e.c.m.MetaDataIndexTemplateService] [99_nTdv] adding template [.monitoring-es] for index patterns [.monitoring-es-6-*]
    [2019-10-25T15:10:35,237][INFO ][o.e.c.m.MetaDataIndexTemplateService] [99_nTdv] adding template [.monitoring-beats] for index patterns [.monitoring-beats-6-*]
    [2019-10-25T15:10:35,304][INFO ][o.e.c.m.MetaDataIndexTemplateService] [99_nTdv] adding template [.monitoring-alerts] for index patterns [.monitoring-alerts-6]
    [2019-10-25T15:10:35,395][INFO ][o.e.c.m.MetaDataIndexTemplateService] [99_nTdv] adding template [.monitoring-kibana] for index patterns [.monitoring-kibana-6-*]
    [2019-10-25T15:10:35,761][INFO ][o.e.l.LicenseService ] [99_nTdv] license [3bf82dcc-622e-4a1e-ab9e-a2eb1a194bde] mode [basic] - valid

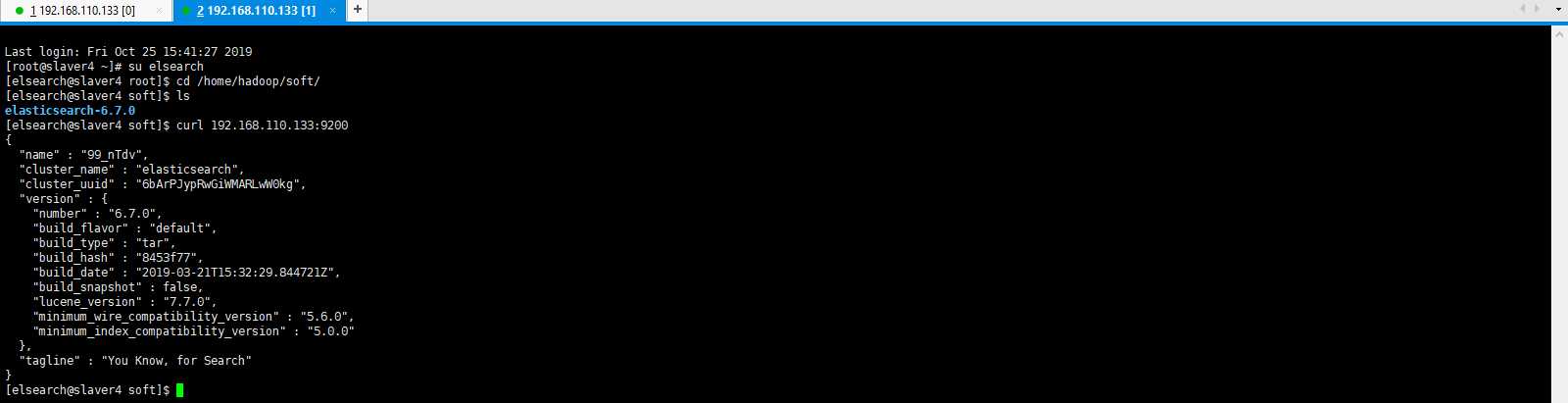

Accessing the node locally with curl http://127.0.0.1:9200/ works fine.

    [elsearch@slaver4 soft]$ curl http://127.0.0.1:9200/
    {
      "name" : "99_nTdv",
      "cluster_name" : "elasticsearch",
      "cluster_uuid" : "6bArPJypRwGiWMARLwW0kg",
      "version" : {
        "number" : "6.7.0",
        "build_flavor" : "default",
        "build_type" : "tar",
        "build_hash" : "8453f77",
        "build_date" : "2019-03-21T15:32:29.844721Z",
        "build_snapshot" : false,
        "lucene_version" : "7.7.0",
        "minimum_wire_compatibility_version" : "5.6.0",
        "minimum_index_compatibility_version" : "5.0.0"
      },
      "tagline" : "You Know, for Search"
    }

Add the following setting to the configuration file elasticsearch.yml:

    network.host: 192.168.110.133

    [elsearch@slaver4 soft]$ cd elasticsearch-6.7.0/
    [elsearch@slaver4 elasticsearch-6.7.0]$ ls
    bin config data lib LICENSE.txt logs modules NOTICE.txt plugins README.textile
    [elsearch@slaver4 elasticsearch-6.7.0]$ cd config/
    [elsearch@slaver4 config]$ ls
    elasticsearch.keystore elasticsearch.yml jvm.options log4j2.properties role_mapping.yml roles.yml users users_roles
    [elsearch@slaver4 config]$ vim elasticsearch.yml

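Unfortunately, Elasticsearch fails to start as soon as this setting is changed. The errors are essentially the same as the ones from my first installation; the output is included here as well.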

    [elsearch@slaver4 bin]$ ./elasticsearch
    OpenJDK 64-Bit Server VM warning: If the number of processors is expected to increase from one, then you should configure the number of parallel GC threads appropriately using -XX:ParallelGCThreads=N
    [2019-10-25T15:20:42,865][INFO ][o.e.e.NodeEnvironment ] [99_nTdv] using [1] data paths, mounts [[/ (rootfs)]], net usable_space [10.5gb], net total_space [17.7gb], types [rootfs]
    [2019-10-25T15:20:42,901][INFO ][o.e.e.NodeEnvironment ] [99_nTdv] heap size [1015.6mb], compressed ordinary object pointers [true]
    [2019-10-25T15:20:42,911][INFO ][o.e.n.Node ] [99_nTdv] node name derived from node ID [99_nTdvNRUS0U0dJBpu7kA]; set [node.name] to override
    [2019-10-25T15:20:42,911][INFO ][o.e.n.Node ] [99_nTdv] version[6.7.0], pid[8990], build[default/tar/8453f77/2019-03-21T15:32:29.844721Z], OS[Linux/3.10.0-957.el7.x86_64/amd64], JVM[Oracle Corporation/OpenJDK 64-Bit Server VM/1.8.0_181/25.181-b13]
    [2019-10-25T15:20:42,912][INFO ][o.e.n.Node ] [99_nTdv] JVM arguments [-Xms1g, -Xmx1g, -XX:+UseConcMarkSweepGC, -XX:CMSInitiatingOccupancyFraction=75, -XX:+UseCMSInitiatingOccupancyOnly, -Des.networkaddress.cache.ttl=60, -Des.networkaddress.cache.negative.ttl=10, -XX:+AlwaysPreTouch, -Xss1m, -Djava.awt.headless=true, -Dfile.encoding=UTF-8, -Djna.nosys=true, -XX:-OmitStackTraceInFastThrow, -Dio.netty.noUnsafe=true, -Dio.netty.noKeySetOptimization=true, -Dio.netty.recycler.maxCapacityPerThread=0, -Dlog4j.shutdownHookEnabled=false, -Dlog4j2.disable.jmx=true, -Djava.io.tmpdir=/tmp/elasticsearch-8887605790162217955, -XX:+HeapDumpOnOutOfMemoryError, -XX:HeapDumpPath=data, -XX:ErrorFile=logs/hs_err_pid%p.log, -XX:+PrintGCDetails, -XX:+PrintGCDateStamps, -XX:+PrintTenuringDistribution, -XX:+PrintGCApplicationStoppedTime, -Xloggc:logs/gc.log, -XX:+UseGCLogFileRotation, -XX:NumberOfGCLogFiles=32, -XX:GCLogFileSize=64m, -Des.path.home=/home/hadoop/soft/elasticsearch-6.7.0, -Des.path.conf=/home/hadoop/soft/elasticsearch-6.7.0/config, -Des.distribution.flavor=default, -Des.distribution.type=tar]
    [2019-10-25T15:20:56,645][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [aggs-matrix-stats]
    [2019-10-25T15:20:56,648][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [analysis-common]
    [2019-10-25T15:20:56,650][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [ingest-common]
    [2019-10-25T15:20:56,651][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [ingest-geoip]
    [2019-10-25T15:20:56,652][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [ingest-user-agent]
    [2019-10-25T15:20:56,653][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [lang-expression]
    [2019-10-25T15:20:56,673][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [lang-mustache]
    [2019-10-25T15:20:56,674][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [lang-painless]
    [2019-10-25T15:20:56,675][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [mapper-extras]
    [2019-10-25T15:20:56,675][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [parent-join]
    [2019-10-25T15:20:56,677][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [percolator]
    [2019-10-25T15:20:56,677][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [rank-eval]
    [2019-10-25T15:20:56,677][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [reindex]
    [2019-10-25T15:20:56,677][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [repository-url]
    [2019-10-25T15:20:56,677][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [transport-netty4]
    [2019-10-25T15:20:56,678][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [tribe]
    [2019-10-25T15:20:56,678][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [x-pack-ccr]
    [2019-10-25T15:20:56,678][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [x-pack-core]
    [2019-10-25T15:20:56,678][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [x-pack-deprecation]
    [2019-10-25T15:20:56,678][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [x-pack-graph]
    [2019-10-25T15:20:56,679][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [x-pack-ilm]
    [2019-10-25T15:20:56,679][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [x-pack-logstash]
    [2019-10-25T15:20:56,680][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [x-pack-ml]
    [2019-10-25T15:20:56,683][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [x-pack-monitoring]
    [2019-10-25T15:20:56,703][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [x-pack-rollup]
    [2019-10-25T15:20:56,703][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [x-pack-security]
    [2019-10-25T15:20:56,703][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [x-pack-sql]
    [2019-10-25T15:20:56,704][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [x-pack-upgrade]
    [2019-10-25T15:20:56,704][INFO ][o.e.p.PluginsService ] [99_nTdv] loaded module [x-pack-watcher]
    [2019-10-25T15:20:56,706][INFO ][o.e.p.PluginsService ] [99_nTdv] no plugins loaded
    [2019-10-25T15:21:18,215][INFO ][o.e.x.s.a.s.FileRolesStore] [99_nTdv] parsed [0] roles from file [/home/hadoop/soft/elasticsearch-6.7.0/config/roles.yml]
    [2019-10-25T15:21:21,668][INFO ][o.e.x.m.p.l.CppLogMessageHandler] [99_nTdv] [controller/9054] [Main.cc@109] controller (64 bit): Version 6.7.0 (Build d74ae2ac01b10d) Copyright (c) 2019 Elasticsearch BV
    [2019-10-25T15:21:24,554][DEBUG][o.e.a.ActionModule ] [99_nTdv] Using REST wrapper from plugin org.elasticsearch.xpack.security.Security
    [2019-10-25T15:21:25,965][INFO ][o.e.d.DiscoveryModule ] [99_nTdv] using discovery type [zen] and host providers [settings]
    [2019-10-25T15:21:29,066][INFO ][o.e.n.Node ] [99_nTdv] initialized
    [2019-10-25T15:21:29,066][INFO ][o.e.n.Node ] [99_nTdv] starting ...
    [2019-10-25T15:21:29,420][INFO ][o.e.t.TransportService ] [99_nTdv] publish_address {192.168.110.133:9300}, bound_addresses {192.168.110.133:9300}
    [2019-10-25T15:21:29,573][INFO ][o.e.b.BootstrapChecks ] [99_nTdv] bound or publishing to a non-loopback address, enforcing bootstrap checks
    ERROR: [3] bootstrap checks failed
    [1]: max file descriptors [4096] for elasticsearch process is too low, increase to at least [65535]
    [2]: max number of threads [3756] for user [elsearch] is too low, increase to at least [4096]
    [3]: max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]
    [2019-10-25T15:21:29,726][INFO ][o.e.n.Node ] [99_nTdv] stopping ...
    [2019-10-25T15:21:29,811][INFO ][o.e.n.Node ] [99_nTdv] stopped
    [2019-10-25T15:21:29,811][INFO ][o.e.n.Node ] [99_nTdv] closing ...
    [2019-10-25T15:21:29,860][INFO ][o.e.n.Node ] [99_nTdv] closed
    [2019-10-25T15:21:29,865][INFO ][o.e.x.m.p.NativeController] [99_nTdv] Native controller process has stopped - no new native processes can be started
    [elsearch@slaver4 bin]$

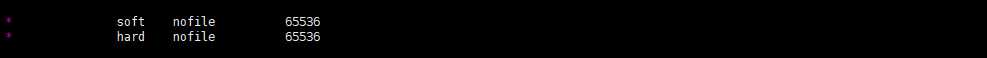

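Error 1: [1]: max file descriptors [4096] for elasticsearch process is too low, increase to at least [65535]. The fix is as follows.

Cause: the maximum number of files a process may have open at the same time is too low. The current hard and soft limits can be checked with ulimit -Hn and ulimit -Sn.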
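The check can be sketched as a small script. This is only an illustration: `ulimit -Sn` and `ulimit -Hn` are standard shell builtins, but the helper `limit_ok` is a hypothetical name, and the 65535 threshold is simply taken from the error message above.

```shell
#!/usr/bin/env bash
# limit_ok CURRENT REQUIRED
# Succeeds (exit status 0) if CURRENT is at least REQUIRED.
# "unlimited" always passes. Hypothetical helper, not part of Elasticsearch.
limit_ok() {
  [ "$1" = "unlimited" ] && return 0
  [ "$1" -ge "$2" ]
}

soft=$(ulimit -Sn)   # soft limit on open files for the current shell
hard=$(ulimit -Hn)   # hard limit on open files for the current shell
echo "nofile soft=$soft hard=$hard"

if limit_ok "$soft" 65535; then
  echo "open-file limit is high enough for Elasticsearch"
else
  echo "open-file limit is too low; raise it in /etc/security/limits.conf"
fi
```

Run it as the user that starts Elasticsearch (elsearch here), since limits are applied per user session.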

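Important: edit the file as root, and reboot the virtual machine afterwards. In my case, restarting Elasticsearch without a reboot still reported error 1 (though no longer error 2); after the reboot both errors were gone. (limits.conf is applied when a user session is opened, so logging out and back in as elsearch should normally be enough; a reboot is simply the safe option.)

    [root@slaver4 ~]# vim /etc/security/limits.conf

Add the following lines:

Note: * stands for any user; the two lines set the soft and hard limits on the number of files any user may open.

    * soft nofile 65536
    * hard nofile 65536

The operation is shown below: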

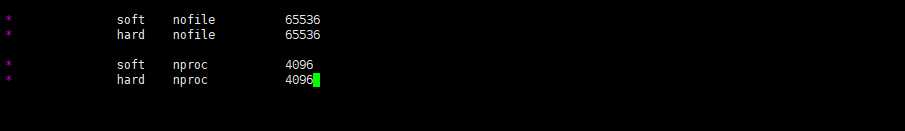

Error 2: [2]: max number of threads [3756] for user [elsearch] is too low, increase to at least [4096]

Cause: the maximum number of threads the user may create is too low. Edit /etc/security/limits.conf (the same file as for error 1) and add:

    * soft nproc 4096
    * hard nproc 4096

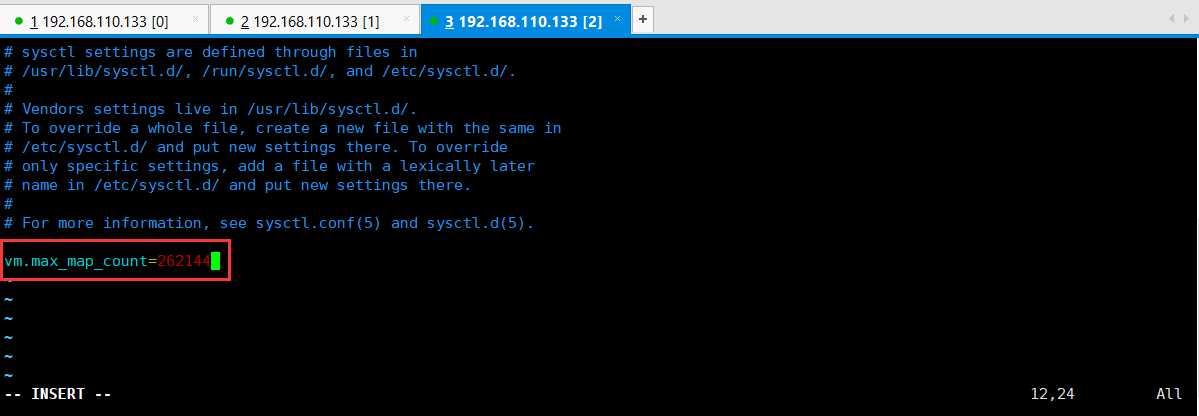

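Error 3: [3]: max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]

Cause: the kernel setting vm.max_map_count, which caps the number of memory-mapped areas a process may have, is too low (Elasticsearch memory-maps its index files). Edit /etc/sysctl.conf, add vm.max_map_count=262144, and run sysctl -p to apply it.

    [root@slaver4 ~]# vim /etc/sysctl.conf
    [root@slaver4 ~]# sysctl -p
    vm.max_map_count = 262144
    [root@slaver4 ~]#

The line to add is:

    vm.max_map_count=262144

The operation is shown below: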

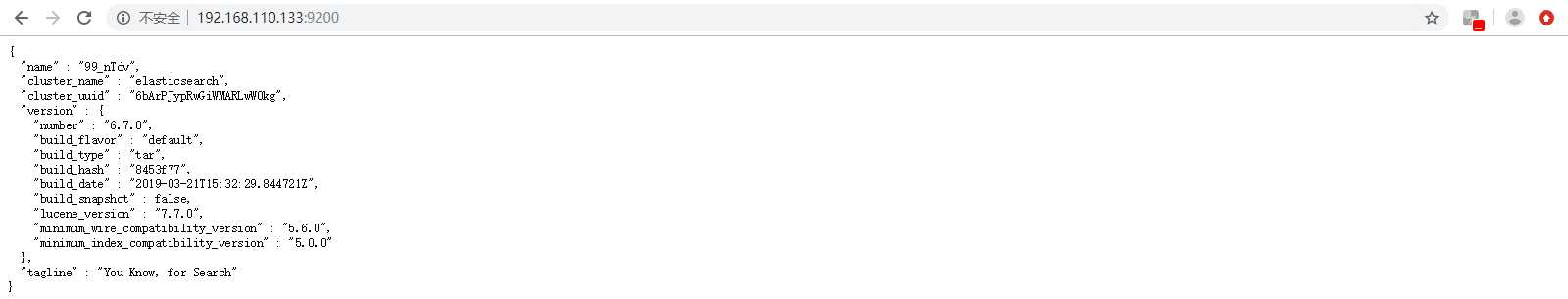
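To confirm that the new value is active, the current setting can be read back from procfs. The sketch below assumes a Linux host; `max_map_count_ok` is a hypothetical helper, and the 262144 default is taken from the error message.

```shell
# max_map_count_ok [FILE] [REQUIRED]
# Reads the current value from FILE (default: the kernel's procfs entry)
# and succeeds if it is at least REQUIRED (default: 262144).
max_map_count_ok() {
  file="${1:-/proc/sys/vm/max_map_count}"
  required="${2:-262144}"
  current=$(cat "$file") || return 2
  echo "vm.max_map_count=$current (need >= $required)"
  [ "$current" -ge "$required" ]
}

# Only meaningful on Linux, where the procfs entry exists.
if [ -r /proc/sys/vm/max_map_count ]; then
  max_map_count_ok || echo "too low: add vm.max_map_count=262144 to /etc/sysctl.conf and run sysctl -p"
fi
```

The optional FILE argument exists only so the helper can be tried against a sample file; on a real host it is called without arguments.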

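After fixing the errors above, it is best to shut the virtual machine down and start it again. Elasticsearch then starts normally, and access from a browser works as well:

curl 192.168.110.133:9200 now succeeds too, which confirms that Elasticsearch has started successfully.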

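3. Key settings in elasticsearch.yml.

cluster.name: the name of the cluster; nodes with the same cluster name belong to the same cluster.

node.name: the name of the node, used to tell the nodes of a cluster apart.

network.host / http.port: network.host is the address the HTTP and transport services bind to; http.port is the HTTP port (the transport port is configured separately and defaults to 9300, as the logs above show).

path.data: where data is stored.

path.logs: where logs are stored.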
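The shipped elasticsearch.yml consists almost entirely of comments, so it helps to list only the settings that are actually active. A minimal sketch, where `active_settings` is a hypothetical helper built on plain `grep`:

```shell
# active_settings FILE
# Print the lines of a config file that are neither blank nor comments.
# Hypothetical helper for illustration only.
active_settings() {
  grep -Ev '^[[:space:]]*(#|$)' "$1"
}
```

For the installation in this post it would be called as `active_settings /home/hadoop/soft/elasticsearch-6.7.0/config/elasticsearch.yml`, which at this point should print just the network.host line added earlier.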

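Elasticsearch runs in one of two modes: development mode or production mode.

Development vs. production mode:

The deciding factor is whether the transport address (network.host) is bound to localhost. Any address other than localhost or 127.0.0.1 puts the node in production mode.

In development mode, failed configuration (bootstrap) checks are reported as warnings at startup.

In production mode, failed configuration (bootstrap) checks are reported as errors at startup and the node exits.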
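The rule can be illustrated with a small function. This is a deliberate simplification, not how Elasticsearch implements it: the real check looks at the addresses the transport layer actually binds to (and is also affected by discovery.type: single-node), whereas the hypothetical `es_mode` helper below only inspects the configured host string.

```shell
# es_mode HOST
# Print "development" if HOST is a loopback name or address,
# otherwise "production". Simplified illustration of the rule above.
es_mode() {
  case "$1" in
    localhost|_local_|127.*|::1) echo development ;;
    *) echo production ;;
  esac
}

es_mode 127.0.0.1         # prints "development"
es_mode 192.168.110.133   # prints "production"
```

This matches what happened earlier in the post: with the default loopback binding the three limits were only warnings, and after setting network.host to 192.168.110.133 the same three checks became fatal.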

A second way to change a setting is to pass it on the command line: bin/elasticsearch -Ehttp.port=19200

4. Setting up an Elasticsearch cluster: https://www.cnblogs.com/biehongli/p/11650045.html

A quick way to start a local cluster, for practice (run each command in its own terminal):

    bin/elasticsearch
    bin/elasticsearch -Ehttp.port=8200 -Epath.data=node2
    bin/elasticsearch -Ehttp.port=7200 -Epath.data=node3

http://192.168.110.133:9200/_cat/nodes shows whether the nodes have formed a cluster.

http://192.168.110.133:9200/_cluster/stats shows statistics about the cluster.

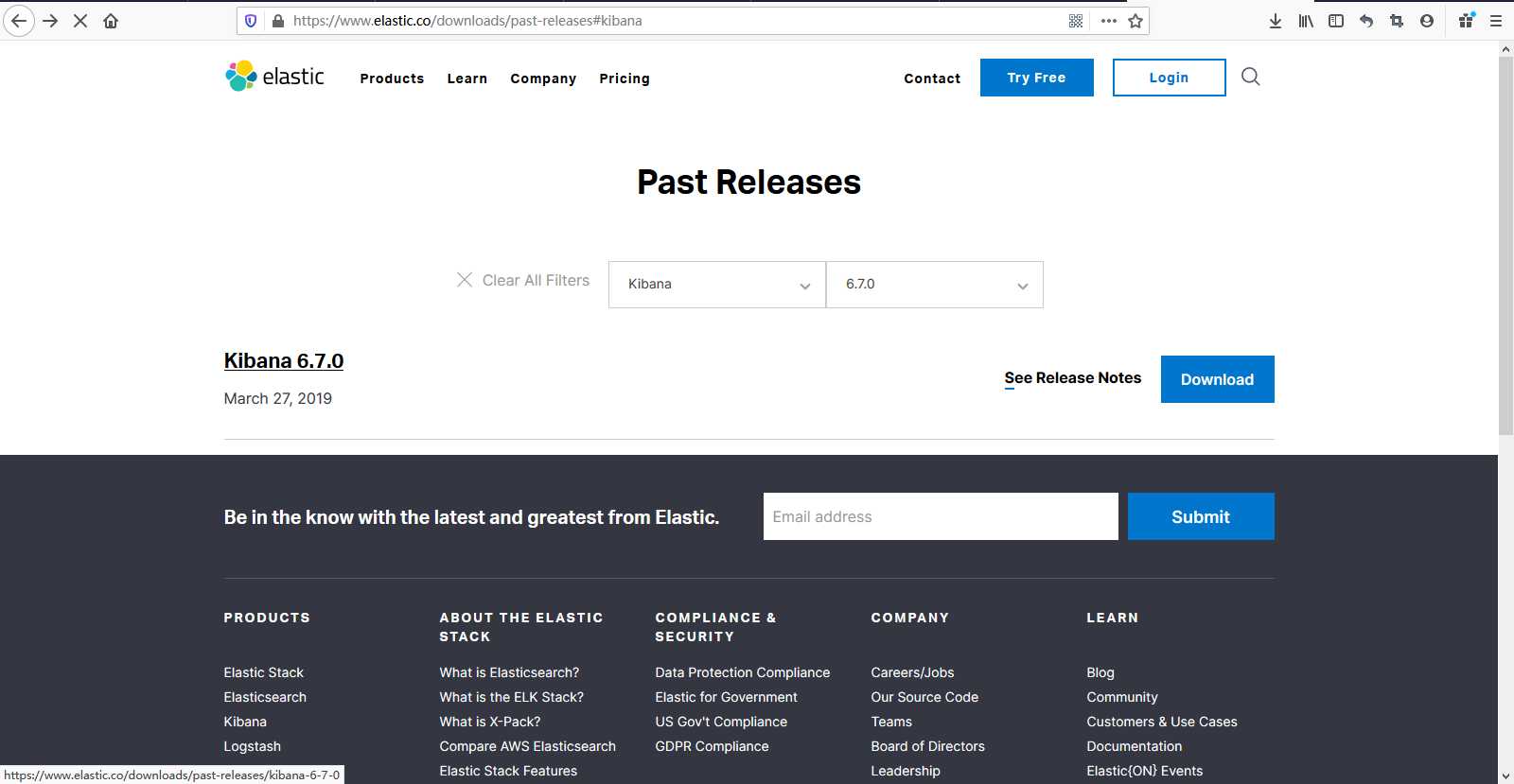
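The same check can be done from the command line. In this sketch `ES_URL`, `count_nodes`, and `cluster_size` are names chosen for illustration; `_cat/nodes` with the `h=name` column selector is the API mentioned above, which returns one line per node.

```shell
# Base URL of any node in the cluster (assumed; adjust to your host).
ES_URL="${ES_URL:-http://192.168.110.133:9200}"

# count_nodes: read _cat/nodes output on stdin and print the number of
# non-empty lines, which equals the number of nodes.
count_nodes() {
  grep -c . || true
}

# cluster_size: ask the node at ES_URL how many nodes it can see.
cluster_size() {
  curl -sS "$ES_URL/_cat/nodes?h=name" | count_nodes
}
```

With the three local nodes running, `cluster_size` should print 3 if they have joined the same cluster, and 1 if each node has formed a cluster of its own.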

5. Installing and running Kibana.

Download and extract Kibana as follows:

    [root@slaver4 package]# ls
    elasticsearch-6.7.0.tar.gz
    [root@slaver4 package]# wget https://artifacts.elastic.co/downloads/kibana/kibana-6.7.0-linux-x86_64.tar.gz
    --2019-10-25 16:12:36-- https://artifacts.elastic.co/downloads/kibana/kibana-6.7.0-linux-x86_64.tar.gz
    Resolving artifacts.elastic.co (artifacts.elastic.co)... 151.101.110.222, 2a04:4e42:1a::734
    Connecting to artifacts.elastic.co (artifacts.elastic.co)|151.101.110.222|:443... connected.
    HTTP request sent, awaiting response... 200 OK
    Length: 186406262 (178M) [application/x-gzip]
    Saving to: ‘kibana-6.7.0-linux-x86_64.tar.gz’

    100%[======================================================================================================================================================================================>] 186,406,262 5.31MB/s in 40s

    2019-10-25 16:13:17 (4.41 MB/s) - ‘kibana-6.7.0-linux-x86_64.tar.gz’ saved [186406262/186406262]

    [root@slaver4 package]# ls
    elasticsearch-6.7.0.tar.gz kibana-6.7.0-linux-x86_64.tar.gz
    [root@slaver4 package]# tar -zxvf kibana-6.7.0-linux-x86_64.tar.gz -C /home/hadoop/soft/

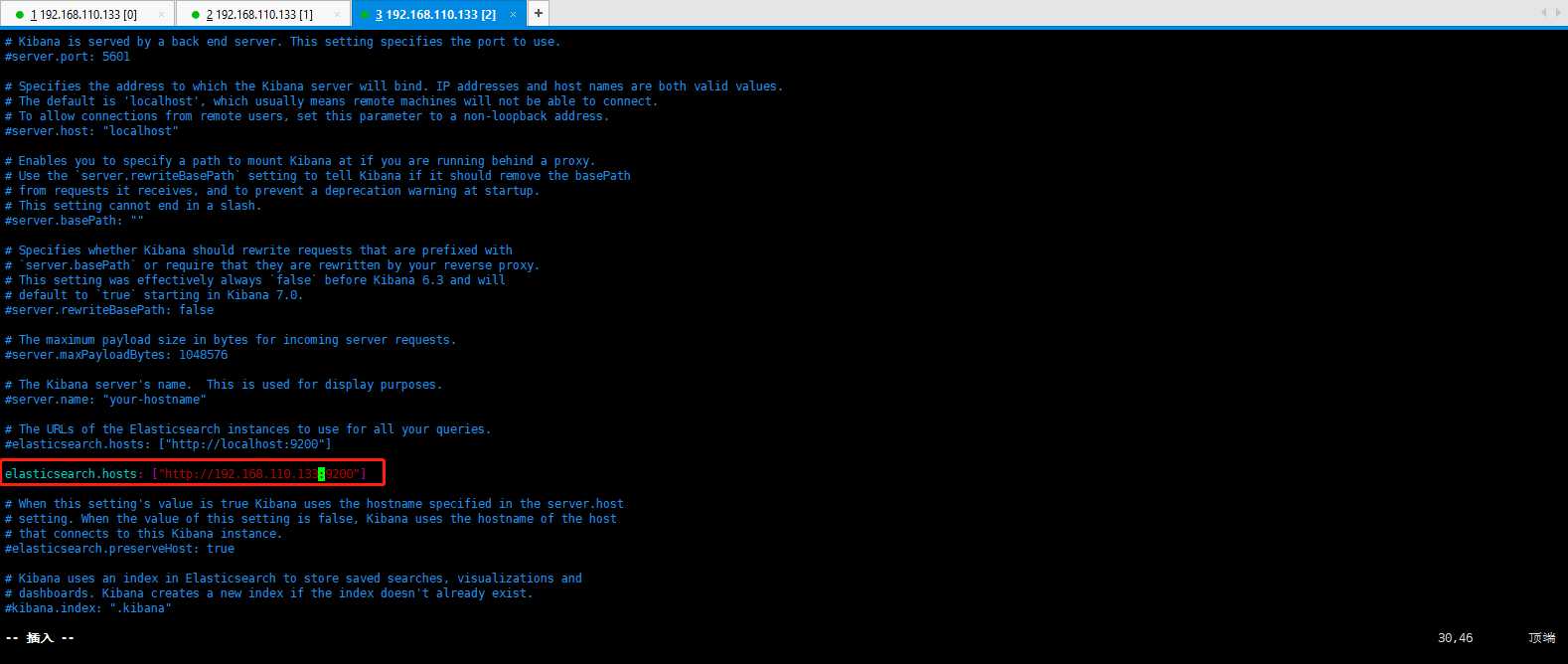

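After extraction, I gave ownership of the Kibana directory to the user and group created earlier (chown -R, as for Elasticsearch above) and then edited the configuration file config/kibana.yml as follows:

server.port: 5601 # The default is 5601; it can be left unchanged.

server.host: "192.168.110.133" # Set this so that Kibana can be reached from a browser on another machine.

elasticsearch.hosts: ["http://192.168.110.133:9200"]

With these changes in place, Kibana can be started; feel free to experiment with the other settings. Kibana is up once the log shows "Server running at" followed by its address, here http://192.168.110.133:5601 (with the default server.host it would be http://localhost:5601).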

    [elsearch@slaver4 kibana-6.7.0-linux-x86_64]$ bin/kibana
    log [08:31:34.724] [info][status][plugin:kibana@6.7.0] Status changed from uninitialized to green - Ready
    log [08:31:34.921] [info][status][plugin:elasticsearch@6.7.0] Status changed from uninitialized to yellow - Waiting for Elasticsearch
    log [08:31:34.928] [info][status][plugin:xpack_main@6.7.0] Status changed from uninitialized to yellow - Waiting for Elasticsearch
    log [08:31:34.958] [info][status][plugin:graph@6.7.0] Status changed from uninitialized to yellow - Waiting for Elasticsearch
    log [08:31:34.976] [info][status][plugin:monitoring@6.7.0] Status changed from uninitialized to green - Ready
    log [08:31:34.984] [info][status][plugin:spaces@6.7.0] Status changed from uninitialized to yellow - Waiting for Elasticsearch
    log [08:31:34.997] [warning][security] Generating a random key for xpack.security.encryptionKey. To prevent sessions from being invalidated on restart, please set xpack.security.encryptionKey in kibana.yml
    log [08:31:35.008] [warning][security] Session cookies will be transmitted over insecure connections. This is not recommended.
    log [08:31:35.037] [info][status][plugin:security@6.7.0] Status changed from uninitialized to yellow - Waiting for Elasticsearch
    log [08:31:35.067] [info][status][plugin:searchprofiler@6.7.0] Status changed from uninitialized to yellow - Waiting for Elasticsearch
    log [08:31:35.073] [info][status][plugin:ml@6.7.0] Status changed from uninitialized to yellow - Waiting for Elasticsearch
    log [08:31:35.164] [info][status][plugin:tilemap@6.7.0] Status changed from uninitialized to yellow - Waiting for Elasticsearch
    log [08:31:35.167] [info][status][plugin:watcher@6.7.0] Status changed from uninitialized to yellow - Waiting for Elasticsearch
    log [08:31:35.190] [info][status][plugin:grokdebugger@6.7.0] Status changed from uninitialized to yellow - Waiting for Elasticsearch
    log [08:31:35.196] [info][status][plugin:dashboard_mode@6.7.0] Status changed from uninitialized to green - Ready
    log [08:31:35.198] [info][status][plugin:logstash@6.7.0] Status changed from uninitialized to yellow - Waiting for Elasticsearch
    log [08:31:35.210] [info][status][plugin:beats_management@6.7.0] Status changed from uninitialized to yellow - Waiting for Elasticsearch
    log [08:31:35.269] [info][status][plugin:apm@6.7.0] Status changed from uninitialized to green - Ready
    log [08:31:35.272] [info][status][plugin:tile_map@6.7.0] Status changed from uninitialized to green - Ready
    log [08:31:35.276] [info][status][plugin:task_manager@6.7.0] Status changed from uninitialized to green - Ready
    log [08:31:35.279] [info][status][plugin:maps@6.7.0] Status changed from uninitialized to yellow - Waiting for Elasticsearch
    log [08:31:35.287] [info][status][plugin:interpreter@6.7.0] Status changed from uninitialized to green - Ready
    log [08:31:35.309] [info][status][plugin:canvas@6.7.0] Status changed from uninitialized to green - Ready
    log [08:31:35.326] [info][status][plugin:license_management@6.7.0] Status changed from uninitialized to green - Ready
    log [08:31:35.334] [info][status][plugin:cloud@6.7.0] Status changed from uninitialized to green - Ready
    log [08:31:35.344] [info][status][plugin:index_management@6.7.0] Status changed from uninitialized to yellow - Waiting for Elasticsearch
    log [08:31:35.383] [info][status][plugin:console@6.7.0] Status changed from uninitialized to green - Ready
    log [08:31:35.386] [info][status][plugin:console_extensions@6.7.0] Status changed from uninitialized to green - Ready
    log [08:31:35.404] [info][status][plugin:notifications@6.7.0] Status changed from uninitialized to green - Ready
    log [08:31:35.408] [info][status][plugin:index_lifecycle_management@6.7.0] Status changed from uninitialized to yellow - Waiting for Elasticsearch
    log [08:31:35.745] [info][status][plugin:infra@6.7.0] Status changed from uninitialized to green - Ready
    log [08:31:35.778] [info][status][plugin:rollup@6.7.0] Status changed from uninitialized to yellow - Waiting for Elasticsearch
    log [08:31:35.853] [info][status][plugin:remote_clusters@6.7.0] Status changed from uninitialized to yellow - Waiting for Elasticsearch
    log [08:31:35.883] [info][status][plugin:cross_cluster_replication@6.7.0] Status changed from uninitialized to yellow - Waiting for Elasticsearch
    log [08:31:35.936] [info][status][plugin:translations@6.7.0] Status changed from uninitialized to green - Ready
    log [08:31:35.991] [info][status][plugin:upgrade_assistant@6.7.0] Status changed from uninitialized to green - Ready
    log [08:31:36.026] [info][status][plugin:uptime@6.7.0] Status changed from uninitialized to green - Ready
    log [08:31:36.039] [info][status][plugin:oss_telemetry@6.7.0] Status changed from uninitialized to green - Ready
    log [08:31:36.103] [info][status][plugin:metrics@6.7.0] Status changed from uninitialized to green - Ready
    log [08:31:36.849] [info][status][plugin:timelion@6.7.0] Status changed from uninitialized to green - Ready
    log [08:31:37.858] [info][status][plugin:elasticsearch@6.7.0] Status changed from yellow to green - Ready
    log [08:31:38.051] [info][license][xpack] Imported license information from Elasticsearch for the [data] cluster: mode: basic | status: active
    log [08:31:38.057] [info][status][plugin:xpack_main@6.7.0] Status changed from yellow to green - Ready
    log [08:31:38.058] [info][status][plugin:graph@6.7.0] Status changed from yellow to green - Ready
    log [08:31:38.072] [info][status][plugin:searchprofiler@6.7.0] Status changed from yellow to green - Ready
    log [08:31:38.073] [info][status][plugin:ml@6.7.0] Status changed from yellow to green - Ready
    log [08:31:38.074] [info][status][plugin:tilemap@6.7.0] Status changed from yellow to green - Ready
    log [08:31:38.074] [info][status][plugin:watcher@6.7.0] Status changed from yellow to green - Ready
    log [08:31:38.074] [info][status][plugin:grokdebugger@6.7.0] Status changed from yellow to green - Ready
    log [08:31:38.075] [info][status][plugin:logstash@6.7.0] Status changed from yellow to green - Ready
    log [08:31:38.075] [info][status][plugin:beats_management@6.7.0] Status changed from yellow to green - Ready
    log [08:31:38.075] [info][status][plugin:index_management@6.7.0] Status changed from yellow to green - Ready
    log [08:31:38.076] [info][status][plugin:index_lifecycle_management@6.7.0] Status changed from yellow to green - Ready
    log [08:31:38.076] [info][status][plugin:rollup@6.7.0] Status changed from yellow to green - Ready
    log [08:31:38.077] [info][status][plugin:remote_clusters@6.7.0] Status changed from yellow to green - Ready
    log [08:31:38.077] [info][status][plugin:cross_cluster_replication@6.7.0] Status changed from yellow to green - Ready
    log [08:31:38.078] [info][kibana-monitoring][monitoring-ui] Starting monitoring stats collection
    log [08:31:38.139] [info][status][plugin:security@6.7.0] Status changed from yellow to green - Ready
    log [08:31:38.140] [info][status][plugin:maps@6.7.0] Status changed from yellow to green - Ready
    log [08:31:38.411] [info][license][xpack] Imported license information from Elasticsearch for the [monitoring] cluster: mode: basic | status: active
    log [08:31:40.064] [warning][browser-driver][reporting] Enabling the Chromium sandbox provides an additional layer of protection.
    log [08:31:40.067] [warning][reporting] Generating a random key for xpack.reporting.encryptionKey. To prevent pending reports from failing on restart, please set xpack.reporting.encryptionKey in kibana.yml
    log [08:31:40.220] [info][status][plugin:reporting@6.7.0] Status changed from uninitialized to green - Ready
    log [08:31:44.022] [info][listening] Server running at http://192.168.110.133:5601
    log [08:31:44.413] [info][status][plugin:spaces@6.7.0] Status changed from yellow to green - Ready

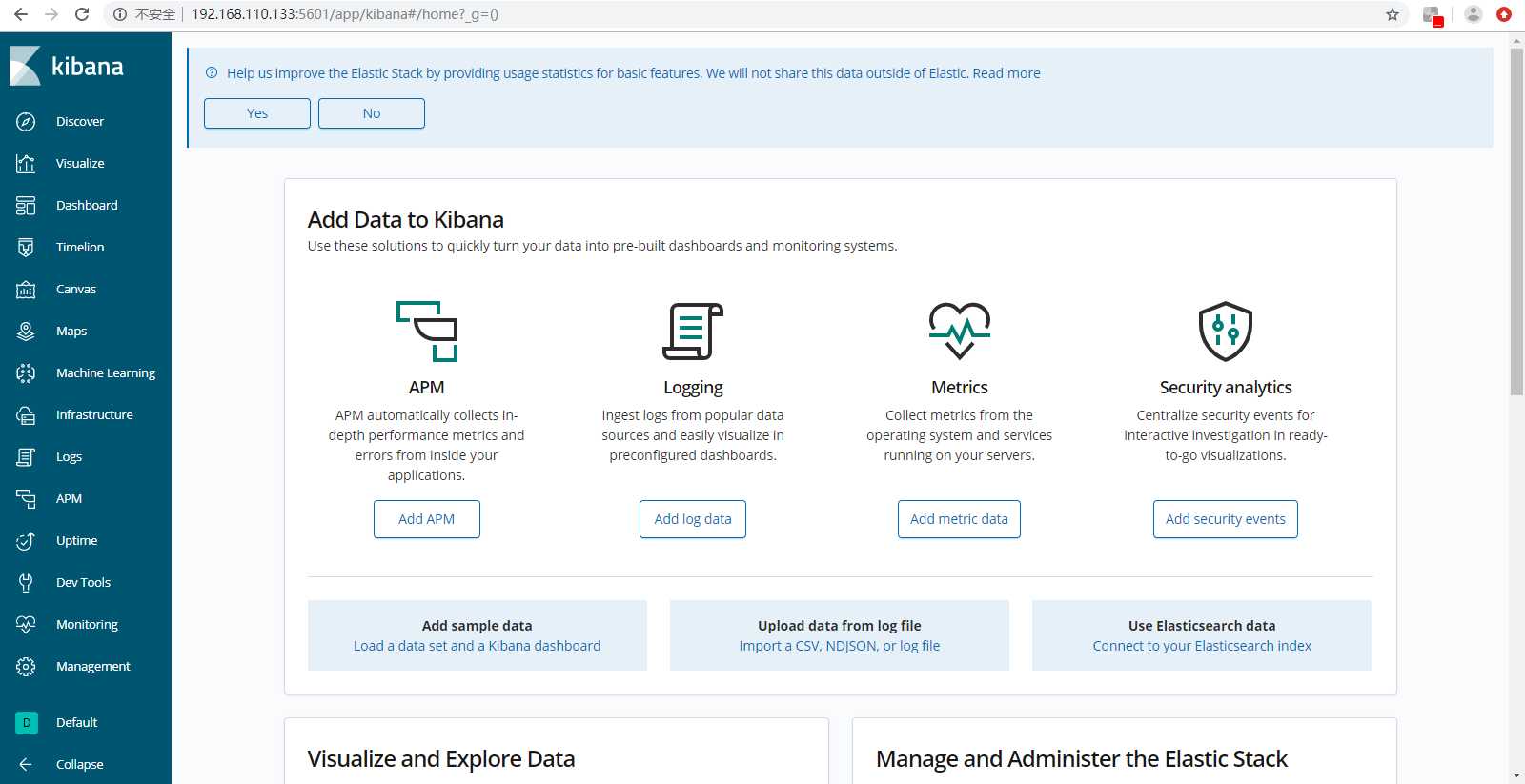

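The Kibana UI looks like this:

Kibana configuration lives in the config directory. The key settings in kibana.yml are:

server.host / server.port: the address and port on which Kibana is served.

elasticsearch.hosts (formerly elasticsearch.url): the address of the Elasticsearch instance Kibana connects to.

Commonly used Kibana features:

Discover: search and browse data.

Visualize: build charts.

Dashboard: build dashboards.

Timelion: advanced visual analysis of time-series data.

Dev Tools: developer tools.

Management: configuration.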

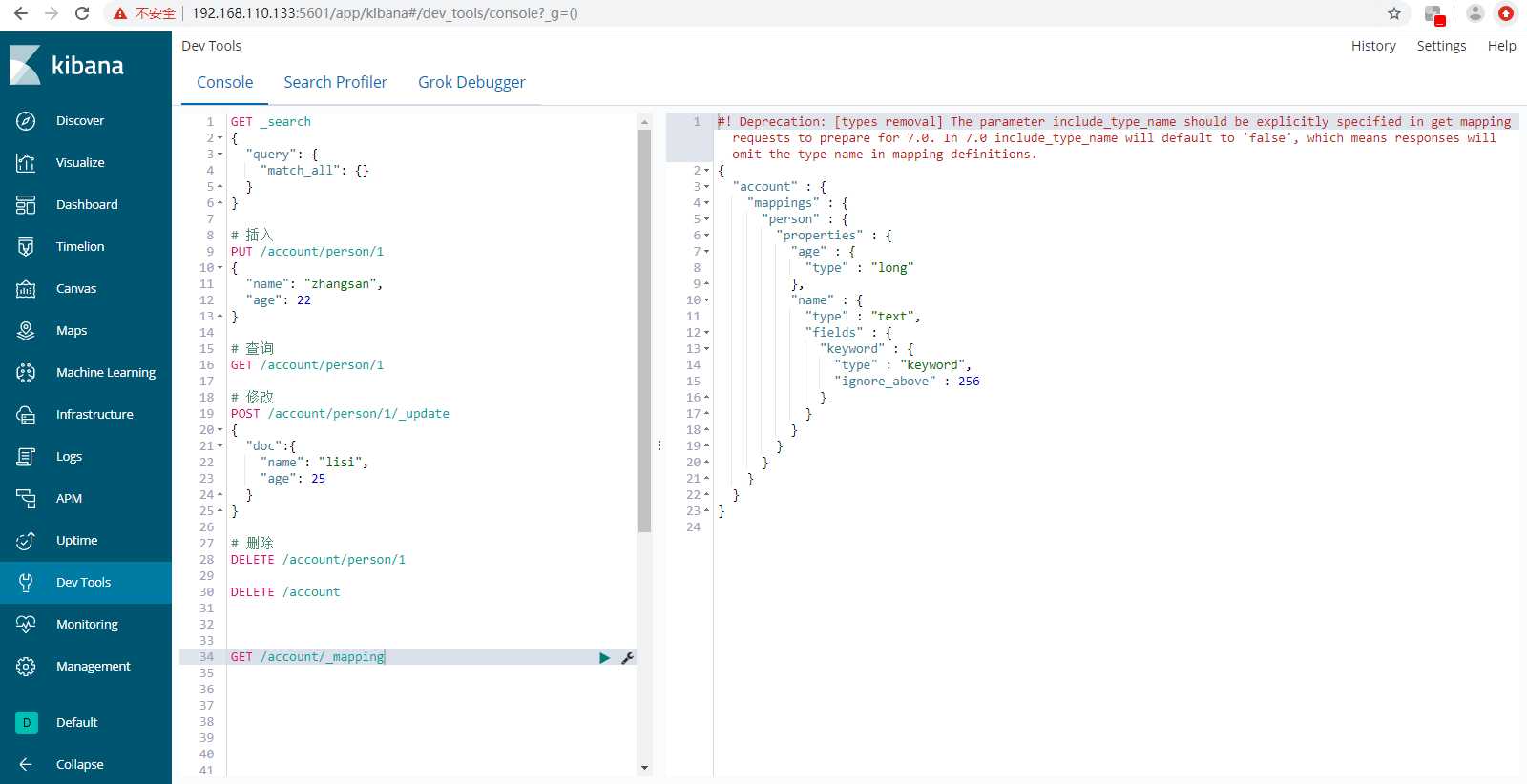

6. Getting started with Elasticsearch and Kibana: Elasticsearch terminology and hands-on CRUD operations.

Common Elasticsearch terms:

Document: a unit of data.

Index: an index, a collection of documents.

Type: the data type within an index. This concept is being phased out from 6.x onward.

Field: a property of a document.

Query DSL: the query syntax.

Create: create a document. Read: read a document. Update: update a document. Delete: delete a document.

    GET _search
    {
      "query": {
        "match_all": {}
      }
    }

    # Insert
    PUT /account/person/1
    {
      "name": "zhangsan",
      "age": 22
    }

    PUT /account/person/2
    {
      "name": "zhangsan",
      "age": 22
    }

    # Read
    GET /account/person/2

    # Update
    POST /account/person/1/_update
    {
      "doc": {
        "name": "lisi",
        "age": 25
      }
    }

    # Delete
    DELETE /account/person/1

    DELETE /account

    GET /account/_mapping

    # Query String
    GET /account/person/_search?q=zhangsan

    # Query DSL
    GET /account/person/_search
    {
      "query": {
        "match": {
          "name": "lisi"
        }
      }
    }
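The same requests can be sent without Kibana, for example with curl. The sketch below is one possible wrapper, not something from the original post: `ES_URL`, `es`, and `crud_demo` are hypothetical names, and the Content-Type header is included because Elasticsearch 6.x rejects request bodies without it.

```shell
# Base URL of the Elasticsearch node (assumed; adjust to your host).
ES_URL="${ES_URL:-http://192.168.110.133:9200}"

# es METHOD PATH [JSON_BODY]
# Send a single request to Elasticsearch with curl.
es() {
  if [ -n "${3:-}" ]; then
    curl -sS -X "$1" "$ES_URL$2" -H 'Content-Type: application/json' -d "$3"
  else
    curl -sS -X "$1" "$ES_URL$2"
  fi
}

# The create, read, update, and delete requests from the console example.
crud_demo() {
  es PUT    /account/person/1 '{"name": "zhangsan", "age": 22}'
  es GET    /account/person/1
  es POST   /account/person/1/_update '{"doc": {"name": "lisi", "age": 25}}'
  es DELETE /account/person/1
}
```

Nothing is sent until `crud_demo` (or `es` directly) is called, so the definitions can be pasted into a shell first and tried one request at a time.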

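The operations look as follows:

7. Getting started with Beats, the Lightweight Data Shippers.

Filebeat: log files.

Processing flow: Input, Filter, Output.

Metricbeat: metrics.

Mainly used to collect CPU, memory and disk metrics, as well as metrics from services such as nginx and MySQL.

Packetbeat: network data.

Winlogbeat: Windows event logs.

Auditbeat

Heartbeat: health checks.

Functionbeat

A brief introduction to Filebeat configuration: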

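a. Filebeat input configuration. It uses YAML syntax. The two input types covered here are log (log files) and stdin (standard input). In the Filebeat version used in this post the section is called filebeat.inputs and the key is type; older releases used filebeat.prospectors and input_type. Example:

    filebeat.inputs:
      - type: log
        paths:
          - /var/log/apache/httpd-*.log
      - type: log
        paths:
          - /var/log/messages
          - /var/log/*.log

b. Filebeat output configuration. The supported outputs include Console (standard output), Elasticsearch, Logstash, Kafka, Redis and File. Only one output can be enabled at a time. Examples:

    output.elasticsearch:
      hosts: ["http://localhost:9200"]   # address of Elasticsearch
      username: "admin"                  # credentials, needed when authentication is enabled
      password: "123456"

    output.console:      # write to the console, handy for debugging
      pretty: true       # pretty-print the JSON output

c. Filebeat filter configuration.

Processing at input time:

include_lines: read a line only if it matches one of the given patterns.
exclude_lines: skip a line if it matches one of the given patterns.
exclude_files: skip a file if its name matches one of the given patterns.

Processing before output (processors):

drop_event: drop the whole event when a condition is met.
drop_fields: drop the listed fields when a condition is met.
decode_json_fields: parse fields whose content is a JSON string.
include_fields: keep only the listed fields of the event.

Examples:

    processors:
      - drop_event:
          when:
            regexp:
              message: "^DBG:"   # drop events whose message field starts with DBG:

    processors:
      - decode_json_fields:      # decode the JSON string stored in the listed fields
          fields: ["inner"]

d. Advanced usage: combining Filebeat with an Elasticsearch Ingest Node.

Reason: Filebeat has only limited data transformation capabilities.

About the Elasticsearch Ingest Node: it is a newer node type that processes and transforms data before it is written to Elasticsearch. It is driven through the pipeline API.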
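To make the filter semantics above more concrete, here is a small Python simulation. It is not Filebeat code, only a sketch of how include_lines / exclude_lines and the drop_event and decode_json_fields processors treat events under the behavior described above; the function names and the sample lines are made up for illustration.

```python
import json
import re


def read_lines(lines, include=None, exclude=None):
    """Mimic include_lines / exclude_lines: include is applied first, then exclude."""
    for line in lines:
        if include and not any(re.search(p, line) for p in include):
            continue
        if exclude and any(re.search(p, line) for p in exclude):
            continue
        yield {"message": line}


def drop_event(events, field, pattern):
    """Mimic the drop_event processor with a regexp condition on one field."""
    for event in events:
        if re.search(pattern, str(event.get(field, ""))):
            continue
        yield event


def decode_json_fields(events, fields):
    """Mimic decode_json_fields: replace JSON strings in the given fields by objects."""
    for event in events:
        for name in fields:
            value = event.get(name)
            if isinstance(value, str):
                try:
                    event[name] = json.loads(value)
                except ValueError:
                    pass  # leave non-JSON text untouched
        yield event


# Made-up sample input, only for illustration.
sample = [
    "DBG: cache warmed up",
    "ERR: disk almost full",
    '{"level": "info", "msg": "started"}',
    "heartbeat ok",
]

events = read_lines(sample, exclude=[r"^heartbeat"])
events = drop_event(events, "message", r"^DBG:")
events = decode_json_fields(events, ["message"])
result = list(events)
for event in result:
    print(event)
```

The heartbeat line never becomes an event because it is filtered at read time, while the DBG line is read first and then discarded by the processor, which is the difference between input-time and pre-output filtering.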

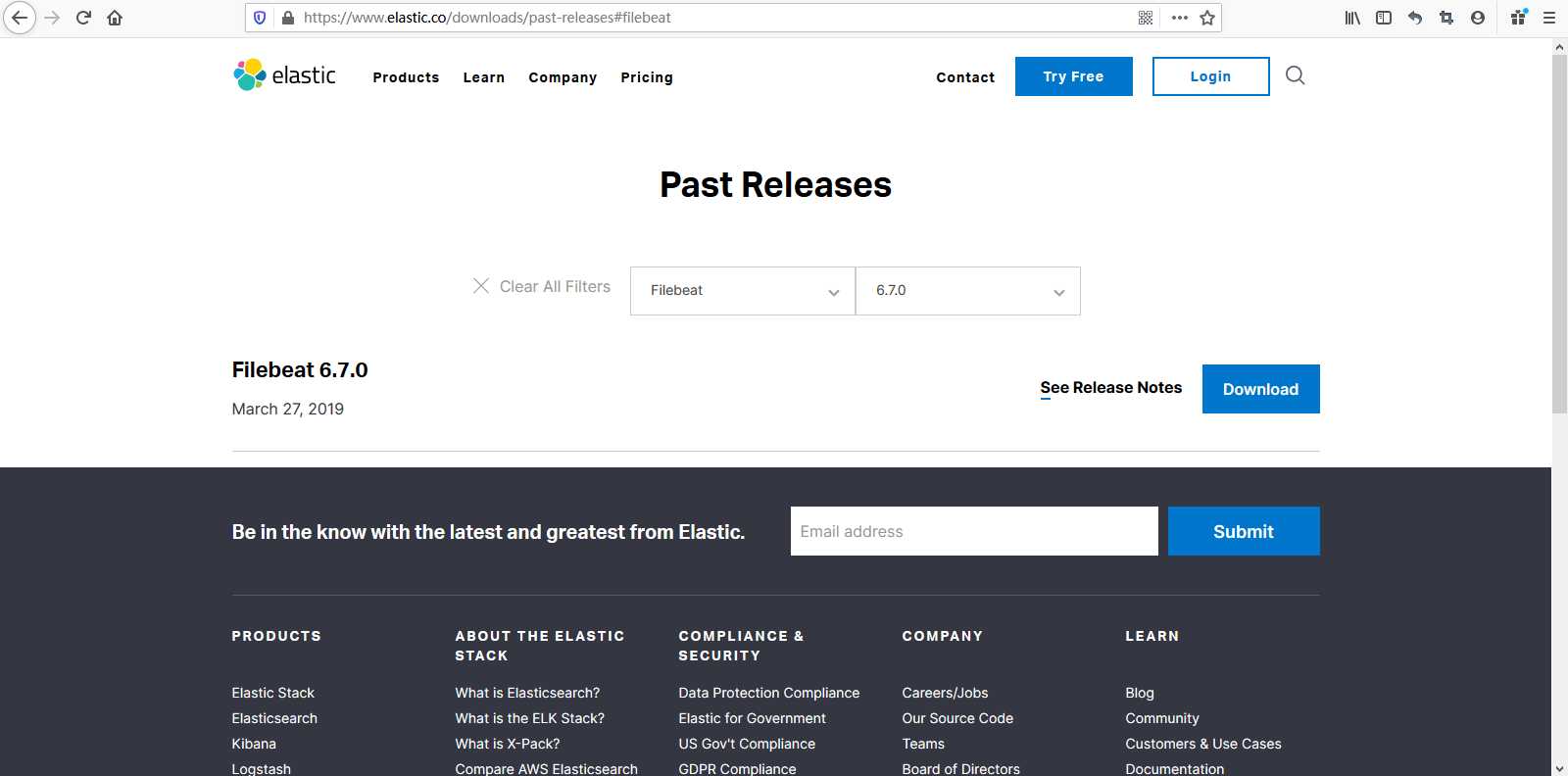

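8. Downloading, installing and deploying Filebeat. Filebeat is written in Go, so there is a separate build per operating system; download the one that matches yours.

You can download the archive and upload it to the server; I downloaded it directly with wget.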

    [root@slaver4 package]# ls
    elasticsearch-6.7.0.tar.gz  kibana-6.7.0-linux-x86_64.tar.gz
    [root@slaver4 package]# wget https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-6.7.0-linux-x86_64.tar.gz
    --2019-10-26 10:33:52--  https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-6.7.0-linux-x86_64.tar.gz
    Resolving artifacts.elastic.co (artifacts.elastic.co)... 151.101.230.222, 2a04:4e42:36::734
    Connecting to artifacts.elastic.co (artifacts.elastic.co)|151.101.230.222|:443... connected.
    HTTP request sent, awaiting response... 200 OK
    Length: 11703213 (11M) [application/x-gzip]
    Saving to: 'filebeat-6.7.0-linux-x86_64.tar.gz'

    100%[==============================>] 11,703,213  3.97MB/s   in 2.8s

    2019-10-26 10:33:56 (3.97 MB/s) - 'filebeat-6.7.0-linux-x86_64.tar.gz' saved [11703213/11703213]

    [root@slaver4 package]# ls
    elasticsearch-6.7.0.tar.gz  filebeat-6.7.0-linux-x86_64.tar.gz  kibana-6.7.0-linux-x86_64.tar.gz
    [root@slaver4 package]# tar -zxvf filebeat-6.7.0-linux-x86_64.tar.gz -C /home/hadoop/soft/

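Because the archive was extracted as root, change the ownership of the extracted files to the elsearch user and group created earlier.

The contents of filebeat-6.7.0-linux-x86_64 are as follows:

data: stores the position up to which Filebeat has read each log file.

filebeat: the executable.

module: the modules that Filebeat supports.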

    [root@slaver4 package]# cd ../soft/
    [root@slaver4 soft]# ls
    elasticsearch-6.7.0  filebeat-6.7.0-linux-x86_64  kibana-6.7.0-linux-x86_64
    [root@slaver4 soft]# chown -R elsearch:elsearch filebeat-6.7.0-linux-x86_64/
    [root@slaver4 soft]# su elsearch
    [elsearch@slaver4 soft]$ ls
    elasticsearch-6.7.0  filebeat-6.7.0-linux-x86_64  kibana-6.7.0-linux-x86_64
    [elsearch@slaver4 soft]$ ll
    total 0
    drwxr-xr-x.  9 elsearch elsearch 155 Oct 25 15:09 elasticsearch-6.7.0
    drwxr-xr-x.  5 elsearch elsearch 212 Oct 26 10:35 filebeat-6.7.0-linux-x86_64
    drwxr-xr-x. 13 elsearch elsearch 246 Oct 25 16:13 kibana-6.7.0-linux-x86_64
    [elsearch@slaver4 soft]$

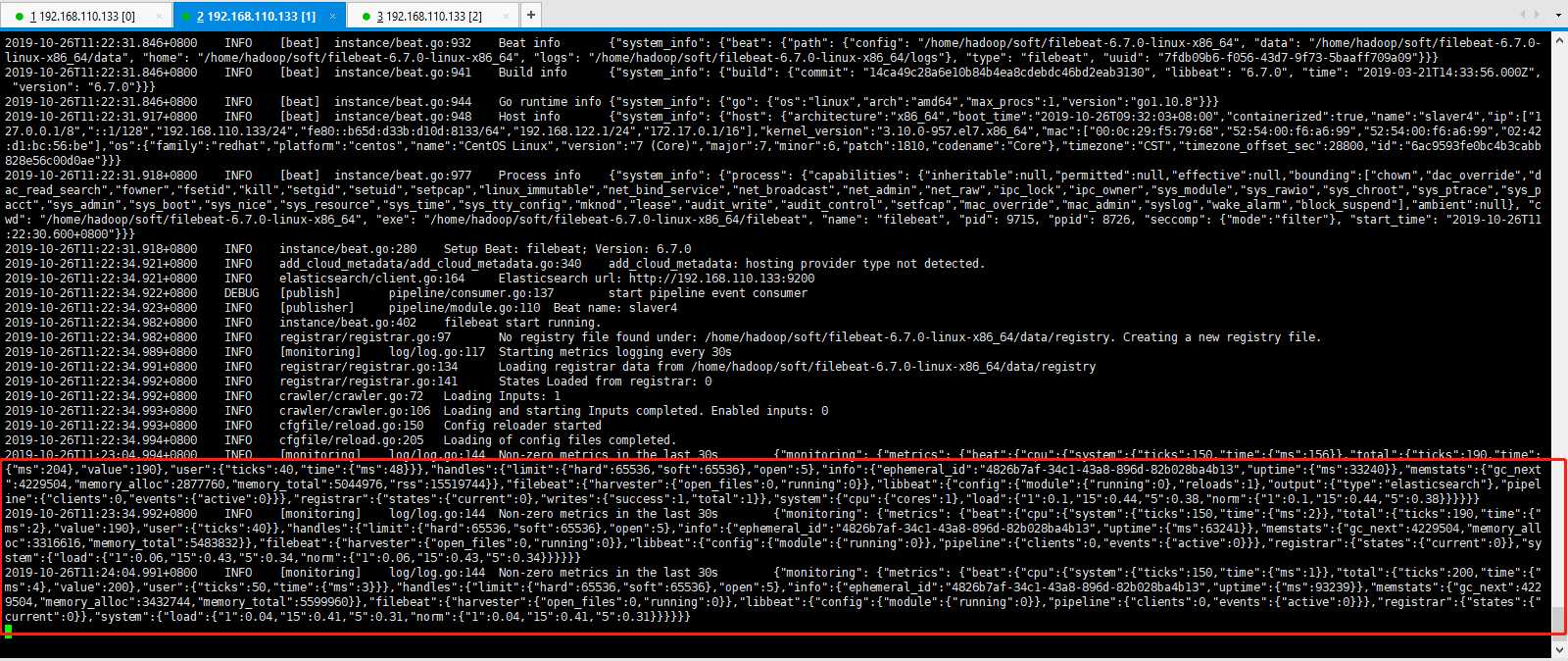

Next, a simple example: use Filebeat to collect nginx logs, reading the log through stdin and writing the result to the console.

First, edit the Filebeat configuration as shown below:

    #=========================== Filebeat inputs =============================

    filebeat.inputs:

    # Each - is an input. Most options can be set at the input level, so
    # you can use different inputs for various configurations.
    # Below are the input specific configurations.

    - type: log

      # Change to true to enable this input configuration.
      # (It must be true, otherwise this input is ignored.)
      enabled: true

      # Paths that should be crawled and fetched. Glob based paths.
      paths:
        # - /var/log/*.log
        # Paths are globs, so match the log files inside the directory.
        - /home/hadoop/soft/elasticsearch-6.7.0/logs/*.log
        #- c:\programdata\elasticsearch\logs\*

    #-------------------------- Elasticsearch output ------------------------------
    output.elasticsearch:
      # Array of hosts to connect to.
      # hosts: ["localhost:9200"]
      hosts: ["192.168.110.133:9200"]

      # Enabled ilm (beta) to use index lifecycle management instead daily indices.
      #ilm.enabled: false

      # Optional protocol and basic auth credentials.
      #protocol: "https"
      #username: "elastic"
      #password: "changeme"

Start Filebeat and the log output appears in the terminal.

[elsearch@slaver4 filebeat-6.7.0-linux-x86_64]$ ./filebeat -e -c filebeat.yml -d "publish"

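9. Getting started with Logstash: download, installation and deployment.

In brief, Logstash is a data shipper. It is not lightweight and uses more resources than Beats, but it is far more capable.

The ETL concept: Extract pulls data out of the sources, Transform converts it, Load writes it to the destination.

Logstash is an open-source, server-side data processing pipeline that can ingest data from multiple sources at the same time, transform it, and send it to wherever you want to store it.

10. The Logstash processing flow:

input: reads data from file, Redis, beats, kafka and other sources.

filter: grok (an expression language built on regular expressions that turns unstructured data into structured data), mutate (adds, removes and modifies fields of structured data), drop, date.

output: writes to stdout, elasticsearch, Redis, kafka and other destinations.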

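Input and output configuration. Note that Logstash does not use YAML; it has its own configuration syntax:

    input { file { path => "/tmp/abc.log" } }
    output { stdout { codec => rubydebug } }

Filter configuration:

Grok: provides a rich set of reusable patterns built on regular expressions, which can be used to turn unstructured data into structured data.

Date: converts a time field stored as a string into a timestamp, which simplifies later processing.

Mutate: adds, modifies, removes and replaces fields.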
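The roles of the three filters can be illustrated with a short Python sketch. This is not Logstash code: a regular expression with named groups stands in for a grok pattern, `datetime.strptime` for the date filter, and a small function for mutate. The function names and the sample access-log line are made up for illustration.

```python
import re
from datetime import datetime

# Named groups play the role of grok captures such as %{IP:client}.
LOG_PATTERN = re.compile(
    r'(?P<client>\S+) \S+ \S+ \[(?P<timestamp>[^\]]+)\] '
    r'"(?P<verb>\S+) (?P<request>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<bytes>\d+|-)'
)


def grok(event):
    """Grok step: extract structured fields from the raw message."""
    match = LOG_PATTERN.match(event["message"])
    if match is None:
        # Logstash tags events that fail to match in a similar way.
        event.setdefault("tags", []).append("_grokparsefailure")
    else:
        event.update(match.groupdict())
    return event


def date(event):
    """Date step: turn the timestamp string into a datetime object."""
    if "timestamp" in event:
        event["@timestamp"] = datetime.strptime(event["timestamp"], "%d/%b/%Y:%H:%M:%S %z")
    return event


def mutate(event):
    """Mutate step: convert a type and remove a field that is no longer needed."""
    if "status" in event:
        event["status"] = int(event["status"])
        event.pop("timestamp", None)
    return event


# Made-up access-log line in the common log format.
line = '192.168.110.1 - - [26/Oct/2019:14:31:48 +0800] "GET /index.html HTTP/1.1" 200 612'
event = mutate(date(grok({"message": line})))
print(event["client"], event["verb"], event["request"], event["status"], event["@timestamp"].isoformat())
```

Chaining the steps as `mutate(date(grok(...)))` mirrors the order in which filters would typically be listed in a Logstash filter section: structure first, then type conversion and cleanup.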

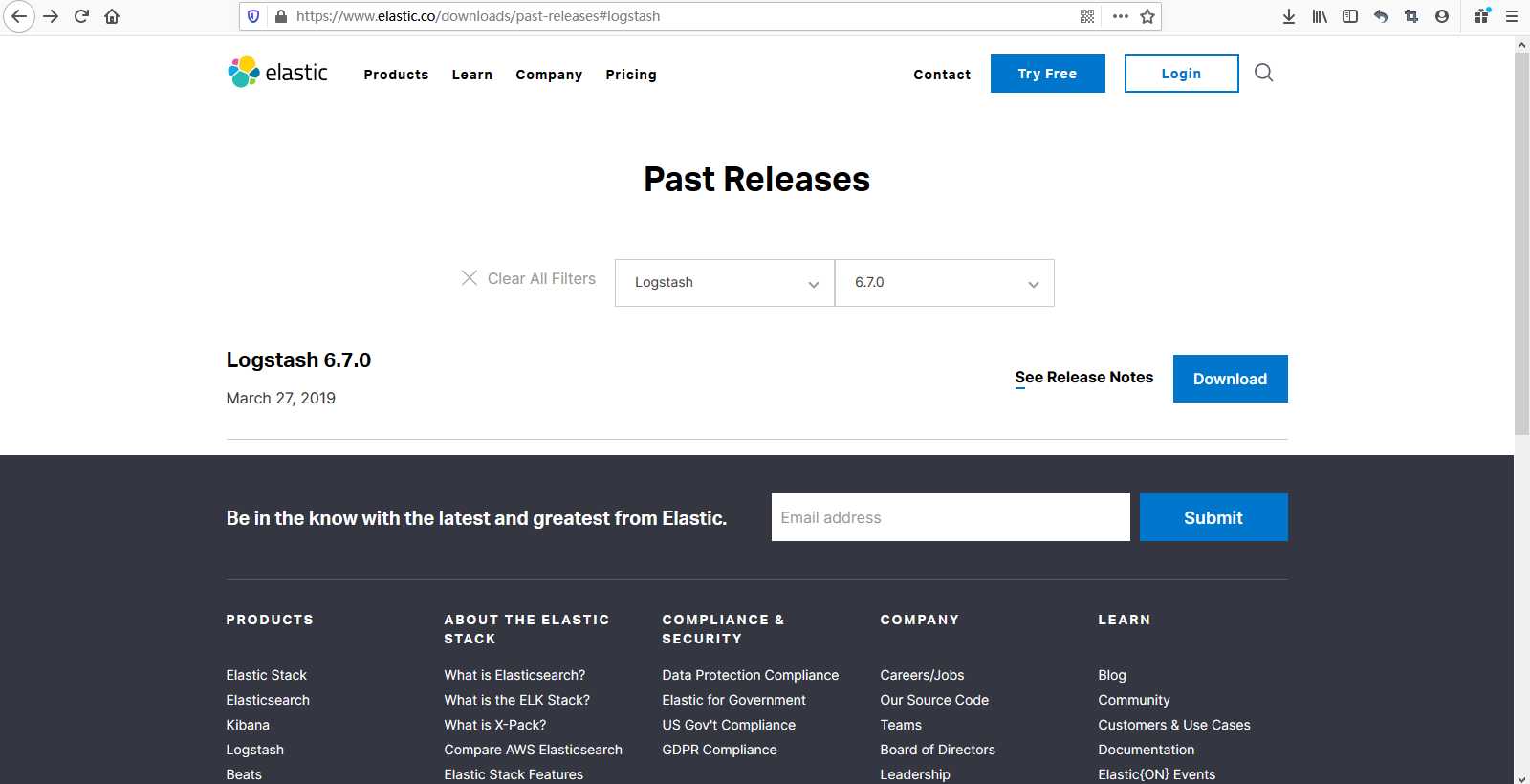

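11. Downloading and installing Logstash. Logstash is written in Ruby and runs on the JVM (via JRuby).

Since Logstash is also a JVM-based application, I simply download the tar archive, which is the easiest to work with. The archive is fairly large, well over a hundred megabytes.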

    [root@slaver4 package]# wget https://artifacts.elastic.co/downloads/logstash/logstash-6.7.0.tar.gz
    --2019-10-26 14:31:48--  https://artifacts.elastic.co/downloads/logstash/logstash-6.7.0.tar.gz
    Resolving artifacts.elastic.co (artifacts.elastic.co)... 151.101.110.222, 2a04:4e42:1a::734
    Connecting to artifacts.elastic.co (artifacts.elastic.co)|151.101.110.222|:443... connected.
    HTTP request sent, awaiting response... 200 OK
    Length: 175824513 (168M) [application/x-gzip]
    Saving to: 'logstash-6.7.0.tar.gz'

    100%[==============================>] 175,824,513  3.29MB/s   in 4m 13s

    2019-10-26 14:36:02 (679 KB/s) - 'logstash-6.7.0.tar.gz' saved [175824513/175824513]

    [root@slaver4 package]# ll
    total 510692
    -rw-r--r--. 1 elsearch elsearch 149006122 Oct 25 14:44 elasticsearch-6.7.0.tar.gz
    -rw-r--r--. 1 root     root      11703213 Mar 26  2019 filebeat-6.7.0-linux-x86_64.tar.gz
    -rw-r--r--. 1 root     root     186406262 Mar 26  2019 kibana-6.7.0-linux-x86_64.tar.gz
    -rw-r--r--. 1 root     root     175824513 Mar 26  2019 logstash-6.7.0.tar.gz
    drwxr-xr-x. 2 elsearch elsearch       131 Oct 26 10:44 materials
    [root@slaver4 package]# tar -zxvf logstash-6.7.0.tar.gz -C /home/hadoop/soft/
    [root@slaver4 package]# cd ../soft/
    [root@slaver4 soft]# ls
    elasticsearch-6.7.0  filebeat-6.7.0-linux-x86_64  kibana-6.7.0-linux-x86_64  logstash-6.7.0
    [root@slaver4 soft]# chown -R elsearch:elsearch logstash-6.7.0/
    [root@slaver4 soft]# ls
    elasticsearch-6.7.0  filebeat-6.7.0-linux-x86_64  kibana-6.7.0-linux-x86_64  logstash-6.7.0
    [root@slaver4 soft]# su elsearch
    [elsearch@slaver4 soft]$ cd logstash-6.7.0/
    [elsearch@slaver4 logstash-6.7.0]$ ls
    bin  config  CONTRIBUTORS  data  Gemfile  Gemfile.lock  lib  LICENSE.txt  logstash-core  logstash-core-plugin-api  modules  NOTICE.TXT  tools  vendor  x-pack

More in-depth material will follow in later posts!

To be continued...
