Phoenix table creation fails: PhoenixIOException: Max attempts exceeded

Posted by caoweixiong


Preface: this article was compiled by the editors of 小常识网 (cha138.com). It covers Phoenix table creation failing with PhoenixIOException: Max attempts exceeded.

The problem below took me a whole day to solve and was a real pain, so I am writing it down here.

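Symptom: after I ran the statement, nothing came back for about a minute, and then it failed with the error below. Occasionally it failed with a different error message instead.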

    jdbc:phoenix:10.0.xx.1:2181> create table IF NOT EXISTS test.Person1 (IDCardNum INTEGER not null primary key, Name varchar(20),Age INTEGER) COMPRESSION = 'SNAPPY',BLOOMFILTER = 'ROW';
    Error: Max attempts exceeded (state=08000,code=101)
    org.apache.phoenix.exception.PhoenixIOException: Max attempts exceeded
        at org.apache.phoenix.util.ServerUtil.parseServerException(ServerUtil.java:138)
        at org.apache.phoenix.query.ConnectionQueryServicesImpl.ensureTableCreated(ConnectionQueryServicesImpl.java:1204)
        at org.apache.phoenix.query.ConnectionQueryServicesImpl.createTable(ConnectionQueryServicesImpl.java:1501)
        at org.apache.phoenix.schema.MetaDataClient.createTableInternal(MetaDataClient.java:2721)
        at org.apache.phoenix.schema.MetaDataClient.createTable(MetaDataClient.java:1114)
        at org.apache.phoenix.compile.CreateTableCompiler$1.execute(CreateTableCompiler.java:192)
        at org.apache.phoenix.jdbc.PhoenixStatement$2.call(PhoenixStatement.java:408)
        at org.apache.phoenix.jdbc.PhoenixStatement$2.call(PhoenixStatement.java:391)
        at org.apache.phoenix.call.CallRunner.run(CallRunner.java:53)
        at org.apache.phoenix.jdbc.PhoenixStatement.executeMutation(PhoenixStatement.java:390)
        at org.apache.phoenix.jdbc.PhoenixStatement.executeMutation(PhoenixStatement.java:378)
        at org.apache.phoenix.jdbc.PhoenixStatement.execute(PhoenixStatement.java:1825)
        at sqlline.Commands.execute(Commands.java:822)
        at sqlline.Commands.sql(Commands.java:732)
        at sqlline.SqlLine.dispatch(SqlLine.java:813)
        at sqlline.SqlLine.begin(SqlLine.java:686)
        at sqlline.SqlLine.start(SqlLine.java:398)
        at sqlline.SqlLine.main(SqlLine.java:291)
    Caused by: org.apache.hadoop.hbase.client.RetriesExhaustedException: Max attempts exceeded
        at org.apache.hadoop.hbase.master.assignment.AssignProcedure.startTransition(AssignProcedure.java:180)
        at org.apache.hadoop.hbase.master.assignment.RegionTransitionProcedure.execute(RegionTransitionProcedure.java:295)
        at org.apache.hadoop.hbase.master.assignment.RegionTransitionProcedure.execute(RegionTransitionProcedure.java:85)
        at org.apache.hadoop.hbase.procedure2.Procedure.doExecute(Procedure.java:845)
        at org.apache.hadoop.hbase.procedure2.ProcedureExecutor.execProcedure(ProcedureExecutor.java:1453)
        at org.apache.hadoop.hbase.procedure2.ProcedureExecutor.executeProcedure(ProcedureExecutor.java:1221)
        at org.apache.hadoop.hbase.procedure2.ProcedureExecutor.access$800(ProcedureExecutor.java:75)
        at org.apache.hadoop.hbase.procedure2.ProcedureExecutor$WorkerThread.run(ProcedureExecutor.java:1741)

On another attempt, the same statement failed with a different error:

    jdbc:phoenix:10.0.xx.1:2181> create table IF NOT EXISTS test.Person1 (IDCardNum INTEGER not null primary key, Name varchar(20),Age INTEGER) COMPRESSION = 'SNAPPY',BLOOMFILTER = 'ROW';
    Error: ERROR 1102 (XCL02): Cannot get all table regions. (state=XCL02,code=1102)
    java.sql.SQLException: ERROR 1102 (XCL02): Cannot get all table regions.
        at org.apache.phoenix.exception.SQLExceptionCode$Factory$1.newException(SQLExceptionCode.java)
        ...

Cause:

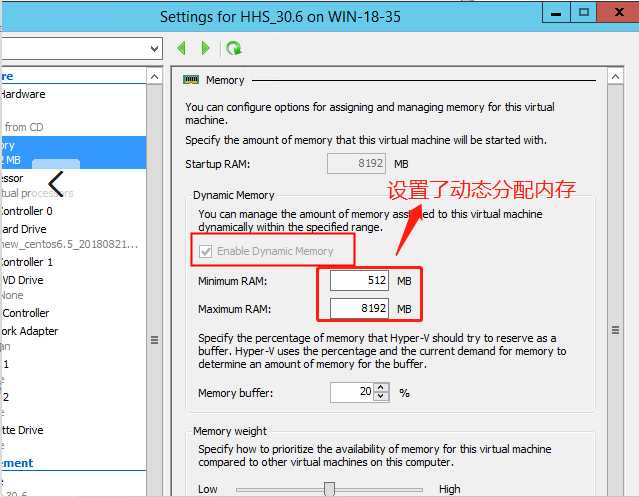

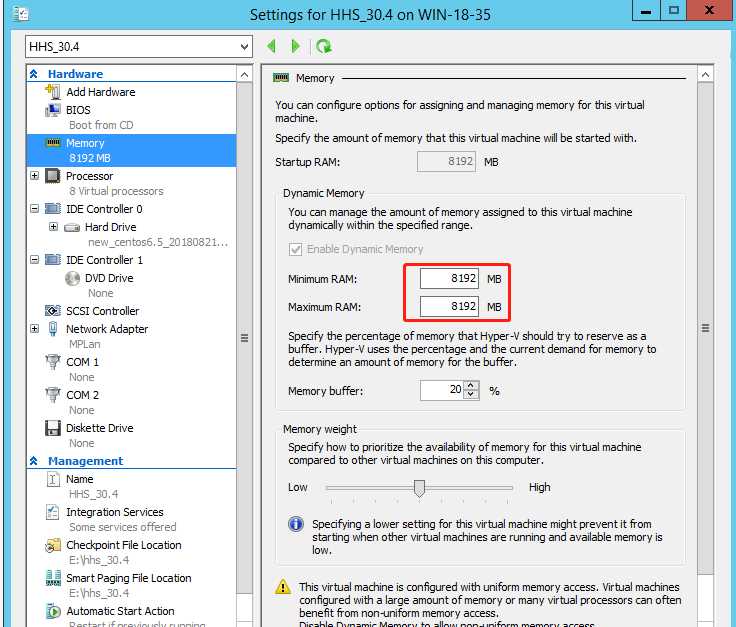

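The server was a virtual machine, and the operations team had configured its memory as dynamically allocated. The VM ran short of memory, and that is what caused the problem.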
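
If you suspect the same cause, one quick check from inside the guest is to look at how much memory the operating system actually sees. The following is a minimal Python sketch, assuming a Linux guest that exposes `/proc/meminfo` (the `MemAvailable` field needs kernel 3.14 or later). The helper names `parse_meminfo` and `memory_is_low` and the 4096 MB threshold are hypothetical, chosen only for illustration. Because dynamic allocation can change these numbers over time, a single reading proves nothing either way.

```python
import os
from typing import Dict

# Hypothetical threshold for illustration: warn below this much available memory.
MIN_AVAILABLE_MB = 4096


def parse_meminfo(text: str) -> Dict[str, int]:
    """Parse /proc/meminfo-style text into {field: value}; values are in kB."""
    info: Dict[str, int] = {}
    for line in text.splitlines():
        if ":" not in line:
            continue
        key, rest = line.split(":", 1)
        fields = rest.split()
        # Keep only numeric entries such as "MemTotal:  16303956 kB".
        if fields and fields[0].isdigit():
            info[key.strip()] = int(fields[0])
    return info


def memory_is_low(info: Dict[str, int], min_available_mb: int) -> bool:
    """Return True when MemAvailable (kB) is below min_available_mb (MB).

    A missing MemAvailable field counts as 0, i.e. as low memory.
    """
    available_kb = info.get("MemAvailable", 0)
    return available_kb < min_available_mb * 1024


if __name__ == "__main__":
    path = "/proc/meminfo"  # Linux-only interface
    if os.path.exists(path):
        with open(path) as f:
            info = parse_meminfo(f.read())
        total_mb = info.get("MemTotal", 0) // 1024
        available_mb = info.get("MemAvailable", 0) // 1024
        print(f"MemTotal: {total_mb} MB, MemAvailable: {available_mb} MB")
        if memory_is_low(info, MIN_AVAILABLE_MB):
            print(f"Warning: less than {MIN_AVAILABLE_MB} MB available")
    else:
        print("/proc/meminfo not found; this check assumes a Linux host")
```

Comparing a reading taken while the `create table` statement hangs with one taken at idle is one way to see whether the guest is being starved. The fix itself is on the hypervisor side.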

Changing it to the fixed memory allocation below solved the problem:
