Chapter 11 Read/Write Splitting and Distributed Architecture

Posted lpcsf


atlas 实现读写分离

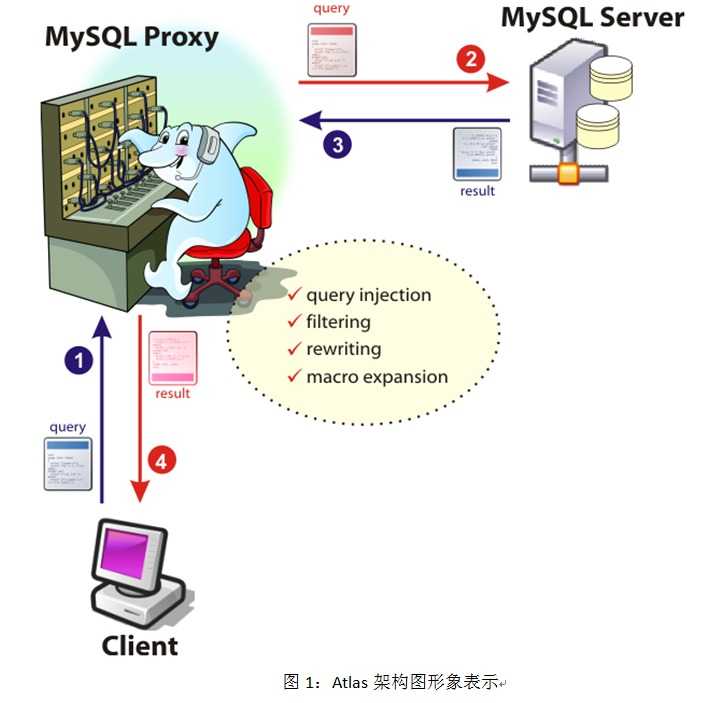

Atlas是由 Qihoo 360, Web平台部基础架构团队开发维护的一个基于mysql协议的数据中间层项目。

atlas 说明

Atlas是一个位于应用程序与MySQL之间中间件。在后端DB看来,Atlas相当于连接它的客户端,在前端应用看来,Atlas相当于一个DB。Atlas作为服务端与应用程序通讯,它实现了MySQL的客户端和服务端协议,同时作为客户端与MySQL通讯。它对应用程序屏蔽了DB的细节,同时为了降低MySQL负担,它还维护了连接池。
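The sketch below makes the routing idea concrete. It models how a read/write-splitting proxy might choose a backend, and it is a simplified model, not Atlas's source code. It assumes the following rules, which are also what the read/write test later in this section exercises:
- SELECT statements outside an explicit transaction go to a read-only backend, chosen by weight.
- Everything else goes to the master.

The `host:port@weight` format mirrors the `proxy-read-only-backend-addresses` comment in the configuration below. `parse_backends` and `Router` are hypothetical names.

```python
import random
from dataclasses import dataclass, field
from typing import List, Tuple


def parse_backends(value: str) -> List[Tuple[str, int]]:
    """Parse 'host:port[@weight],...' into (address, weight) pairs; weight defaults to 1."""
    backends = []
    for item in value.split(","):
        item = item.strip()
        if not item:
            continue
        addr, _, weight = item.partition("@")
        backends.append((addr, int(weight) if weight else 1))
    return backends


@dataclass
class Router:
    """Toy model of a read/write-splitting proxy (hypothetical, not Atlas's code)."""
    master: str
    replicas: List[Tuple[str, int]]
    in_transaction: bool = False
    rng: random.Random = field(default_factory=random.Random)

    def route(self, sql: str) -> str:
        stmt = sql.strip().rstrip(";").strip().lower()
        if stmt.startswith(("begin", "start transaction")):
            self.in_transaction = True
            return self.master
        if stmt.startswith(("commit", "rollback")):
            self.in_transaction = False
            return self.master
        if stmt.startswith("select") and not self.in_transaction and self.replicas:
            # Plain reads outside a transaction: weighted random pick among replicas.
            addrs = [addr for addr, _ in self.replicas]
            weights = [w for _, w in self.replicas]
            return self.rng.choices(addrs, weights=weights, k=1)[0]
        # Writes, and anything inside a transaction, stay on the master.
        return self.master


router = Router(master="10.0.0.55:3306",
                replicas=parse_backends("10.0.0.51:3306,10.0.0.53:3306"))
print(router.route("select @@server_id"))  # one of the two read-only backends
print(router.route("begin"), router.route("select @@server_id"), router.route("commit"))  # all three: the master
```

Keeping transactions on the master is the conservative choice: a read inside a transaction may need to see that transaction's own uncommitted writes, which a replica cannot provide.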

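Deploying Atlas

Install Atlas

Atlas packages can be downloaded from: https://github.com/Qihoo360/Atlas

rpm -ivh Atlas-2.2.1.el6.x86_64.rpm

Edit the test.cnf configuration file

For a description of every option, see the test.cnf file that ships with the installation.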

cd /usr/local/mysql-proxy/conf

mv test.cnf test.cnf.bak

vim test.cnf

[mysql-proxy]

admin-username = user

admin-password = pwd

# IP and port of the MySQL master that Atlas connects to

proxy-backend-addresses = 10.0.0.55:3306

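# IP and port of the MySQL slaves that Atlas connects to; an optional @N suffix sets the load-balancing weight (default 1); separate multiple entries with commas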

proxy-read-only-backend-addresses = 10.0.0.51:3306,10.0.0.53:3306

# Usernames and their encrypted MySQL passwords; encrypt each password with the encrypt program in the PREFIX/bin directory

pwds = repl:3yb5jEku5h4=,mha:O2jBXONX098=

daemon = true

keepalive = true

event-threads = 8

log-level = message

log-path = /usr/local/mysql-proxy/log

sql-log=ON

proxy-address = 0.0.0.0:33060

admin-address = 0.0.0.0:2345

charset=utf8

Start Atlas

/usr/local/mysql-proxy/bin/mysql-proxyd test start

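ps -ef | grep proxy

Test read/write splitting

[root@db03 ~]# mysql -umha -pmha -h 10.0.0.53 -P 33060

Read:

db03 [(none)]>select @@server_id;

Write (statements inside a transaction are sent to the master):

db03 [(none)]>begin;select @@server_id; commit;

Create a user for production use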

Example: root@'10.0.0.%'

(1) On the business master

db01 [(none)]>grant all on *.* to root@'10.0.0.%' identified by '123';

(2) Encrypt the password

cd /usr/local/mysql-proxy/bin

[root@m01 /usr/local/mysql-proxy/bin]# ./encrypt 123

3yb5jEku5h4=

(3) Edit the configuration file

vim /usr/local/mysql-proxy/conf/test.cnf

pwds = repl:3yb5jEku5h4=,mha:O2jBXONX098=,root:3yb5jEku5h4=

(4) Restart Atlas

/usr/local/mysql-proxy/bin/mysql-proxyd test restart

(5) Test the user

[root@db03 ~]# mysql -uroot -p123 -h 10.0.0.61 -P 33060

Basic Atlas administration

[root@db03 ~]# mysql -uuser -ppwd -h 10.0.0.53 -P 2345

db03 [(none)]>select * from help;

(1) 查帮助:

SELECT * FROM help;

(2) 查看节点信息

SELECT * FROM backends;

(3) 上线和下线节点

SET OFFLINE $backend_id;

SET ONLINE $backend_id

(4) 删除和添加节点

REMOVE BACKEND 3;

ADD SLAVE 10.0.0.53:3306;

(5) 添加用户和删除用户

SELECT * FROM pwds;

REMOVE PWD root;

ADD PWD root:123

ADD ENPWD root:3yb5jEku5h4=

(6) 持久化配置

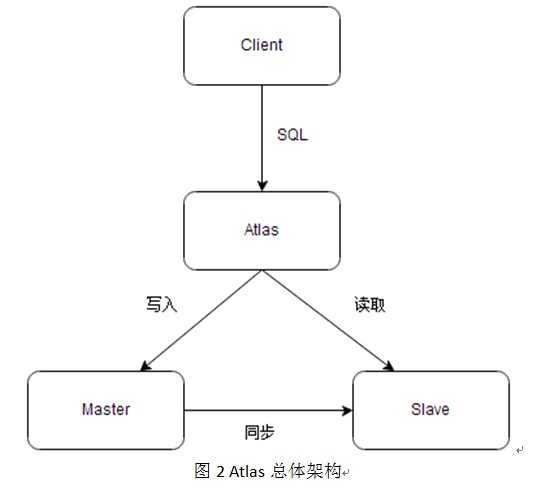

db03 [(none)]> SAVE CONFIG MyCAT基础架构准备

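MyCAT base architecture diagram (simulated)

To avoid running too many virtual machines, this lab runs multiple MySQL instances on each host; this layout does not represent a production environment.

Environment:

- Two VMs: db01 and db02

- Four MySQL instances on each: 3307 3308 3309 3310

Clean up the previous environment:

pkill mysqld

rm -rf /data/330*

mv /etc/my.cnf /etc/my.cnf.bak

Create the directories and initialize the data directories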

mkdir /data/33{07..10}/data -p

mysqld --initialize-insecure --user=mysql --datadir=/data/3307/data --basedir=/application/mysql

mysqld --initialize-insecure --user=mysql --datadir=/data/3308/data --basedir=/application/mysql

mysqld --initialize-insecure --user=mysql --datadir=/data/3309/data --basedir=/application/mysql

mysqld --initialize-insecure --user=mysql --datadir=/data/3310/data --basedir=/application/mysql

Prepare the configuration files and systemd unit files

========db01============

cat >/data/3307/my.cnf<<EOF

[mysqld]

basedir=/application/mysql

datadir=/data/3307/data

socket=/data/3307/mysql.sock

port=3307

log-error=/data/3307/mysql.log

log_bin=/data/3307/mysql-bin

binlog_format=row

skip-name-resolve

server-id=7

gtid-mode=on

enforce-gtid-consistency=true

log-slave-updates=1

EOF

cat >/data/3308/my.cnf<<EOF

[mysqld]

basedir=/application/mysql

datadir=/data/3308/data

port=3308

socket=/data/3308/mysql.sock

log-error=/data/3308/mysql.log

log_bin=/data/3308/mysql-bin

binlog_format=row

skip-name-resolve

server-id=8

gtid-mode=on

enforce-gtid-consistency=true

log-slave-updates=1

EOF

cat >/data/3309/my.cnf<<EOF

[mysqld]

basedir=/application/mysql

datadir=/data/3309/data

socket=/data/3309/mysql.sock

port=3309

log-error=/data/3309/mysql.log

log_bin=/data/3309/mysql-bin

binlog_format=row

skip-name-resolve

server-id=9

gtid-mode=on

enforce-gtid-consistency=true

log-slave-updates=1

EOF

cat >/data/3310/my.cnf<<EOF

[mysqld]

basedir=/application/mysql

datadir=/data/3310/data

socket=/data/3310/mysql.sock

port=3310

log-error=/data/3310/mysql.log

log_bin=/data/3310/mysql-bin

binlog_format=row

skip-name-resolve

server-id=10

gtid-mode=on

enforce-gtid-consistency=true

log-slave-updates=1

EOF
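The four my.cnf files above share one template. They differ only in port and server-id: db01 uses 7 to 10, and db02 (below) uses 17 to 20. The sketch below renders the same files from that pattern. The `port - 3300 + offset` rule and the `HOST_OFFSET` table are inferred from the values in this section, and the function names are hypothetical.

```python
from typing import Dict

TEMPLATE = """[mysqld]
basedir=/application/mysql
datadir=/data/{port}/data
socket=/data/{port}/mysql.sock
port={port}
log-error=/data/{port}/mysql.log
log_bin=/data/{port}/mysql-bin
binlog_format=row
skip-name-resolve
server-id={server_id}
gtid-mode=on
enforce-gtid-consistency=true
log-slave-updates=1
"""

PORTS = (3307, 3308, 3309, 3310)
# Inferred from the example: db01 -> server-id 7..10, db02 -> 17..20 (must be unique cluster-wide).
HOST_OFFSET = {"db01": 0, "db02": 10}


def server_id(host: str, port: int) -> int:
    return port - 3300 + HOST_OFFSET[host]


def render_configs(host: str) -> Dict[str, str]:
    """Return {path: file contents} for every instance on the given host."""
    return {
        f"/data/{port}/my.cnf": TEMPLATE.format(port=port, server_id=server_id(host, port))
        for port in PORTS
    }


for path, text in render_configs("db01").items():
    print(f"# {path}\n{text}")

# To write the files instead (as root on the matching host):
# from pathlib import Path
# for path, text in render_configs("db01").items():
#     Path(path).write_text(text)
```

Deriving server-id from the host and port makes accidental duplicates less likely. Duplicates matter because replication between two instances with the same server-id does not work.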

cat >/etc/systemd/system/mysqld3307.service<<EOF

[Unit]

Description=MySQL Server

Documentation=man:mysqld(8)

Documentation=http://dev.mysql.com/doc/refman/en/using-systemd.html

After=network.target

After=syslog.target

[Install]

WantedBy=multi-user.target

[Service]

User=mysql

Group=mysql

ExecStart=/application/mysql/bin/mysqld --defaults-file=/data/3307/my.cnf

LimitNOFILE = 5000

EOF

cat >/etc/systemd/system/mysqld3308.service<<EOF

[Unit]

Description=MySQL Server

Documentation=man:mysqld(8)

Documentation=http://dev.mysql.com/doc/refman/en/using-systemd.html

After=network.target

After=syslog.target

[Install]

WantedBy=multi-user.target

[Service]

User=mysql

Group=mysql

ExecStart=/application/mysql/bin/mysqld --defaults-file=/data/3308/my.cnf

LimitNOFILE = 5000

EOF

cat >/etc/systemd/system/mysqld3309.service<<EOF

[Unit]

Description=MySQL Server

Documentation=man:mysqld(8)

Documentation=http://dev.mysql.com/doc/refman/en/using-systemd.html

After=network.target

After=syslog.target

[Install]

WantedBy=multi-user.target

[Service]

User=mysql

Group=mysql

ExecStart=/application/mysql/bin/mysqld --defaults-file=/data/3309/my.cnf

LimitNOFILE = 5000

EOF

cat >/etc/systemd/system/mysqld3310.service<<EOF

[Unit]

Description=MySQL Server

Documentation=man:mysqld(8)

Documentation=http://dev.mysql.com/doc/refman/en/using-systemd.html

After=network.target

After=syslog.target

[Install]

WantedBy=multi-user.target

[Service]

User=mysql

Group=mysql

ExecStart=/application/mysql/bin/mysqld --defaults-file=/data/3310/my.cnf

LimitNOFILE = 5000

EOF

========db02============

cat >/data/3307/my.cnf<<EOF

[mysqld]

basedir=/application/mysql

datadir=/data/3307/data

socket=/data/3307/mysql.sock

port=3307

log-error=/data/3307/mysql.log

log_bin=/data/3307/mysql-bin

binlog_format=row

skip-name-resolve

server-id=17

gtid-mode=on

enforce-gtid-consistency=true

log-slave-updates=1

EOF

cat >/data/3308/my.cnf<<EOF

[mysqld]

basedir=/application/mysql

datadir=/data/3308/data

port=3308

socket=/data/3308/mysql.sock

log-error=/data/3308/mysql.log

log_bin=/data/3308/mysql-bin

binlog_format=row

skip-name-resolve

server-id=18

gtid-mode=on

enforce-gtid-consistency=true

log-slave-updates=1

EOF

cat >/data/3309/my.cnf<<EOF

[mysqld]

basedir=/application/mysql

datadir=/data/3309/data

socket=/data/3309/mysql.sock

port=3309

log-error=/data/3309/mysql.log

log_bin=/data/3309/mysql-bin

binlog_format=row

skip-name-resolve

server-id=19

gtid-mode=on

enforce-gtid-consistency=true

log-slave-updates=1

EOF

cat >/data/3310/my.cnf<<EOF

[mysqld]

basedir=/application/mysql

datadir=/data/3310/data

socket=/data/3310/mysql.sock

port=3310

log-error=/data/3310/mysql.log

log_bin=/data/3310/mysql-bin

binlog_format=row

skip-name-resolve

server-id=20

gtid-mode=on

enforce-gtid-consistency=true

log-slave-updates=1

EOF

cat >/etc/systemd/system/mysqld3307.service<<EOF

[Unit]

Description=MySQL Server

Documentation=man:mysqld(8)

Documentation=http://dev.mysql.com/doc/refman/en/using-systemd.html

After=network.target

After=syslog.target

[Install]

WantedBy=multi-user.target

[Service]

User=mysql

Group=mysql

ExecStart=/application/mysql/bin/mysqld --defaults-file=/data/3307/my.cnf

LimitNOFILE = 5000

EOF

cat >/etc/systemd/system/mysqld3308.service<<EOF

[Unit]

Description=MySQL Server

Documentation=man:mysqld(8)

Documentation=http://dev.mysql.com/doc/refman/en/using-systemd.html

After=network.target

After=syslog.target

[Install]

WantedBy=multi-user.target

[Service]

User=mysql

Group=mysql

ExecStart=/application/mysql/bin/mysqld --defaults-file=/data/3308/my.cnf

LimitNOFILE = 5000

EOF

cat >/etc/systemd/system/mysqld3309.service<<EOF

[Unit]

Description=MySQL Server

Documentation=man:mysqld(8)

Documentation=http://dev.mysql.com/doc/refman/en/using-systemd.html

After=network.target

After=syslog.target

[Install]

WantedBy=multi-user.target

[Service]

User=mysql

Group=mysql

ExecStart=/application/mysql/bin/mysqld --defaults-file=/data/3309/my.cnf

LimitNOFILE = 5000

EOF

cat >/etc/systemd/system/mysqld3310.service<<EOF

[Unit]

Description=MySQL Server

Documentation=man:mysqld(8)

Documentation=http://dev.mysql.com/doc/refman/en/using-systemd.html

After=network.target

After=syslog.target

[Install]

WantedBy=multi-user.target

[Service]

User=mysql

Group=mysql

ExecStart=/application/mysql/bin/mysqld --defaults-file=/data/3310/my.cnf

LimitNOFILE = 5000

EOF

Fix ownership and start the instances

chown -R mysql.mysql /data/*

systemctl start mysqld3307

systemctl start mysqld3308

systemctl start mysqld3309

systemctl start mysqld3310

mysql -S /data/3307/mysql.sock -e "show variables like 'server_id'"

mysql -S /data/3308/mysql.sock -e "show variables like 'server_id'"

mysql -S /data/3309/mysql.sock -e "show variables like 'server_id'"

mysql -S /data/3310/mysql.sock -e "show variables like 'server_id'"

Replication topology

The arrow points to the master; a double-headed arrow means the two instances replicate from each other (master-master).

- 10.0.0.51:3307 <-----> 10.0.0.52:3307

- 10.0.0.51:3309 ------> 10.0.0.51:3307

- 10.0.0.52:3309 ------> 10.0.0.52:3307

- 10.0.0.52:3308 <-----> 10.0.0.51:3308

- 10.0.0.52:3310 -----> 10.0.0.52:3308

Shard layout

shard1:

- Master: 10.0.0.51:3307

- slave1: 10.0.0.51:3309

- Standby Master: 10.0.0.52:3307

- slave2: 10.0.0.52:3309

shard2:

- Master: 10.0.0.52:3308

- slave1: 10.0.0.52:3310

- Standby Master: 10.0.0.51:3308

- slave2: 10.0.0.51:3310
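The plan above can also be written as data, which makes missing or mismatched links easier to spot. The sketch below encodes each "replica -> master" link from the plans above and the configuration steps that follow; each two-way arrow becomes two entries. From that data it generates the CHANGE MASTER TO commands. It assumes the repl/123 replication account that the configuration steps create, and `TOPOLOGY`, `change_master_cmd` and `commands_for` are hypothetical names.

```python
from typing import Dict, List, Tuple

Instance = Tuple[str, int]  # (host IP, port)

# replica -> master; a master-master pair (<----->) appears in both directions.
TOPOLOGY: Dict[Instance, Instance] = {
    ("10.0.0.51", 3307): ("10.0.0.52", 3307),
    ("10.0.0.52", 3307): ("10.0.0.51", 3307),
    ("10.0.0.51", 3309): ("10.0.0.51", 3307),
    ("10.0.0.52", 3309): ("10.0.0.52", 3307),
    ("10.0.0.52", 3308): ("10.0.0.51", 3308),
    ("10.0.0.51", 3308): ("10.0.0.52", 3308),
    ("10.0.0.52", 3310): ("10.0.0.52", 3308),
    ("10.0.0.51", 3310): ("10.0.0.51", 3308),
}


def change_master_cmd(replica_port: int, master_host: str, master_port: int,
                      user: str = "repl", password: str = "123") -> str:
    """Build the mysql command run locally on the replica's socket."""
    sql = (f"CHANGE MASTER TO MASTER_HOST='{master_host}', MASTER_PORT={master_port}, "
           f"MASTER_AUTO_POSITION=1, MASTER_USER='{user}', MASTER_PASSWORD='{password}';")
    return f'mysql -S /data/{replica_port}/mysql.sock -e "{sql}"'


def commands_for(host: str) -> List[str]:
    """All CHANGE MASTER commands to run on one host (10.0.0.51 = db01, 10.0.0.52 = db02)."""
    return [change_master_cmd(port, m_host, m_port)
            for (h, port), (m_host, m_port) in sorted(TOPOLOGY.items()) if h == host]


for cmd in commands_for("10.0.0.51"):
    print(cmd)
```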

Configuration

shard1 configuration

10.0.0.51:3307 <-----> 10.0.0.52:3307

db02

mysql -S /data/3307/mysql.sock -e "grant replication slave on *.* to repl@'10.0.0.%' identified by '123';"

mysql -S /data/3307/mysql.sock -e "grant all on *.* to root@'10.0.0.%' identified by '123' with grant option;"

db01

mysql -S /data/3307/mysql.sock -e "CHANGE MASTER TO MASTER_HOST='10.0.0.52', MASTER_PORT=3307, MASTER_AUTO_POSITION=1, MASTER_USER='repl', MASTER_PASSWORD='123';"

mysql -S /data/3307/mysql.sock -e "start slave;"

mysql -S /data/3307/mysql.sock -e "show slave status\G"

db02

mysql -S /data/3307/mysql.sock -e "CHANGE MASTER TO MASTER_HOST='10.0.0.51', MASTER_PORT=3307, MASTER_AUTO_POSITION=1, MASTER_USER='repl', MASTER_PASSWORD='123';"

mysql -S /data/3307/mysql.sock -e "start slave;"

mysql -S /data/3307/mysql.sock -e "show slave status\G"

10.0.0.51:3309 ------> 10.0.0.51:3307

db01

mysql -S /data/3309/mysql.sock -e "CHANGE MASTER TO MASTER_HOST='10.0.0.51', MASTER_PORT=3307, MASTER_AUTO_POSITION=1, MASTER_USER='repl', MASTER_PASSWORD='123';"

mysql -S /data/3309/mysql.sock -e "start slave;"

mysql -S /data/3309/mysql.sock -e "show slave status\G"

10.0.0.52:3309 ------> 10.0.0.52:3307

db02

mysql -S /data/3309/mysql.sock -e "CHANGE MASTER TO MASTER_HOST='10.0.0.52', MASTER_PORT=3307, MASTER_AUTO_POSITION=1, MASTER_USER='repl', MASTER_PASSWORD='123';"

mysql -S /data/3309/mysql.sock -e "start slave;"

mysql -S /data/3309/mysql.sock -e "show slave status\G"

shard2 configuration

10.0.0.52:3308 <-----> 10.0.0.51:3308

db01

mysql -S /data/3308/mysql.sock -e "grant replication slave on *.* to repl@'10.0.0.%' identified by '123';"

mysql -S /data/3308/mysql.sock -e "grant all on *.* to root@'10.0.0.%' identified by '123' with grant option;"

db02

mysql -S /data/3308/mysql.sock -e "CHANGE MASTER TO MASTER_HOST='10.0.0.51', MASTER_PORT=3308, MASTER_AUTO_POSITION=1, MASTER_USER='repl', MASTER_PASSWORD='123';"

mysql -S /data/3308/mysql.sock -e "start slave;"

mysql -S /data/3308/mysql.sock -e "show slave status\G"

db01

mysql -S /data/3308/mysql.sock -e "CHANGE MASTER TO MASTER_HOST='10.0.0.52', MASTER_PORT=3308, MASTER_AUTO_POSITION=1, MASTER_USER='repl', MASTER_PASSWORD='123';"

mysql -S /data/3308/mysql.sock -e "start slave;"

mysql -S /data/3308/mysql.sock -e "show slave status\G"

10.0.0.52:3310 -----> 10.0.0.52:3308

db02

mysql -S /data/3310/mysql.sock -e "CHANGE MASTER TO MASTER_HOST='10.0.0.52', MASTER_PORT=3308, MASTER_AUTO_POSITION=1, MASTER_USER='repl', MASTER_PASSWORD='123';"

mysql -S /data/3310/mysql.sock -e "start slave;"

mysql -S /data/3310/mysql.sock -e "show slave status\G"

10.0.0.51:3310 -----> 10.0.0.51:3308

db01

mysql -S /data/3310/mysql.sock -e "CHANGE MASTER TO MASTER_HOST='10.0.0.51', MASTER_PORT=3308, MASTER_AUTO_POSITION=1, MASTER_USER='repl', MASTER_PASSWORD='123';"

mysql -S /data/3310/mysql.sock -e "start slave;"

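mysql -S /data/3310/mysql.sock -e "show slave status\G"

Check replication status

mysql -S /data/3307/mysql.sock -e "show slave status\G"|grep Running:

mysql -S /data/3308/mysql.sock -e "show slave status\G"|grep Running:

mysql -S /data/3309/mysql.sock -e "show slave status\G"|grep Running:

mysql -S /data/3310/mysql.sock -e "show slave status\G"|grep Running:

If something went wrong along the way, run the following on every node and then redo the replication setup. Skip this step if the status check above shows Yes for both Slave_IO_Running and Slave_SQL_Running.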

mysql -S /data/3307/mysql.sock -e "stop slave; reset slave all;"

mysql -S /data/3308/mysql.sock -e "stop slave; reset slave all;"

mysql -S /data/3309/mysql.sock -e "stop slave; reset slave all;"

mysql -S /data/3310/mysql.sock -e "stop slave; reset slave all;"

Installing MyCAT

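Because this is only a test setup, MyCAT is installed directly on one of the database servers rather than on a separate host. The prompts and connection commands below show it running on db02 (10.0.0.52).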

Install Java

yum install -y java

Upload the MyCAT package to /application and extract it

(Omitted.)

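Configuration files

conf:

schema.xml: main configuration file

rule.xml: sharding rule definitions

server.xml: MyCAT server settings

xxxx.txt: parameter files used by the sharding functions

Start MyCAT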

[root@db02 ~]# vim /etc/profile

export PATH=/application/mycat/bin:$PATH

source /etc/profile

[root@db02 ~]# mycat start

[root@db02 ~]# mysql -uroot -p123456 -h 10.0.0.52 -P8066

Prepare test data

db01:

mysql -S /data/3307/mysql.sock

grant all on *.* to root@'10.0.0.%' identified by '123';

source /root/world.sql

mysql -S /data/3308/mysql.sock

grant all on *.* to root@'10.0.0.%' identified by '123';

source /root/world.sql

MyCAT core configuration file (schema.xml)

<?xml version="1.0"?>

<!DOCTYPE mycat:schema SYSTEM "schema.dtd">

<mycat:schema xmlns:mycat="http://io.mycat/">

<schema name="TESTDB" checkSQLschema="false" sqlMaxLimit="100" dataNode="dn1">

</schema>

<dataNode name="dn1" dataHost="localhost1" database= "world" />

<dataHost name="localhost1" maxCon="1000" minCon="10" balance="1" writeType="0" dbType="mysql" dbDriver="native" switchType="1">

<heartbeat>select user()</heartbeat>

<writeHost host="db1" url="10.0.0.51:3307" user="root" password="123">

<readHost host="db2" url="10.0.0.51:3309" user="root" password="123" />

</writeHost>

</dataHost>

</mycat:schema>

Read/write splitting with MyCAT

[root@db02 /application/mycat/conf]# cat schema.xml

<?xml version="1.0"?>

<!DOCTYPE mycat:schema SYSTEM "schema.dtd">

<mycat:schema xmlns:mycat="http://io.mycat/">

<schema name="TESTDB" checkSQLschema="false" sqlMaxLimit="100" dataNode="sh1">

</schema>

<dataNode name="sh1" dataHost="oldguo1" database= "world" />

<dataHost name="oldguo1" maxCon="1000" minCon="10" balance="1" writeType="0" dbType="mysql" dbDriver="native" switchType="1">

<heartbeat>select user()</heartbeat>

<writeHost host="db1" url="10.0.0.51:3307" user="root" password="123">

<readHost host="db2" url="10.0.0.51:3309" user="root" password="123" />

</writeHost>

</dataHost>

</mycat:schema>

Restart MyCAT to apply the change:

[root@db02 /application/mycat/conf]# mycat restart

Test:

[root@db02 /application/mycat/conf]# mysql -uroot -p123456 -h 10.0.0.52 -P8066

mysql> select @@server_id;

mysql> begin; select @@server_id;commit;

Read/write splitting plus high availability

Write the configuration file

[root@db02 conf]# mv schema.xml schema.xml.rw

[root@db02 conf]# vim schema.xml

<?xml version="1.0"?>

<!DOCTYPE mycat:schema SYSTEM "schema.dtd">

<mycat:schema xmlns:mycat="http://io.mycat/">

<schema name="TESTDB" checkSQLschema="false" sqlMaxLimit="100" dataNode="sh1">

</schema>

<dataNode name="sh1" dataHost="oldguo1" database= "world" />

<dataHost name="oldguo1" maxCon="1000" minCon="10" balance="1" writeType="0" dbType="mysql" dbDriver="native" switchType="1">

<heartbeat>select user()</heartbeat>

<writeHost host="db1" url="10.0.0.51:3307" user="root" password="123">

<readHost host="db2" url="10.0.0.51:3309" user="root" password="123" />

</writeHost>

<writeHost host="db3" url="10.0.0.52:3307" user="root" password="123">

<readHost host="db4" url="10.0.0.52:3309" user="root" password="123" />

</writeHost>

</dataHost>

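</mycat:schema>

Active writeHost: the writeHost that handles write operations.

Standby writeHost: like a readHost, it only serves reads. When the active writeHost goes down, the readHosts under it also stop serving. The standby writeHost then takes over writes, and the readHosts under it serve reads.

Test:

mysql -uroot -p123456 -h 10.0.0.52 -P 8066

show variables like 'server_id';

Read/write splitting test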

mysql -uroot -p -h 127.0.0.1 -P8066

show variables like 'server_id';

show variables like 'server_id';

show variables like 'server_id';

begin;

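show variables like 'server_id';

Stop and then restart the db01 3307 instance, and test reads and writes in each state

Configuration attributes (for reference):

The balance attribute

Load-balancing type; there are currently three values:

balance="0": read/write splitting is disabled; all reads go to the currently available writeHost.

balance="1": all readHosts and standby writeHosts share the SELECT load. In a dual-master, dual-slave setup (M1->S1, M2->S2, with M1 and M2 acting as each other's standby), M2, S1 and S2 all serve SELECTs under normal conditions.

balance="2": all reads are distributed randomly across writeHosts and readHosts.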
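The sketch below shows which hosts receive SELECTs under each balance value. It uses a dataHost shaped like the high-availability example above: writeHosts db1 and db3, with readHosts db2 and db4. It models only the three rules just described and assumes every host is healthy. MyCAT's real selection also considers heartbeat results and settings such as tempReadHostAvailable. `read_candidates` is a hypothetical name.

```python
from typing import Dict, List

# writeHost -> its readHosts, in config order; the first writeHost is the active one.
DATA_HOST: Dict[str, List[str]] = {"db1": ["db2"], "db3": ["db4"]}


def read_candidates(balance: int, data_host: Dict[str, List[str]] = DATA_HOST) -> List[str]:
    """Hosts that may serve a SELECT, assuming every host is up."""
    write_hosts = list(data_host)
    active = write_hosts[0]
    read_hosts = [r for reads in data_host.values() for r in reads]
    if balance == 0:
        # No read/write splitting: reads stay on the active writeHost.
        return [active]
    if balance == 1:
        # Standby writeHosts plus all readHosts share the reads.
        return write_hosts[1:] + read_hosts
    if balance == 2:
        # Reads are spread randomly over every writeHost and readHost.
        return write_hosts + read_hosts
    raise ValueError(f"balance={balance} is not modelled in this sketch")


for b in (0, 1, 2):
    print(b, read_candidates(b))
```

With balance="1", the active writeHost (db1) never appears among the read candidates. This matches the M2, S1, S2 description above.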

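The writeType attribute

Write dispatch type; there are currently two values:

writeType="0": all writes go to the first configured writeHost. If it fails, MyCAT switches to the second writeHost, provided it is still alive. After a restart, the switched-to host remains the one in use; the switch is recorded in the dnindex.properties file.

writeType="1": writes are sent randomly to the configured writeHosts; not recommended.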
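The writeType="0" rule can be sketched as follows:
- Writes go to the current writeHost.
- When that host is down, the router moves to the next live writeHost in configuration order.
- The new position is remembered, so recovery of the old host does not move writes back.

This sketch assumes switchType="1" (automatic switching). The in-memory `index` field stands in for the value MyCAT records in dnindex.properties, and health checks are reduced to a set of hosts marked down. `WriteRouter` is a hypothetical name.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass
class WriteRouter:
    write_hosts: List[str]  # in config order, e.g. ["db1", "db3"]
    index: int = 0          # stands in for the value recorded in dnindex.properties

    def write_target(self, down: Set[str]) -> str:
        current = self.write_hosts[self.index]
        if current not in down:
            # Keep using the current host; there is no automatic fail-back.
            return current
        n = len(self.write_hosts)
        for step in range(1, n):
            candidate = (self.index + step) % n
            if self.write_hosts[candidate] not in down:
                self.index = candidate  # remembered, like the dnindex.properties entry
                return self.write_hosts[candidate]
        raise RuntimeError("no writeHost is available")


router = WriteRouter(["db1", "db3"])
print(router.write_target(set()))    # db1
print(router.write_target({"db1"}))  # db3 takes over
print(router.write_target(set()))    # still db3 after db1 recovers
```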

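The switchType attribute

-1: do not switch automatically

1: default; switch automatically

2: decide whether to switch based on MySQL replication status; the heartbeat statement is show slave status

Other dataHost settings

<dataHost name="localhost1" maxCon="1000" minCon="10" balance="1" writeType="0" dbType="mysql" dbDriver="native" switchType="1">

maxCon="1000": maximum number of concurrent connections

minCon="10": number of connections MyCAT opens to the backend nodes automatically after startup

tempReadHostAvailable="1"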

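Consider enabling this in a one-master, one-slave setup (1 writeHost, 1 readHost). With it set, the readHost keeps serving reads even if its writeHost goes down. With 2 writeHosts and 2 readHosts, the standby writeHost already covers that case.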

<heartbeat>select user()</heartbeat>: the heartbeat statement used to check backend health

MyCAT core feature: sharding

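Data sharding

Sharding takes data that would otherwise be stored in a single database and distributes it across multiple databases (hosts) according to some rule. This spreads the load over several machines instead of one.

Types of sharding

- Vertical sharding

Different tables (or schemas) are placed on different databases (hosts).

- Horizontal sharding

Rows of the same table are split across multiple databases (hosts) according to a condition on the data.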
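The sketch below contrasts the two types. In vertical sharding, the table name alone picks the data node; the user/order_t mapping matches the vertical example later in this section. In horizontal sharding, a rule applied to a column value picks the node for each row. The default modulo rule is only a placeholder for whichever rule is configured, and the names are hypothetical.

```python
from typing import Callable, Dict, List

# Vertical: each table lives entirely on one data node.
VERTICAL: Dict[str, str] = {"user": "sh1", "order_t": "sh2"}

# Horizontal: rows of one table are spread over these nodes.
NODES: List[str] = ["sh1", "sh2"]


def route_vertical(table: str) -> str:
    """The table name alone decides the node."""
    return VERTICAL[table]


def route_horizontal(key: int, rule: Callable[[int, int], int] = lambda k, n: k % n) -> str:
    """A rule on the row's sharding-key value decides the node (index into NODES)."""
    return NODES[rule(key, len(NODES))]


print(route_vertical("order_t"))                                 # sh2
print([route_horizontal(i) for i in (1, 2, 3, 4)])               # ['sh2', 'sh1', 'sh2', 'sh1']
print(route_horizontal(15, rule=lambda k, n: 0 if k <= 10 else 1))  # sh2 (a range-style rule)
```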

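Sharding strategies

- Range: e.g. 8 million rows split into 1-4,000,000 and 4,000,001-8,000,000

- Modulo (mod): route by the remainder

- Enumeration

- Hash

- Time: for journal-style (transaction log) data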
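Range sharding is configured later in this section through autopartition-long.txt, whose lines have the form start-end=node. The sketch below models that lookup with both bounds inclusive. It assumes plain integer bounds only, so unit suffixes are not parsed. It raises an error when a value matches no range; MyCAT can instead be configured to fall back to a default node. The function names are hypothetical.

```python
from typing import List, Tuple

Range = Tuple[int, int, int]  # (start, end, node index)


def parse_ranges(text: str) -> List[Range]:
    """Parse 'start-end=node' lines (plain integers only)."""
    ranges = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        span, node = line.split("=")
        start, end = span.split("-")
        ranges.append((int(start), int(end), int(node)))
    return ranges


def node_for(value: int, ranges: List[Range]) -> int:
    for start, end, node in ranges:
        if start <= value <= end:  # both bounds inclusive
            return node
    raise KeyError(f"{value} is outside every configured range")


lab = parse_ranges("0-10=0\n11-20=1")              # the file used in the range example
print([node_for(i, lab) for i in (1, 4, 11, 14)])  # [0, 0, 1, 1]

big = parse_ranges("1-4000000=0\n4000001-8000000=1")  # the 8-million-row example
print(node_for(4000001, big))                         # 1
```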

Vertical sharding (splitting by table)

Write the schema.xml configuration file

Back up the existing configuration file first:

cd /application/mycat/conf/

mv schema.xml schema.xml.ha

vim schema.xml

------ Configuration file contents:

<?xml version="1.0"?>

<!DOCTYPE mycat:schema SYSTEM "schema.dtd">

<mycat:schema xmlns:mycat="http://io.mycat/">

<schema name="TESTDB" checkSQLschema="false" sqlMaxLimit="100" dataNode="sh1">

<table name="user" dataNode="sh1"/>

<table name="order_t" dataNode="sh2"/>

</schema>

<dataNode name="sh1" dataHost="oldguo1" database= "taobao" />

<dataNode name="sh2" dataHost="oldguo2" database= "taobao" />

<dataHost name="oldguo1" maxCon="1000" minCon="10" balance="1" writeType="0" dbType="mysql" dbDriver="native" switchType="1">

<heartbeat>select user()</heartbeat>

<writeHost host="db1" url="10.0.0.51:3307" user="root" password="123">

<readHost host="db2" url="10.0.0.51:3309" user="root" password="123" />

</writeHost>

<writeHost host="db3" url="10.0.0.52:3307" user="root" password="123">

<readHost host="db4" url="10.0.0.52:3309" user="root" password="123" />

</writeHost>

</dataHost>

<dataHost name="oldguo2" maxCon="1000" minCon="10" balance="1" writeType="0" dbType="mysql" dbDriver="native" switchType="1">

<heartbeat>select user()</heartbeat>

<writeHost host="db1" url="10.0.0.51:3308" user="root" password="123">

<readHost host="db2" url="10.0.0.51:3310" user="root" password="123" />

</writeHost>

<writeHost host="db3" url="10.0.0.52:3308" user="root" password="123">

<readHost host="db4" url="10.0.0.52:3310" user="root" password="123" />

</writeHost>

</dataHost>

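</mycat:schema>

Create the test database and tables

Because each pair of masters replicates in both directions (active-active), you can run these commands on either server.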

mysql -S /data/3307/mysql.sock -e "create database taobao charset utf8;"

mysql -S /data/3308/mysql.sock -e "create database taobao charset utf8;"

mysql -S /data/3307/mysql.sock -e "use taobao;create table user(id int,name varchar(20))";

mysql -S /data/3308/mysql.sock -e "use taobao;create table order_t(id int,name varchar(20))"水平拆分

范围分片

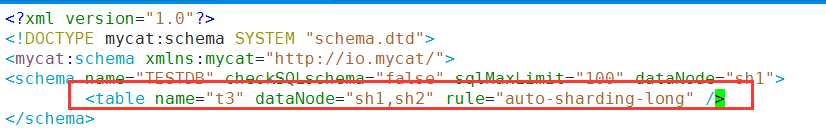

(1)修改配置文件

修改如下:

vim /application/mycat/conf/schema.xml

--- Changes:

<schema name="TESTDB" checkSQLschema="false" sqlMaxLimit="100" dataNode="sh1">

<table name="t3" dataNode="sh1,sh2" rule="auto-sharding-long" />

</schema>

<dataNode name="sh1" dataHost="oldguo1" database= "taobao" />

<dataNode name="sh2" dataHost="oldguo2" database= "taobao" />

vim /application/mycat/conf/autopartition-long.txt

0-10=0

11-20=1

(2) Create the test table

mysql -S /data/3307/mysql.sock -e "use taobao;create table t3 (id int not null primary key auto_increment,name varchar(20) not null);"

mysql -S /data/3308/mysql.sock -e "use taobao;create table t3 (id int not null primary key auto_increment,name varchar(20) not null);"

(3) Test

Restart MyCAT

mycat restart

mysql -uroot -p123456 -h 127.0.0.1 -P 8066

use TESTDB;

insert into t3(id,name) values(1,'a');

insert into t3(id,name) values(2,'b');

insert into t3(id,name) values(3,'c');

insert into t3(id,name) values(4,'d');

insert into t3(id,name) values(11,'aa');

insert into t3(id,name) values(12,'bb');

insert into t3(id,name) values(13,'cc');

insert into t3(id,name) values(14,'dd');

(4) Check where the rows were stored

mysql -S /data/3307/mysql.sock -e "use taobao;select * from t3;"

mysql -S /data/3308/mysql.sock -e "use taobao;select * from t3;"

mysql -S /data/3309/mysql.sock -e "use taobao;select * from t3;"

mysql -S /data/3310/mysql.sock -e "use taobao;select * from t3;"

11.2 Modulo sharding (mod-long)

Modulo sharding divides the sharding key (a column) by the number of nodes and writes the row to the node whose index equals the remainder.
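The sketch below applies this rule with count=2, as in the rule.xml change below, and assumes non-negative integer keys. Node indexes follow the order of dataNode="sh1,sh2". Under that assumption, the test inserts with ids 2 and 4 should land on sh1 (the 3307 instances), and ids 1 and 3 on sh2 (the 3308 instances). The function names are hypothetical.

```python
from typing import Dict, List


def mod_node(key: int, count: int = 2) -> int:
    """Node index for modulo routing: the remainder of key / count."""
    return key % count


def distribute(ids: List[int], nodes: List[str]) -> Dict[str, List[int]]:
    """Group ids by the data node the modulo rule sends them to."""
    placement: Dict[str, List[int]] = {n: [] for n in nodes}
    for i in ids:
        placement[nodes[mod_node(i, len(nodes))]].append(i)
    return placement


print(distribute([1, 2, 3, 4], ["sh1", "sh2"]))  # {'sh1': [2, 4], 'sh2': [1, 3]}
```

Modulo spreads rows evenly, but changing the node count remaps most keys. Range sharding avoids that at the cost of possible hot spots.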

(1) Edit the configuration files

vim schema.xml

<table name="t4" dataNode="sh1,sh2" rule="mod-long" />

vim rule.xml

<function name="mod-long" class="io.mycat.route.function.PartitionByMod">

<property name="count">2</property>

</function>

(2) Create the test tables:

mysql -S /data/3307/mysql.sock -e "use taobao;create table t4 (id int not null primary key auto_increment,name varchar(20) not null);"

mysql -S /data/3308/mysql.sock -e "use taobao;create table t4 (id int not null primary key auto_increment,name varchar(20) not null);"

(3) Test

Restart MyCAT

mycat restart

Test:

mysql -uroot -p123456 -h10.0.0.52 -P8066

use TESTDB

insert into t4(id,name) values(1,'a');

insert into t4(id,name) values(2,'b');

insert into t4(id,name) values(3,'c');

insert into t4(id,name) values(4,'d');

(4) Check the result by querying each backend node

mysql -S /data/3307/mysql.sock

use taobao

select * from t4;

mysql -S /data/3308/mysql.sock

use taobao

select * from t4;

11.3 Enumeration sharding

vim schema.xml

<table name="t5" dataNode="sh1,sh2" rule="sharding-by-intfile" />

vim rule.xml

<tableRule name="sharding-by-intfile">

<rule> <columns>name</columns>

<algorithm>hash-int</algorithm>

</rule>

</tableRule>

<function name="hash-int" class="io.mycat.route.function.PartitionByFileMap">

<property name="mapFile">partition-hash-int.txt</property>

<property name="type">1</property>

</function>

partition-hash-int.txt contents:

bj=0

sh=1

DEFAULT_NODE=1
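The sketch below models the lookup this file describes. Listed values map to a node index by exact match, and DEFAULT_NODE catches anything unlisted. With that fallback assumed, the test inserts below should route 'bj' to node 0 (sh1), and both 'sh' and 'tj' to node 1 (sh2). This is a hypothetical model of the mapping, not MyCAT's own code.

```python
from typing import Dict


def parse_enum_map(text: str) -> Dict[str, int]:
    """Parse 'value=node' lines from a partition-hash-int.txt style file."""
    mapping: Dict[str, int] = {}
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#"):
            key, node = line.split("=", 1)
            mapping[key.strip()] = int(node)
    return mapping


def enum_node(value: str, mapping: Dict[str, int]) -> int:
    if value in mapping:
        return mapping[value]
    if "DEFAULT_NODE" in mapping:
        return mapping["DEFAULT_NODE"]  # fallback for unlisted values such as 'tj'
    raise KeyError(f"no node configured for {value!r}")


m = parse_enum_map("bj=0\nsh=1\nDEFAULT_NODE=1")
print({city: enum_node(city, m) for city in ("bj", "sh", "tj")})  # {'bj': 0, 'sh': 1, 'tj': 1}
```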

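columns names the table column used as the sharding key, and algorithm names the sharding function. In the function definition, mapFile names the mapping file, and type=1 indicates that its keys are strings (0 means integers).

Create the test tables: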

mysql -S /data/3307/mysql.sock -e "use taobao;create table t5 (id int not null primary key auto_increment,name varchar(20) not null);"

mysql -S /data/3308/mysql.sock -e "use taobao;create table t5 (id int not null primary key auto_increment,name varchar(20) not null);"

Test

Restart MyCAT

mycat restart

mysql -uroot -p123456 -h10.0.0.52 -P8066

use TESTDB

insert into t5(id,name) values(1,'bj');

insert into t5(id,name) values(2,'sh');

insert into t5(id,name) values(3,'bj');

insert into t5(id,name) values(4,'sh');

insert into t5(id,name) values(5,'tj');

Query each backend node

mysql -S /data/3307/mysql.sock -e "use taobao;select * from t5;"

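mysql -S /data/3308/mysql.sock -e "use taobao;select * from t5;"

MyCAT global tables

When to use:

Some business data works like a data dictionary, such as configuration items or common business settings. These tables are small, change rarely, and are used by most business scenarios, which makes them good candidates for MyCAT global tables. Their data is not sharded; instead, a full copy is kept on every shard. When a business table is joined with a global table, MyCAT prefers the global table's copy on the same shard, which avoids cross-database joins. On insert, MyCAT sends the data to every shard that holds the global table. On read, it picks one of those nodes at random.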
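The sketch below models the global-table behavior just described:
- A write goes to every data node listed for the table.
- A read can be answered by any single node, because all nodes hold the same copy.
- A join with a sharded table runs on that table's node, using the local copy.

The node list matches the t_area configuration below, and the function names are hypothetical.

```python
import random
from typing import List, Optional

GLOBAL_NODES: List[str] = ["sh1", "sh2"]  # dataNode="sh1,sh2" on the t_area table


def route_global_write(nodes: List[str] = GLOBAL_NODES) -> List[str]:
    """Writes go to every node so that all copies stay identical."""
    return list(nodes)


def route_global_read(nodes: List[str] = GLOBAL_NODES,
                      rng: Optional[random.Random] = None) -> str:
    """Reads can be served by any single node, picked at random."""
    return (rng or random.Random()).choice(nodes)


def route_global_join(sharded_table_node: str, nodes: List[str] = GLOBAL_NODES) -> str:
    """Join with a sharded table on that table's node, using the local copy."""
    if sharded_table_node not in nodes:
        raise ValueError(f"{sharded_table_node} holds no copy of the global table")
    return sharded_table_node


print(route_global_write())      # ['sh1', 'sh2']
print(route_global_read())       # 'sh1' or 'sh2'
print(route_global_join("sh2"))  # sh2
```

The trade-off: each write costs one statement per shard, which is why global tables suit small, rarely changing data.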

Edit the configuration file

vim schema.xml

<table name="t_area" primaryKey="id" type="global" dataNode="sh1,sh2" />

Prepare the backend tables

mysql -S /data/3307/mysql.sock -e "use taobao;create table t_area (id int not null primary key auto_increment,name varchar(20) not null);"

mysql -S /data/3308/mysql.sock -e "use taobao;create table t_area (id int not null primary key auto_increment,name varchar(20) not null);"

Test

Restart MyCAT

mycat restart

Test:

mysql -uroot -p123456 -h10.0.0.52 -P8066

use TESTDB

insert into t_area(id,name) values(1,'a');

insert into t_area(id,name) values(2,'b');

insert into t_area(id,name) values(3,'c');

insert into t_area(id,name) values(4,'d');

Query each backend node

mysql -S /data/3307/mysql.sock -e "use taobao;select * from t_area;"

mysql -S /data/3308/mysql.sock -e "use taobao;select * from t_area;"
