LVS+Keepalived High-Availability Deployment Notes (Master-Master and Master-Backup Modes)

Posted jians


I. Deploying LVS+Keepalived in master-backup (hot standby) mode

1) Environment

    LVS_Keepalived_Master    182.148.15.237
    LVS_Keepalived_Backup    182.148.15.236
    Real_Server1             182.148.15.233
    Real_Server2             182.148.15.238
    VIP                      182.148.15.239

All machines run CentOS 6.8.

Important: each Director Server and each Real Server must have a NIC on the same physical network segment. Otherwise LVS forwarding fails, and a remote `telnet <VIP> <port>` reports: "telnet: connect to address *.*.*.*: No route to host"
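The same-segment requirement can be checked with a little address arithmetic before any LVS component is installed. The sketch below is only an illustration: the function names `ip_to_int` and `same_subnet` are made up for this example, and the netmask 255.255.255.224 is taken from the `ifconfig` output recorded later in these notes.

```shell
#!/bin/sh
# Hypothetical helpers (not part of LVS or keepalived).

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    old_ifs=$IFS; IFS=.
    set -- $1            # split the address into its four octets
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed (exit 0) when both addresses are in the same network for the given mask.
same_subnet() {
    a=$(ip_to_int "$1"); b=$(ip_to_int "$2"); m=$(ip_to_int "$3")
    [ $(( a & m )) -eq $(( b & m )) ]
}
```

For example, `same_subnet 182.148.15.237 182.148.15.233 255.255.255.224 && echo "same segment"` compares the master director with Real_Server1. This only checks addressing; it cannot prove that the two NICs share a physical segment.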

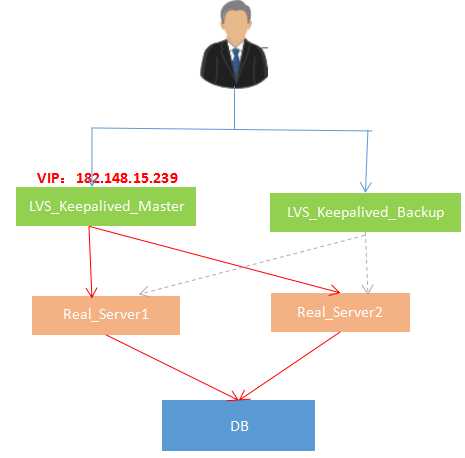

基本的网络拓扑图如下:

2) Installing and configuring LVS and Keepalived on LVS_Keepalived_Master and LVS_Keepalived_Backup:


1) Disable SELinux and configure the firewall (on both LVS_Keepalived_Master and LVS_Keepalived_Backup)

    [root@LVS_Keepalived_Master ~]# vim /etc/sysconfig/selinux
    #SELINUX=enforcing          # comment out
    #SELINUXTYPE=targeted       # comment out
    SELINUX=disabled            # add

    [root@LVS_Keepalived_Master ~]# setenforce 0

`setenforce 0` relaxes SELinux for the current boot only; the file change above takes effect permanently after a reboot.

In the rules below, 182.148.15.0/24 is the servers' public network and 192.168.1.0/24 is their private network. The multicast (VRRP) rules are essential: without them the VIP cannot move between MASTER and BACKUP when one fails, nor move back after recovery.

    [root@LVS_Keepalived_Master ~]# vim /etc/sysconfig/iptables
    .......
    # allow traffic to the VRRP multicast address
    -A INPUT -s 182.148.15.0/24 -d 224.0.0.18 -j ACCEPT
    -A INPUT -s 192.168.1.0/24 -d 224.0.0.18 -j ACCEPT
    # allow VRRP (Virtual Router Redundancy Protocol) traffic
    -A INPUT -s 182.148.15.0/24 -p vrrp -j ACCEPT
    -A INPUT -s 192.168.1.0/24 -p vrrp -j ACCEPT
    -A INPUT -m state --state NEW -m tcp -p tcp --dport 80 -j ACCEPT

    [root@LVS_Keepalived_Master ~]# /etc/init.d/iptables restart

2) Install LVS (on both LVS_Keepalived_Master and LVS_Keepalived_Backup)

Install the required packages:

    [root@LVS_Keepalived_Master ~]# yum install -y libnl* popt*

Check that the ipvs kernel modules are available:

    [root@LVS_Keepalived_Master src]# modprobe -l |grep ipvs

Download ipvsadm:

    [root@LVS_Keepalived_Master ~]# cd /usr/local/src/
    [root@LVS_Keepalived_Master src]# wget http://www.linuxvirtualserver.org/software/kernel-2.6/ipvsadm-1.26.tar.gz

Unpack and build it (the kernel source directory in the `ln -s` command must match the kernel installed on your machine):

    [root@LVS_Keepalived_Master src]# ln -s /usr/src/kernels/2.6.32-431.5.1.el6.x86_64/ /usr/src/linux
    [root@LVS_Keepalived_Master src]# tar -zxvf ipvsadm-1.26.tar.gz
    [root@LVS_Keepalived_Master src]# cd ipvsadm-1.26
    [root@LVS_Keepalived_Master ipvsadm-1.26]# make && make install

LVS is now installed. List the current (still empty) LVS cluster:

    [root@LVS_Keepalived_Master ipvsadm-1.26]# ipvsadm -L -n
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn

3) Write the LVS start-up script /etc/init.d/realserver (on both Real_Server1 and Real_Server2; the script is identical on the two machines)

    [root@Real_Server1 ~]# vim /etc/init.d/realserver
    #!/bin/sh
    VIP=182.148.15.239
    . /etc/rc.d/init.d/functions

    case "$1" in
    # Suppress ARP replies for the VIP and bind the VIP to the loopback interface
    start)
        /sbin/ifconfig lo down
        /sbin/ifconfig lo up
        echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce
        /sbin/sysctl -p >/dev/null 2>&1
        # Bind the VIP to a loopback alias with a /32 mask so that this host
        # accepts packets the Director forwards to the VIP
        /sbin/ifconfig lo:0 $VIP netmask 255.255.255.255 up
        /sbin/route add -host $VIP dev lo:0
        echo "LVS-DR real server starts successfully."
        ;;
    stop)
        /sbin/ifconfig lo:0 down
        /sbin/route del $VIP >/dev/null 2>&1
        # Restore the kernel's default ARP behaviour
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce
        echo "LVS-DR real server stopped."
        ;;
    status)
        isLoOn=`/sbin/ifconfig lo:0 | grep "$VIP"`
        isRoOn=`/bin/netstat -rn | grep "$VIP"`
        if [ "$isLoOn" = "" -a "$isRoOn" = "" ]; then
            echo "LVS-DR real server is not running."
        else
            echo "LVS-DR real server is running."
        fi
        exit 3
        ;;
    *)
        echo "Usage: $0 {start|stop|status}"
        exit 1
    esac
    exit 0

Make the script executable and run it at boot:

    [root@Real_Server1 ~]# chmod +x /etc/init.d/realserver
    [root@Real_Server1 ~]# echo "/etc/init.d/realserver start" >> /etc/rc.d/rc.local

Start the script. (Note: whenever either real server is rebooted, make sure `service realserver start` has run, i.e. that the VIP is bound to lo:0; otherwise LVS forwarding fails.)

    [root@Real_Server1 ~]# service realserver start
    LVS-DR real server starts successfully.

On Real_Server1, the VIP is now bound to the loopback interface:

    [root@Real_Server1 ~]# ifconfig
    eth0      Link encap:Ethernet  HWaddr 52:54:00:D1:27:75
              inet addr:182.148.15.233  Bcast:182.148.15.255  Mask:255.255.255.224
              inet6 addr: fe80::5054:ff:fed1:2775/64 Scope:Link
              UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
              RX packets:309741 errors:0 dropped:0 overruns:0 frame:0
              TX packets:27993954 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:1000
              RX bytes:37897512 (36.1 MiB)  TX bytes:23438654329 (21.8 GiB)

    lo        Link encap:Local Loopback
              inet addr:127.0.0.1  Mask:255.0.0.0
              inet6 addr: ::1/128 Scope:Host
              UP LOOPBACK RUNNING  MTU:65536  Metric:1
              RX packets:0 errors:0 dropped:0 overruns:0 frame:0
              TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:0
              RX bytes:0 (0.0 b)  TX bytes:0 (0.0 b)

    lo:0      Link encap:Local Loopback
              inet addr:182.148.15.239  Mask:255.255.255.255
              UP LOOPBACK RUNNING  MTU:65536  Metric:1

4) Install Keepalived (on both LVS_Keepalived_Master and LVS_Keepalived_Backup)

    [root@LVS_Keepalived_Master ~]# yum install -y openssl-devel
    [root@LVS_Keepalived_Master ~]# cd /usr/local/src/
    [root@LVS_Keepalived_Master src]# wget http://www.keepalived.org/software/keepalived-1.3.5.tar.gz
    [root@LVS_Keepalived_Master src]# tar -zvxf keepalived-1.3.5.tar.gz
    [root@LVS_Keepalived_Master src]# cd keepalived-1.3.5
    [root@LVS_Keepalived_Master keepalived-1.3.5]# ./configure --prefix=/usr/local/keepalived
    [root@LVS_Keepalived_Master keepalived-1.3.5]# make && make install

    [root@LVS_Keepalived_Master keepalived-1.3.5]# cp /usr/local/src/keepalived-1.3.5/keepalived/etc/init.d/keepalived /etc/rc.d/init.d/
    [root@LVS_Keepalived_Master keepalived-1.3.5]# cp /usr/local/keepalived/etc/sysconfig/keepalived /etc/sysconfig/
    [root@LVS_Keepalived_Master keepalived-1.3.5]# mkdir /etc/keepalived/
    [root@LVS_Keepalived_Master keepalived-1.3.5]# cp /usr/local/keepalived/etc/keepalived/keepalived.conf /etc/keepalived/
    [root@LVS_Keepalived_Master keepalived-1.3.5]# cp /usr/local/keepalived/sbin/keepalived /usr/sbin/
    [root@LVS_Keepalived_Master keepalived-1.3.5]# echo "/etc/init.d/keepalived start" >> /etc/rc.local

    [root@LVS_Keepalived_Master keepalived-1.3.5]# chmod +x /etc/rc.d/init.d/keepalived      # make it executable
    [root@LVS_Keepalived_Master keepalived-1.3.5]# chkconfig keepalived on                   # start at boot
    [root@LVS_Keepalived_Master keepalived-1.3.5]# service keepalived start                  # start
    [root@LVS_Keepalived_Master keepalived-1.3.5]# service keepalived stop                   # stop
    [root@LVS_Keepalived_Master keepalived-1.3.5]# service keepalived restart                # restart

5) Configure LVS+Keepalived

First enable IP forwarding on both LVS_Keepalived_Master and LVS_Keepalived_Backup:

    [root@LVS_Keepalived_Master ~]# echo "1" > /proc/sys/net/ipv4/ip_forward

keepalived.conf on LVS_Keepalived_Master:

    [root@LVS_Keepalived_Master ~]# vim /etc/keepalived/keepalived.conf
    ! Configuration File for keepalived

    global_defs {
        router_id LVS_Master
    }

    vrrp_instance VI_1 {
        state MASTER              # initial state; the actual role is decided by priority. Differs on the backup node
        interface eth0            # interface that carries the VIP
        virtual_router_id 51      # VRID; nodes with the same VRID form one group, and it determines the virtual MAC address
        priority 100              # priority; set to 90 on the other node
        advert_int 1              # VRRP advertisement interval, in seconds
        authentication {
            auth_type PASS        # authentication type: PASS or AH
            auth_pass 1111        # authentication password
        }
        virtual_ipaddress {
            182.148.15.239        # VIP
        }
    }

    virtual_server 182.148.15.239 80 {
        delay_loop 6              # health-check polling interval, in seconds
        lb_algo wrr               # scheduling algorithm (weighted round robin); one of rr|wrr|lc|wlc|lblc|sh|dh
        lb_kind DR                # forwarding mode: NAT|DR|TUN. DR requires the director to have a NIC on the same segment as the real servers
        #nat_mask 255.255.255.0
        persistence_timeout 50    # session persistence time, in seconds
        protocol TCP              # protocol of the virtual service

        # Real server settings; 80 is the service port
        real_server 182.148.15.233 80 {
            weight 3              # weight
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
        real_server 182.148.15.238 80 {
            weight 3
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
    }

Start keepalived:

    [root@LVS_Keepalived_Master ~]# /etc/init.d/keepalived start
    Starting keepalived:                                       [  OK  ]

    [root@LVS_Keepalived_Master ~]# ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 52:54:00:68:dc:b6 brd ff:ff:ff:ff:ff:ff
        inet 182.148.15.237/27 brd 182.148.15.255 scope global eth0
        inet 182.148.15.239/32 scope global eth0
        inet6 fe80::5054:ff:fe68:dcb6/64 scope link
           valid_lft forever preferred_lft forever

Note the change on the NIC: the VIP (the `/32` entry) is now bound to eth0 on the master director. The LVS cluster state now lists the two Real Servers together with the scheduling algorithm, weights and so on. ActiveConn is the number of active connections on each Real Server.

    [root@LVS_Keepalived_Master ~]# ipvsadm -ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  182.148.15.239:80 wrr persistent 50
      -> 182.148.15.233:80            Route   3      0          0
      -> 182.148.15.238:80            Route   3      0          0

keepalived.conf on LVS_Keepalived_Backup:

    [root@LVS_Keepalived_Backup ~]# vim /etc/keepalived/keepalived.conf
    ! Configuration File for keepalived

    global_defs {
        router_id LVS_Backup
    }

    vrrp_instance VI_1 {
        state BACKUP
        interface eth0
        virtual_router_id 51
        priority 90
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            182.148.15.239
        }
    }

    virtual_server 182.148.15.239 80 {
        delay_loop 6
        lb_algo wrr
        lb_kind DR
        persistence_timeout 50
        protocol TCP

        real_server 182.148.15.233 80 {
            weight 3
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
        real_server 182.148.15.238 80 {
            weight 3
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
    }

    [root@LVS_Keepalived_Backup ~]# /etc/init.d/keepalived start
    Starting keepalived:                                       [  OK  ]

On LVS_Keepalived_Backup, the keepalived-managed VIP (the `/32` entry) is absent: by default it lives on LVS_Keepalived_Master and only floats over to the backup when LVS_Keepalived_Master fails. The `/27` address on the `eth0:0` alias in this capture is not created by the configuration above; it appears to be a static alias left on the recorded host.

    [root@LVS_Keepalived_Backup ~]# ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 52:54:00:7c:b8:f0 brd ff:ff:ff:ff:ff:ff
        inet 182.148.15.236/27 brd 182.148.15.255 scope global eth0
        inet 182.148.15.239/27 brd 182.148.15.255 scope global secondary eth0:0
        inet6 fe80::5054:ff:fe7c:b8f0/64 scope link
           valid_lft forever preferred_lft forever

    [root@LVS_Keepalived_Backup ~]# ipvsadm -L -n
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  182.148.15.239:80 wrr persistent 50
      -> 182.148.15.233:80            Route   3      0          0
      -> 182.148.15.238:80            Route   3      0          0
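Comparing the `ipvsadm` tables on the two directors by eye gets tedious. As a rough sketch, the helper below extracts the real servers registered under one virtual service from `ipvsadm -L -n` output. The function name `list_real_servers` is hypothetical, and the parsing assumes the column layout shown in the captures in these notes.

```shell
#!/bin/sh
# Hypothetical helper: print the real servers under one virtual service.
# Reads `ipvsadm -L -n` output on stdin; $1 is the virtual service as VIP:port.
list_real_servers() {
    awk -v vs="$1" '
        # A line starting with TCP or UDP opens a new virtual service.
        $1 == "TCP" || $1 == "UDP" { in_vs = ($2 == vs); next }
        # Lines starting with "->" are real servers of the current service.
        in_vs && $1 == "->" && $2 != "RemoteAddress:Port" { print $2, "weight=" $4 }
    '
}
```

A possible use is `ipvsadm -L -n | list_real_servers 182.148.15.239:80` on each director, then comparing the two results.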

3) Operations on the two back-end Real Servers


Set up nginx on both Real Servers (installation and configuration omitted here). Both Real Servers serve the two domains www.wangshibo.com and www.guohuihui.com, and both domains must be reachable from LVS_Keepalived_Master and LVS_Keepalived_Backup:

    [root@LVS_Keepalived_Master ~]# curl http://www.wangshibo.com
    this is page of Real_Server2:182.148.15.238 www.wangshibo.com
    [root@LVS_Keepalived_Master ~]# curl http://www.guohuihui.com
    this is page of Real_Server2:182.148.15.238 www.guohuihui.com
    [root@LVS_Keepalived_Backup ~]# curl http://www.wangshibo.com
    this is page of Real_Server2:182.148.15.238 www.wangshibo.com
    [root@LVS_Keepalived_Backup ~]# curl http://www.guohuihui.com
    this is page of Real_Server2:182.148.15.238 www.guohuihui.com

Stop nginx on 182.148.15.238 (Real_Server2); requests for both domains then go to Real_Server1:

    [root@Real_Server2 ~]# /usr/local/nginx/sbin/nginx -s stop
    [root@Real_Server2 ~]# lsof -i:80
    [root@Real_Server2 ~]#

Access the two domains again from LVS_Keepalived_Master and LVS_Keepalived_Backup; the traffic is now balanced to Real_Server1:

    [root@LVS_Keepalived_Master ~]# curl http://www.wangshibo.com
    this is page of Real_Server1:182.148.15.233 www.wangshibo.com
    [root@LVS_Keepalived_Master ~]# curl http://www.guohuihui.com
    this is page of Real_Server1:182.148.15.233 www.guohuihui.com
    [root@LVS_Keepalived_Backup ~]# curl http://www.wangshibo.com
    this is page of Real_Server1:182.148.15.233 www.wangshibo.com
    [root@LVS_Keepalived_Backup ~]# curl http://www.guohuihui.com
    this is page of Real_Server1:182.148.15.233 www.guohuihui.com

Finally, restrict port 80 on both Real Servers with iptables so that it is open only to the VIP in front of them. The rule is reproduced as recorded; in DR mode client packets normally reach a real server with the client's address as source and the VIP as destination, so verify that the match direction suits your environment.

    [root@Real_Server1 ~]# vim /etc/sysconfig/iptables
    ......
    -A INPUT -s 182.148.15.239 -m state --state NEW -m tcp -p tcp --dport 80 -j ACCEPT
    [root@Real_Server1 ~]# /etc/init.d/iptables restart
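When repeating the curl checks many times, it helps to count which back end answered. The sketch below tallies responses by the `Real_ServerN:IP` token that the test pages in these notes print; the function name `tally_backends` is hypothetical, and the page format is specific to this environment.

```shell
#!/bin/sh
# Hypothetical helper: count responses per back end.
# Reads one response per line on stdin and prints "<count> <Real_ServerN:IP>".
tally_backends() {
    awk '{
            # Find the token that names the real server, e.g. Real_Server1:182.148.15.233
            for (i = 1; i <= NF; i++)
                if ($i ~ /^Real_Server[0-9]+:/) count[$i]++
         }
         END { for (k in count) print count[k], k }' | sort -k2
}
```

One way to feed it: `for i in 1 2 3 4 5; do curl -s http://www.wangshibo.com; echo; done | tally_backends`. Because the virtual server is configured with `persistence_timeout 50`, a single client is expected to stay on one real server for the duration of the persistence window, so an even split only shows up across different clients.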

4) Testing

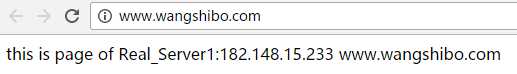

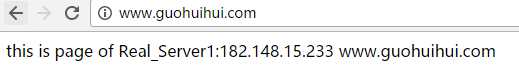

Point the test domains www.wangshibo.com and www.guohuihui.com at the VIP 182.148.15.239; both then load normally in a browser.


1) Test the LVS function. (The LVS section of the Keepalived configuration above includes health checks: when a back-end server fails it is removed from the LVS cluster automatically, and it is added back automatically once it recovers.)

First look at the current LVS cluster; port 80 on both Real Servers is healthy:

    [root@LVS_Keepalived_Master ~]# ipvsadm -L -n
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  182.148.15.239:80 wrr persistent 50
      -> 182.148.15.233:80            Route   3      0          0
      -> 182.148.15.238:80            Route   3      0          0

Now stop one Real Server, for example Real_Server2:

    [root@Real_Server2 ~]# /usr/local/nginx/sbin/nginx -s stop

After a short while, look at the LVS cluster again; Real_Server2 has been removed:

    [root@LVS_Keepalived_Master ~]# ipvsadm -L -n
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  182.148.15.239:80 wrr persistent 50
      -> 182.148.15.233:80            Route   3      0          0

Finally bring port 80 on Real_Server2 back up; it is added to the LVS cluster again:

    [root@Real_Server2 ~]# /usr/local/nginx/sbin/nginx

    [root@LVS_Keepalived_Master ~]# ipvsadm -L -n
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  182.148.15.239:80 wrr persistent 50
      -> 182.148.15.233:80            Route   3      0          0
      -> 182.148.15.238:80            Route   3      0          0

Throughout this test, access to http://www.wangshibo.com and http://www.guohuihui.com is unaffected.

2) Test Keepalived heartbeat failover

By default the VIP is on LVS_Keepalived_Master (the keepalived-managed address is the `/32` entry):

    [root@LVS_Keepalived_Master ~]# ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 52:54:00:68:dc:b6 brd ff:ff:ff:ff:ff:ff
        inet 182.148.15.237/27 brd 182.148.15.255 scope global eth0
        inet 182.148.15.239/32 scope global eth0
        inet 182.148.15.239/27 brd 182.148.15.255 scope global secondary eth0:0
        inet6 fe80::5054:ff:fe68:dcb6/64 scope link
           valid_lft forever preferred_lft forever
Then stop keepalived on LVS_Keepalived_Master; the VIP (the `/32` entry) moves to LVS_Keepalived_Backup.

    [root@LVS_Keepalived_Master ~]# /etc/init.d/keepalived stop
    Stopping keepalived:                                       [  OK  ]
    [root@LVS_Keepalived_Master ~]# ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 52:54:00:68:dc:b6 brd ff:ff:ff:ff:ff:ff
        inet 182.148.15.237/27 brd 182.148.15.255 scope global eth0
        inet 182.148.15.239/27 brd 182.148.15.255 scope global secondary eth0:0
        inet6 fe80::5054:ff:fe68:dcb6/64 scope link
           valid_lft forever preferred_lft forever

Check the system log on LVS_Keepalived_Master for the messages recorded around the switchover:

    [root@LVS_Keepalived_Master ~]# tail -f /var/log/messages
    .............
    May  8 10:19:36 Haproxy_Keepalived_Master Keepalived_healthcheckers[20875]: TCP connection to [182.148.15.233]:80 failed.
    May  8 10:19:39 Haproxy_Keepalived_Master Keepalived_healthcheckers[20875]: TCP connection to [182.148.15.233]:80 failed.
    May  8 10:19:39 Haproxy_Keepalived_Master Keepalived_healthcheckers[20875]: Check on service [182.148.15.233]:80 failed after 1 retry.
    May  8 10:19:39 Haproxy_Keepalived_Master Keepalived_healthcheckers[20875]: Removing service [182.148.15.233]:80 from VS [182.148.15.239]:80

On LVS_Keepalived_Backup, the `/32` VIP entry is now present:

    [root@LVS_Keepalived_Backup ~]# ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 52:54:00:7c:b8:f0 brd ff:ff:ff:ff:ff:ff
        inet 182.148.15.236/27 brd 182.148.15.255 scope global eth0
        inet 182.148.15.239/32 scope global eth0
        inet 182.148.15.239/27 brd 182.148.15.255 scope global secondary eth0:0
        inet6 fe80::5054:ff:fe7c:b8f0/64 scope link
           valid_lft forever preferred_lft forever

Next, start keepalived on LVS_Keepalived_Master again; the VIP moves back:

    [root@LVS_Keepalived_Master ~]# /etc/init.d/keepalived start
    Starting keepalived:                                       [  OK  ]
    [root@LVS_Keepalived_Master ~]# ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 52:54:00:68:dc:b6 brd ff:ff:ff:ff:ff:ff
        inet 182.148.15.237/27 brd 182.148.15.255 scope global eth0
        inet 182.148.15.239/32 scope global eth0
        inet 182.148.15.239/27 brd 182.148.15.255 scope global secondary eth0:0
        inet6 fe80::5054:ff:fe68:dcb6/64 scope link
           valid_lft forever preferred_lft forever

The system log on LVS_Keepalived_Master shows the VIP being taken back:

    [root@LVS_Keepalived_Master ~]# tail -f /var/log/messages
    .............
    May  8 10:23:12 Haproxy_Keepalived_Master Keepalived_vrrp[5863]: Sending gratuitous ARP on eth0 for 182.148.15.239
    May  8 10:23:12 Haproxy_Keepalived_Master Keepalived_vrrp[5863]: VRRP_Instance(VI_1) Sending/queueing gratuitous ARPs on eth0 for 182.148.15.239
    May  8 10:23:12 Haproxy_Keepalived_Master Keepalived_vrrp[5863]: Sending gratuitous ARP on eth0 for 182.148.15.239
    May  8 10:23:12 Haproxy_Keepalived_Master Keepalived_vrrp[5863]: Sending gratuitous ARP on eth0 for 182.148.15.239
    May  8 10:23:12 Haproxy_Keepalived_Master Keepalived_vrrp[5863]: Sending gratuitous ARP on eth0 for 182.148.15.239
    May  8 10:23:12 Haproxy_Keepalived_Master Keepalived_vrrp[5863]: Sending gratuitous ARP on eth0 for 182.148.15.239
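During a failover test like the one above, the question on each director is simply whether it currently holds the VIP. A minimal sketch, assuming the keepalived-managed address shows up as an `inet` line with a `/32` prefix as in the captures here; the function name `has_vip` is made up for this example.

```shell
#!/bin/sh
# Hypothetical helper: succeed when the given address/prefix
# (e.g. 182.148.15.239/32) appears as an IPv4 address in
# `ip addr` output read from stdin.
has_vip() {
    awk -v vip="$1" '
        $1 == "inet" && $2 == vip { found = 1 }
        END { exit !found }
    '
}
```

For instance, `ip addr show eth0 | has_vip 182.148.15.239/32 && echo "this node holds the VIP"` can be run on both directors before and after stopping keepalived on the master.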

II. Deploying LVS+Keepalived in master-master (dual-active hot standby) mode


Compared with the master-backup setup, the master-master setup differs only in the following:
1) The LVS load-balancing layer needs two VIPs, for example 182.148.15.239 and 182.148.15.235.
2) The back-end real servers must bind both VIPs to the loopback interface lo.
3) The keepalived.conf configuration differs from the master-backup one above.

The master-master configuration in detail:

1) Write the LVS start-up scripts (on both Real_Server1 and Real_Server2; the scripts are identical on the two machines)

Because each real server has to bind two VIPs to the loopback interface (one on lo:0, one on lo:1), two start-up scripts are needed.

    [root@Real_Server1 ~]# vim /etc/init.d/realserver1
    #!/bin/sh
    VIP=182.148.15.239
    . /etc/rc.d/init.d/functions

    case "$1" in
    start)
        /sbin/ifconfig lo down
        /sbin/ifconfig lo up
        echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce
        /sbin/sysctl -p >/dev/null 2>&1
        /sbin/ifconfig lo:0 $VIP netmask 255.255.255.255 up
        /sbin/route add -host $VIP dev lo:0
        echo "LVS-DR real server starts successfully."
        ;;
    stop)
        /sbin/ifconfig lo:0 down
        /sbin/route del $VIP >/dev/null 2>&1
        # Restore the kernel's default ARP behaviour
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce
        echo "LVS-DR real server stopped."
        ;;
    status)
        isLoOn=`/sbin/ifconfig lo:0 | grep "$VIP"`
        isRoOn=`/bin/netstat -rn | grep "$VIP"`
        if [ "$isLoOn" = "" -a "$isRoOn" = "" ]; then
            echo "LVS-DR real server is not running."
        else
            echo "LVS-DR real server is running."
        fi
        exit 3
        ;;
    *)
        echo "Usage: $0 {start|stop|status}"
        exit 1
    esac
    exit 0

    [root@Real_Server1 ~]# vim /etc/init.d/realserver2
    #!/bin/sh
    VIP=182.148.15.235
    . /etc/rc.d/init.d/functions

    case "$1" in
    start)
        /sbin/ifconfig lo down
        /sbin/ifconfig lo up
        echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce
        /sbin/sysctl -p >/dev/null 2>&1
        /sbin/ifconfig lo:1 $VIP netmask 255.255.255.255 up
        /sbin/route add -host $VIP dev lo:1
        echo "LVS-DR real server starts successfully."
        ;;
    stop)
        /sbin/ifconfig lo:1 down
        /sbin/route del $VIP >/dev/null 2>&1
        # Restore the kernel's default ARP behaviour
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce
        echo "LVS-DR real server stopped."
        ;;
    status)
        isLoOn=`/sbin/ifconfig lo:1 | grep "$VIP"`
        isRoOn=`/bin/netstat -rn | grep "$VIP"`
        if [ "$isLoOn" = "" -a "$isRoOn" = "" ]; then
            echo "LVS-DR real server is not running."
        else
            echo "LVS-DR real server is running."
        fi
        exit 3
        ;;
    *)
        echo "Usage: $0 {start|stop|status}"
        exit 1
    esac
    exit 0

Make the scripts executable and run them at boot:

    [root@Real_Server1 ~]# chmod +x /etc/init.d/realserver1
    [root@Real_Server1 ~]# chmod +x /etc/init.d/realserver2
    [root@Real_Server1 ~]# echo "/etc/init.d/realserver1 start" >> /etc/rc.d/rc.local
    [root@Real_Server1 ~]# echo "/etc/init.d/realserver2 start" >> /etc/rc.d/rc.local

Start the scripts:

    [root@Real_Server1 ~]# service realserver1 start
    LVS-DR real server starts successfully.

    [root@Real_Server1 ~]# service realserver2 start
    LVS-DR real server starts successfully.
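The real server scripts rely on `arp_ignore=1` and `arp_announce=2` being set on both `lo` and `all`, so that only the director answers ARP for the VIPs. The sketch below verifies those four values after the scripts have run. The function name `check_arp_settings` is hypothetical; the optional directory argument exists only so the check can be tried against a copy of the files, and it defaults to the real `/proc` path.

```shell
#!/bin/sh
# Hypothetical helper: verify the ARP sysctls used by the real server scripts.
# $1 (optional) is the sysctl directory; defaults to /proc/sys/net/ipv4/conf.
check_arp_settings() {
    base=${1:-/proc/sys/net/ipv4/conf}
    rc=0
    for iface in lo all; do
        for pair in arp_ignore:1 arp_announce:2; do
            key=${pair%%:*}      # sysctl name
            want=${pair##*:}     # expected value
            got=$(cat "$base/$iface/$key" 2>/dev/null)
            if [ "$got" != "$want" ]; then
                echo "$iface/$key is '${got}', expected $want"
                rc=1
            fi
        done
    done
    return $rc
}
```

Running `check_arp_settings && echo "ARP settings OK"` on each real server prints one line per mismatching value and returns non-zero if any is wrong.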

On Real_Server1, both VIPs are now bound to the loopback interface:

    [root@Real_Server1 ~]# ifconfig
    eth0      Link encap:Ethernet  HWaddr 52:54:00:D1:27:75
              inet addr:182.148.15.233  Bcast:182.148.15.255  Mask:255.255.255.224
              inet6 addr: fe80::5054:ff:fed1:2775/64 Scope:Link
              UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
              RX packets:309741 errors:0 dropped:0 overruns:0 frame:0
              TX packets:27993954 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:1000
              RX bytes:37897512 (36.1 MiB)  TX bytes:23438654329 (21.8 GiB)

    lo        Link encap:Local Loopback
              inet addr:127.0.0.1  Mask:255.0.0.0
              inet6 addr: ::1/128 Scope:Host
              UP LOOPBACK RUNNING  MTU:65536  Metric:1
              RX packets:0 errors:0 dropped:0 overruns:0 frame:0
              TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:0
              RX bytes:0 (0.0 b)  TX bytes:0 (0.0 b)

    lo:0      Link encap:Local Loopback
              inet addr:182.148.15.239  Mask:255.255.255.255
              UP LOOPBACK RUNNING  MTU:65536  Metric:1

    lo:1      Link encap:Local Loopback
              inet addr:182.148.15.235  Mask:255.255.255.255
              UP LOOPBACK RUNNING  MTU:65536  Metric:1

2) keepalived.conf configuration

On LVS_Keepalived_Master, first enable IP forwarding, then edit keepalived.conf:

    [root@LVS_Keepalived_Master ~]# echo "1" > /proc/sys/net/ipv4/ip_forward
    [root@LVS_Keepalived_Master ~]# vim /etc/keepalived/keepalived.conf
    ! Configuration File for keepalived

    global_defs {
        router_id LVS_Master
    }

    vrrp_instance VI_1 {
        state MASTER
        interface eth0
        virtual_router_id 51
        priority 100
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            182.148.15.239
        }
    }

    vrrp_instance VI_2 {
        state BACKUP
        interface eth0
        virtual_router_id 52
        priority 90
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            182.148.15.235
        }
    }

    virtual_server 182.148.15.239 80 {
        delay_loop 6
        lb_algo wrr
        lb_kind DR
        #nat_mask 255.255.255.0
        persistence_timeout 50
        protocol TCP

        real_server 182.148.15.233 80 {
            weight 3
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
        real_server 182.148.15.238 80 {
            weight 3
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
    }

    virtual_server 182.148.15.235 80 {
        delay_loop 6
        lb_algo wrr
        lb_kind DR
        #nat_mask 255.255.255.0
        persistence_timeout 50
        protocol TCP

        real_server 182.148.15.233 80 {
            weight 3
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
        real_server 182.148.15.238 80 {
            weight 3
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
    }

keepalived.conf on LVS_Keepalived_Backup (the roles of the two VRRP instances are mirrored; note that the `state` keywords are written in upper case):

    [root@LVS_Keepalived_Backup ~]# vim /etc/keepalived/keepalived.conf
    ! Configuration File for keepalived

    global_defs {
        router_id LVS_Backup
    }

    vrrp_instance VI_1 {
        state BACKUP
        interface eth0
        virtual_router_id 51
        priority 90
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            182.148.15.239
        }
    }

    vrrp_instance VI_2 {
        state MASTER
        interface eth0
        virtual_router_id 52
        priority 100
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            182.148.15.235
        }
    }

    virtual_server 182.148.15.239 80 {
        delay_loop 6
        lb_algo wrr
        lb_kind DR
        #nat_mask 255.255.255.0
        persistence_timeout 50
        protocol TCP

        real_server 182.148.15.233 80 {
            weight 3
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
        real_server 182.148.15.238 80 {
            weight 3
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
    }

    virtual_server 182.148.15.235 80 {
        delay_loop 6
        lb_algo wrr
        lb_kind DR
        #nat_mask 255.255.255.0
        persistence_timeout 50
        protocol TCP

        real_server 182.148.15.233 80 {
            weight 3
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
        real_server 182.148.15.238 80 {
            weight 3
            TCP_CHECK {
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
                connect_port 80
            }
        }
    }

The remaining verification steps are the same as in the master-backup mode above.
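In the master-master layout each VRRP instance should be MASTER with the higher priority on exactly one director, which is easy to get wrong when copying the file between hosts. As a rough aid, the sketch below summarises the `vrrp_instance` blocks of a keepalived.conf so the two files can be compared side by side. The function name `list_vrrp_instances` is hypothetical, and the parsing assumes that `priority` comes after `state` and `virtual_router_id` inside each block, as in the configurations above.

```shell
#!/bin/sh
# Hypothetical helper: summarise vrrp_instance blocks from a keepalived.conf
# read on stdin, one line per instance: "<name> <state> <vrid> <priority>".
list_vrrp_instances() {
    awk '
        $1 == "vrrp_instance"     { name = $2 }
        $1 == "state"             { state = $2 }
        $1 == "virtual_router_id" { vrid = $2 }
        # priority is the last of the three in these configs, so print here
        $1 == "priority"          { print name, state, vrid, $2 }
    '
}
```

Running `list_vrrp_instances < /etc/keepalived/keepalived.conf` on both directors should show the same instance names and VRIDs, with the state and priority columns swapped between the two hosts.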
