Integrating Log4j with Flume

Posted by wuning


1. Environment

CDH 5.16.1

Spark 2.3.0 cloudera4

Kafka 2.1.0+kafka4.0.0

2. Log4j -> Flume

2.1 Generating logs with Log4j

import org.apache.log4j.Logger;

/**
 * @ClassName LoggerGenerator
 * @Author wuning
 * @Date: 2020/2/3 10:54
 * @Description: Simulates log output
 */
public class LoggerGenerator {

    private static final Logger logger = Logger.getLogger(LoggerGenerator.class.getName());

    public static void main(String[] args) throws InterruptedException {
        int index = 0;
        while (true) {
            // Emit one INFO event per second
            Thread.sleep(1000);
            logger.info("value : " + index++);
        }
    }
}

log4j.properties

#log4j.rootLogger=debug,stdout,info,debug,warn,error

log4j.rootLogger=info,stdout,flume

#console

log4j.appender.stdout=org.apache.log4j.ConsoleAppender

log4j.appender.stdout.layout=org.apache.log4j.PatternLayout

log4j.appender.stdout.layout.ConversionPattern= %d{yyyy-MM-dd HH:mm:ss,SSS} [%t] [%c] [%p] -%m%n

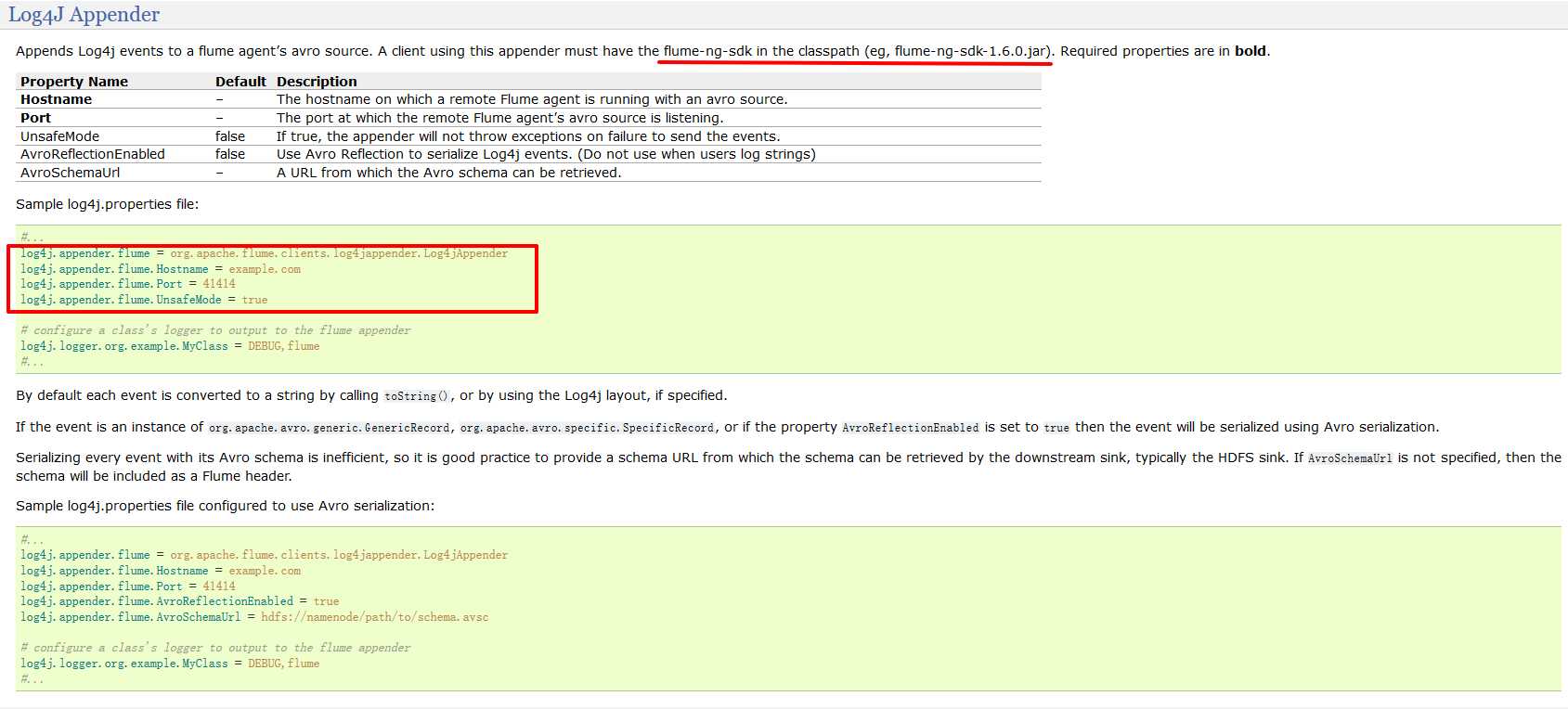

log4j.appender.flume = org.apache.flume.clients.log4jappender.Log4jAppender

log4j.appender.flume.Hostname = cdh03

log4j.appender.flume.Port = 9876

log4j.appender.flume.UnsafeMode = true

Note: the flume-ng-log4jappender JAR must be on the classpath, for example via this Maven dependency:

<dependency>
    <groupId>org.apache.flume.flume-ng-clients</groupId>
    <artifactId>flume-ng-log4jappender</artifactId>
    <version>1.6.0</version>
</dependency>

2.2 Collecting logs with Flume

Reference: http://flume.apache.org/releases/content/1.6.0/FlumeUserGuide.html#flume-sources

a1.sources = s1

a1.sinks = k1

a1.channels = c1

# Describe/configure the source

a1.sources.s1.type = avro

a1.sources.s1.bind = cdh03

a1.sources.s1.port = 9876

# Describe the sink

a1.sinks.k1.type = logger

# Use a channel which buffers events in memory

a1.channels.c1.type = memory

a1.channels.c1.capacity = 1000

a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel

a1.sources.s1.channels = c1

a1.sinks.k1.channel = c1
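The setup works only if the Log4j appender's Hostname and Port (cdh03, 9876) point at the same endpoint as the Avro source's bind and port. The sketch below shows one way to sanity-check the two files before starting the application. It uses only java.util.Properties; the class FlumeEndpointCheck and its methods are hypothetical helpers, not part of Log4j or Flume, and the property keys assume the agent and source names used above (a1, s1) and the appender name flume.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

// Hypothetical helper for illustration; not part of Log4j or Flume.
class FlumeEndpointCheck {

    // Parses key=value text such as log4j.properties or a Flume agent file.
    static Properties parse(String text) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props;
    }

    // True when the appender's Hostname/Port point at the Avro source's bind/port.
    // Assumes the appender is named "flume" and the source is a1.sources.s1.
    static boolean endpointsMatch(Properties log4j, Properties flume) {
        String appenderHost = log4j.getProperty("log4j.appender.flume.Hostname");
        String appenderPort = log4j.getProperty("log4j.appender.flume.Port");
        String sourceBind = flume.getProperty("a1.sources.s1.bind");
        String sourcePort = flume.getProperty("a1.sources.s1.port");
        if (appenderHost == null || appenderPort == null
                || sourceBind == null || sourcePort == null) {
            return false; // a required key is missing
        }
        // A source bound to 0.0.0.0 listens on every interface, so any host matches.
        boolean hostOk = sourceBind.trim().equals("0.0.0.0")
                || sourceBind.trim().equals(appenderHost.trim());
        return hostOk && sourcePort.trim().equals(appenderPort.trim());
    }

    public static void main(String[] args) {
        String log4jText = String.join("\n",
                "log4j.appender.flume.Hostname = cdh03",
                "log4j.appender.flume.Port = 9876");
        String flumeText = String.join("\n",
                "a1.sources.s1.bind = cdh03",
                "a1.sources.s1.port = 9876");
        System.out.println("endpoints match: "
                + endpointsMatch(parse(log4jText), parse(flumeText)));
    }
}
```

With the values from this post the check prints `endpoints match: true`. The comparison is purely textual, so a hostname alias or an IP address that resolves to the same machine would be reported as a mismatch.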