Paper Reading: R-CNN (V5) Paper Walkthrough

Posted by ireland


R-CNN paper: Rich feature hierarchies for accurate object detection and semantic segmentation

Authors: Ross Girshick, Jeff Donahue, Trevor Darrell, Jitendra Malik, UC Berkeley (University of California, Berkeley)

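The first author Ross Girshick's homepage, http://www.rossgirshick.info/, hosts many of his papers and code, including this paper's [code](https://github.com/rbgirshick/rcnn), slides, and poster. The amount of work and the results in this paper are genuinely impressive; the slides explain the method in detail, and the poster is striking.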

Keywords: accurate object detection, semantic segmentation

Citation: Girshick, R., Donahue, J., Darrell, T., Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation[C]. 2014 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2014.

Preface

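Drawing on [a scholar]'s introduction to R-CNN: OverFeat had already applied deep learning to object detection, but R-CNN was the first solution practical enough for industrial use. It arguably changed the main research direction of object detection, and the follow-up works Fast R-CNN, Faster R-CNN, and Mask R-CNN all build on R-CNN's approach. The first version appeared in November 2013, and five versions were released in total up to October 2014; the paper was published at CVPR 2014.

CVPR is short for the IEEE Conference on Computer Vision and Pattern Recognition, a top-tier conference in computer vision and pattern recognition organized by the IEEE. In recent years it has drawn about 1,500 attendees and accepted around 300 papers per year. Each year the conference has set workshop themes, and companies sponsor it in exchange for exhibition space at the venue. The three top conferences in the field are CVPR, ICCV, and ECCV.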

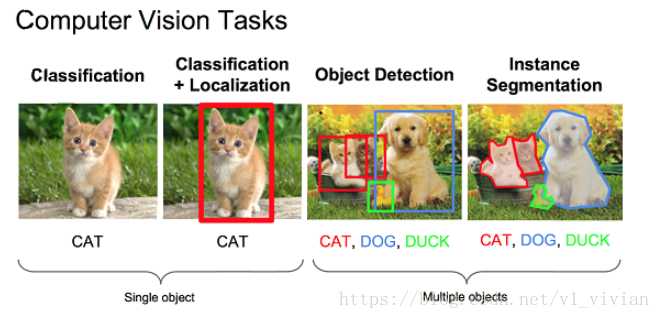

The (cited) figure distinguishes the following computer vision tasks:

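classify: recognize the object's category

localization: a single object; mark where it is

detection: multiple objects; mark each object's location and recognize its category

segmentation: segment the objects, i.e. label them at the pixel level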
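The four tasks differ mainly in what they output for one image. The Python sketch below is one hypothetical way to write those outputs down as types; the class names, the (x1, y1, x2, y2) box convention, and the confidence scores are illustrative assumptions, not a standard API. Localization is shown with a label as well, as in the common "classification plus localization" setting.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# Hypothetical box convention for this sketch: (x1, y1, x2, y2) in pixels.
Box = Tuple[int, int, int, int]


@dataclass
class ClassificationResult:
    label: str  # one category for the whole image


@dataclass
class LocalizationResult:
    label: str  # category of the single object
    box: Box    # where that one object is


@dataclass
class Detection:
    label: str
    box: Box
    score: float  # detector confidence (made-up values below)


@dataclass
class DetectionResult:
    # Any number of objects, each with its own box and category.
    detections: List[Detection] = field(default_factory=list)


@dataclass
class SegmentationResult:
    mask: List[List[int]]  # one class id per pixel (0 = background)


# Usage: one image containing a cat and a dog, described at each task's granularity.
cls = ClassificationResult("cat")
loc = LocalizationResult("cat", (10, 20, 110, 140))
det = DetectionResult([
    Detection("cat", (10, 20, 110, 140), 0.92),
    Detection("dog", (150, 30, 260, 200), 0.87),
])
seg = SegmentationResult([[0, 1, 1], [0, 2, 2]])  # toy 2x3 mask: 1 = cat, 2 = dog
print(len(det.detections), [d.label for d in det.detections])
```

Going down the list, the output gets richer: one label, then one label with one box, then a variable-length list of boxes, then a dense per-pixel map. R-CNN itself targets the detection and segmentation rows.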

0 Abstract

Object detection performance, as measured on the canonical PASCAL VOC dataset, has plateaued in the last few years. The best-performing methods are complex ensemble systems that typically combine multiple low-level image features with high-level context. In this paper, we propose a simple and scalable detection algorithm that improves mean average precision (mAP) by more than 30% relative to the previous best result on VOC 2012---achieving a mAP of 53.3%. Our approach combines two key insights: (1) one can apply high-capacity convolutional neural networks (CNNs) to bottom-up region proposals in order to localize and segment objects and (2) when labeled training data is scarce, supervised pre-training for an auxiliary task, followed by domain-specific fine-tuning, yields a significant performance boost. Since we combine region proposals with CNNs, we call our method R-CNN: Regions with CNN features. We also compare R-CNN to OverFeat, a recently proposed sliding-window detector based on a similar CNN architecture. We find that R-CNN outperforms OverFeat by a large margin on the 200-class ILSVRC2013 detection dataset. Source code for the complete system is available online.
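The abstract's first insight, applying a CNN to bottom-up region proposals, can be sketched at test time as: crop each proposed box, warp it to a fixed-size input, extract a feature vector, and score that vector with one linear classifier per class. The NumPy sketch below rests on loud assumptions: the proposals are hard-coded boxes (the paper obtains them with selective search), `toy_features` is a hypothetical stand-in for the CNN, and the weights are made up for illustration rather than being the per-class SVMs the paper trains on CNN features. The 227x227 warp size follows the paper's AlexNet-style input; the paper's context padding around each box is omitted.

```python
import numpy as np


def warp_region(image, box, size=227):
    """Crop an (x1, y1, x2, y2) box (inclusive pixel coords) and warp it to size x size.

    The aspect ratio is ignored, as in R-CNN's warping; nearest-neighbour sampling
    is used here only to keep the sketch dependency-free.
    """
    x1, y1, x2, y2 = box
    crop = image[y1:y2 + 1, x1:x2 + 1]
    h, w = crop.shape[:2]
    rows = np.arange(size) * h // size  # source row for each output row
    cols = np.arange(size) * w // size  # source column for each output column
    return crop[rows][:, cols]


def toy_features(patch):
    """Hypothetical stand-in for the CNN: the per-channel mean colour of the patch."""
    return patch.reshape(-1, patch.shape[-1]).mean(axis=0)


def score_proposals(image, proposals, weights, biases, size=227):
    """Return a (num_proposals, num_classes) array of linear class scores."""
    feats = np.stack([toy_features(warp_region(image, b, size)) for b in proposals])
    return feats @ weights.T + biases


# Usage: a 100x100 RGB image with one red square and two hard-coded proposals.
image = np.zeros((100, 100, 3))
image[10:30, 10:30, 0] = 255.0
proposals = [(10, 10, 29, 29), (50, 50, 79, 79)]
# Hypothetical per-class linear classifiers: row 0 = "red object", row 1 = "green object".
weights = np.array([[1.0, -1.0, -1.0], [-1.0, 1.0, -1.0]]) / 255.0
biases = np.zeros(2)
scores = score_proposals(image, proposals, weights, biases)
print(np.round(scores, 3))
```

Warping every proposal to the same size is what lets a single fixed-input CNN handle boxes of arbitrary shape; the cost is one CNN forward pass per proposal (about 2,000 per image in the paper), which is the redundancy Fast R-CNN later removed by sharing computation across proposals.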

## Place

$$f=\sum_{t=1}^{T}\left(f_{\mathbf{S}}^{t}+f_{\mathbf{L}}^{t}\right)$$

